Xinggong Zhang

Morphe: High-Fidelity Generative Video Streaming with Vision Foundation Model

Feb 03, 2026Abstract:Video streaming is a fundamental Internet service, while the quality still cannot be guaranteed especially in poor network conditions such as bandwidth-constrained and remote areas. Existing works mainly work towards two directions: traditional pixel-codec streaming nearly approaches its limit and is hard to step further in compression; the emerging neural-enhanced or generative streaming usually fall short in latency and visual fidelity, hindering their practical deployment. Inspired by the recent success of vision foundation model (VFM), we strive to harness the powerful video understanding and processing capacities of VFM to achieve generalization, high fidelity and loss resilience for real-time video streaming with even higher compression rate. We present the first revolutionized paradigm that enables VFM-based end-to-end generative video streaming towards this goal. Specifically, Morphe employs joint training of visual tokenizers and variable-resolution spatiotemporal optimization under simulated network constraints. Additionally, a robust streaming system is constructed that leverages intelligent packet dropping to resist real-world network perturbations. Extensive evaluation demonstrates that Morphe achieves comparable visual quality while saving 62.5\% bandwidth compared to H.265, and accomplishes real-time, loss-resilient video delivery in challenging network environments, representing a milestone in VFM-enabled multimedia streaming solutions.

GaussVideoDreamer: 3D Scene Generation with Video Diffusion and Inconsistency-Aware Gaussian Splatting

Apr 16, 2025

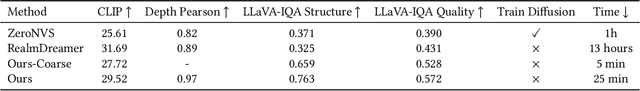

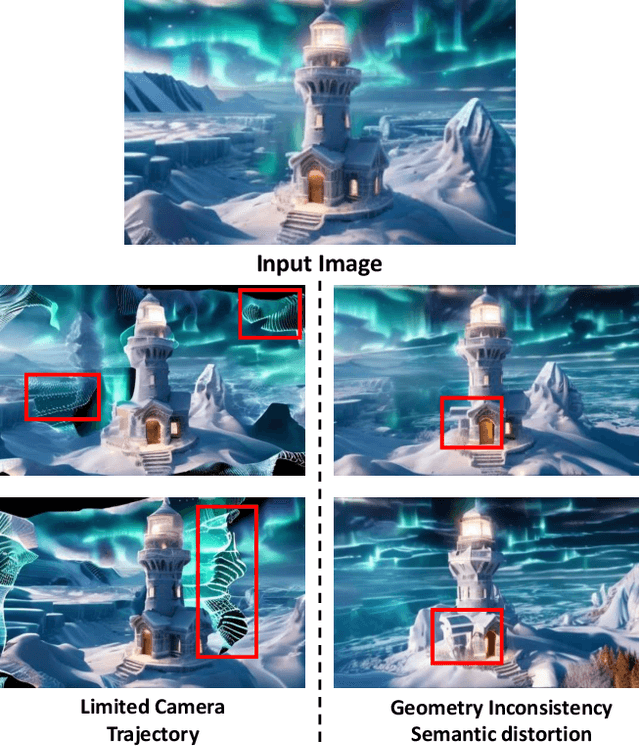

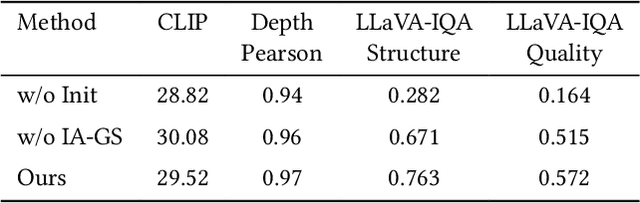

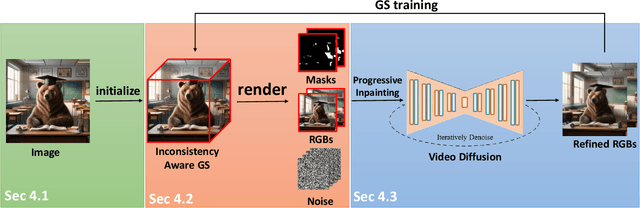

Abstract:Single-image 3D scene reconstruction presents significant challenges due to its inherently ill-posed nature and limited input constraints. Recent advances have explored two promising directions: multiview generative models that train on 3D consistent datasets but struggle with out-of-distribution generalization, and 3D scene inpainting and completion frameworks that suffer from cross-view inconsistency and suboptimal error handling, as they depend exclusively on depth data or 3D smoothness, which ultimately degrades output quality and computational performance. Building upon these approaches, we present GaussVideoDreamer, which advances generative multimedia approaches by bridging the gap between image, video, and 3D generation, integrating their strengths through two key innovations: (1) A progressive video inpainting strategy that harnesses temporal coherence for improved multiview consistency and faster convergence. (2) A 3D Gaussian Splatting consistency mask to guide the video diffusion with 3D consistent multiview evidence. Our pipeline combines three core components: a geometry-aware initialization protocol, Inconsistency-Aware Gaussian Splatting, and a progressive video inpainting strategy. Experimental results demonstrate that our approach achieves 32% higher LLaVA-IQA scores and at least 2x speedup compared to existing methods while maintaining robust performance across diverse scenes.

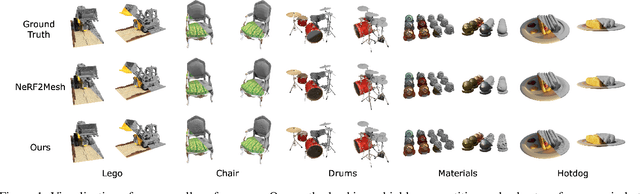

R-Meshfusion: Reinforcement Learning Powered Sparse-View Mesh Reconstruction with Diffusion Priors

Apr 16, 2025Abstract:Mesh reconstruction from multi-view images is a fundamental problem in computer vision, but its performance degrades significantly under sparse-view conditions, especially in unseen regions where no ground-truth observations are available. While recent advances in diffusion models have demonstrated strong capabilities in synthesizing novel views from limited inputs, their outputs often suffer from visual artifacts and lack 3D consistency, posing challenges for reliable mesh optimization. In this paper, we propose a novel framework that leverages diffusion models to enhance sparse-view mesh reconstruction in a principled and reliable manner. To address the instability of diffusion outputs, we propose a Consensus Diffusion Module that filters unreliable generations via interquartile range (IQR) analysis and performs variance-aware image fusion to produce robust pseudo-supervision. Building on this, we design an online reinforcement learning strategy based on the Upper Confidence Bound (UCB) to adaptively select the most informative viewpoints for enhancement, guided by diffusion loss. Finally, the fused images are used to jointly supervise a NeRF-based model alongside sparse-view ground truth, ensuring consistency across both geometry and appearance. Extensive experiments demonstrate that our method achieves significant improvements in both geometric quality and rendering quality.

MCBlock: Boosting Neural Radiance Field Training Speed by MCTS-based Dynamic-Resolution Ray Sampling

Apr 14, 2025

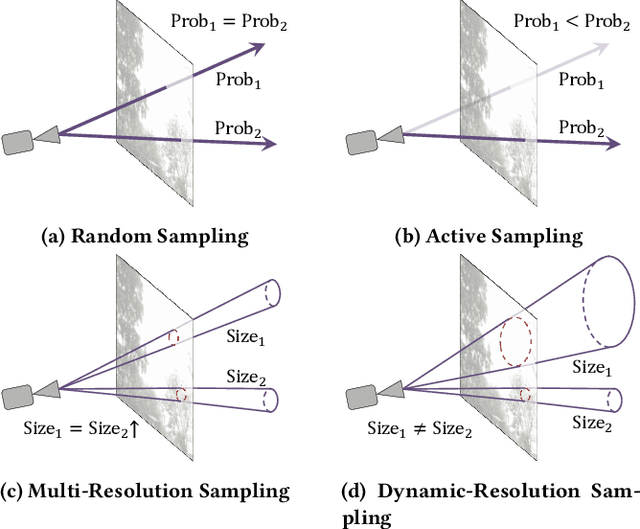

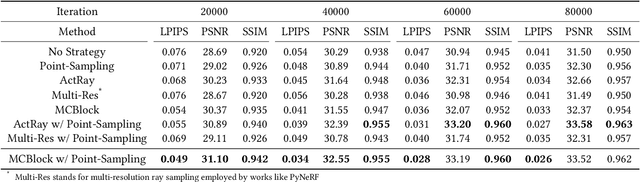

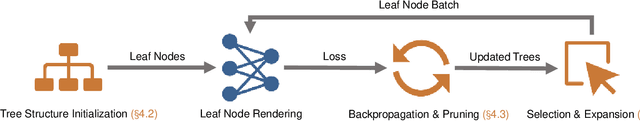

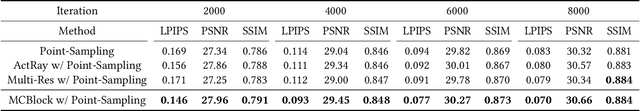

Abstract:Neural Radiance Field (NeRF) is widely known for high-fidelity novel view synthesis. However, even the state-of-the-art NeRF model, Gaussian Splatting, requires minutes for training, far from the real-time performance required by multimedia scenarios like telemedicine. One of the obstacles is its inefficient sampling, which is only partially addressed by existing works. Existing point-sampling algorithms uniformly sample simple-texture regions (easy to fit) and complex-texture regions (hard to fit), while existing ray-sampling algorithms sample these regions all in the finest granularity (i.e. the pixel level), both wasting GPU training resources. Actually, regions with different texture intensities require different sampling granularities. To this end, we propose a novel dynamic-resolution ray-sampling algorithm, MCBlock, which employs Monte Carlo Tree Search (MCTS) to partition each training image into pixel blocks with different sizes for active block-wise training. Specifically, the trees are initialized according to the texture of training images to boost the initialization speed, and an expansion/pruning module dynamically optimizes the block partition. MCBlock is implemented in Nerfstudio, an open-source toolset, and achieves a training acceleration of up to 2.33x, surpassing other ray-sampling algorithms. We believe MCBlock can apply to any cone-tracing NeRF model and contribute to the multimedia community.

PromptMobile: Efficient Promptus for Low Bandwidth Mobile Video Streaming

Mar 20, 2025

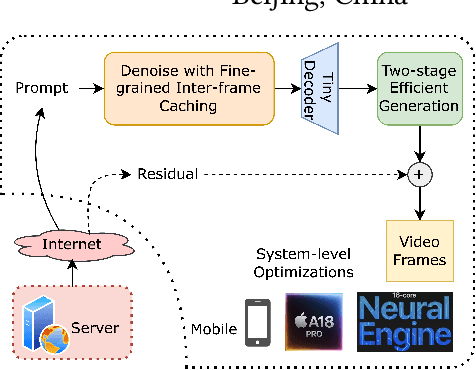

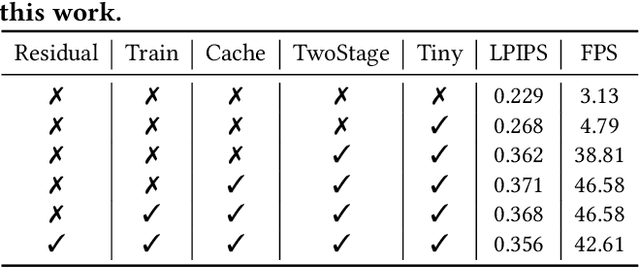

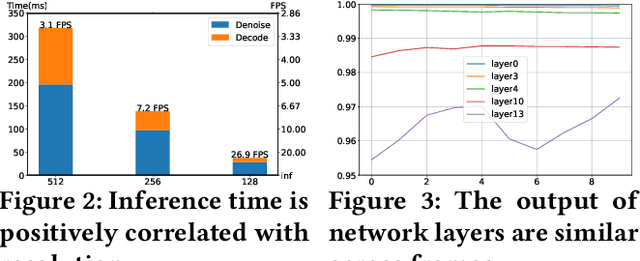

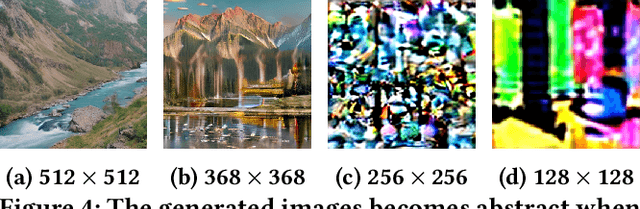

Abstract:Traditional video compression algorithms exhibit significant quality degradation at extremely low bitrates. Promptus emerges as a new paradigm for video streaming, substantially cutting down the bandwidth essential for video streaming. However, Promptus is computationally intensive and can not run in real-time on mobile devices. This paper presents PromptMobile, an efficient acceleration framework tailored for on-device Promptus. Specifically, we propose (1) a two-stage efficient generation framework to reduce computational cost by 8.1x, (2) a fine-grained inter-frame caching to reduce redundant computations by 16.6\%, (3) system-level optimizations to further enhance efficiency. The evaluations demonstrate that compared with the original Promptus, PromptMobile achieves a 13.6x increase in image generation speed. Compared with other streaming methods, PromptMobile achives an average LPIPS improvement of 0.016 (compared with H.265), reducing 60\% of severely distorted frames (compared to VQGAN).

$R^2$-Mesh: Reinforcement Learning Powered Mesh Reconstruction via Geometry and Appearance Refinement

Aug 19, 2024

Abstract:Mesh reconstruction based on Neural Radiance Fields (NeRF) is popular in a variety of applications such as computer graphics, virtual reality, and medical imaging due to its efficiency in handling complex geometric structures and facilitating real-time rendering. However, existing works often fail to capture fine geometric details accurately and struggle with optimizing rendering quality. To address these challenges, we propose a novel algorithm that progressively generates and optimizes meshes from multi-view images. Our approach initiates with the training of a NeRF model to establish an initial Signed Distance Field (SDF) and a view-dependent appearance field. Subsequently, we iteratively refine the SDF through a differentiable mesh extraction method, continuously updating both the vertex positions and their connectivity based on the loss from mesh differentiable rasterization, while also optimizing the appearance representation. To further leverage high-fidelity and detail-rich representations from NeRF, we propose an online-learning strategy based on Upper Confidence Bound (UCB) to enhance viewpoints by adaptively incorporating images rendered by the initial NeRF model into the training dataset. Through extensive experiments, we demonstrate that our method delivers highly competitive and robust performance in both mesh rendering quality and geometric quality.

Promptus: Can Prompts Streaming Replace Video Streaming with Stable Diffusion

May 30, 2024

Abstract:With the exponential growth of video traffic, traditional video streaming systems are approaching their limits in compression efficiency and communication capacity. To further reduce bitrate while maintaining quality, we propose Promptus, a disruptive novel system that streaming prompts instead of video content with Stable Diffusion, which converts video frames into a series of "prompts" for delivery. To ensure pixel alignment, a gradient descent-based prompt fitting framework is proposed. To achieve adaptive bitrate for prompts, a low-rank decomposition-based bitrate control algorithm is introduced. For inter-frame compression of prompts, a temporal smoothing-based prompt interpolation algorithm is proposed. Evaluations across various video domains and real network traces demonstrate Promptus can enhance the perceptual quality by 0.111 and 0.092 (in LPIPS) compared to VAE and H.265, respectively, and decreases the ratio of severely distorted frames by 89.3% and 91.7%. Moreover, Promptus achieves real-time video generation from prompts at over 150 FPS. To the best of our knowledge, Promptus is the first attempt to replace video codecs with prompt inversion and the first to use prompt streaming instead of video streaming. Our work opens up a new paradigm for efficient video communication beyond the Shannon limit.

DoLLM: How Large Language Models Understanding Network Flow Data to Detect Carpet Bombing DDoS

May 13, 2024

Abstract:It is an interesting question Can and How Large Language Models (LLMs) understand non-language network data, and help us detect unknown malicious flows. This paper takes Carpet Bombing as a case study and shows how to exploit LLMs' powerful capability in the networking area. Carpet Bombing is a new DDoS attack that has dramatically increased in recent years, significantly threatening network infrastructures. It targets multiple victim IPs within subnets, causing congestion on access links and disrupting network services for a vast number of users. Characterized by low-rates, multi-vectors, these attacks challenge traditional DDoS defenses. We propose DoLLM, a DDoS detection model utilizes open-source LLMs as backbone. By reorganizing non-contextual network flows into Flow-Sequences and projecting them into LLMs semantic space as token embeddings, DoLLM leverages LLMs' contextual understanding to extract flow representations in overall network context. The representations are used to improve the DDoS detection performance. We evaluate DoLLM with public datasets CIC-DDoS2019 and real NetFlow trace from Top-3 countrywide ISP. The tests have proven that DoLLM possesses strong detection capabilities. Its F1 score increased by up to 33.3% in zero-shot scenarios and by at least 20.6% in real ISP traces.

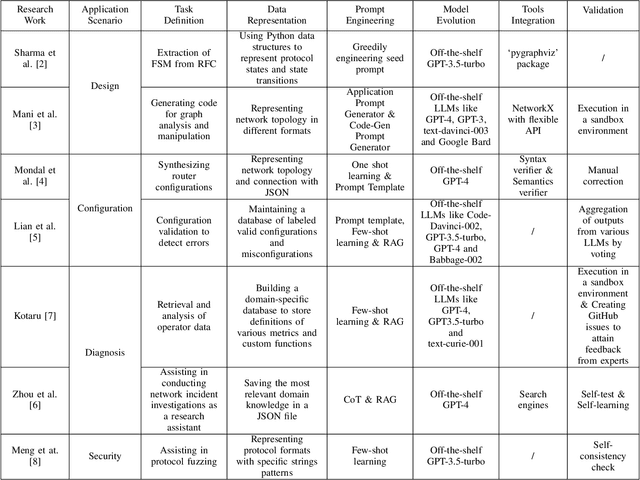

Large Language Models for Networking: Workflow, Advances and Challenges

Apr 19, 2024

Abstract:The networking field is characterized by its high complexity and rapid iteration, requiring extensive expertise to accomplish network tasks, ranging from network design, diagnosis, configuration and security. The inherent complexity of these tasks, coupled with the ever-changing landscape of networking technologies and protocols, poses significant hurdles for traditional machine learning-based methods. These methods often struggle to generalize and automate complex tasks in networking, as they require extensive labeled data, domain-specific feature engineering, and frequent retraining to adapt to new scenarios. However, the recent emergence of large language models (LLMs) has sparked a new wave of possibilities in addressing these challenges. LLMs have demonstrated remarkable capabilities in natural language understanding, generation, and reasoning. These models, trained on extensive data, can benefit the networking domain. Some efforts have already explored the application of LLMs in the networking domain and revealed promising results. By reviewing recent advances, we present an abstract workflow to describe the fundamental process involved in applying LLM for Networking. We introduce the highlights of existing works by category and explain in detail how they operate at different stages of the workflow. Furthermore, we delve into the challenges encountered, discuss potential solutions, and outline future research prospects. We hope that this survey will provide insight for researchers and practitioners, promoting the development of this interdisciplinary research field.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge