Jiangkai Wu

HybridPrompt: Bridging Generative Priors and Traditional Codecs for Mobile Streaming

Feb 19, 2026

Abstract:In Video on Demand (VoD) scenarios, traditional codecs are the industry standard due to their high decoding efficiency. However, they suffer from severe quality degradation under low bandwidth conditions. While emerging generative neural codecs offer significantly higher perceptual quality, their reliance on heavy frame-by-frame generation makes real-time playback on mobile devices impractical. We ask: is it possible to combine the blazing-fast speed of traditional standards with the superior visual fidelity of neural approaches? We present HybridPrompt, the first generative-based video system capable of achieving real-time 1080p decoding at over 150 FPS on a commercial smartphone. Specifically, we employ a hybrid architecture that encodes keyframes using a generative model while relying on traditional codecs for the remaining frames. A major challenge is that the two paradigms have conflicting objectives: the "hallucinated" details from generative models often misalign with the rigid prediction mechanisms of traditional codecs, causing bitrate inefficiency. To address this, we demonstrate that the traditional decoding process is differentiable, enabling an end-to-end optimization loop. This allows us to use subsequent frames as additional supervision, forcing the generative model to synthesize keyframes that are not only perceptually high-fidelity but also mathematically optimal references for the traditional codec. By integrating a two-stage generation strategy, our system outperforms pure neural baselines by orders of magnitude in speed while achieving an average LPIPS gain of 8% over traditional codecs at 200 kbps.

Artic: AI-oriented Real-time Communication for MLLM Video Assistant

Feb 13, 2026

Abstract:AI Video Assistant emerges as a new paradigm for Real-time Communication (RTC), where one peer is a Multimodal Large Language Model (MLLM) deployed in the cloud. This makes interaction between humans and AI more intuitive, akin to chatting with a real person. However, a fundamental mismatch exists between current RTC frameworks and AI Video Assistants, stemming from the drastic shift in Quality of Experience (QoE) and more challenging networks. Measurements on our production prototype also confirm that current RTC fails, causing latency spikes and accuracy drops. To address these challenges, we propose Artic, an AI-oriented RTC framework for MLLM Video Assistants, exploring the shift from "humans watching video" to "AI understanding video." Specifically, Artic proposes: (1) Response Capability-aware Adaptive Bitrate, which utilizes MLLM accuracy saturation to proactively cap bitrate, reserving bandwidth headroom to absorb future fluctuations for latency reduction; (2) Zero-overhead Context-aware Streaming, which allocates limited bitrate to regions most important for the response, maintaining accuracy even under ultra-low bitrates; and (3) Degraded Video Understanding Benchmark, the first benchmark evaluating how RTC-induced video degradation affects MLLM accuracy. Prototype experiments using real-world uplink traces show that, compared with existing methods, Artic significantly improves accuracy by 15.12% and reduces latency by 135.31 ms. We will release the benchmark and code at https://github.com/pku-netvideo/DeViBench.
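
The bitrate-capping idea in (1) can be illustrated with a minimal sketch. This is not Artic's implementation: the accuracy-versus-bitrate curve, the tolerance, and the function names below are hypothetical, chosen only to show how capping at an accuracy saturation point leaves bandwidth headroom.

```python
def saturation_bitrate(curve, tolerance=0.02):
    """Return the lowest bitrate whose accuracy is within `tolerance` of the best.

    `curve` is a list of (bitrate_kbps, accuracy) pairs (hypothetical measurements).
    """
    best = max(acc for _, acc in curve)
    for bitrate, acc in sorted(curve):
        if acc >= best - tolerance:
            return bitrate


def choose_bitrate(estimated_bandwidth_kbps, curve, tolerance=0.02):
    """Follow the bandwidth estimate, but never exceed the saturation point."""
    cap = saturation_bitrate(curve, tolerance)
    return min(estimated_bandwidth_kbps, cap)


# Assumed accuracy-vs-bitrate measurements, for illustration only.
CURVE = [(100, 0.52), (200, 0.71), (400, 0.83), (800, 0.84), (1600, 0.84)]

plenty = choose_bitrate(1000, CURVE)  # bandwidth is ample, so the cap applies
scarce = choose_bitrate(250, CURVE)   # bandwidth is the bottleneck
```

With ample bandwidth the sender stays at the cap rather than filling the link, so a later bandwidth drop is less likely to build up queueing delay.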

Smaller is Better: Generative Models Can Power Short Video Preloading

Feb 10, 2026

Abstract:Preloading is widely used in short video platforms to minimize playback stalls by downloading future content in advance. However, existing strategies face a tradeoff. Aggressive preloading reduces stalls but wastes bandwidth, while conservative strategies save data but increase the risk of playback stalls. This paper presents PromptPream, a computation-powered preloading paradigm that breaks this tradeoff by using local computation to reduce bandwidth demand. Instead of transmitting pixel-level video chunks, PromptPream sends compact semantic prompts that are decoded into high-quality frames using generative models such as Stable Diffusion. We propose three core techniques to enable this paradigm: (1) a gradient-based prompt inversion method that compresses frames into small sets of compact token embeddings; (2) a computation-aware scheduling strategy that jointly optimizes network and compute resource usage; and (3) a scalable searching algorithm that addresses the enlarged scheduling space introduced by the scheduler. Evaluations show that PromptPream reduces both stalls and bandwidth waste by over 31%, and improves Quality of Experience (QoE) by 45%, compared to traditional strategies.

* 6 pages, 7 figures, to appear in ICC 2026

Morphe: High-Fidelity Generative Video Streaming with Vision Foundation Model

Feb 03, 2026

Abstract:Video streaming is a fundamental Internet service, yet its quality still cannot be guaranteed, especially under poor network conditions such as those in bandwidth-constrained and remote areas. Existing work follows two main directions: traditional pixel-codec streaming is nearing its limit and is hard to push further in compression, while emerging neural-enhanced or generative streaming usually falls short in latency and visual fidelity, hindering practical deployment. Inspired by the recent success of vision foundation models (VFMs), we strive to harness the powerful video understanding and processing capacities of VFMs to achieve generalization, high fidelity and loss resilience for real-time video streaming at an even higher compression rate. We present the first paradigm that enables VFM-based end-to-end generative video streaming towards this goal. Specifically, Morphe employs joint training of visual tokenizers and variable-resolution spatiotemporal optimization under simulated network constraints. Additionally, a robust streaming system is constructed that leverages intelligent packet dropping to resist real-world network perturbations. Extensive evaluation demonstrates that Morphe achieves comparable visual quality while saving 62.5% bandwidth compared to H.265, and accomplishes real-time, loss-resilient video delivery in challenging network environments, representing a milestone in VFM-enabled multimedia streaming solutions.

R-Meshfusion: Reinforcement Learning Powered Sparse-View Mesh Reconstruction with Diffusion Priors

Apr 16, 2025

Abstract:Mesh reconstruction from multi-view images is a fundamental problem in computer vision, but its performance degrades significantly under sparse-view conditions, especially in unseen regions where no ground-truth observations are available. While recent advances in diffusion models have demonstrated strong capabilities in synthesizing novel views from limited inputs, their outputs often suffer from visual artifacts and lack 3D consistency, posing challenges for reliable mesh optimization. In this paper, we propose a novel framework that leverages diffusion models to enhance sparse-view mesh reconstruction in a principled and reliable manner. To address the instability of diffusion outputs, we propose a Consensus Diffusion Module that filters unreliable generations via interquartile range (IQR) analysis and performs variance-aware image fusion to produce robust pseudo-supervision. Building on this, we design an online reinforcement learning strategy based on the Upper Confidence Bound (UCB) to adaptively select the most informative viewpoints for enhancement, guided by diffusion loss. Finally, the fused images are used to jointly supervise a NeRF-based model alongside sparse-view ground truth, ensuring consistency across both geometry and appearance. Extensive experiments demonstrate that our method achieves significant improvements in both geometric quality and rendering quality.
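
As a rough illustration of IQR filtering followed by variance-aware fusion, the sketch below works on toy data. It is not the paper's Consensus Diffusion Module: the per-candidate scores, the flat-list image representation, and the `1 / (1 + variance)` confidence weight are all assumptions made for the example.

```python
import statistics


def iqr_keep(scores, k=1.5):
    """Return indices of scores inside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(scores, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [i for i, s in enumerate(scores) if low <= s <= high]


def fuse(images):
    """Fuse equally sized images (flat lists of pixel values).

    Returns the per-pixel mean and a confidence weight that shrinks as the
    per-pixel variance across candidates grows (an assumed weighting).
    """
    fused, weights = [], []
    for pixel_values in zip(*images):
        mean = statistics.fmean(pixel_values)
        var = statistics.pvariance(pixel_values, mu=mean)
        fused.append(mean)
        weights.append(1.0 / (1.0 + var))
    return fused, weights


# Hypothetical candidates: one score per generation (lower is better),
# and a two-pixel "image" per generation. The last one is an outlier.
scores = [0.10, 0.12, 0.11, 0.13, 0.90]
images = [[0.5, 0.2], [0.5, 0.4], [0.5, 0.2], [0.5, 0.4], [9.0, 9.0]]

kept = iqr_keep(scores)
fused, weights = fuse([images[i] for i in kept])
```

Pixels on which the surviving candidates agree receive a weight of 1, while pixels on which they disagree are down-weighted when used as pseudo-supervision.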

MCBlock: Boosting Neural Radiance Field Training Speed by MCTS-based Dynamic-Resolution Ray Sampling

Apr 14, 2025

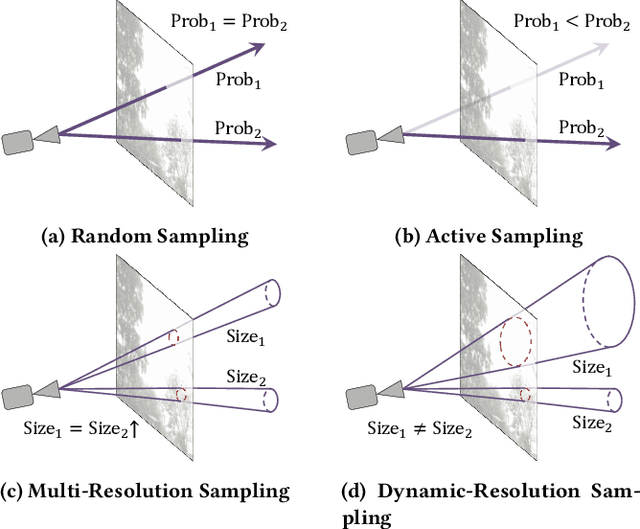

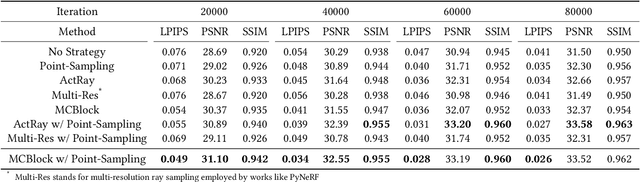

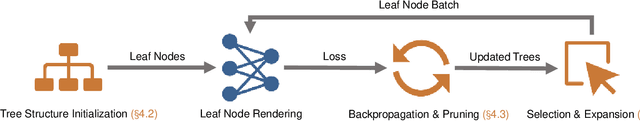

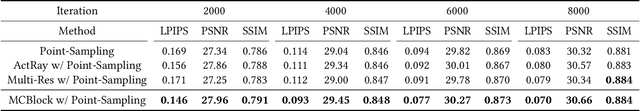

Abstract:Neural Radiance Field (NeRF) is widely known for high-fidelity novel view synthesis. However, even the state-of-the-art NeRF model, Gaussian Splatting, requires minutes for training, far from the real-time performance required by multimedia scenarios like telemedicine. One of the obstacles is its inefficient sampling, which is only partially addressed by existing works. Existing point-sampling algorithms uniformly sample simple-texture regions (easy to fit) and complex-texture regions (hard to fit), while existing ray-sampling algorithms sample these regions all in the finest granularity (i.e. the pixel level), both wasting GPU training resources. Actually, regions with different texture intensities require different sampling granularities. To this end, we propose a novel dynamic-resolution ray-sampling algorithm, MCBlock, which employs Monte Carlo Tree Search (MCTS) to partition each training image into pixel blocks with different sizes for active block-wise training. Specifically, the trees are initialized according to the texture of training images to boost the initialization speed, and an expansion/pruning module dynamically optimizes the block partition. MCBlock is implemented in Nerfstudio, an open-source toolset, and achieves a training acceleration of up to 2.33x, surpassing other ray-sampling algorithms. We believe MCBlock can apply to any cone-tracing NeRF model and contribute to the multimedia community.

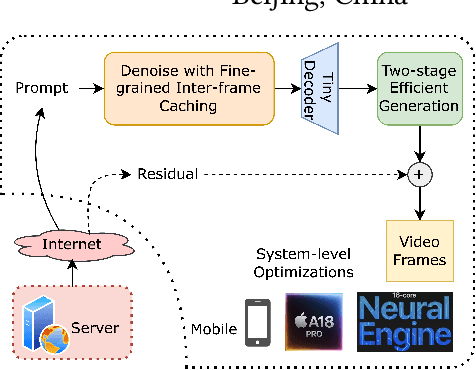

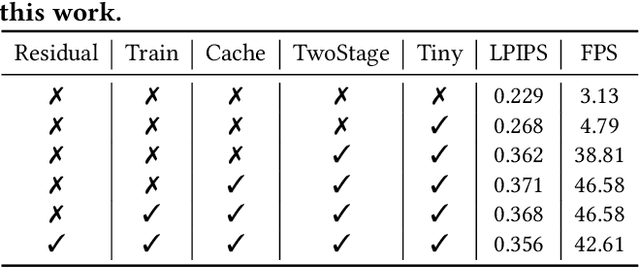

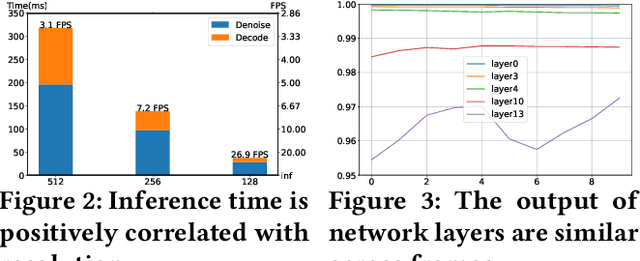

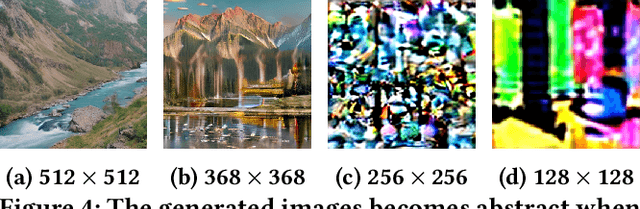

PromptMobile: Efficient Promptus for Low Bandwidth Mobile Video Streaming

Mar 20, 2025

Abstract:Traditional video compression algorithms exhibit significant quality degradation at extremely low bitrates. Promptus emerges as a new paradigm for video streaming, substantially cutting down the bandwidth essential for video streaming. However, Promptus is computationally intensive and cannot run in real-time on mobile devices. This paper presents PromptMobile, an efficient acceleration framework tailored for on-device Promptus. Specifically, we propose (1) a two-stage efficient generation framework to reduce computational cost by 8.1x, (2) a fine-grained inter-frame caching to reduce redundant computations by 16.6%, (3) system-level optimizations to further enhance efficiency. The evaluations demonstrate that compared with the original Promptus, PromptMobile achieves a 13.6x increase in image generation speed. Compared with other streaming methods, PromptMobile achieves an average LPIPS improvement of 0.016 (compared with H.265), reducing 60% of severely distorted frames (compared to VQGAN).

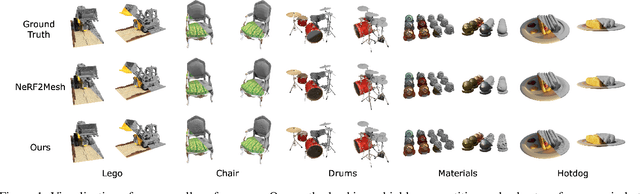

$R^2$-Mesh: Reinforcement Learning Powered Mesh Reconstruction via Geometry and Appearance Refinement

Aug 19, 2024

Abstract:Mesh reconstruction based on Neural Radiance Fields (NeRF) is popular in a variety of applications such as computer graphics, virtual reality, and medical imaging due to its efficiency in handling complex geometric structures and facilitating real-time rendering. However, existing works often fail to capture fine geometric details accurately and struggle with optimizing rendering quality. To address these challenges, we propose a novel algorithm that progressively generates and optimizes meshes from multi-view images. Our approach initiates with the training of a NeRF model to establish an initial Signed Distance Field (SDF) and a view-dependent appearance field. Subsequently, we iteratively refine the SDF through a differentiable mesh extraction method, continuously updating both the vertex positions and their connectivity based on the loss from mesh differentiable rasterization, while also optimizing the appearance representation. To further leverage high-fidelity and detail-rich representations from NeRF, we propose an online-learning strategy based on Upper Confidence Bound (UCB) to enhance viewpoints by adaptively incorporating images rendered by the initial NeRF model into the training dataset. Through extensive experiments, we demonstrate that our method delivers highly competitive and robust performance in both mesh rendering quality and geometric quality.
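
The UCB-based viewpoint selection can be sketched with the textbook UCB1 rule. This is an illustration only, not the authors' strategy: the reward values, the exploration constant `c`, and the class name are hypothetical.

```python
import math


class UCBViewSelector:
    """Textbook UCB1 over a fixed set of candidate viewpoints."""

    def __init__(self, num_views, c=1.0):
        self.counts = [0] * num_views   # times each view was selected
        self.means = [0.0] * num_views  # running mean reward per view
        self.c = c                      # exploration strength (assumed)

    def select(self):
        # Try every view once before applying the confidence bound.
        for view, n in enumerate(self.counts):
            if n == 0:
                return view
        total = sum(self.counts)
        scores = [
            mean + self.c * math.sqrt(2.0 * math.log(total) / n)
            for mean, n in zip(self.means, self.counts)
        ]
        return max(range(len(scores)), key=scores.__getitem__)

    def update(self, view, reward):
        # Incremental update of the running mean reward.
        self.counts[view] += 1
        self.means[view] += (reward - self.means[view]) / self.counts[view]


# Hypothetical per-view rewards, e.g. how much a view improved the loss.
REWARDS = [0.1, 0.9, 0.2]

selector = UCBViewSelector(num_views=3)
first_picks = []
for step in range(50):
    view = selector.select()
    if step < 3:
        first_picks.append(view)
    selector.update(view, REWARDS[view])
```

In this toy run the selector samples each view once and then concentrates on the view with the highest reward, while still revisiting the others occasionally.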

Promptus: Can Prompts Streaming Replace Video Streaming with Stable Diffusion

May 30, 2024

Abstract:With the exponential growth of video traffic, traditional video streaming systems are approaching their limits in compression efficiency and communication capacity. To further reduce bitrate while maintaining quality, we propose Promptus, a disruptive novel system that streams prompts instead of video content using Stable Diffusion, converting video frames into a series of "prompts" for delivery. To ensure pixel alignment, a gradient descent-based prompt fitting framework is proposed. To achieve adaptive bitrate for prompts, a low-rank decomposition-based bitrate control algorithm is introduced. For inter-frame compression of prompts, a temporal smoothing-based prompt interpolation algorithm is proposed. Evaluations across various video domains and real network traces demonstrate that Promptus can enhance the perceptual quality by 0.111 and 0.092 (in LPIPS) compared to VAE and H.265, respectively, and decrease the ratio of severely distorted frames by 89.3% and 91.7%. Moreover, Promptus achieves real-time video generation from prompts at over 150 FPS. To the best of our knowledge, Promptus is the first attempt to replace video codecs with prompt inversion and the first to use prompt streaming instead of video streaming. Our work opens up a new paradigm for efficient video communication beyond the Shannon limit.

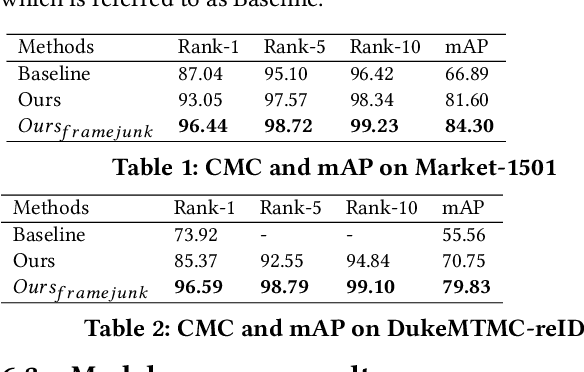

Identity-Aware Attribute Recognition via Real-Time Distributed Inference in Mobile Edge Clouds

Aug 12, 2020

Abstract:With the development of deep learning technologies, attribute recognition and person re-identification (re-ID) have attracted extensive attention and achieved continuous improvement via executing computing-intensive deep neural networks in cloud datacenters. However, the datacenter deployment cannot meet the real-time requirement of attribute recognition and person re-ID, due to the prohibitive delay of backhaul networks and large data transmissions from cameras to datacenters. A feasible solution thus is to employ mobile edge clouds (MEC) within the proximity of cameras and enable distributed inference. In this paper, we design novel models for pedestrian attribute recognition with re-ID in an MEC-enabled camera monitoring system. We also investigate the problem of distributed inference in the MEC-enabled camera network. To this end, we first propose a novel inference framework with a set of distributed modules, by jointly considering the attribute recognition and person re-ID. We then devise a learning-based algorithm for the distributions of the modules of the proposed distributed inference framework, considering the dynamic MEC-enabled camera network with uncertainties. We finally evaluate the performance of the proposed algorithm by both simulations with real datasets and system implementation in a real testbed. Evaluation results show that the performance of the proposed algorithm with distributed inference framework is promising, by reaching the accuracies of attribute recognition and person identification up to 92.9% and 96.6% respectively, and significantly reducing the inference delay by at least 40.6% compared with existing methods.
