Xinggang Hu

Dy3DGS-SLAM: Monocular 3D Gaussian Splatting SLAM for Dynamic Environments

Jun 06, 2025

Abstract: Current Simultaneous Localization and Mapping (SLAM) methods based on Neural Radiance Fields (NeRF) or 3D Gaussian Splatting excel in reconstructing static 3D scenes but struggle with tracking and reconstruction in dynamic environments, such as real-world scenes with moving elements. Existing NeRF-based SLAM approaches addressing dynamic challenges typically rely on RGB-D inputs, with few methods accommodating pure RGB input. To overcome these limitations, we propose Dy3DGS-SLAM, the first 3D Gaussian Splatting (3DGS) SLAM method for dynamic scenes using monocular RGB input. To address dynamic interference, we fuse optical flow masks and depth masks through a probabilistic model to obtain a fused dynamic mask. With only a single network iteration, this can constrain tracking scales and refine rendered geometry. Based on the fused dynamic mask, we designed a novel motion loss to constrain the pose estimation network for tracking. In mapping, we use the rendering loss of dynamic pixels, color, and depth to eliminate transient interference and occlusion caused by dynamic objects. Experimental results demonstrate that Dy3DGS-SLAM achieves state-of-the-art tracking and rendering in dynamic environments, outperforming or matching existing RGB-D methods.
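To make the idea of fusing two mask cues concrete, here is a minimal sketch in plain Python. It is not the probabilistic model from the paper, whose details the abstract does not give. It assumes each cue (optical flow, depth) provides a per-pixel probability that the pixel is dynamic, and that the two cues are conditionally independent, so their odds can be multiplied. The function names, the 0.5 prior, and the 0.5 threshold are all hypothetical.

```python
def clamp(p, eps=1e-6):
    """Keep a probability away from exactly 0 or 1 so the odds stay finite."""
    return min(max(p, eps), 1.0 - eps)


def fuse_dynamic_probability(p_flow, p_depth, prior=0.5):
    """Fuse two per-pixel 'dynamic' probabilities under a naive independence assumption.

    Posterior odds = odds(flow) * odds(depth) / odds(prior).
    This is an illustrative rule, not the model used by Dy3DGS-SLAM.
    """
    def odds(p):
        return p / (1.0 - p)

    p_flow, p_depth, prior = clamp(p_flow), clamp(p_depth), clamp(prior)
    fused_odds = odds(p_flow) * odds(p_depth) / odds(prior)
    return fused_odds / (1.0 + fused_odds)


def fuse_masks(flow_probs, depth_probs, threshold=0.5):
    """Return a boolean mask (list of rows) marking pixels judged dynamic."""
    return [
        [fuse_dynamic_probability(pf, pd) > threshold for pf, pd in zip(row_f, row_d)]
        for row_f, row_d in zip(flow_probs, depth_probs)
    ]
```

With this rule, two cues that agree reinforce each other, while two cues that disagree equally cancel out and return the prior.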

PAS-SLAM: A Visual SLAM System for Planar Ambiguous Scenes

Feb 09, 2024

Abstract: Visual SLAM (Simultaneous Localization and Mapping) based on planar features has found widespread applications in fields such as environmental structure perception and augmented reality. However, current research faces challenges in accurately localizing and mapping in planar ambiguous scenes, primarily due to the poor accuracy of the employed planar features and data association methods. In this paper, we propose a visual SLAM system based on planar features designed for planar ambiguous scenes, encompassing planar processing, data association, and multi-constraint factor graph optimization. We introduce a planar processing strategy that integrates semantic information with planar features, extracting the edges and vertices of planes for use in tasks such as plane selection, data association, and pose optimization. Next, we present an integrated data association strategy that combines plane parameters, semantic information, projection IoU (Intersection over Union), and non-parametric tests, achieving accurate and robust plane data association in planar ambiguous scenes. Finally, we design a set of multi-constraint factor graphs for camera pose optimization. Qualitative and quantitative experiments conducted on publicly available datasets demonstrate that our proposed system is competitive with state-of-the-art methods in the accuracy and robustness of both map construction and camera localization.
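The projection IoU cue mentioned in the abstract can be illustrated with a small sketch. It assumes, as a simplification, that each projected plane is summarized by an axis-aligned bounding box `(x_min, y_min, x_max, y_max)` in image coordinates; the abstract does not say how the paper represents or intersects the projected regions, and `box_iou` is a hypothetical helper name.

```python
def box_iou(a, b):
    """Intersection over Union of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    ax0, ay0, ax1, ay1 = a
    bx0, by0, bx1, by1 = b

    # Width and height of the overlap region, clamped at zero when the boxes are disjoint.
    inter_w = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    inter_h = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = inter_w * inter_h

    area_a = (ax1 - ax0) * (ay1 - ay0)
    area_b = (bx1 - bx0) * (by1 - by0)
    union = area_a + area_b - inter

    return inter / union if union > 0 else 0.0
```

A high IoU between a newly observed plane and the projection of a map plane is evidence that they are the same plane. In an integrated scheme like the one the abstract describes, it would be one cue alongside plane parameters and semantic labels rather than a decision on its own.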

UniQuadric: A SLAM Backend for Unknown Rigid Object 3D Tracking and Light-Weight Modeling

Oct 02, 2023

Abstract: Tracking and modeling unknown rigid objects in the environment play a crucial role in autonomous unmanned systems and virtual-real interactive applications. However, many existing Simultaneous Localization, Mapping and Moving Object Tracking (SLAMMOT) methods focus solely on estimating specific object poses, do not estimate object scales, and cannot effectively track unknown objects. In this paper, we propose a novel SLAM backend that unifies ego-motion tracking, rigid object motion tracking, and modeling within a joint optimization framework. In the perception part, we design a pixel-level asynchronous object tracker (AOT) based on the Segment Anything Model (SAM) and DeAOT, enabling the tracker to effectively track target unknown objects guided by various predefined tasks and prompts. In the modeling part, we present a novel object-centric quadric parameterization to unify both static and dynamic object initialization and optimization. Subsequently, for object state estimation, we propose a tightly coupled optimization model for object pose and scale estimation, incorporating hybrid constraints into a novel dual sliding window optimization framework for joint estimation. To our knowledge, we are the first to tightly couple object pose tracking with light-weight modeling of dynamic and static objects using quadrics. We conduct qualitative and quantitative experiments on simulation datasets and real-world datasets, demonstrating state-of-the-art robustness and accuracy in motion estimation and modeling. Our system showcases the potential application of object perception in complex dynamic scenes.

Multi-level Map Construction for Dynamic Scenes

Aug 08, 2023

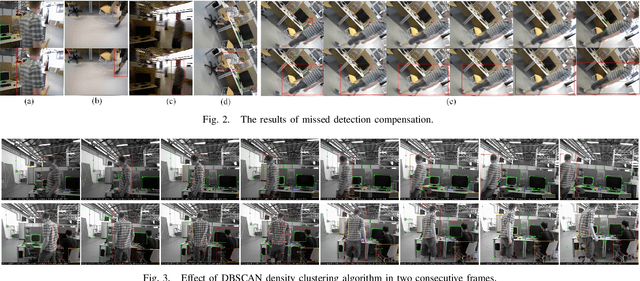

Abstract: In dynamic scenes, both localization and mapping in visual SLAM face significant challenges. In recent years, numerous research works have proposed effective solutions for the localization problem. However, few works focus on constructing long-term consistent maps in dynamic scenes, which severely hampers map applications. To address this issue, we have designed a multi-level map construction system tailored for dynamic scenes. In this system, we employ multi-object tracking algorithms, the DBSCAN clustering algorithm, and depth information to rectify the results of object detection, accurately extract static point clouds, and construct dense point cloud maps and octree maps. We propose a plane map construction algorithm specialized for dynamic scenes, involving the extraction, filtering, data association, and fusion optimization of planes in dynamic environments, thus creating a plane map. Additionally, we introduce an object map construction algorithm targeted at dynamic scenes, which includes object parameterization, data association, and update optimization. Extensive experiments on public datasets and real-world scenarios validate the accuracy of the multi-level maps constructed in this study and the robustness of the proposed algorithms. Furthermore, we demonstrate the practical application prospects of our algorithms by utilizing the constructed object maps for dynamic object tracking.

DYP-SLAM: A Real-time Visual SLAM Based on YOLO and Probability in Dynamic Environments

Feb 04, 2022

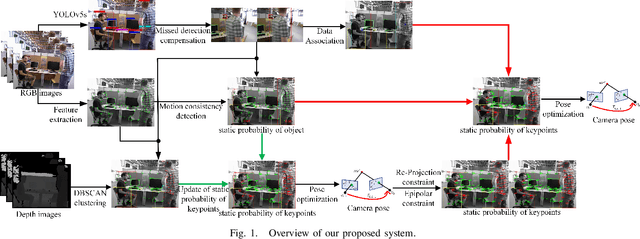

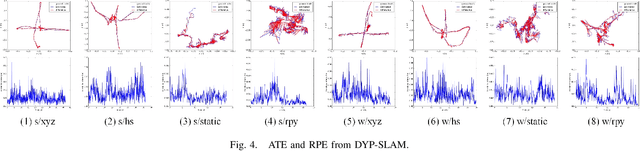

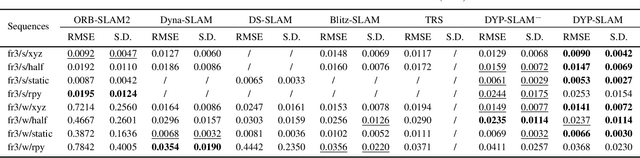

Abstract: SLAM algorithms generally assume a static environment. Dynamic elements violate this assumption, corrupt the matched points, and in turn degrade the accuracy of subsequent camera pose estimation. Recent related works generally combine semantic constraints and geometric constraints to deal with dynamic objects, but they suffer from problems such as poor real-time performance, a tendency to treat people as rigid bodies, and poor performance in low-dynamic scenes. In this paper, we propose DYP-SLAM, a visual SLAM algorithm for dynamic scenes based on target detection and static probability. The algorithm combines semantic constraints and geometric constraints to calculate the static probability of objects, keypoints, and map points, and uses these probabilities as weights in camera pose estimation. The proposed algorithm is evaluated on a public dataset and compared with a variety of advanced algorithms. It achieves the best results in almost all low-dynamic and high-dynamic scenarios while showing strong real-time performance.
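The idea of using static probabilities as weights can be shown with a deliberately tiny example. This is not DYP-SLAM's pose estimator. It assumes a 1D toy problem in which each matched point votes for a camera translation, and each vote is weighted by that point's static probability. The function name and the numbers are hypothetical.

```python
def weighted_translation(displacements, static_probs):
    """Weighted least-squares estimate of a 1D translation.

    Minimizes sum_i w_i * (d_i - t)^2, whose closed-form solution is the
    weighted mean of the displacements d_i with weights w_i.
    """
    total_weight = sum(static_probs)
    if total_weight == 0:
        raise ValueError("all points have zero static probability")
    return sum(w * d for w, d in zip(static_probs, displacements)) / total_weight


# Three static points agree on a shift of 1.0; one point on a moving object reports 5.0.
displacements = [1.0, 1.0, 1.0, 5.0]
static_probs = [0.9, 0.9, 0.9, 0.05]

estimate = weighted_translation(displacements, static_probs)
plain_mean = sum(displacements) / len(displacements)
```

Here the unweighted mean is pulled to 2.0 by the moving point, while the weighted estimate stays close to 1.0. Soft weighting of this kind avoids a hard keep-or-discard decision for points whose status is uncertain.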

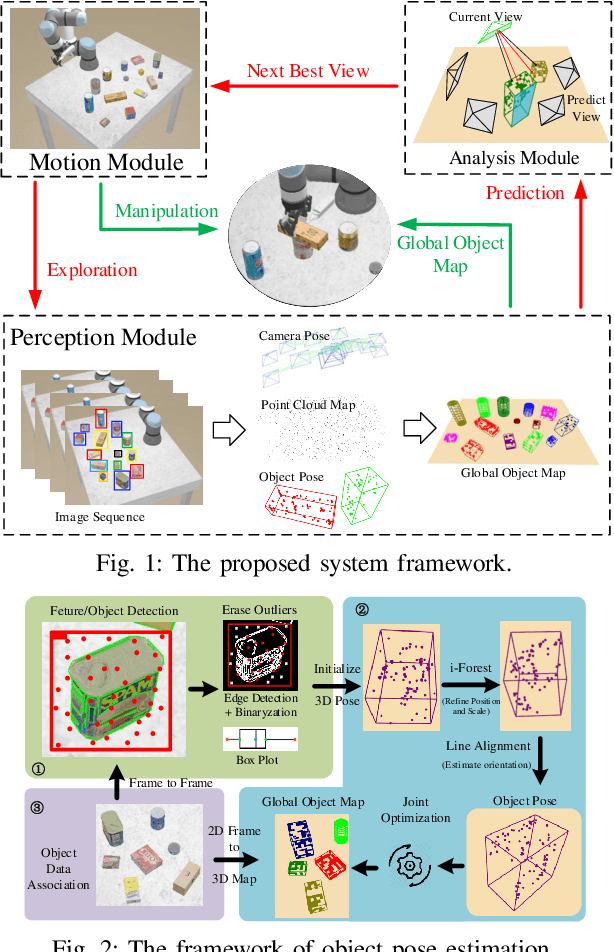

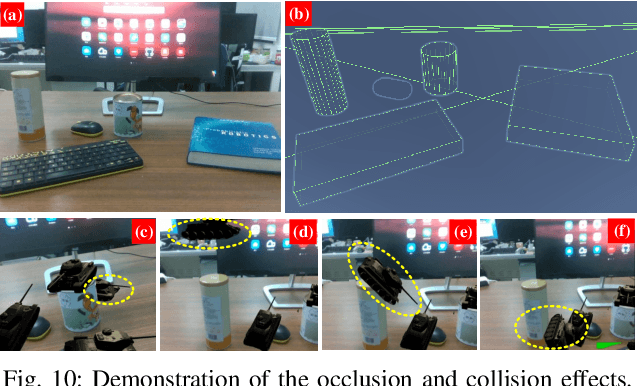

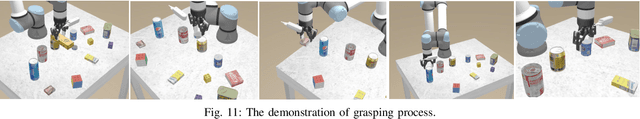

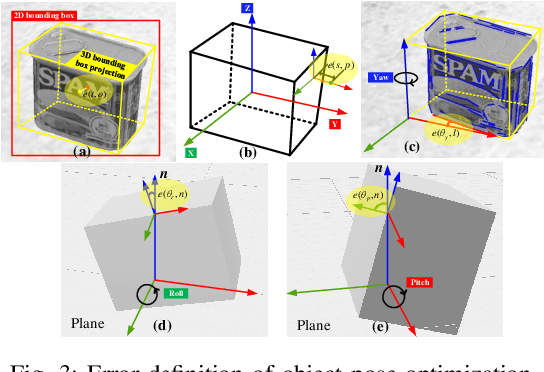

Object-Driven Active Mapping for More Accurate Object Pose Estimation and Robotic Grasping

Dec 03, 2020

Abstract: This paper presents the first active object mapping framework for complex robotic grasping tasks. The framework is built on an object SLAM system integrated with a simultaneous multi-object pose estimation process. Aiming to reduce the observation uncertainty on target objects and increase their pose estimation accuracy, we also design an object-driven exploration strategy to guide the object mapping process. By combining the mapping module and the exploration strategy, an accurate object map that is compatible with robotic grasping can be generated. Quantitative evaluations also show that the proposed framework has a very high mapping accuracy. Manipulation experiments, including object grasping, object placement, and augmented reality, demonstrate the effectiveness and advantages of our proposed framework.
