Zhiqiang Deng

An Object SLAM Framework for Association, Mapping, and High-Level Tasks

May 12, 2023

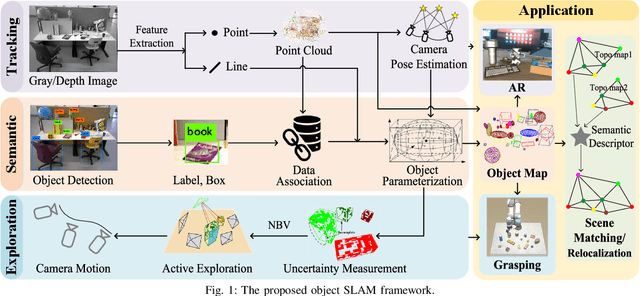

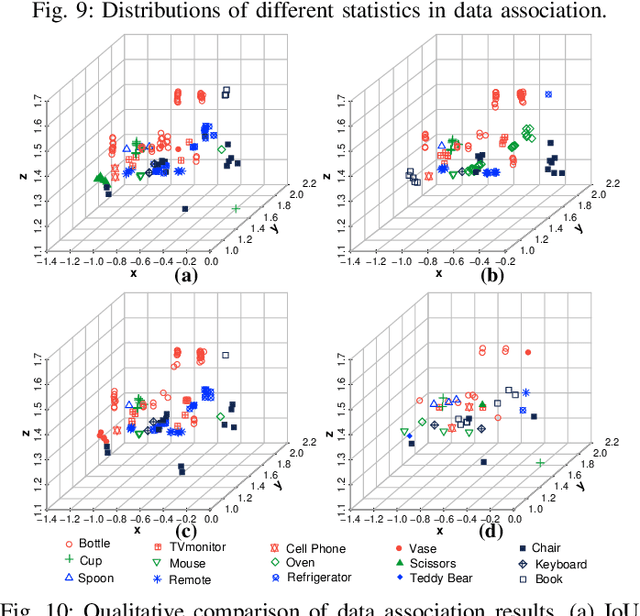

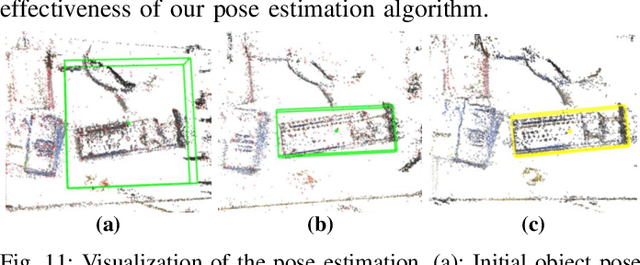

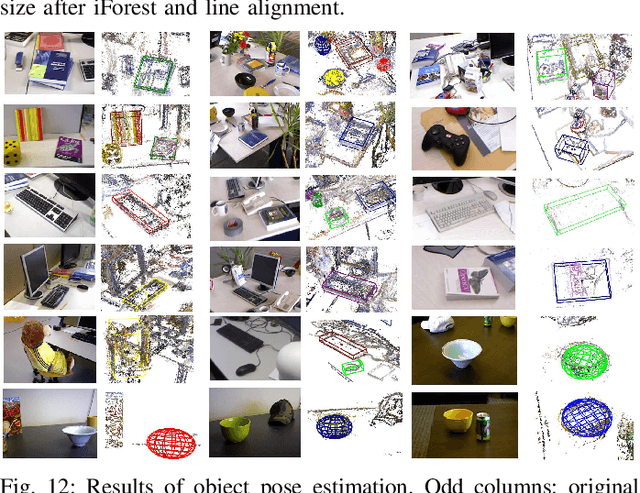

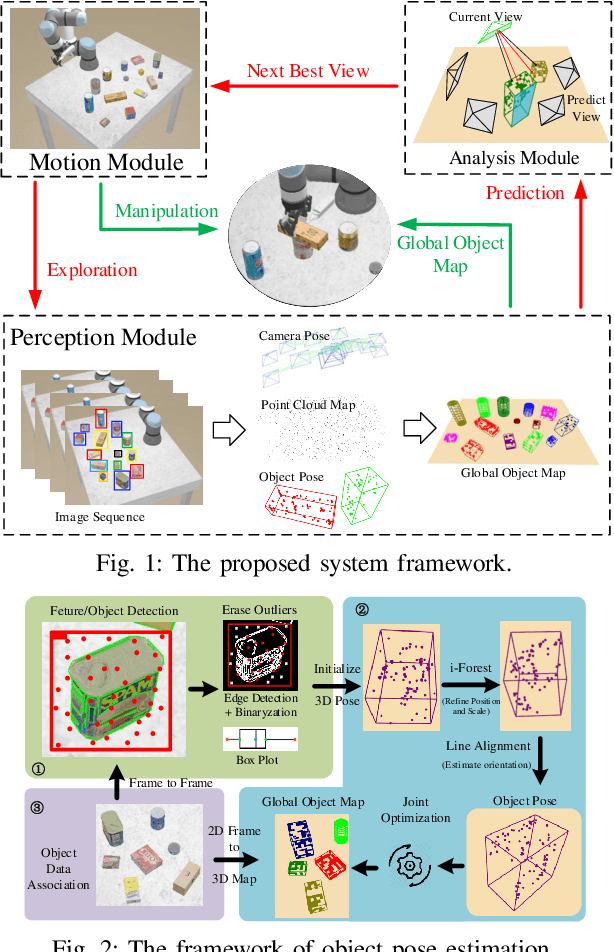

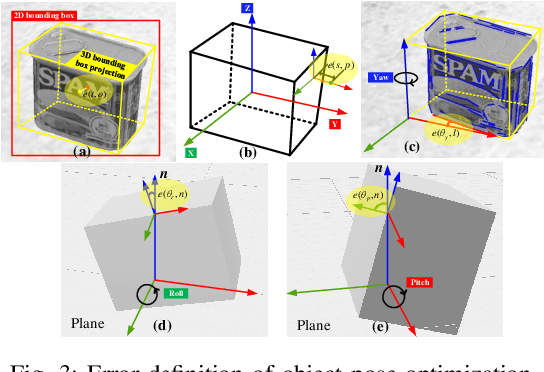

Abstract:Object SLAM is considered increasingly significant for robot high-level perception and decision-making. Existing studies fall short in terms of data association, object representation, and semantic mapping and frequently rely on additional assumptions, limiting their performance. In this paper, we present a comprehensive object SLAM framework that focuses on object-based perception and object-oriented robot tasks. First, we propose an ensemble data association approach for associating objects in complicated conditions by incorporating parametric and nonparametric statistic testing. In addition, we suggest an outlier-robust centroid and scale estimation algorithm for modeling objects based on the iForest and line alignment. Then a lightweight and object-oriented map is represented by estimated general object models. Taking into consideration the semantic invariance of objects, we convert the object map to a topological map to provide semantic descriptors to enable multi-map matching. Finally, we suggest an object-driven active exploration strategy to achieve autonomous mapping in the grasping scenario. A range of public datasets and real-world results in mapping, augmented reality, scene matching, relocalization, and robotic manipulation have been used to evaluate the proposed object SLAM framework for its efficient performance.

DYP-SLAM: A Real-time Visual SLAM Based on YOLO and Probability in Dynamic Environments

Feb 04, 2022

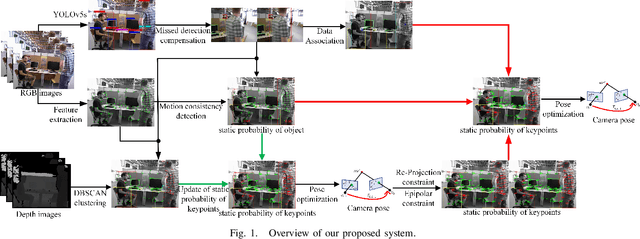

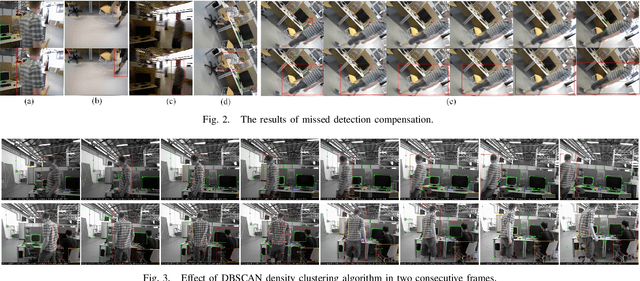

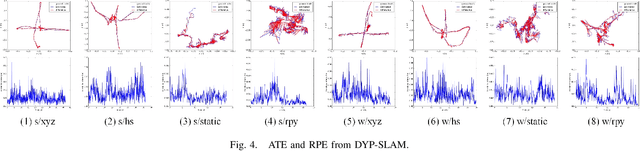

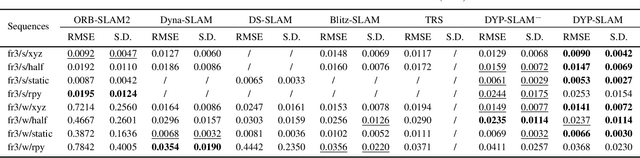

Abstract:SLAM algorithm is based on the static assumption of environment. Therefore, the dynamic factors in the environment will have a great impact on the matching points due to violating this assumption, and then directly affect the accuracy of subsequent camera pose estimation. Recently, some related works generally use the combination of semantic constraints and geometric constraints to deal with dynamic objects, but there are some problems, such as poor real-time performance, easy to treat people as rigid bodies, and poor performance in low dynamic scenes. In this paper, a dynamic scene oriented visual SLAM algorithm based on target detection and static probability named DYP-SLAM is proposed. The algorithm combines semantic constraints and geometric constraints to calculate the static probability of objects, keypoints and map points, and takes them as weights to participate in camera pose estimation. The proposed algorithm is evaluated on the public dataset and compared with a variety of advanced algorithms. It has achieved the best results in almost all low dynamics and high dynamic scenarios, and showing quite high real-time.

Fractal Pyramid Networks

Jun 28, 2021

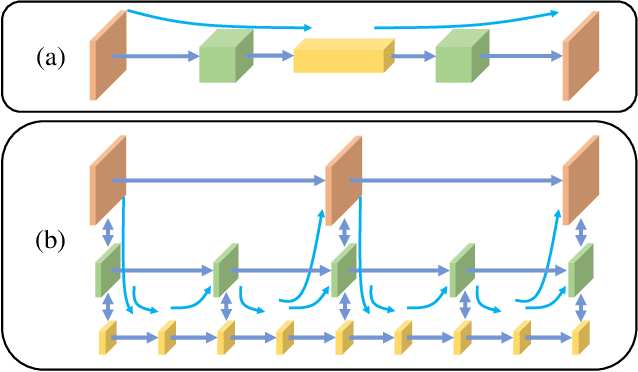

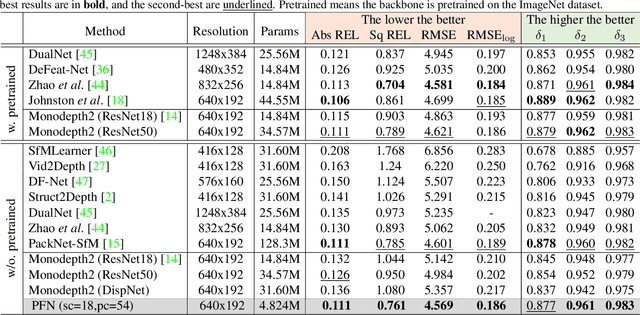

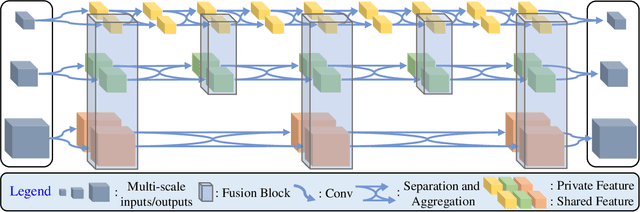

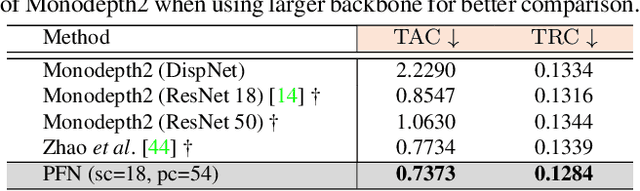

Abstract:We propose a new network architecture, the Fractal Pyramid Networks (PFNs) for pixel-wise prediction tasks as an alternative to the widely used encoder-decoder structure. In the encoder-decoder structure, the input is processed by an encoding-decoding pipeline that tries to get a semantic large-channel feature. Different from that, our proposed PFNs hold multiple information processing pathways and encode the information to multiple separate small-channel features. On the task of self-supervised monocular depth estimation, even without ImageNet pretrained, our models can compete or outperform the state-of-the-art methods on the KITTI dataset with much fewer parameters. Moreover, the visual quality of the prediction is significantly improved. The experiment of semantic segmentation provides evidence that the PFNs can be applied to other pixel-wise prediction tasks, and demonstrates that our models can catch more global structure information.

Object-Driven Active Mapping for More Accurate Object Pose Estimation and Robotic Grasping

Dec 03, 2020

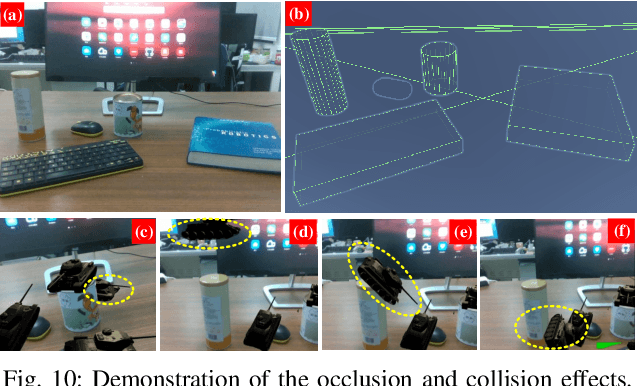

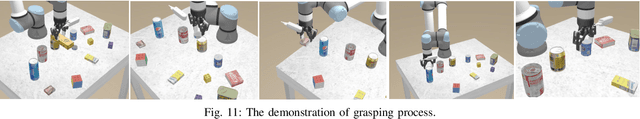

Abstract:This paper presents the first active object mapping framework for complex robotic grasping tasks. The framework is built on an object SLAM system integrated with a simultaneous multi-object pose estimation process. Aiming to reduce the observation uncertainty on target objects and increase their pose estimation accuracy, we also design an object-driven exploration strategy to guide the object mapping process. By combining the mapping module and the exploration strategy, an accurate object map that is compatible with robotic grasping can be generated. Quantitative evaluations also show that the proposed framework has a very high mapping accuracy. Manipulation experiments, including object grasping, object placement, and the augmented reality, significantly demonstrate the effectiveness and advantages of our proposed framework.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge