Xiaowen Gong

Single-Loop Federated Actor-Critic across Heterogeneous Environments

Dec 19, 2024

Abstract:Federated reinforcement learning (FRL) has emerged as a promising paradigm, enabling multiple agents to collaborate and learn a shared policy adaptable across heterogeneous environments. Among the various reinforcement learning (RL) algorithms, the actor-critic (AC) algorithm stands out for its low variance and high sample efficiency. However, little to nothing is known theoretically about AC in a federated setting, especially when each agent interacts with a potentially different environment. The lack of such results is attributed to various technical challenges: a two-level structure that couples the actor and the critic, heterogeneous environments, Markovian sampling, and multiple local updates. In response, we study \textit{Single-loop Federated Actor Critic} (SFAC), where agents perform actor-critic learning in a two-level federated manner while interacting with heterogeneous environments. We then provide bounds on the convergence error of SFAC. The results show that SFAC asymptotically converges to a near-stationary point, with the residual error proportional to environment heterogeneity. Moreover, the sample complexity exhibits a linear speed-up through the federation of agents. We evaluate the performance of SFAC through numerical experiments using common RL benchmarks, which demonstrate its effectiveness.

Truthful Incentive Mechanism for Federated Learning with Crowdsourced Data Labeling

Jan 31, 2023

Abstract:Federated learning (FL) has emerged as a promising paradigm that trains machine learning (ML) models on clients' devices in a distributed manner without the need to transmit clients' data to the FL server. In many applications of ML, the labels of training data need to be generated manually by human agents. In this paper, we study FL with crowdsourced data labeling, where the local data of each participating client are labeled manually by the client. We consider the strategic behavior of clients who may not exert the desired effort in their local data labeling and local model computation and may misreport their local models to the FL server. We characterize the performance bounds on the training loss as a function of clients' data labeling effort, local computation effort, and reported local models. We devise truthful incentive mechanisms which incentivize strategic clients to make truthful efforts and report true local models to the server. The truthful design exploits the non-trivial dependence of the training loss on clients' efforts and local models. Under the truthful mechanisms, we characterize the server's optimal local computation effort assignments. We evaluate the proposed FL algorithms with crowdsourced data labeling and the incentive mechanisms using experiments.

Olive Branch Learning: A Topology-Aware Federated Learning Framework for Space-Air-Ground Integrated Network

Dec 02, 2022

Abstract:The space-air-ground integrated network (SAGIN), one of the key technologies for next-generation mobile communication systems, can facilitate data transmission for users all over the world, especially in some remote areas where vast amounts of informative data are collected by Internet of remote things (IoRT) devices to support various data-driven artificial intelligence (AI) services. However, training AI models centrally with the assistance of SAGIN faces the challenges of highly constrained network topology, inefficient data transmission, and privacy issues. To tackle these challenges, we first propose a novel topology-aware federated learning framework for the SAGIN, namely Olive Branch Learning (OBL). Specifically, the IoRT devices in the ground layer leverage their private data to perform model training locally, while the air nodes in the air layer and the ring-structured low earth orbit (LEO) satellite constellation in the space layer are in charge of model aggregation (synchronization) at different scales. To further enhance communication efficiency and inference performance of OBL, an efficient Communication and Non-IID-aware Air node-Satellite Assignment (CNASA) algorithm is designed by taking the data class distribution of the air nodes as well as their geographic locations into account. Furthermore, we extend our OBL framework and CNASA algorithm to adapt to more complex multi-orbit satellite networks. We analyze the convergence of our OBL framework and conclude that the CNASA algorithm contributes to the fast convergence of the global model. Extensive experiments based on realistic datasets corroborate the superior performance of our algorithm over the benchmark policies.

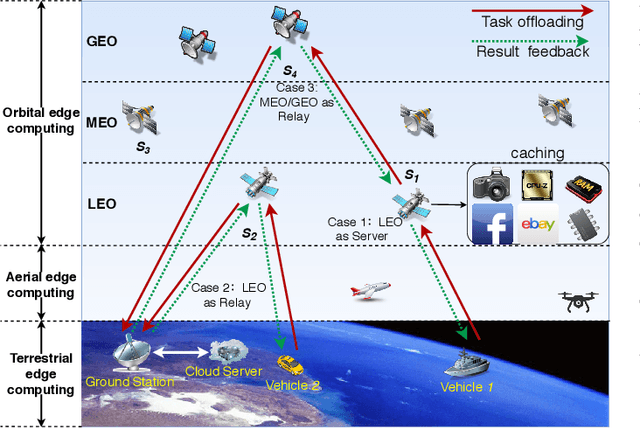

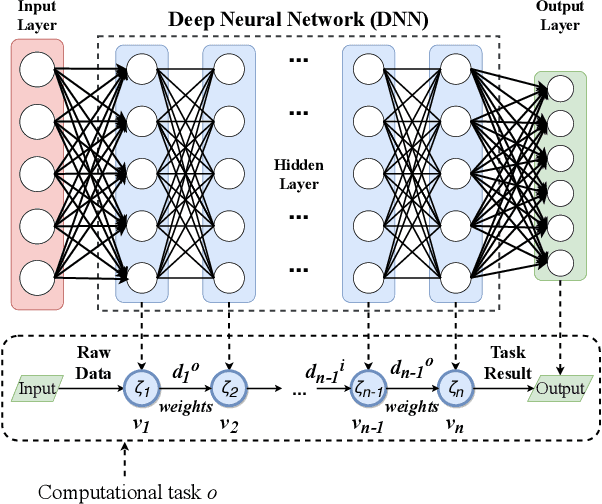

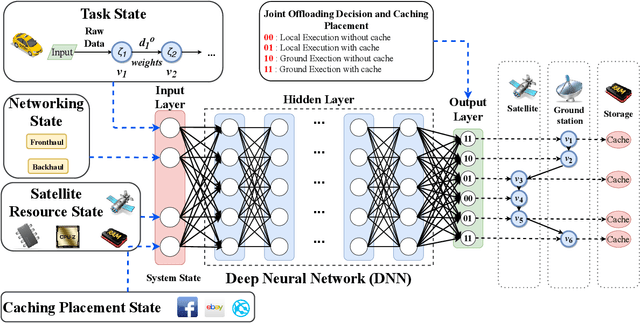

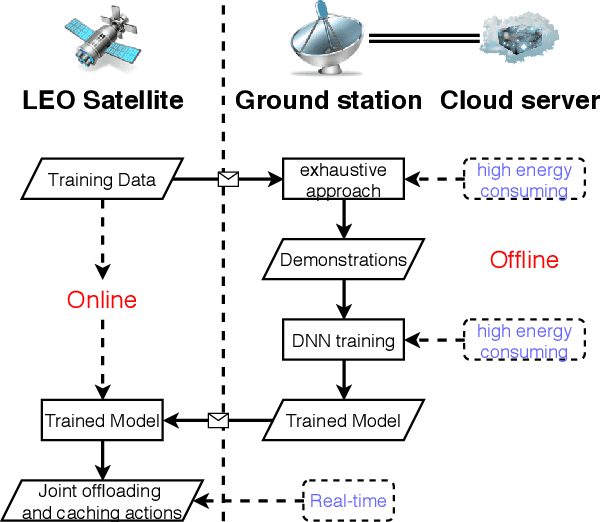

EC-SAGINs: Edge Computing-enhanced Space-Air-Ground Integrated Networks for Internet of Vehicles

Jan 15, 2021

Abstract:Edge computing-enhanced Internet of Vehicles (EC-IoV) enables ubiquitous data processing and content sharing among vehicles and terrestrial edge computing (TEC) infrastructures (e.g., 5G base stations and roadside units) with little or no human intervention, and plays a key role in intelligent transportation systems. However, EC-IoV is heavily dependent on the connections and interactions between vehicles and TEC infrastructures, and thus breaks down in remote areas where TEC infrastructures are unavailable (e.g., deserts, isolated islands, and disaster-stricken areas). Driven by ubiquitous connections and global-area coverage, space-air-ground integrated networks (SAGINs) efficiently support seamless coverage and efficient resource management, and represent the next frontier for edge computing. In light of this, we first review the state-of-the-art edge computing research for SAGINs in this article. After discussing several existing orbital and aerial edge computing architectures, we propose a framework of edge computing-enabled space-air-ground integrated networks (EC-SAGINs) to support various IoV services for vehicles in remote areas. The main objective of the framework is to minimize the task completion time and satellite resource usage. To this end, a pre-classification scheme is presented to reduce the size of the action space, and a deep imitation learning (DIL) driven offloading and caching algorithm is proposed to achieve real-time decision making. Simulation results show the effectiveness of our proposed scheme. Finally, we discuss some technical challenges and future directions.

When Deep Reinforcement Learning Meets Federated Learning: Intelligent Multi-Timescale Resource Management for Multi-access Edge Computing in 5G Ultra Dense Network

Sep 22, 2020

Abstract:Ultra-dense edge computing (UDEC) has great potential, especially in the 5G era, but its current solutions still face challenges, such as the lack of: i) efficient utilization of multiple 5G resources (e.g., computation, communication, storage and service resources); ii) low-overhead offloading decision making and resource allocation strategies; and iii) privacy and security protection schemes. Thus, we propose an intelligent ultra-dense edge computing (I-UDEC) framework, which integrates blockchain and Artificial Intelligence (AI) into 5G ultra-dense edge computing networks. First, we show the architecture of the framework. Then, in order to achieve real-time and low-overhead computation offloading decisions and resource allocation strategies, we design a novel two-timescale deep reinforcement learning (\textit{2Ts-DRL}) approach, consisting of a fast-timescale and a slow-timescale learning process. The primary objective is to minimize the total offloading delay and network resource usage by jointly optimizing computation offloading, resource allocation and service caching placement. We also leverage federated learning (FL) to train the \textit{2Ts-DRL} model in a distributed manner, aiming to protect the edge devices' data privacy. Simulation results corroborate the effectiveness of both the \textit{2Ts-DRL} and FL in the I-UDEC framework and show that our proposed algorithm can reduce task execution time by up to 31.87%.