Wolfgang Förstner

A Large Scale Homography Benchmark

Feb 20, 2023

Abstract: We present a large-scale dataset of Planes in 3D, Pi3D, of roughly 1000 planes observed in 10 000 images from the 1DSfM dataset, and HEB, a large-scale homography estimation benchmark leveraging Pi3D. The applications of the Pi3D dataset are diverse, e.g., training or evaluating monocular depth, surface normal estimation and image matching algorithms. The HEB dataset consists of 226 260 homographies and includes roughly 4M correspondences. The homographies link images that often undergo significant viewpoint and illumination changes. As applications of HEB, we perform a rigorous evaluation of a wide range of robust estimators and deep learning-based correspondence filtering methods, establishing the current state of the art in robust homography estimation. We also evaluate the uncertainty of the SIFT orientations and scales w.r.t. the ground truth coming from the underlying homographies and provide code for comparing the uncertainty of custom detectors. The dataset is available at https://github.com/danini/homography-benchmark.

Making Affine Correspondences Work in Camera Geometry Computation

Jul 20, 2020

Abstract: Local features, e.g., SIFT and its affine and learned variants, provide region-to-region rather than point-to-point correspondences. This has recently been exploited to create new minimal solvers for classical problems such as homography, essential and fundamental matrix estimation. The main advantage of such solvers is that their sample size is smaller, e.g., only two instead of four matches are required to estimate a homography. Works proposing such solvers often claim a significant improvement in run-time thanks to fewer RANSAC iterations. We show that this argument is not valid in practice if the solvers are used naively. To overcome this, we propose guidelines for effective use of region-to-region matches in the course of a full model estimation pipeline. We propose a method for refining the local feature geometries by symmetric intensity-based matching, combine uncertainty propagation inside RANSAC with preemptive model verification, show a general scheme for computing the uncertainty of minimal solver results, and adapt the sample cheirality check for homography estimation. Our experiments show that affine solvers can achieve accuracy comparable to point-based solvers at faster run-times when following our guidelines. We make code available at https://github.com/danini/affine-correspondences-for-camera-geometry.
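
The sample-size argument mentioned in the abstract above can be illustrated with the standard RANSAC iteration bound, N = log(1 - p) / log(1 - w^s), where w is the inlier ratio, s the minimal sample size and p the desired confidence. The following is a minimal sketch of that textbook formula only, not the authors' code; the function name `ransac_iterations` and the example inlier ratio of 0.5 are assumptions chosen for illustration.

```python
import math


def ransac_iterations(inlier_ratio: float, sample_size: int,
                      confidence: float = 0.99) -> int:
    """Textbook bound on the number of RANSAC iterations.

    Hypothetical helper for illustration; not taken from the paper's code.
    """
    if not 0.0 < inlier_ratio <= 1.0:
        raise ValueError("inlier_ratio must be in (0, 1]")
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be in (0, 1)")
    # Probability that one minimal sample contains only inliers.
    p_all_inliers = inlier_ratio ** sample_size
    if p_all_inliers >= 1.0:
        return 1  # every sample is outlier-free
    # Smallest N with 1 - (1 - w^s)^N >= confidence.
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - p_all_inliers))


# Assumed inlier ratio of 0.5: four point matches vs. two affine matches.
n_point = ransac_iterations(0.5, sample_size=4)
n_affine = ransac_iterations(0.5, sample_size=2)
print(n_point, n_affine)
```

The bound only counts iterations. It says nothing about the cost per iteration or the noise sensitivity of each solver, which is consistent with the abstract's point that fewer iterations alone do not guarantee a faster pipeline.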

Fast and Accurate Camera Covariance Computation for Large 3D Reconstruction

Aug 07, 2018

Abstract: Estimating the uncertainty of camera parameters computed in Structure from Motion (SfM) is an important tool for evaluating the quality of the reconstruction and guiding the reconstruction process. Yet, the quality of the estimated parameters of large reconstructions has rarely been evaluated due to the computational challenges. We present a new algorithm which exploits the sparsity of the uncertainty propagation and speeds the computation up about ten times w.r.t. previous approaches. Our computation is accurate and does not use any approximations. We can compute uncertainties of thousands of cameras in tens of seconds on a standard PC. We also demonstrate that our approach can be effectively used for reconstructions of any size by applying it to smaller sub-reconstructions.

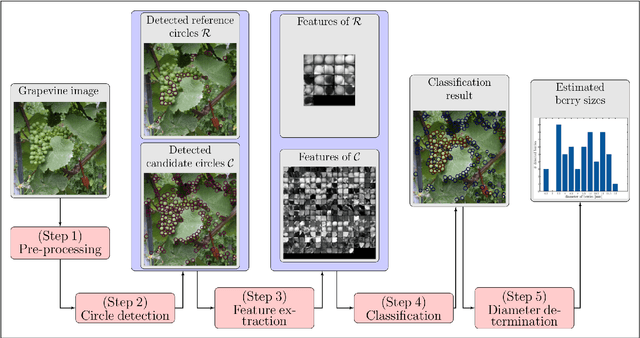

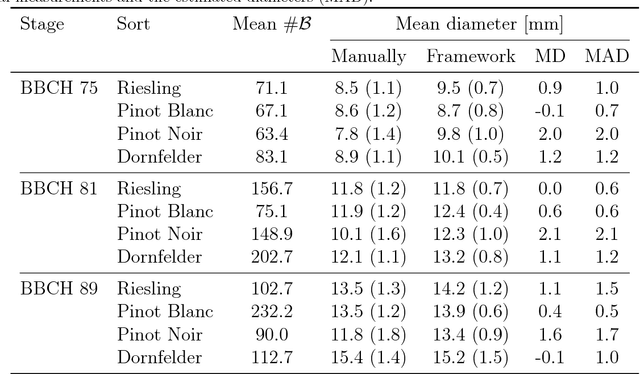

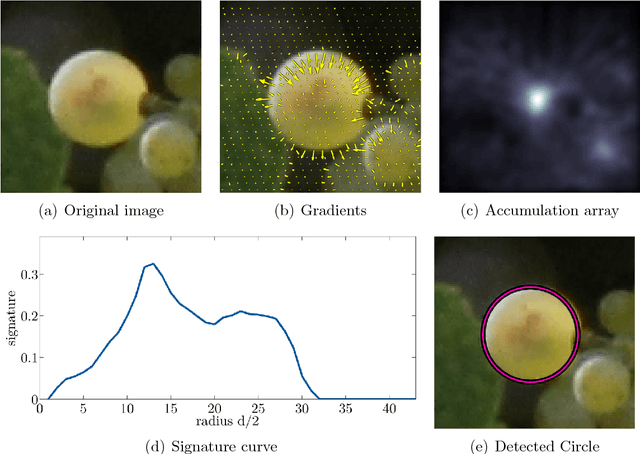

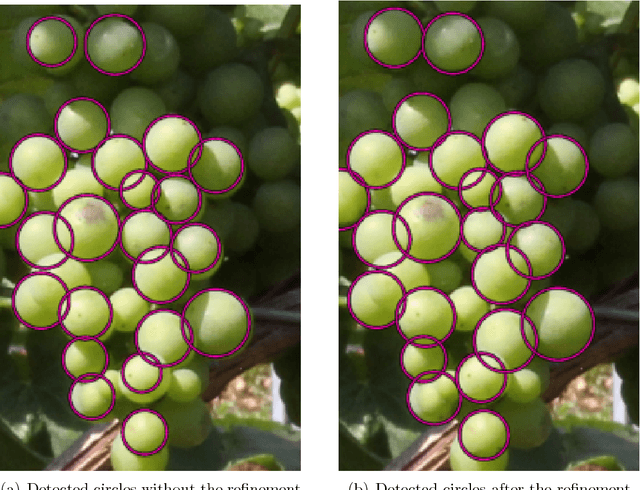

Automated Image Analysis Framework for the High-Throughput Determination of Grapevine Berry Sizes Using Conditional Random Fields

Dec 15, 2017

Abstract: Berry size is one of the most important fruit traits in grapevine breeding. Non-invasive, image-based phenotyping promises a fast and precise method for monitoring grapevine berry size. In the present study, an automated image analysis framework was developed to estimate the size of grapevine berries from images in a high-throughput manner. The framework includes (i) the detection of circular structures which are potentially berries and (ii) the classification of these into the class 'berry' or 'non-berry' by utilizing a conditional random field. The approach uses the concept of one-class classification, since only the target class 'berry' is of interest and needs to be modeled. Moreover, the classification was carried out using an automated active learning approach, i.e., no user interaction is required during the classification process and, in addition, the process adapts automatically to changing image conditions, e.g., illumination or berry color. The framework was tested on three datasets comprising 139 images in total. The images were taken in an experimental vineyard at different stages of grapevine growth according to the BBCH scale. The mean berry size of a plant estimated by the framework correlates with the manually measured berry size with a coefficient of $0.88$.

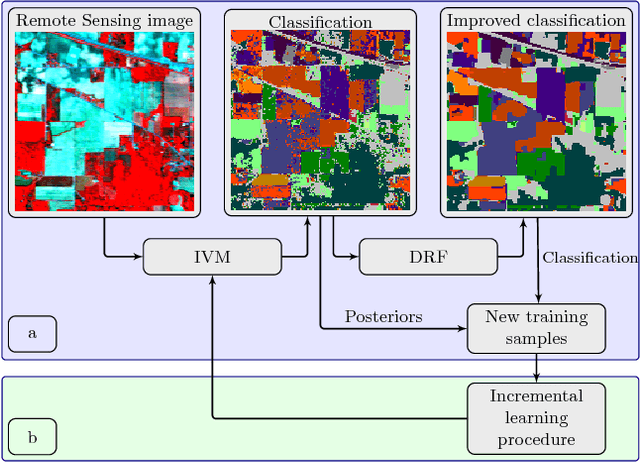

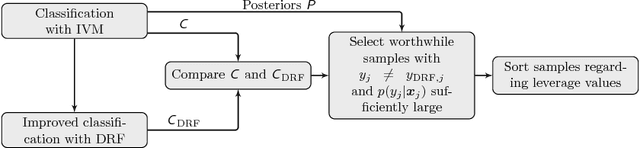

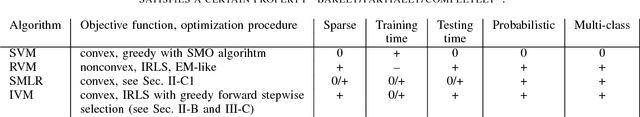

Incremental Import Vector Machines for Classifying Hyperspectral Data

Aug 20, 2017

Abstract: In this paper we propose an incremental learning strategy for import vector machines (IVM), a sparse kernel logistic regression approach. We use the procedure within a self-training scheme for the sequential classification of hyperspectral data. The strategy comprises the inclusion of new training samples to increase the classification accuracy and the deletion of non-informative samples to be memory- and runtime-efficient. Moreover, we update the parameters in the incremental IVM model without re-training from scratch. Therefore, the incremental classifier is able to deal with large data sets. The performance of the IVM in comparison to support vector machines (SVM) is evaluated in terms of accuracy, and experiments are conducted to assess the potential of the probabilistic outputs of the IVM. Experimental results demonstrate that the IVM and SVM perform similarly in terms of classification accuracy. However, the number of import vectors is significantly lower than the number of support vectors, and thus the computation time during classification can be decreased. Moreover, the probabilities provided by the IVM are more reliable than the probabilistic information derived from an SVM's output. In addition, the proposed self-training strategy can increase the classification accuracy. Overall, the IVM and its incremental version are worthwhile for the classification of hyperspectral data.
