Weixiong Zhang

Transformer-based toxin-protein interaction analysis prioritizes airborne particulate matter components with potential adverse health effects

Dec 21, 2024

Abstract:Air pollution, particularly airborne particulate matter (PM), poses a significant threat to public health globally. It is crucial to comprehend the association between PM-associated toxic components and their cellular targets in humans to understand the mechanisms by which air pollution impacts health and to establish causal relationships between air pollution and public health consequences. Although many studies have explored the impact of PM on human health, the understanding of the association between toxins and the associated targets remains limited. Leveraging cutting-edge deep learning technologies, we developed tipFormer (toxin-protein interaction prediction based on transformer), a novel deep-learning tool for identifying toxic components capable of penetrating human cells and instigating pathogenic biological activities and signaling cascades. It incorporates dual pre-trained language models to encode protein sequences and chemicals. It employs a convolutional encoder to assimilate the sequential attributes of proteins and chemicals. It then introduces a learning module with a cross-attention mechanism to decode and elucidate the multifaceted interactions pivotal for the hotspots binding proteins and chemicals. Experimental results show that tipFormer effectively captures interactions between proteins and toxic components. This approach offers significant value to air quality and toxicology researchers by allowing high-throughput identification and prioritization of hazards. It supports more targeted laboratory studies and field measurements, ultimately enhancing our understanding of how air pollution impacts human health.
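The cross-attention step mentioned in the abstract can be illustrated with a minimal sketch of generic scaled dot-product cross-attention, in which protein positions act as queries and chemical tokens act as keys and values. This is not tipFormer's implementation: the function names, the toy two-dimensional embeddings, and the absence of learned projection matrices are all assumptions made for illustration.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Generic scaled dot-product cross-attention (illustrative only).

    queries: one vector per protein position (hypothetical embeddings)
    keys, values: one vector per chemical token (hypothetical embeddings)
    Returns (outputs, weights); weights[i][j] is how strongly protein
    position i attends to chemical token j.
    """
    scale = math.sqrt(len(queries[0]))
    outputs, weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        w = softmax(scores)
        out = [
            sum(w[j] * values[j][c] for j in range(len(values)))
            for c in range(len(values[0]))
        ]
        weights.append(w)
        outputs.append(out)
    return outputs, weights


# Toy embeddings, made up for this example.
protein = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
chemical = [[1.0, 0.0], [0.0, 1.0]]
outputs, weights = cross_attention(protein, chemical, chemical)
```

Each row of `weights` sums to one, so it can be read as a distribution over chemical tokens for a given protein position. In a trained model, large entries would be candidates for the binding hotspots the abstract refers to.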

Pixel-global Self-supervised Learning with Uncertainty-aware Context Stabilizer

Oct 02, 2022

Abstract:We developed a novel SSL approach to capture global consistency and pixel-level local consistencies between differently augmented views of the same images to accommodate downstream discriminative and dense predictive tasks. We adopted the teacher-student architecture used in previous contrastive SSL methods. In our method, the global consistency is enforced by aggregating the compressed representations of augmented views of the same image. The pixel-level consistency is enforced by pursuing similar representations for the same pixel in differently augmented views. Importantly, we introduced an uncertainty-aware context stabilizer to adaptively preserve the context gap created by the two views from different augmentations. Moreover, we used Monte Carlo dropout in the stabilizer to measure uncertainty and adaptively balance the discrepancy between the representations of the same pixels in different views.
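As a rough illustration of how Monte Carlo dropout yields an uncertainty estimate, the sketch below runs many stochastic forward passes through a toy linear model and reports the mean and variance of the predictions. The model, the function names, and the parameter values are assumptions for illustration. The abstract does not specify how the stabilizer turns this uncertainty into a weighting, so that step is not shown.

```python
import random
import statistics


def dropout(vec, rate, rng):
    """Inverted dropout: zero each entry with probability `rate`,
    and rescale the surviving entries by 1 / (1 - rate)."""
    keep = 1.0 - rate
    return [x / keep if rng.random() < keep else 0.0 for x in vec]


def mc_dropout_predict(features, weights, rate=0.5, passes=200, seed=0):
    """Run `passes` stochastic forward passes of a toy linear model.

    Returns the mean prediction and the population variance across
    passes; the variance serves as the uncertainty estimate.
    """
    rng = random.Random(seed)
    preds = []
    for _ in range(passes):
        dropped = dropout(features, rate, rng)
        preds.append(sum(w * x for w, x in zip(weights, dropped)))
    return statistics.mean(preds), statistics.pvariance(preds)


# Hypothetical per-pixel features and model weights.
features = [1.0, 2.0, 3.0]
weights = [0.5, -0.25, 0.1]
mean, var = mc_dropout_predict(features, weights)
_, var_no_dropout = mc_dropout_predict(features, weights, rate=0.0)
```

With dropout disabled every pass gives the same prediction, so the variance collapses to zero. With dropout enabled, the spread across passes indicates how sensitive the prediction is to the sampled sub-network.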

RAW-GNN: RAndom Walk Aggregation based Graph Neural Network

Jun 28, 2022

Abstract:Graph-Convolution-based methods have been successfully applied to representation learning on homophily graphs where nodes with the same label or similar attributes tend to connect with one another. Due to the homophily assumption of Graph Convolutional Networks (GCNs) that these methods use, they are not suitable for heterophily graphs where nodes with different labels or dissimilar attributes tend to be adjacent. Several methods have attempted to address this heterophily problem, but they do not change the fundamental aggregation mechanism of GCNs because they rely on summation operators to aggregate information from neighboring nodes, which is implicitly subject to the homophily assumption. Here, we introduce a novel aggregation mechanism and develop a RAndom Walk Aggregation-based Graph Neural Network (called RAW-GNN) method. The proposed approach integrates the random walk strategy with graph neural networks. The new method utilizes breadth-first random walk search to capture homophily information and depth-first search to collect heterophily information. It replaces the conventional neighborhoods with path-based neighborhoods and introduces a new path-based aggregator based on Recurrent Neural Networks. These designs make RAW-GNN suitable for both homophily and heterophily graphs. Extensive experimental results showed that the new method achieved state-of-the-art performance on a variety of homophily and heterophily graphs.
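One common way to steer a random walk between breadth-first and depth-first behavior is a node2vec-style second-order bias, sketched below. This is an assumption made for illustration, not necessarily the sampler used in RAW-GNN; the function name, the parameters `p` and `q`, and the toy graph are hypothetical. The RNN-based path aggregator described in the abstract is not shown.

```python
import random


def biased_walk(adj, start, length, p, q, rng):
    """node2vec-style second-order random walk (illustrative only).

    adj: dict mapping each node to the set of its neighbors
    p:   return parameter (larger p discourages stepping back)
    q:   in-out parameter; q > 1 keeps the walk near the previous
         node (BFS-like), q < 1 pushes it outward (DFS-like)
    """
    walk = [start]
    while len(walk) < length:
        cur = walk[-1]
        nbrs = sorted(adj[cur])
        if not nbrs:
            break  # dead end: stop early
        if len(walk) == 1:
            walk.append(rng.choice(nbrs))
            continue
        prev = walk[-2]
        weights = []
        for n in nbrs:
            if n == prev:
                weights.append(1.0 / p)   # step back to previous node
            elif n in adj[prev]:
                weights.append(1.0)       # stay at distance 1 from prev
            else:
                weights.append(1.0 / q)   # move farther from prev
        walk.append(rng.choices(nbrs, weights=weights, k=1)[0])
    return walk


# Small undirected toy graph.
adj = {0: {1, 2}, 1: {0, 2, 3}, 2: {0, 1}, 3: {1, 4}, 4: {3}}
rng = random.Random(0)
bfs_like = biased_walk(adj, 0, 6, p=1.0, q=4.0, rng=rng)
dfs_like = biased_walk(adj, 0, 6, p=1.0, q=0.25, rng=rng)
```

Each returned walk is a path-based neighborhood of the start node. Under the abstract's description, paths from the two walk types would be aggregated separately to capture homophily and heterophily information.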

Response to: Significance and stability of deep learning-based identification of subtypes within major psychiatric disorders. Molecular Psychiatry (2022)

Jun 10, 2022

Abstract:Recently, Winter and Hahn [1] commented on our work on identifying subtypes of major psychiatric disorders (MPDs) based on neurobiological features using machine learning [2]. They questioned the generalizability of our methods and the statistical significance, stability, and overfitting of the results, and proposed a pipeline for disease subtyping. We appreciate their earnest consideration of our work; however, we need to point out their misconceptions of basic machine-learning concepts and delineate some key issues involved.

Heterogeneous Graph Neural Networks using Self-supervised Reciprocally Contrastive Learning

Apr 30, 2022

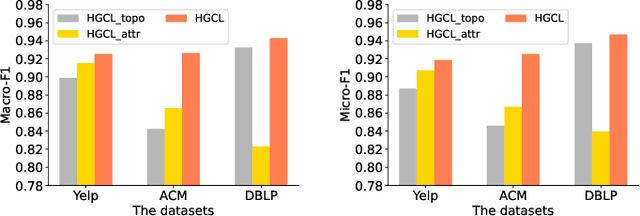

Abstract:The heterogeneous graph neural network (HGNN) is a very popular technique for the modeling and analysis of heterogeneous graphs. Most existing HGNN-based approaches are supervised or semi-supervised learning methods requiring graphs to be annotated, which is costly and time-consuming. Self-supervised contrastive learning has been proposed to address the problem of requiring annotated data by mining intrinsic information hidden within the given data. However, the existing contrastive learning methods are inadequate for heterogeneous graphs because they construct contrastive views based only on data perturbation or pre-defined structural properties (e.g., meta-paths) in graph data while ignoring the noise that may exist in both node attributes and graph topologies. We develop, for the first time, a novel and robust heterogeneous graph contrastive learning approach, namely HGCL, which introduces two views guided by node attributes and graph topologies, respectively, and integrates and enhances them through a reciprocally contrastive mechanism to better model heterogeneous graphs. In this new approach, we adopt distinct attribute and topology fusion mechanisms, each chosen to suit its view, which are conducive to mining relevant information in attributes and topologies separately. We further use both attribute similarity and topological correlation to construct high-quality contrastive samples. Extensive experiments on three large real-world heterogeneous graphs demonstrate the superiority and robustness of HGCL over state-of-the-art methods.

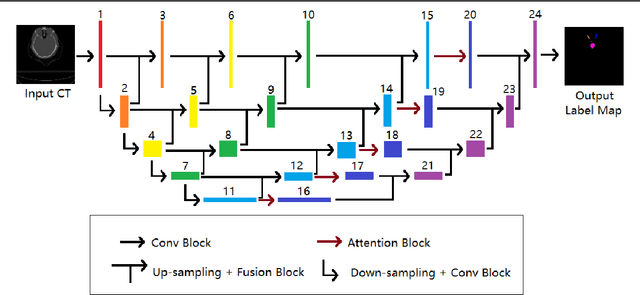

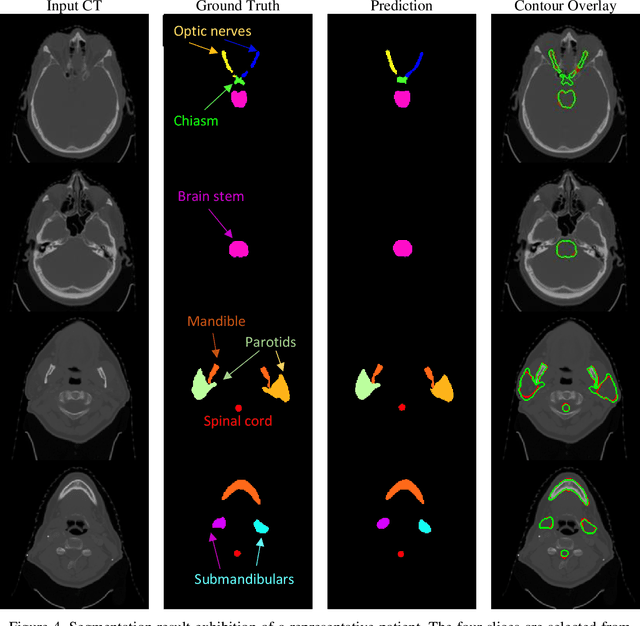

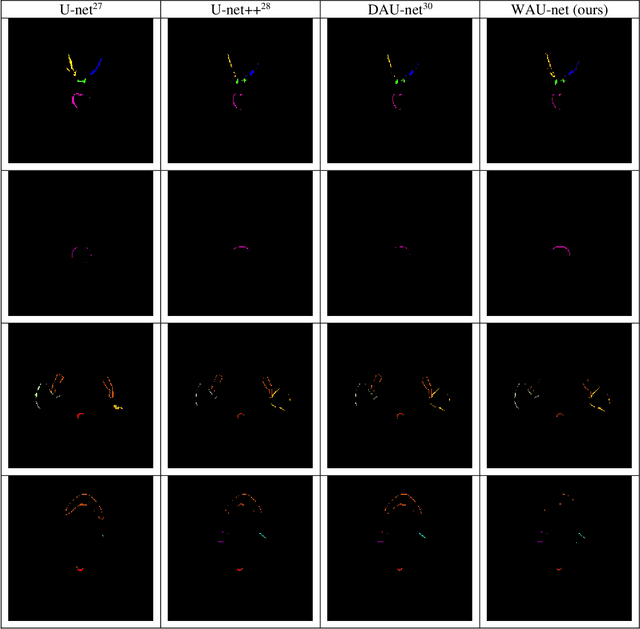

Weaving Attention U-net: A Novel Hybrid CNN and Attention-based Method for Organs-at-risk Segmentation in Head and Neck CT Images

Jul 10, 2021

Abstract:In radiotherapy planning, manual contouring is labor-intensive and time-consuming. Accurate and robust automated segmentation models improve the efficiency and treatment outcome. We aim to develop a novel hybrid deep learning approach, combining convolutional neural networks (CNNs) and the self-attention mechanism, for rapid and accurate multi-organ segmentation on head and neck computed tomography (CT) images. Head and neck CT images with manual contours of 115 patients were retrospectively collected and used. We set the training/validation/testing ratio to 81/9/25 and used the 10-fold cross-validation strategy to select the best model parameters. The proposed hybrid model segmented ten organs-at-risk (OARs) altogether for each case. The performance of the model was evaluated by three metrics, i.e., the Dice Similarity Coefficient (DSC), Hausdorff distance 95% (HD95), and mean surface distance (MSD). We also tested the performance of the model on the Head and Neck 2015 challenge dataset and compared it against several state-of-the-art automated segmentation algorithms. The proposed method generated contours that closely resemble the ground truth for ten OARs. Our results of the new Weaving Attention U-net demonstrate superior or similar performance on the segmentation of head and neck CT images.
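For reference, the Dice Similarity Coefficient used as an evaluation metric above is defined as DSC = 2|A ∩ B| / (|A| + |B|) for a predicted mask A and a ground-truth mask B. The sketch below computes it on flattened binary masks. The function name and the convention of returning 1.0 when both masks are empty are assumptions for illustration, not details taken from the paper.

```python
def dice_coefficient(pred, truth):
    """Dice Similarity Coefficient for two flattened binary masks.

    pred, truth: equal-length sequences of 0/1 (or False/True) voxels.
    Returns 2 * |A ∩ B| / (|A| + |B|).
    """
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of voxels")
    intersection = sum(1 for p, t in zip(pred, truth) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    if total == 0:
        # Assumed convention: two empty masks count as a perfect match.
        return 1.0
    return 2.0 * intersection / total


# Prediction covers two voxels, ground truth covers one, overlap is one.
example = dice_coefficient([1, 1, 0, 0], [1, 0, 0, 0])  # 2 * 1 / (2 + 1)
```

In a multi-organ setting such as the one described, the score would typically be computed per organ and then reported per organ or averaged.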

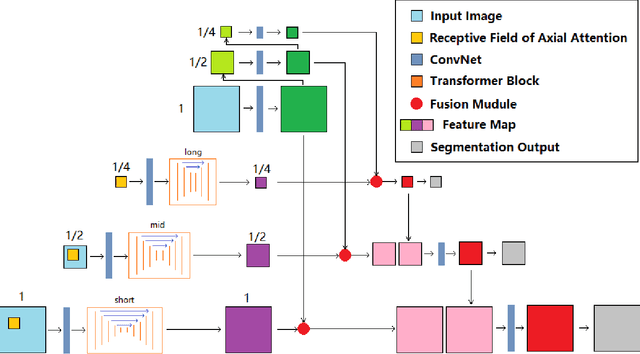

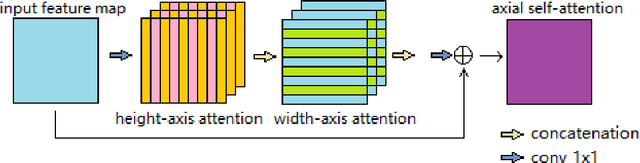

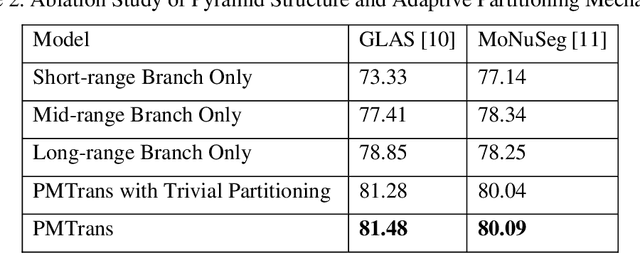

Pyramid Medical Transformer for Medical Image Segmentation

Apr 29, 2021

Abstract:Deep neural networks have been a prevailing technique in the field of medical image processing. However, the most popular convolutional neural network (CNN)-based methods for medical image segmentation are imperfect because they cannot adequately model long-range pixel relations. Transformers and the self-attention mechanism were recently proposed to effectively learn long-range dependencies by modeling all pairs of word-to-word attention regardless of their positions. The idea has also been extended to the computer vision field by creating and treating image patches as embeddings. Considering the computational complexity of whole-image self-attention, current transformer-based models settle for a rigid partitioning scheme that would potentially lose informative relations. Besides, current medical transformers model global context on full-resolution images, leading to unnecessary computation costs. To address these issues, we developed a novel method to integrate multi-scale attention and CNN feature extraction using a pyramidal network architecture, namely Pyramid Medical Transformer (PMTrans). The PMTrans captured multi-range relations by working on multi-resolution images. An adaptive partitioning scheme was implemented to retain informative relations and to access different receptive fields efficiently. Experimental results on two medical image datasets, gland segmentation and MoNuSeg datasets, showed that PMTrans outperformed the latest CNN-based and transformer-based models for medical image segmentation.

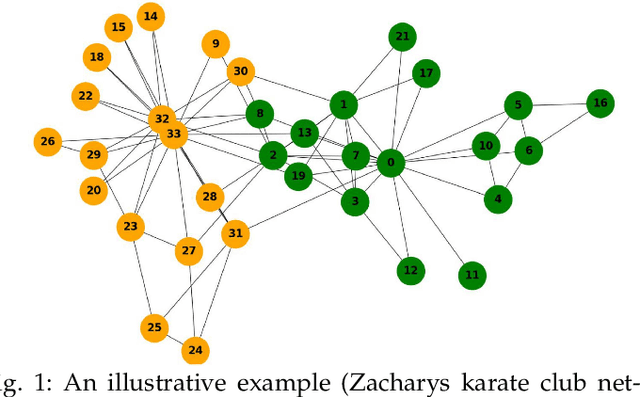

A Survey of Community Detection Approaches: From Statistical Modeling to Deep Learning

Jan 03, 2021

Abstract:Community detection, a fundamental task for network analysis, aims to partition a network into multiple sub-structures to help reveal their latent functions. Community detection has been extensively studied in and broadly applied to many real-world network problems. Classical approaches to community detection typically utilize probabilistic graphical models and adopt a variety of prior knowledge to infer community structures. As the problems that network methods try to solve and the network data to be analyzed become increasingly sophisticated, new approaches have also been proposed and developed, particularly those that utilize deep learning and convert networked data into low-dimensional representations. Despite all the recent advances, there is still a lack of insightful understanding of the theoretical and methodological underpinnings of community detection, which will be critically important for future development of the area of network analysis. In this paper, we develop and present a unified architecture of network community-finding methods to characterize the state of the art of the field of community detection. Specifically, we provide a comprehensive review of the existing community detection methods and introduce a new taxonomy that divides the existing methods into two categories, namely probabilistic graphical models and deep learning. We then discuss in detail the main idea behind each method in the two categories. Furthermore, to promote future development of community detection, we release several benchmark datasets from several problem domains and highlight their applications to various network analysis tasks. We conclude with discussions of the challenges of the field and suggestions of possible directions for future research.

Semi-supervised Semantic Segmentation of Organs at Risk on 3D Pelvic CT Images

Sep 21, 2020

Abstract:Automated segmentation of organs-at-risk in pelvic computed tomography (CT) images can assist radiotherapy treatment planning by saving the time and effort of manual contouring and reducing intra-observer and inter-observer variation. However, training high-performance deep-learning segmentation models usually requires large amounts of labeled data, which are labor-intensive to collect. Lack of annotated data presents a significant challenge for many medical imaging-related deep learning solutions. This paper proposes a novel end-to-end convolutional neural network-based semi-supervised adversarial method that can segment multiple organs-at-risk, including prostate, bladder, rectum, left femur, and right femur. New design schemes are introduced to enhance the baseline residual U-net architecture to improve performance. Importantly, new unlabeled CT images are synthesized by a generative adversarial network (GAN) that is trained on given images to overcome the inherent problem of insufficient annotated data in practice. A semi-supervised adversarial strategy is then introduced to utilize labeled and unlabeled 3D CT images. The new method is evaluated on a dataset of 100 training cases and 20 testing cases. Experimental results, including four metrics (dice similarity coefficient, average Hausdorff distance, average surface Hausdorff distance, and relative volume difference), show that the new method outperforms several state-of-the-art segmentation approaches.

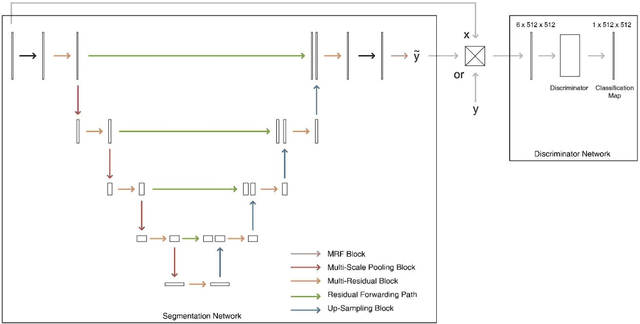

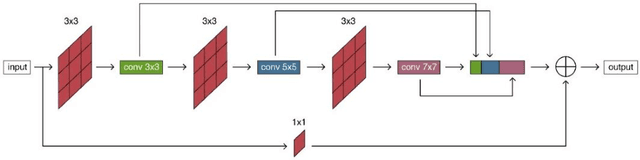

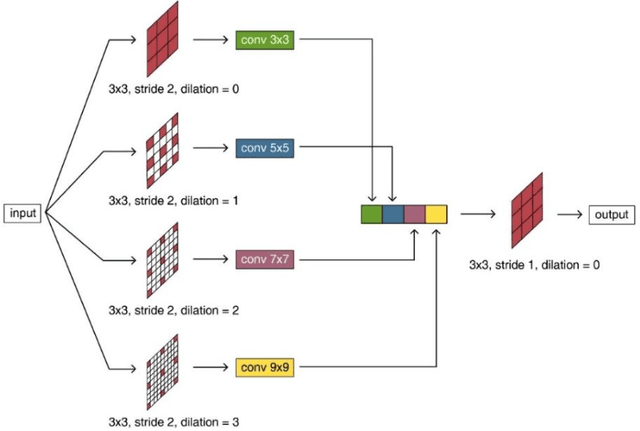

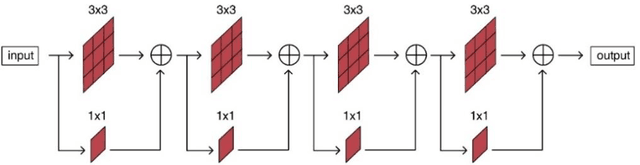

ARPM-net: A novel CNN-based adversarial method with Markov Random Field enhancement for prostate and organs at risk segmentation in pelvic CT images

Aug 26, 2020

Abstract:Purpose: This research aims to develop a novel CNN-based adversarial deep learning method to improve and expedite the multi-organ semantic segmentation of CT images, and to generate accurate contours on pelvic CT images. Methods: Planning CT and structure datasets for 110 patients with intact prostate cancer were retrospectively selected and divided for 10-fold cross-validation. The proposed adversarial multi-residual multi-scale pooling Markov Random Field (MRF) enhanced network (ARPM-net) implements an adversarial training scheme. A segmentation network and a discriminator network were trained jointly, and only the segmentation network was used for prediction. The segmentation network integrates a newly designed MRF block into a variation of multi-residual U-net. The discriminator takes the product of the original CT and the prediction/ground-truth as input and classifies the input into fake/real. The segmentation network and discriminator network can be trained jointly as a whole, or the discriminator can be used for fine-tuning after the segmentation network is coarsely trained. Multi-scale pooling layers were introduced to preserve spatial resolution during pooling using less memory compared to atrous convolution layers. An adaptive loss function was proposed to enhance the training on small or low-contrast organs. The accuracy of modeled contours was measured with the Dice similarity coefficient (DSC), Average Hausdorff Distance (AHD), Average Surface Hausdorff Distance (ASHD), and relative Volume Difference (VD) using clinical contours as ground-truth references. The proposed ARPM-net method was compared to several state-of-the-art deep learning methods.
