Baozhou Sun

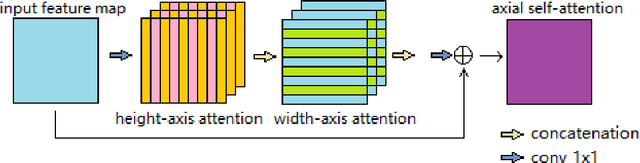

Weaving Attention U-net: A Novel Hybrid CNN and Attention-based Method for Organs-at-risk Segmentation in Head and Neck CT Images

Jul 10, 2021

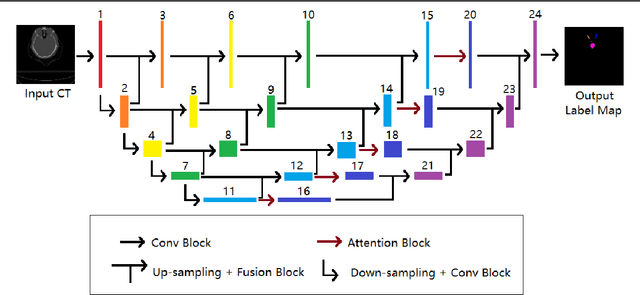

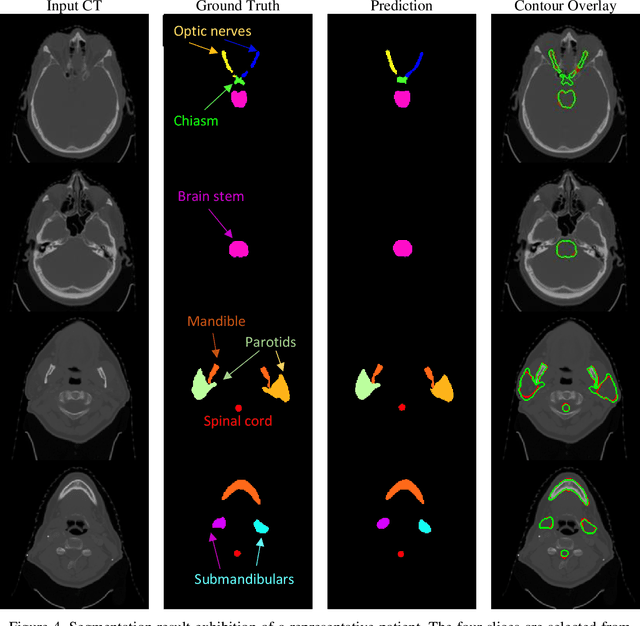

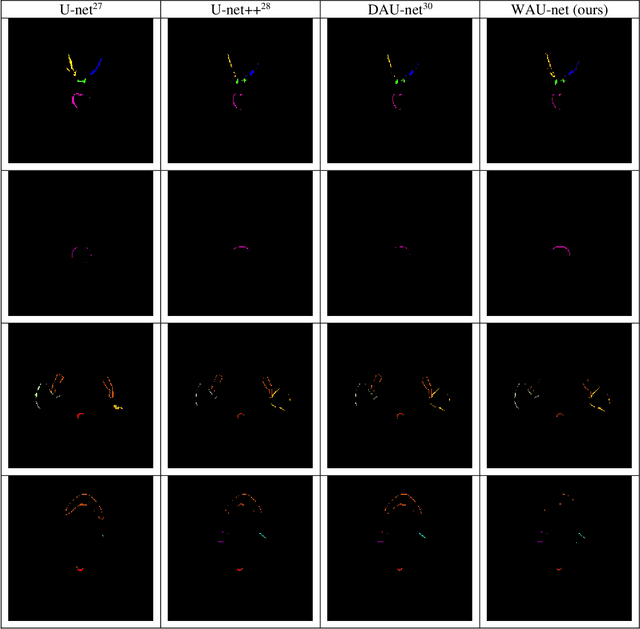

Abstract: In radiotherapy planning, manual contouring is labor-intensive and time-consuming. Accurate and robust automated segmentation models improve efficiency and treatment outcomes. We aim to develop a novel hybrid deep learning approach that combines convolutional neural networks (CNNs) with the self-attention mechanism for rapid and accurate multi-organ segmentation on head and neck computed tomography (CT) images. Head and neck CT images with manual contours from 115 patients were retrospectively collected. We split the cases 81/9/25 for training/validation/testing and used 10-fold cross-validation to select the best model parameters. The proposed hybrid model segmented ten organs-at-risk (OARs) simultaneously for each case. Model performance was evaluated with three metrics: the Dice Similarity Coefficient (DSC), the 95% Hausdorff distance (HD95), and the mean surface distance (MSD). We also tested the model on the Head and Neck 2015 challenge dataset and compared it against several state-of-the-art automated segmentation algorithms. The proposed method generated contours that closely resemble the ground truth for all ten OARs. The new Weaving Attention U-net demonstrates superior or similar performance on the segmentation of head and neck CT images.
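The Dice Similarity Coefficient named in the abstract is commonly defined as twice the overlap of two masks divided by the sum of their sizes. The following is a minimal NumPy sketch of that standard definition, not the authors' evaluation code; the function name `dice_similarity` and the choice to score two empty masks as 1.0 are assumptions made for illustration.

```python
import numpy as np

def dice_similarity(pred, truth):
    """Dice Similarity Coefficient between two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    denom = pred.sum() + truth.sum()
    if denom == 0:
        # Assumption: two empty masks are treated as perfect agreement.
        return 1.0
    return 2.0 * np.logical_and(pred, truth).sum() / denom

# Toy 2D masks: the prediction covers 4 pixels, the reference covers 6,
# and all 4 predicted pixels lie inside the reference.
pred = np.zeros((4, 4), dtype=bool)
pred[1:3, 1:3] = True
truth = np.zeros((4, 4), dtype=bool)
truth[1:3, 1:4] = True

dsc = dice_similarity(pred, truth)  # 2 * 4 / (4 + 6) = 0.8
```

The same function applies unchanged to 3D volumes, since it only counts voxels.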

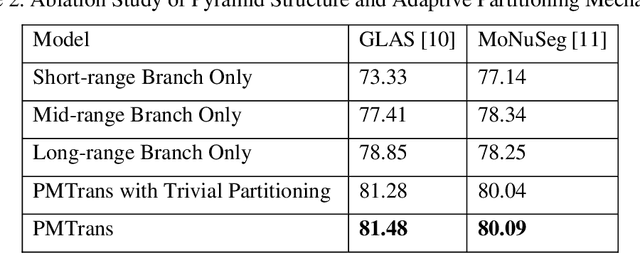

Pyramid Medical Transformer for Medical Image Segmentation

Apr 29, 2021

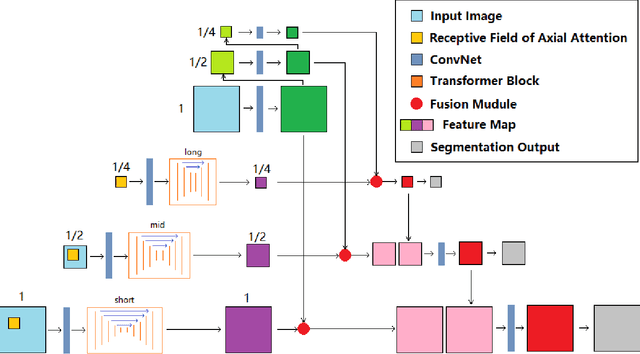

Abstract: Deep neural networks have been a prevailing technique in the field of medical image processing. However, the most popular convolutional neural network (CNN) based methods for medical image segmentation are imperfect because they cannot adequately model long-range pixel relations. Transformers and the self-attention mechanism were recently proposed to learn long-range dependencies effectively by modeling all pairs of word-to-word attention regardless of their positions. The idea has also been extended to computer vision by creating image patches and treating them as embeddings. Because of the computational complexity of whole-image self-attention, current transformer-based models settle for a rigid partitioning scheme that can lose informative relations. In addition, current medical transformers model global context on full-resolution images, leading to unnecessary computation costs. To address these issues, we developed a novel method that integrates multi-scale attention and CNN feature extraction using a pyramidal network architecture, namely the Pyramid Medical Transformer (PMTrans). PMTrans captures multi-range relations by working on multi-resolution images. An adaptive partitioning scheme was implemented to retain informative relations and to access different receptive fields efficiently. Experimental results on two medical image datasets, the gland segmentation and MoNuSeg datasets, showed that PMTrans outperformed the latest CNN-based and transformer-based models for medical image segmentation.
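To make the idea of treating image patches as embeddings concrete, here is a minimal NumPy sketch of fixed-grid patch partitioning followed by scaled dot-product self-attention. It illustrates the generic rigid scheme the abstract describes, not the adaptive partitioning of PMTrans; the function names are hypothetical, and the learned query/key/value projections of a real transformer are replaced by identity maps as a simplification.

```python
import numpy as np

def image_to_patches(image, patch):
    """Split an (H, W) image into non-overlapping patch x patch tiles,
    returning one flattened tile per row."""
    h, w = image.shape
    assert h % patch == 0 and w % patch == 0, "image must tile evenly"
    tiles = image.reshape(h // patch, patch, w // patch, patch).swapaxes(1, 2)
    return tiles.reshape(-1, patch * patch)

def self_attention(tokens):
    """Scaled dot-product self-attention over all token pairs.
    Simplification: queries, keys and values are the tokens themselves."""
    d = tokens.shape[1]
    scores = tokens @ tokens.T / np.sqrt(d)
    scores = scores - scores.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True)  # row-wise softmax
    return weights @ tokens, weights

rng = np.random.default_rng(0)
image = rng.standard_normal((8, 8))
tokens = image_to_patches(image, patch=4)   # 4 tokens, each of length 16
out, weights = self_attention(tokens)       # weights has shape (4, 4)
```

The attention matrix grows with the square of the number of tokens, which is why whole-image attention at full resolution becomes expensive and why partitioning schemes are used at all.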

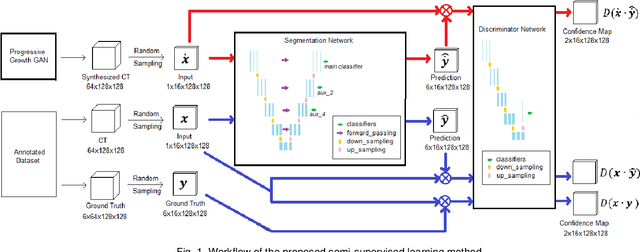

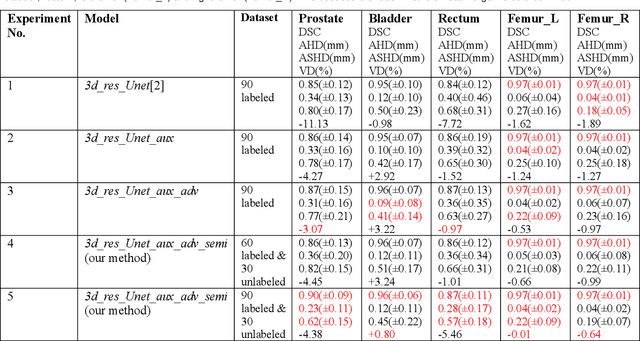

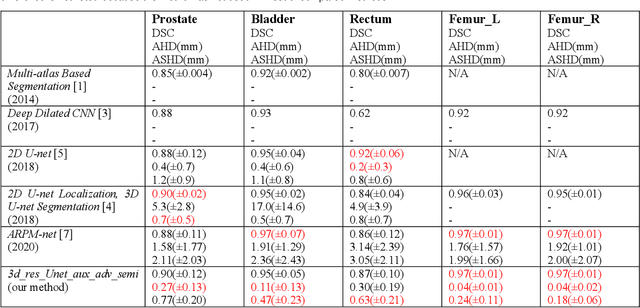

Semi-supervised Semantic Segmentation of Organs at Risk on 3D Pelvic CT Images

Sep 21, 2020

Abstract: Automated segmentation of organs-at-risk in pelvic computed tomography (CT) images can assist radiotherapy treatment planning by saving the time and effort of manual contouring and by reducing intra-observer and inter-observer variation. However, training high-performance deep-learning segmentation models usually requires large amounts of labeled data, which are labor-intensive to collect. The lack of annotated data presents a significant challenge for many medical imaging-related deep learning solutions. This paper proposes a novel end-to-end convolutional neural network-based semi-supervised adversarial method that can segment multiple organs-at-risk, including the prostate, bladder, rectum, left femur, and right femur. New design schemes are introduced to enhance the baseline residual U-net architecture and improve performance. Importantly, new unlabeled CT images are synthesized by a generative adversarial network (GAN) trained on the given images to overcome the inherent problem of insufficient annotated data in practice. A semi-supervised adversarial strategy is then introduced to utilize both labeled and unlabeled 3D CT images. The new method is evaluated on a dataset of 100 training cases and 20 testing cases. Experimental results on four metrics (Dice similarity coefficient, average Hausdorff distance, average surface Hausdorff distance, and relative volume difference) show that the new method outperforms several state-of-the-art segmentation approaches.
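Of the four metrics listed, relative volume difference is the simplest to state: it compares the segmented volume with the reference volume. The sketch below assumes the unsigned form, |V_pred - V_ref| / V_ref; the abstract does not say whether the paper uses a signed or unsigned variant, and the function name and `voxel_volume` parameter are hypothetical.

```python
import numpy as np

def relative_volume_difference(pred, truth, voxel_volume=1.0):
    """Unsigned relative volume difference between two binary masks.
    Assumption: normalized by the reference (truth) volume."""
    pred_vol = np.count_nonzero(pred) * voxel_volume
    truth_vol = np.count_nonzero(truth) * voxel_volume
    if truth_vol == 0:
        raise ValueError("reference mask is empty")
    return abs(pred_vol - truth_vol) / truth_vol

# Toy 3D masks: the reference has 32 voxels, the prediction has 24.
truth = np.zeros((4, 4, 4), dtype=bool)
truth[:2] = True
pred = np.zeros((4, 4, 4), dtype=bool)
pred[:2, :, :3] = True

rvd = relative_volume_difference(pred, truth)  # |24 - 32| / 32 = 0.25
```

Because the voxel volume cancels in the ratio, it only matters if the two masks were sampled on different grids.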

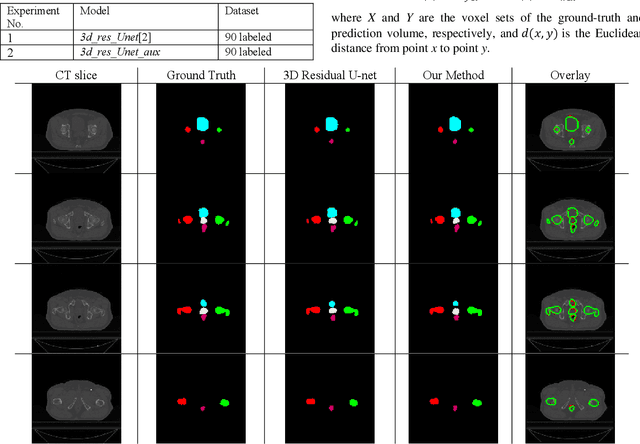

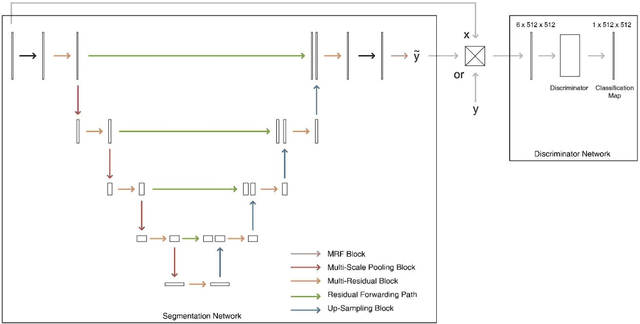

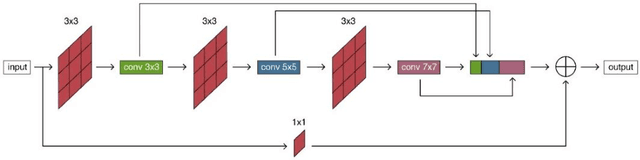

ARPM-net: A novel CNN-based adversarial method with Markov Random Field enhancement for prostate and organs at risk segmentation in pelvic CT images

Aug 26, 2020

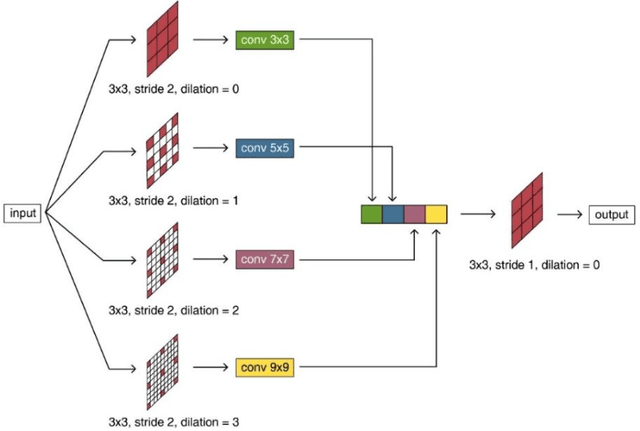

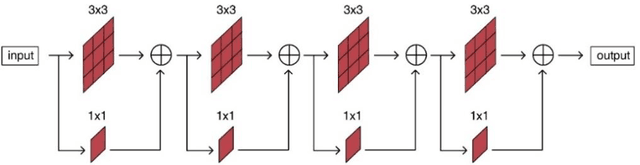

Abstract: Purpose: This research aims to develop a novel CNN-based adversarial deep learning method to improve and expedite the multi-organ semantic segmentation of CT images, and to generate accurate contours on pelvic CT images. Methods: Planning CT and structure datasets for 110 patients with intact prostate cancer were retrospectively selected and divided for 10-fold cross-validation. The proposed adversarial multi-residual multi-scale pooling Markov Random Field (MRF) enhanced network (ARPM-net) implements an adversarial training scheme. A segmentation network and a discriminator network were trained jointly, and only the segmentation network was used for prediction. The segmentation network integrates a newly designed MRF block into a variation of the multi-residual U-net. The discriminator takes the product of the original CT and the prediction or ground truth as input and classifies that input as fake or real. The segmentation network and discriminator network can be trained jointly as a whole, or the discriminator can be used for fine-tuning after the segmentation network is coarsely trained. Multi-scale pooling layers were introduced to preserve spatial resolution during pooling while using less memory than atrous convolution layers. An adaptive loss function was proposed to enhance training on small or low-contrast organs. The accuracy of the modeled contours was measured with the Dice similarity coefficient (DSC), Average Hausdorff Distance (AHD), Average Surface Hausdorff Distance (ASHD), and relative Volume Difference (VD), using clinical contours as the ground-truth reference. The proposed ARPM-net method was compared to several state-of-the-art deep learning methods.
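The discriminator input described above can be illustrated with a small NumPy sketch. It assumes that "product" means an element-wise product between the CT slice and each per-class map, giving one masked CT channel per class; the abstract does not specify the exact tensor layout, so the shapes and the function name `discriminator_input` are hypothetical.

```python
import numpy as np

def discriminator_input(ct, class_maps):
    """Multiply a CT slice (H, W) into each class map (C, H, W).
    Assumption: element-wise product, broadcast over the class axis."""
    ct = np.asarray(ct, dtype=float)
    class_maps = np.asarray(class_maps, dtype=float)
    return class_maps * ct[np.newaxis, ...]

# Toy 2x2 CT slice with intensities already normalized to [0, 1].
ct = np.array([[0.2, 0.8],
               [0.5, 1.0]])

# Ground-truth labels for two classes, converted to one-hot maps (C, H, W).
labels = np.array([[0, 1],
                   [1, 0]])
one_hot = np.stack([labels == c for c in range(2)]).astype(float)

# "Real" sample for the discriminator; a "fake" sample would pass the
# segmentation network's predicted probability maps instead of one_hot.
real_input = discriminator_input(ct, one_hot)
```

With one-hot maps, each channel keeps the CT intensities inside one structure and zeros elsewhere, so the discriminator sees the image content each contour encloses rather than the contour alone.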
