Wang-Zhou Dai

Pre-Training Meta-Rule Selection Policy for Visual Generative Abductive Learning

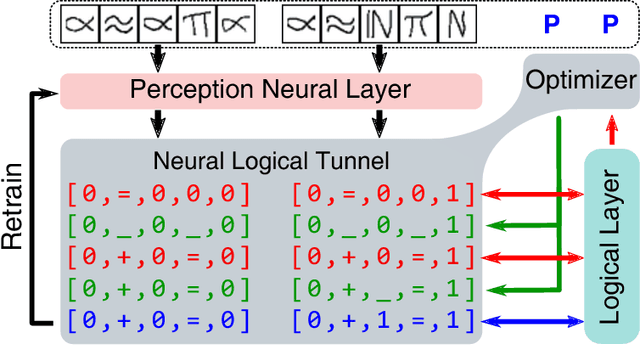

Mar 09, 2025

Abstract:Visual generative abductive learning studies jointly training a symbol-grounded neural visual generator and inducing logic rules from data, such that after learning, the visual generation process is guided by the induced logic rules. A major challenge for this task is to reduce the time cost of logic abduction during learning, an essential step when the logic symbol set is large and the logic rule to induce is complicated. To address this challenge, we propose a pre-training method for obtaining a meta-rule selection policy for the recently proposed visual generative learning approach AbdGen [Peng et al., 2023], aiming at significantly reducing the candidate meta-rule set and pruning the search space. The selection model is built on embedding representations of both the symbol groundings of cases and the meta-rules, and can be effectively integrated with both the neural model and the logic reasoning system. The pre-training process is done on pure symbol data, not involving symbol grounding learning of raw visual inputs, making the entire learning process low-cost. An additional interesting observation is that the selection policy can rectify symbol grounding errors unseen during pre-training, which results from the memorization ability of the attention mechanism and the relative stability of symbolic patterns. Experimental results show that our method is able to effectively address the meta-rule selection problem for visual abduction, boosting the efficiency of visual generative abductive learning. Code is available at https://github.com/future-item/metarule-select.

Efficient Rectification of Neuro-Symbolic Reasoning Inconsistencies by Abductive Reflection

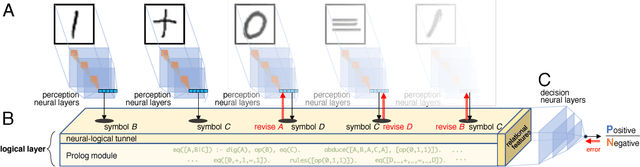

Dec 11, 2024

Abstract:Neuro-Symbolic (NeSy) AI could be regarded as an analogy to human dual-process cognition, modeling the intuitive System 1 with neural networks and the algorithmic System 2 with symbolic reasoning. However, for complex learning targets, NeSy systems often generate outputs inconsistent with domain knowledge and it is challenging to rectify them. Inspired by the human Cognitive Reflection, which promptly detects errors in our intuitive response and revises them by invoking the System 2 reasoning, we propose to improve NeSy systems by introducing Abductive Reflection (ABL-Refl) based on the Abductive Learning (ABL) framework. ABL-Refl leverages domain knowledge to abduce a reflection vector during training, which can then flag potential errors in the neural network outputs and invoke abduction to rectify them and generate consistent outputs during inference. ABL-Refl is highly efficient in contrast to previous ABL implementations. Experiments show that ABL-Refl outperforms state-of-the-art NeSy methods, achieving excellent accuracy with fewer training resources and enhanced efficiency.

Generating by Understanding: Neural Visual Generation with Logical Symbol Groundings

Oct 26, 2023

Abstract:Despite the great success of neural visual generative models in recent years, integrating them with strong symbolic knowledge reasoning systems remains a challenging task. The main challenges are two-fold: one is symbol assignment, i.e. bonding latent factors of neural visual generators with meaningful symbols from knowledge reasoning systems. The other is rule learning, i.e. learning new rules, which govern the generative process of the data, to augment the knowledge reasoning systems. To deal with these symbol grounding problems, we propose a neural-symbolic learning approach, Abductive Visual Generation (AbdGen), for integrating logic programming systems with neural visual generative models based on the abductive learning framework. To achieve reliable and efficient symbol assignment, the quantized abduction method is introduced for generating abduction proposals by nearest-neighbor lookups within semantic codebooks. To achieve precise rule learning, the contrastive meta-abduction method is proposed to eliminate wrong rules with positive cases and avoid less-informative rules with negative cases simultaneously. Experimental results on various benchmark datasets show that, compared to the baselines, AbdGen requires significantly less instance-level labeling information for symbol assignment. Furthermore, our approach can effectively learn underlying logical generative rules from data, which is beyond the capability of existing approaches.

Human Comprehensible Active Learning of Genome-Scale Metabolic Networks

Aug 31, 2023

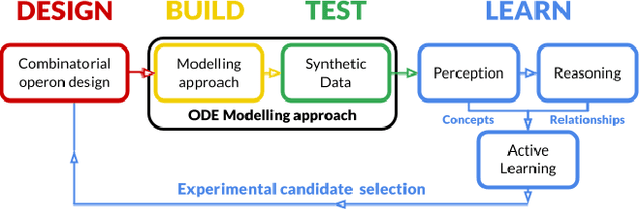

Abstract:An important application of Synthetic Biology is the engineering of the host cell system to yield useful products. However, an increase in the scale of the host system leads to a huge design space and requires a large number of validation trials with high experimental costs. A comprehensible machine learning approach that efficiently explores the hypothesis space and guides experimental design is urgently needed for the Design-Build-Test-Learn (DBTL) cycle of the host cell system. We introduce a novel machine learning framework, ILP-iML1515, based on Inductive Logic Programming (ILP), that performs abductive logical reasoning and actively learns from training examples. In contrast to numerical models, ILP-iML1515 is built on comprehensible logical representations of a genome-scale metabolic model and can update the model by learning new logical structures from auxotrophic mutant trials. The ILP-iML1515 framework 1) allows high-throughput simulations and 2) actively selects experiments that reduce the experimental cost of learning gene functions in comparison to randomly selected experiments.

Deciphering Raw Data in Neuro-Symbolic Learning with Provable Guarantees

Aug 21, 2023

Abstract:Neuro-symbolic hybrid systems are promising for integrating machine learning and symbolic reasoning, where perception models are facilitated with information inferred from a symbolic knowledge base through logical reasoning. Despite empirical evidence showing the ability of hybrid systems to learn accurate perception models, the theoretical understanding of learnability is still lacking. Hence, it remains unclear why a hybrid system succeeds for a specific task and when it may fail given a different knowledge base. In this paper, we introduce a novel way of characterising supervision signals from a knowledge base, and establish a criterion for determining the knowledge's efficacy in facilitating successful learning. This, for the first time, allows us to address the two questions above by inspecting the knowledge base under investigation. Our analysis suggests that many knowledge bases satisfy the criterion, thus enabling effective learning, while some fail to satisfy it, indicating potential failures. Comprehensive experiments confirm the utility of our criterion on benchmark tasks.

Automated Biodesign Engineering by Abductive Meta-Interpretive Learning

May 17, 2021

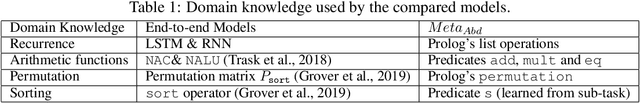

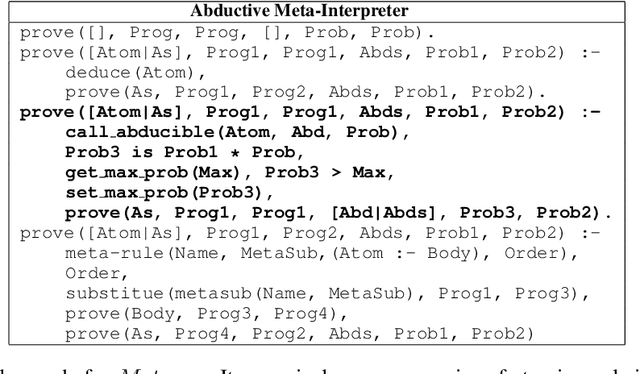

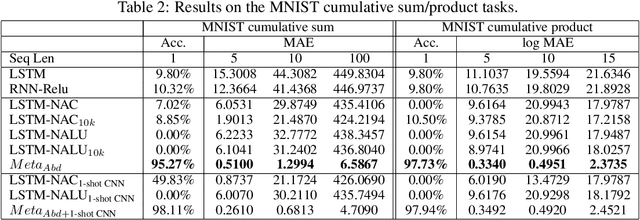

Abstract:The application of Artificial Intelligence (AI) to synthetic biology will provide the foundation for the creation of a high-throughput automated platform for genetic design, in which a learning machine is used to iteratively optimise the system through a design-build-test-learn (DBTL) cycle. However, mainstream machine learning techniques, represented by deep learning, lack the capability to represent relational knowledge and require prodigious amounts of annotated training data. These drawbacks strongly restrict AI's role in synthetic biology, in which experimentation is inherently resource- and time-intensive. In this work, we propose an automated biodesign engineering framework empowered by Abductive Meta-Interpretive Learning ($Meta_{Abd}$), a novel machine learning approach that combines symbolic and sub-symbolic machine learning, to further enhance the DBTL cycle by enabling the learning machine to 1) exploit domain knowledge and learn human-interpretable models that are expressed by formal languages such as first-order logic; 2) simultaneously optimise the structure and parameters of the models to make accurate numerical predictions; 3) reduce the cost of experiments and effort on data annotation by actively generating hypotheses and examples. To verify the effectiveness of $Meta_{Abd}$, we have modelled a synthetic dataset for the production of proteins from a three-gene operon in a microbial host, which represents a common synthetic biology problem.

Abductive Knowledge Induction From Raw Data

Oct 07, 2020

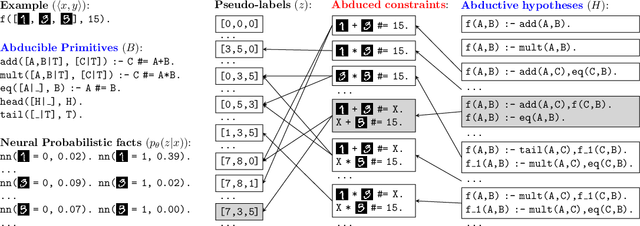

Abstract:For many reasoning-heavy tasks, it is challenging to find an appropriate end-to-end differentiable approximation to domain-specific inference mechanisms. Neural-Symbolic (NeSy) AI divides the end-to-end pipeline into neural perception and symbolic reasoning, which can directly exploit general domain knowledge such as algorithms and logic rules. However, it suffers from the exponential computational complexity caused by the interface between the two components, where the neural model lacks direct supervision, and the symbolic model lacks accurate input facts. As a result, NeSy approaches usually focus on learning the neural model with a sound and complete symbolic knowledge base while avoiding a crucial problem: where does the knowledge come from? In this paper, we present Abductive Meta-Interpretive Learning ($Meta_{Abd}$), which unites abduction and induction to learn perceptual neural networks and first-order logic theories simultaneously from raw data. Given the same amount of domain knowledge, we demonstrate that $Meta_{Abd}$ not only outperforms the compared end-to-end models in predictive accuracy and data efficiency but also induces logic programs that can be re-used as background knowledge in subsequent learning tasks. To the best of our knowledge, $Meta_{Abd}$ is the first system that can jointly learn neural networks and recursive first-order logic theories with predicate invention.

Tunneling Neural Perception and Logic Reasoning through Abductive Learning

Feb 06, 2018

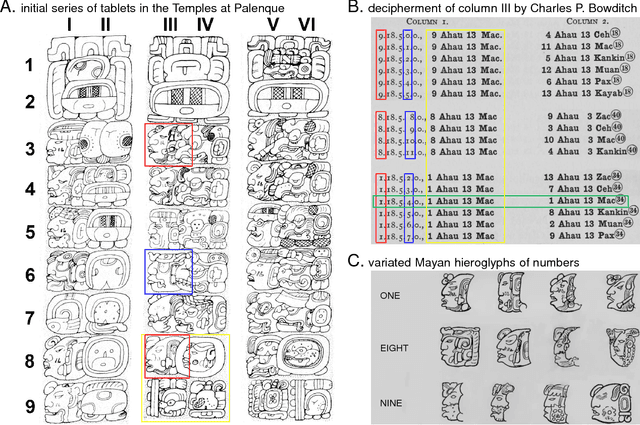

Abstract:Perception and reasoning are basic human abilities that are seamlessly connected as part of human intelligence. However, in current machine learning systems, the perception and reasoning modules are incompatible. Tasks requiring joint perception and reasoning ability are difficult to accomplish autonomously and still demand human intervention. Inspired by the way language experts decoded Mayan scripts by joining two abilities in an abductive manner, this paper proposes the abductive learning framework. The framework learns perception and reasoning simultaneously with the help of a trial-and-error abductive process. We present the Neural-Logical Machine as an implementation of this novel learning framework. We demonstrate that, using human-like abductive learning, the machine learns from a small set of simple hand-written equations and then generalizes well to complex equations, a feat that is beyond the capability of state-of-the-art neural network models. The abductive learning framework explores a new direction for approaching human-level learning ability.

Inductive Logic Boosting

Feb 25, 2014

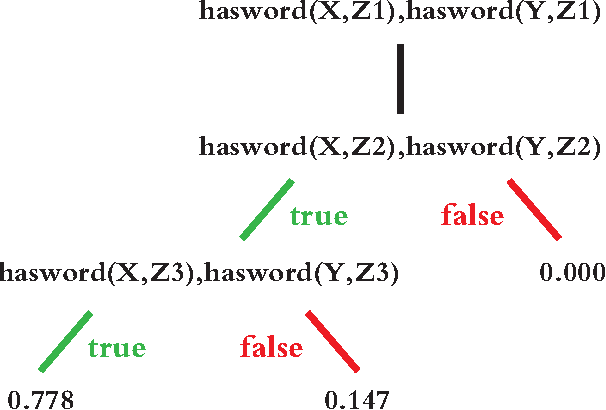

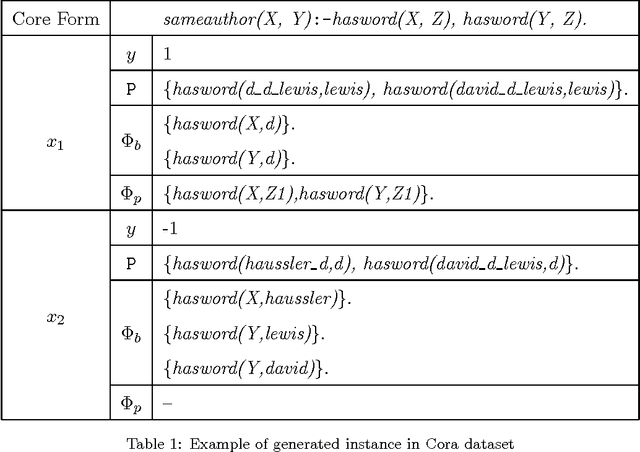

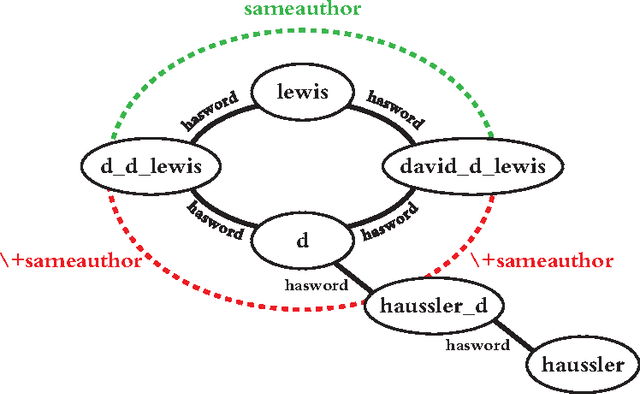

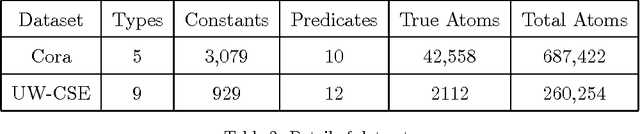

Abstract:Recent years have seen a surge of interest in Probabilistic Logic Programming (PLP) and Statistical Relational Learning (SRL) models that combine logic with probabilities. Structure learning of these systems lies at the intersection of Inductive Logic Programming (ILP) and statistical learning (SL). However, ILP cannot deal with probabilities, and SL cannot model relational hypotheses. The biggest challenge of integrating these two machine learning frameworks is how to estimate the probability of a logic clause only from the observation of grounded logic atoms. Many current methods model a joint probability by representing a clause as a graphical model and its literals as vertices in it. This model is still too complicated and can only be approximated by pseudo-likelihood. We propose the Inductive Logic Boosting framework, which transforms the relational dataset into a feature-based dataset, induces logic rules by boosting Problog Rule Trees, and relaxes the independence constraint of pseudo-likelihood. Experimental evaluation on benchmark datasets demonstrates that the AUC-PR and AUC-ROC values of ILP-learned rules are higher than those of current state-of-the-art SRL methods.
