Wael Farhan

Gemini: A Family of Highly Capable Multimodal Models

Dec 19, 2023

Abstract:This report introduces a new family of multimodal models, Gemini, that exhibit remarkable capabilities across image, audio, video, and text understanding. The Gemini family consists of Ultra, Pro, and Nano sizes, suitable for applications ranging from complex reasoning tasks to on-device memory-constrained use-cases. Evaluation on a broad range of benchmarks shows that our most-capable Gemini Ultra model advances the state of the art in 30 of 32 of these benchmarks - notably being the first model to achieve human-expert performance on the well-studied exam benchmark MMLU, and improving the state of the art in every one of the 20 multimodal benchmarks we examined. We believe that the new capabilities of Gemini models in cross-modal reasoning and language understanding will enable a wide variety of use cases and we discuss our approach toward deploying them responsibly to users.

SPARTA: Speaker Profiling for ARabic TAlk

Dec 13, 2020

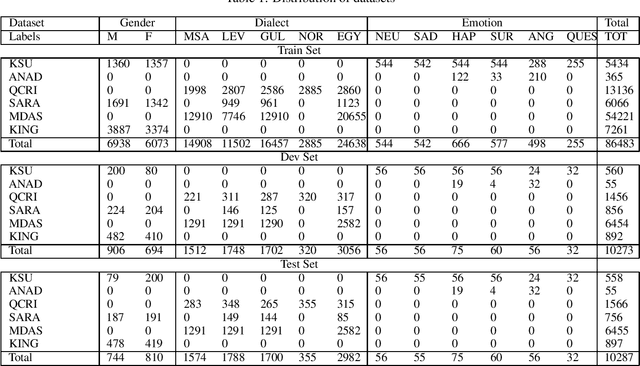

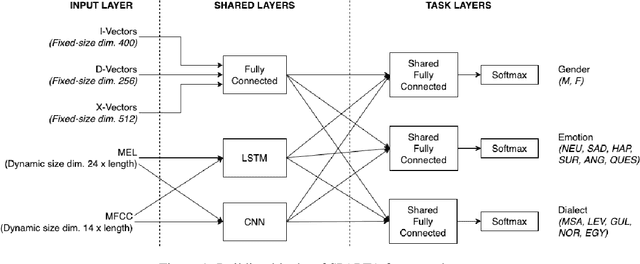

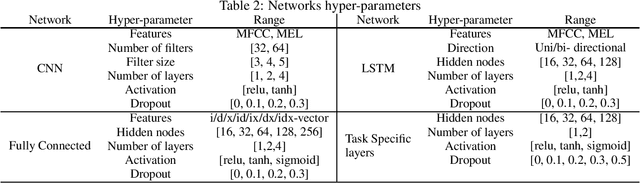

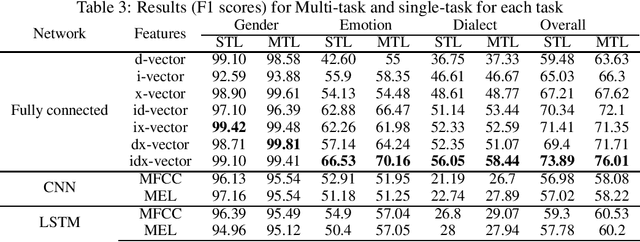

Abstract:This paper proposes a novel approach to the automatic estimation of three speaker traits from Arabic speech: gender, emotion, and dialect. Having shown promising results on various text classification tasks, the multi-task learning (MTL) approach is applied in this paper to Arabic speech classification tasks. The dataset was assembled from six publicly available datasets. First, the datasets were edited and thoroughly divided into train, development, and test sets (open to the public), and a benchmark was set for each task and dataset throughout the paper. Then, three different networks were explored: Long Short Term Memory (LSTM), Convolutional Neural Network (CNN), and Fully-Connected Neural Network (FCNN), on five different types of features: two raw features (MFCC and MEL) and three pre-trained vectors (i-vectors, d-vectors, and x-vectors). The LSTM and CNN networks were implemented using the raw features (MFCC and MEL), whereas the FCNN was explored on the pre-trained vectors, with the hyper-parameters of these networks varied to obtain the best results for each dataset and task. MTL was evaluated against the single task learning (STL) approach for the three tasks and six datasets; MTL and the pre-trained vectors almost consistently outperformed STL. All the data and pre-trained models used in this paper are publicly available.

Multi-Dialect Arabic BERT for Country-Level Dialect Identification

Jul 10, 2020

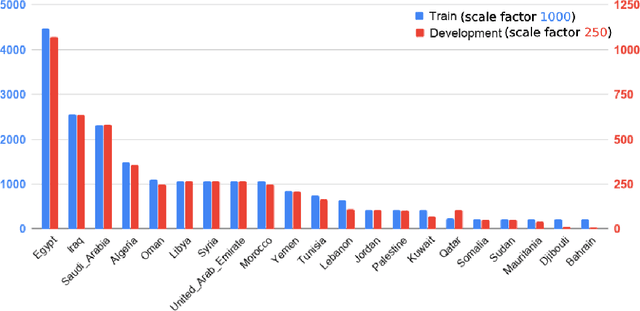

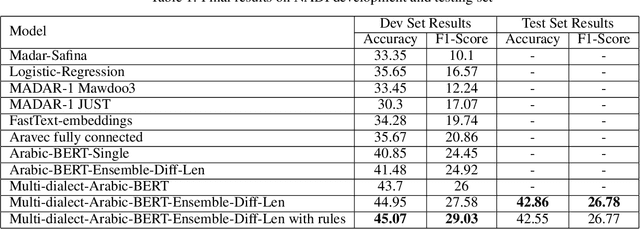

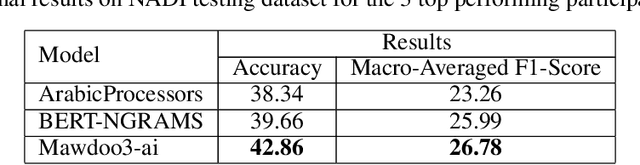

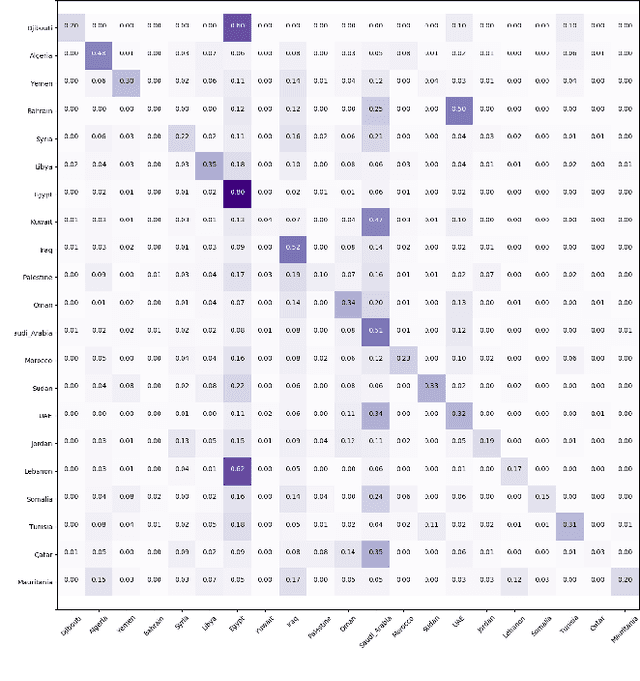

Abstract:Arabic dialect identification is a complex problem owing to a number of inherent properties of the language itself. In this paper, we present the experiments conducted and the models developed by our competing team, Mawdoo3 AI, along the way to achieving our winning solution to subtask 1 of the Nuanced Arabic Dialect Identification (NADI) shared task. The dialect identification subtask provides 21,000 country-level labeled tweets covering all 21 Arab countries. An unlabeled corpus of 10M tweets from the same domain is also provided by the competition organizers for optional use. Our winning solution came in the form of an ensemble of different training iterations of our pre-trained BERT model, which achieved a micro-averaged F1-score of 26.78% on the subtask at hand. We publicly release the pre-trained language model component of our winning solution under the name Multi-dialect-Arabic-BERT for any interested researcher.

Deep Contextualized Pairwise Semantic Similarity for Arabic Language Questions

Sep 19, 2019

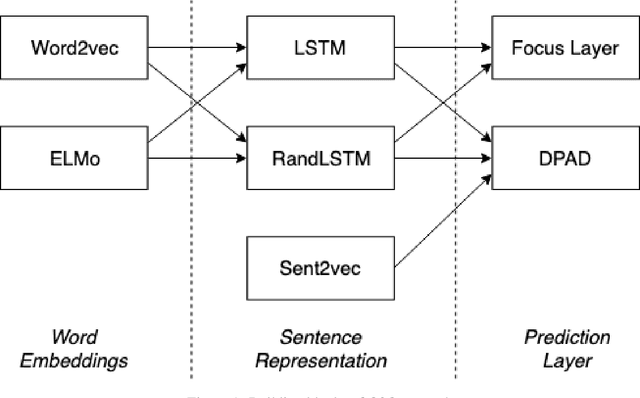

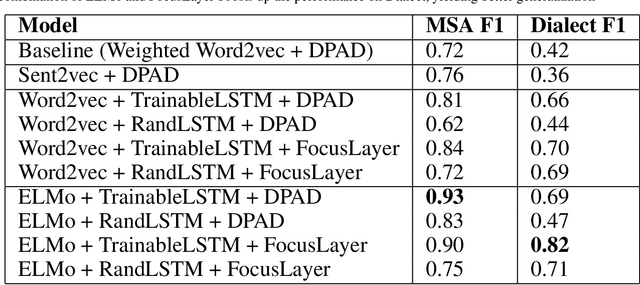

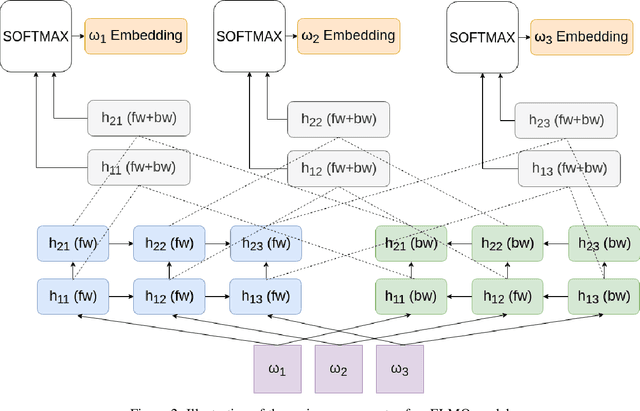

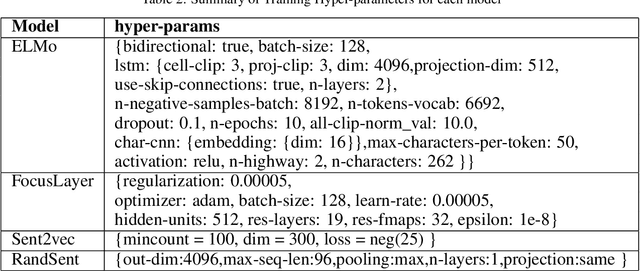

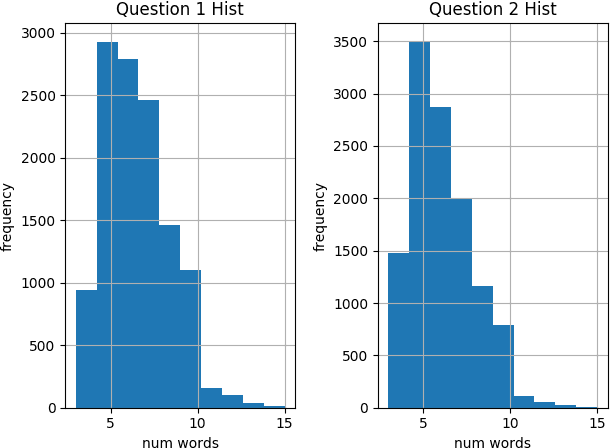

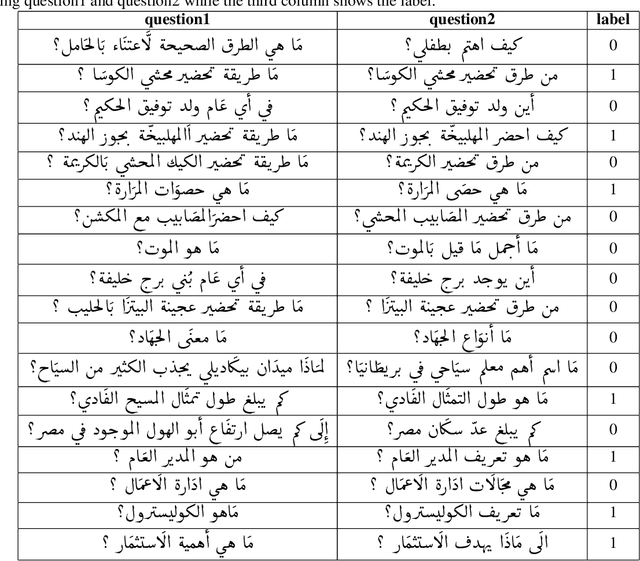

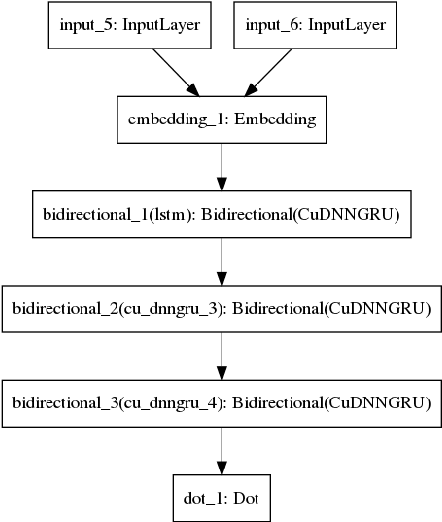

Abstract:Question semantic similarity is a challenging and active research problem that is very useful in many NLP applications, for example detecting duplicate questions on community question answering platforms such as Quora. Arabic is considered to be an under-resourced language, has many dialects, and is rich in morphology. Combined, these challenges make identifying semantically similar questions in Arabic even more difficult. In this paper, we introduce a novel approach to tackle this problem and test it on two benchmarks: one for Modern Standard Arabic (MSA), and another for the 24 major Arabic dialects. We are able to show that our new system outperforms state-of-the-art approaches by achieving 93% F1-score on the MSA benchmark and 82% on the dialectal one. This is achieved by utilizing contextualized word representations (ELMo embeddings) trained on a text corpus containing MSA and dialectal sentences. This, in combination with a pairwise fine-grained similarity layer, helps our question-to-question similarity model generalize its predictions to different dialects while being trained only on question-to-question MSA data.

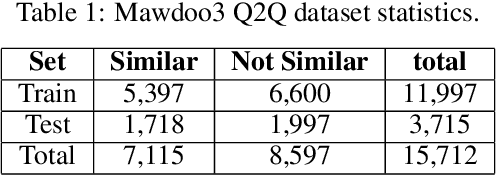

NSURL-2019 Shared Task 8: Semantic Question Similarity in Arabic

Sep 12, 2019

Abstract:Question semantic similarity (Q2Q) is a challenging task that is very useful in many NLP applications, such as detecting duplicate questions and question answering systems. In this paper, we present the results and findings of the shared task (Semantic Question Similarity in Arabic). The task was organized as part of the first workshop on NLP Solutions for Under Resourced Languages (NSURL 2019). The goal of the task is to predict whether two questions are semantically similar or not, even if they are phrased differently. A total of 9 teams participated in the task. The datasets created for this task are made publicly available to support further research on Arabic Q2Q.
