Vinitha Ranganeni

Multiple Ways of Working with Users to Develop Physically Assistive Robots

Mar 07, 2024

Abstract: Despite the growth of physically assistive robotics (PAR) research over the last decade, nearly half of PAR user studies do not involve participants with the target disabilities. There are several reasons for this -- recruitment challenges, small sample sizes, and transportation logistics -- all influenced by systemic barriers that people with disabilities face. However, it is well-established that working with end-users results in technology that better addresses their needs and integrates with their lived circumstances. In this paper, we reflect on multiple approaches we have taken to working with people with motor impairments across the design, development, and evaluation of three PAR projects: (a) assistive feeding with a robot arm; (b) assistive teleoperation with a mobile manipulator; and (c) shared control with a robot arm. We discuss these approaches to working with users along three dimensions -- individual vs. community-level insight, logistic burden on end-users vs. researchers, and benefit to researchers vs. community -- and share recommendations for how other PAR researchers can incorporate users into their work.

Evaluating Customization of Remote Tele-operation Interfaces for Assistive Robots

Apr 05, 2023

Abstract: Mobile manipulator platforms, like the Stretch RE1 robot, make the promise of in-home robotic assistance feasible. For people with severe physical limitations, like those with quadriplegia, the ability to tele-operate these robots themselves means that they can perform physical tasks they cannot otherwise do themselves, thereby increasing their level of independence. In order for users with physical limitations to operate these robots, their interfaces must be accessible and cater to the specific needs of all users. As physical limitations vary amongst users, it is difficult to make a single interface that will accommodate all users. Instead, such interfaces should be customizable to each individual user. In this paper, we explore the value of customization of a browser-based interface for tele-operating the Stretch RE1 robot. More specifically, we evaluate the usability and effectiveness of a customized interface in comparison to the default interface configurations from prior work. We present a user study involving participants with motor impairments (N=10) and participants without motor impairments who could serve as caregivers (N=13), all of whom use the robot to perform mobile manipulation tasks in a real kitchen environment. Our study demonstrates that no single interface configuration satisfies all users' needs and preferences. Users perform better when using the customized interface for navigation, but not for manipulation, due to the higher complexity of learning to manipulate through the robot. All participants are able to use the robot to complete all tasks, and participants with motor impairments believe that having the robot in their home would make them more independent.

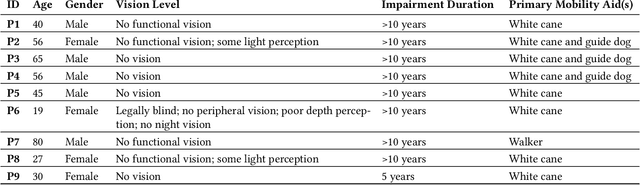

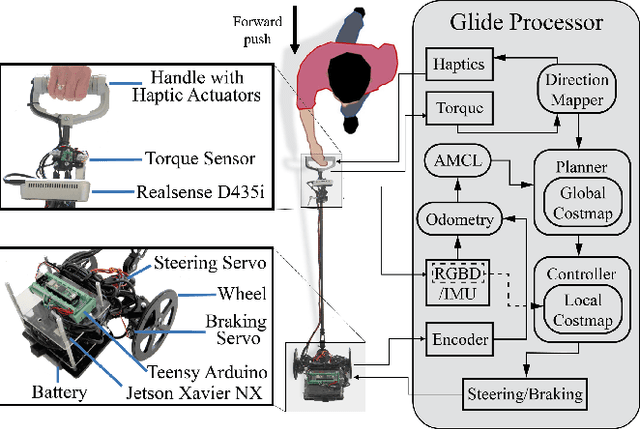

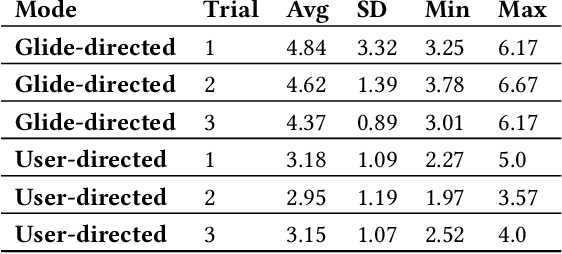

Exploring Levels of Control for a Navigation Assistant for Blind Travelers

Jan 05, 2023

Abstract: Only a small percentage of blind and low-vision people use traditional mobility aids such as a cane or a guide dog. Various assistive technologies have been proposed to address the limitations of traditional mobility aids. These devices often give either the user or the device the majority of the control. In this work, we explore how varying levels of control affect the users' sense of agency, trust in the device, confidence, and successful navigation. We present Glide, a novel mobility aid with two modes for control: Glide-directed and User-directed. We employ Glide in a study (N=9) in which blind or low-vision participants used both modes to navigate through an indoor environment. Overall, participants found that Glide was easy to use and learn. Most participants trusted Glide despite its current limitations, and their confidence and performance increased as they continued to use Glide. Users' control mode preference varied in different situations; no single mode "won" in all situations.

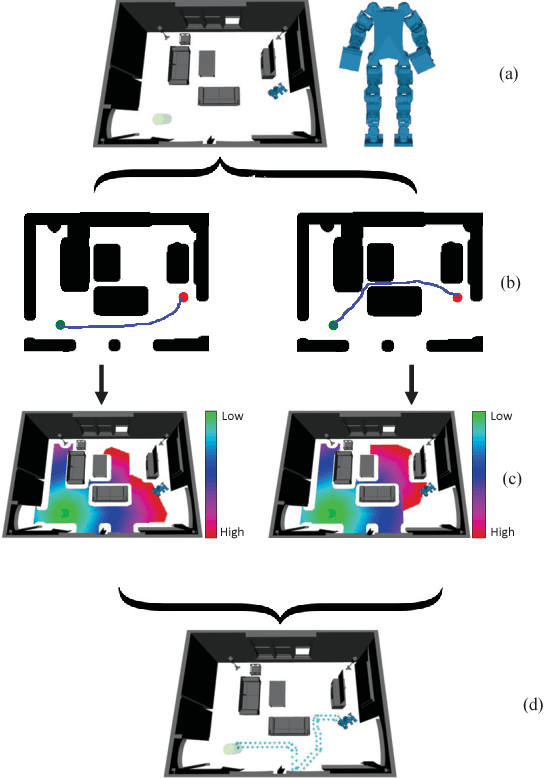

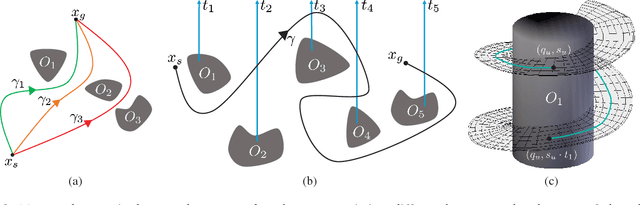

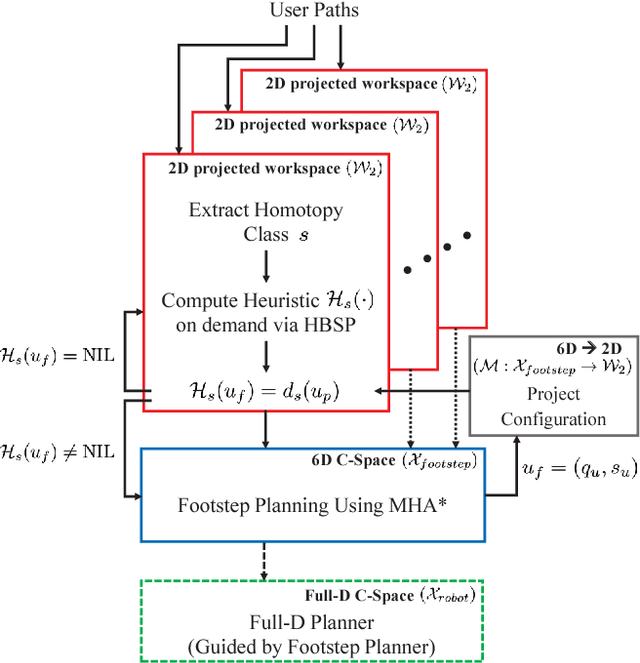

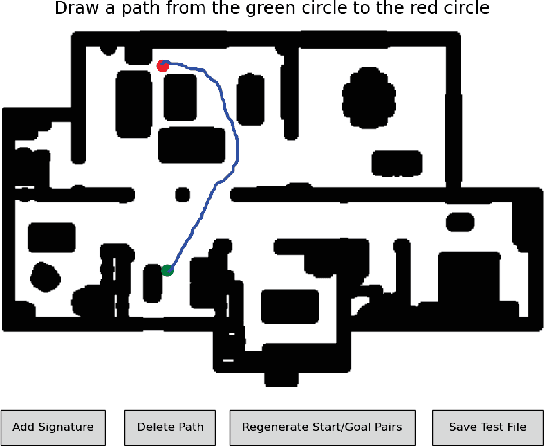

Effective Footstep Planning Using Homotopy-Class Guidance

Apr 10, 2019

Abstract: Planning the motion for humanoid robots is a computationally complex task due to the high dimensionality of the system. Thus, a common approach is to first plan in the low-dimensional space induced by the robot's feet, a task referred to as footstep planning. This low-dimensional plan is then used to guide the full motion of the robot. One approach that has proven successful in footstep planning is using search-based planners such as A* and its many variants. To do so, these search-based planners have to be endowed with effective heuristics to efficiently guide them through the search space. However, designing effective heuristics is a time-consuming task that requires the user to have good domain knowledge. Thus, our goal is to be able to effectively plan the footstep motions taken by a humanoid robot while obviating the burden on the user to carefully design local-minima-free heuristics. To this end, we propose to use user-defined homotopy classes in the workspace that are intuitive to define. These homotopy classes are used to automatically generate heuristic functions that efficiently guide the footstep planner. Additionally, we present an extension to homotopy classes such that they are applicable to complex multi-level environments. We compare our approach for footstep planning with a standard approach that uses a heuristic common to footstep planning. In simple scenarios, the performance of both algorithms is comparable. However, in more complex scenarios our approach allows for a speedup in planning of several orders of magnitude when compared to the standard approach.
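
To illustrate how a heuristic steers a search-based planner such as A*, here is a minimal sketch on a 2D occupancy grid. It is not the paper's footstep planner and does not implement homotopy-class heuristics; the grid encoding, the function names `astar` and `manhattan`, and the unit step cost are all assumptions made for illustration. The point is only that the heuristic is a pluggable function, which is the slot an automatically generated heuristic would fill.

```python
import heapq
from typing import Callable, Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, col); hypothetical state type for this sketch


def astar(
    grid: List[List[int]],
    start: Cell,
    goal: Cell,
    heuristic: Callable[[Cell, Cell], float],
) -> Optional[List[Cell]]:
    """A* on a 4-connected grid where 1 marks an obstacle and 0 is free."""
    open_heap = [(heuristic(start, goal), 0, start)]  # entries are (f, g, cell)
    g_cost: Dict[Cell, int] = {start: 0}
    parent: Dict[Cell, Optional[Cell]] = {start: None}

    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk parent pointers back to the start to recover the path.
            path = []
            node: Optional[Cell] = cell
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry; a cheaper route was already found

        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < len(grid) and 0 <= nc < len(grid[0])):
                continue  # outside the grid
            if grid[nr][nc] == 1:
                continue  # blocked cell
            new_g = g + 1  # assumed unit cost per step
            if new_g < g_cost.get(nxt, float("inf")):
                g_cost[nxt] = new_g
                parent[nxt] = cell
                heapq.heappush(open_heap, (new_g + heuristic(nxt, goal), new_g, nxt))
    return None  # goal unreachable


def manhattan(a: Cell, b: Cell) -> float:
    """A simple admissible heuristic for unit-cost 4-connected grids."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


if __name__ == "__main__":
    grid = [
        [0, 0, 0],
        [1, 1, 0],
        [0, 0, 0],
    ]
    print(astar(grid, (0, 0), (2, 0), manhattan))
```

In this toy grid the wall forces a detour, and the Manhattan heuristic initially pulls the search toward the blocked cells. That is a small-scale instance of the local-minimum problem that hand-designed heuristics can cause in search-based planning.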
