Valentin Bazarevsky

BlazePose GHUM Holistic: Real-time 3D Human Landmarks and Pose Estimation

Jun 23, 2022

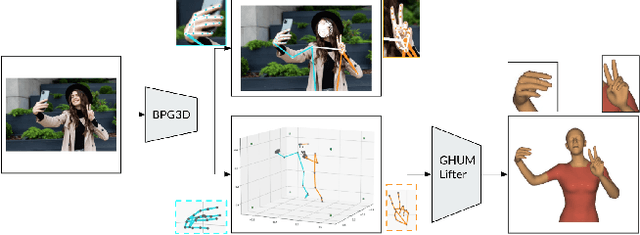

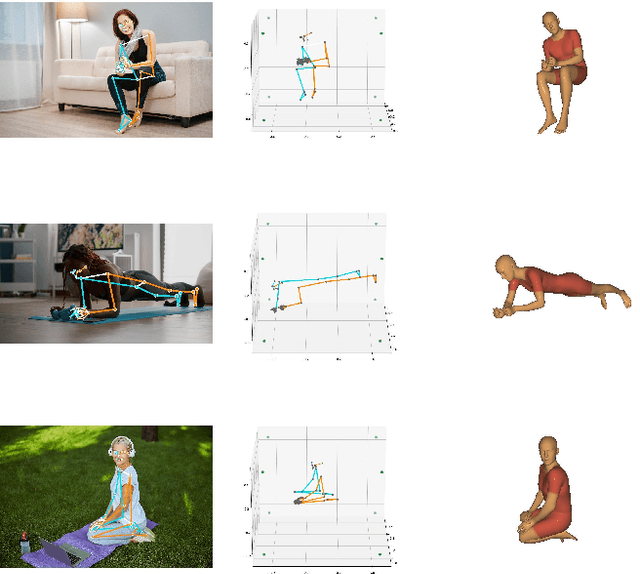

Abstract:We present BlazePose GHUM Holistic, a lightweight neural network pipeline for 3D human body landmarks and pose estimation, specifically tailored to real-time on-device inference. BlazePose GHUM Holistic enables motion capture from a single RGB image including avatar control, fitness tracking and AR/VR effects. Our main contributions include i) a novel method for 3D ground truth data acquisition, ii) updated 3D body tracking with additional hand landmarks and iii) full body pose estimation from a monocular image.

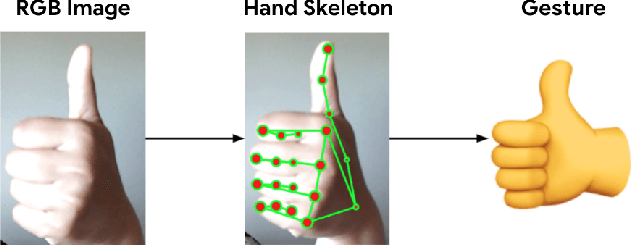

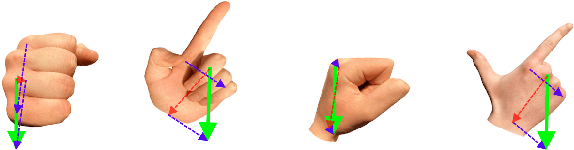

On-device Real-time Hand Gesture Recognition

Oct 29, 2021

Abstract:We present an on-device real-time hand gesture recognition (HGR) system, which detects a set of predefined static gestures from a single RGB camera. The system consists of two parts: a hand skeleton tracker and a gesture classifier. We use MediaPipe Hands as the basis of the hand skeleton tracker, improve the keypoint accuracy, and add the estimation of 3D keypoints in a world metric space. We create two different gesture classifiers, one based on heuristics and the other using neural networks (NN).

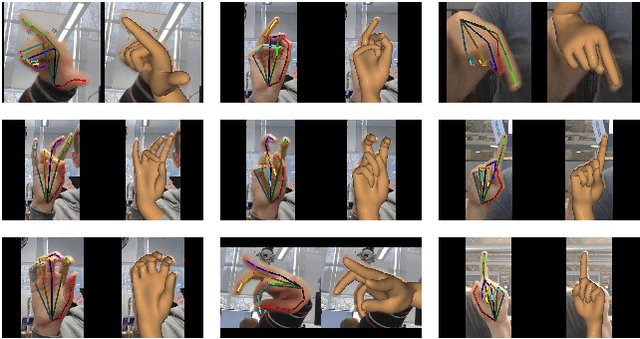

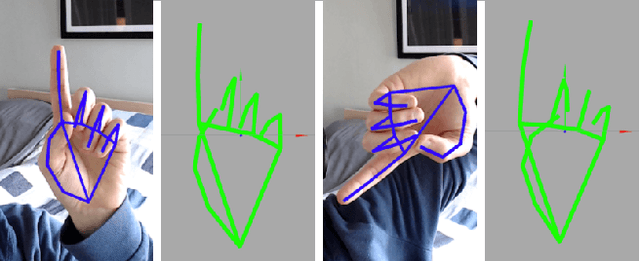

MediaPipe Hands: On-device Real-time Hand Tracking

Jun 18, 2020

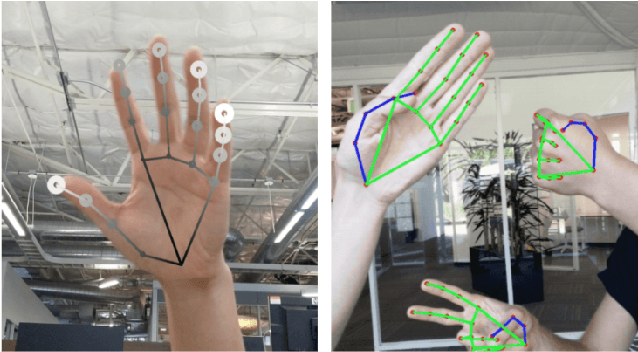

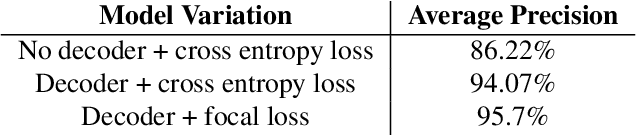

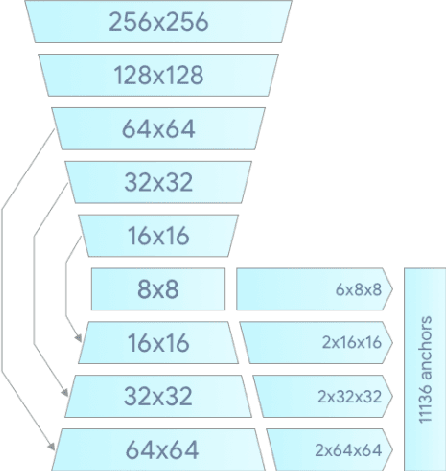

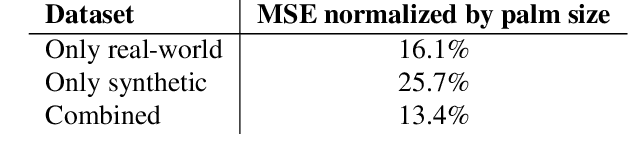

Abstract:We present a real-time on-device hand tracking pipeline that predicts hand skeleton from single RGB camera for AR/VR applications. The pipeline consists of two models: 1) a palm detector, 2) a hand landmark model. It's implemented via MediaPipe, a framework for building cross-platform ML solutions. The proposed model and pipeline architecture demonstrates real-time inference speed on mobile GPUs and high prediction quality. MediaPipe Hands is open sourced at https://mediapipe.dev.

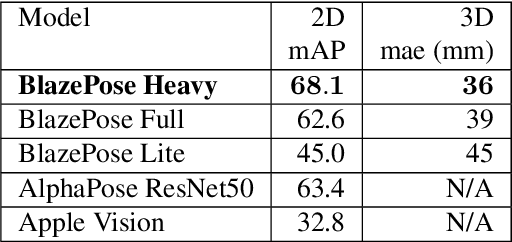

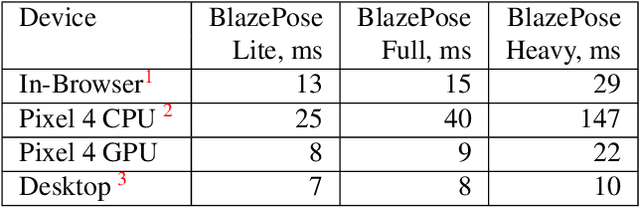

BlazePose: On-device Real-time Body Pose tracking

Jun 17, 2020

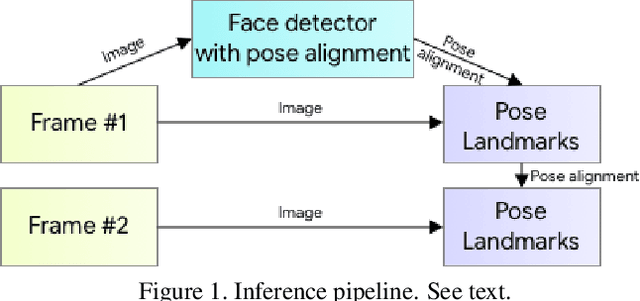

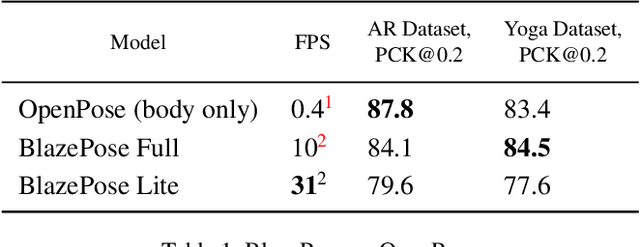

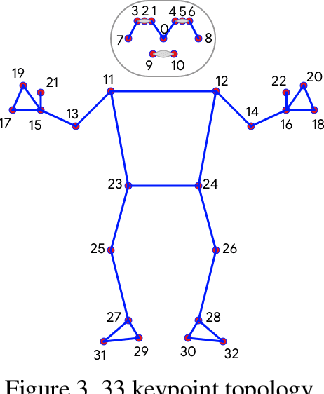

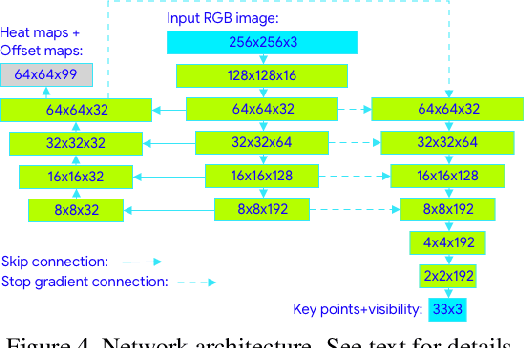

Abstract:We present BlazePose, a lightweight convolutional neural network architecture for human pose estimation that is tailored for real-time inference on mobile devices. During inference, the network produces 33 body keypoints for a single person and runs at over 30 frames per second on a Pixel 2 phone. This makes it particularly suited to real-time use cases like fitness tracking and sign language recognition. Our main contributions include a novel body pose tracking solution and a lightweight body pose estimation neural network that uses both heatmaps and regression to keypoint coordinates.

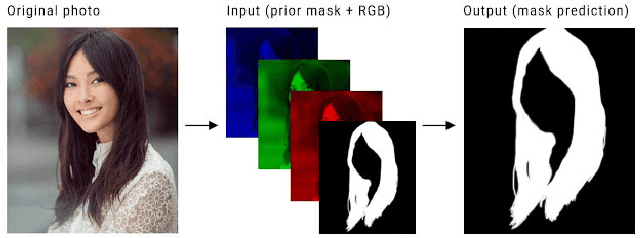

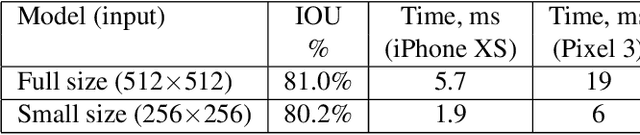

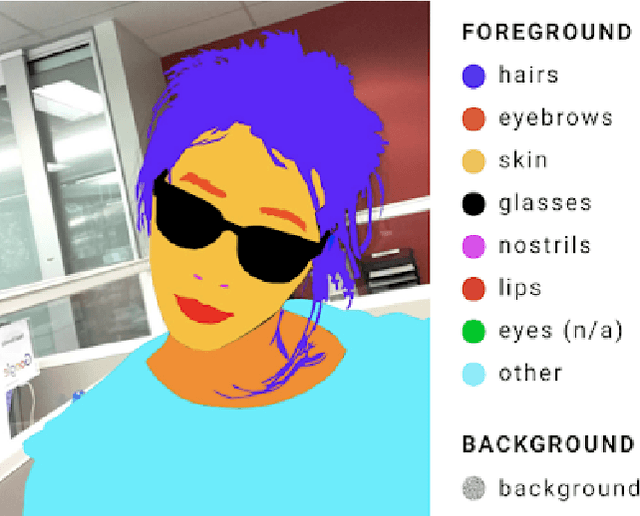

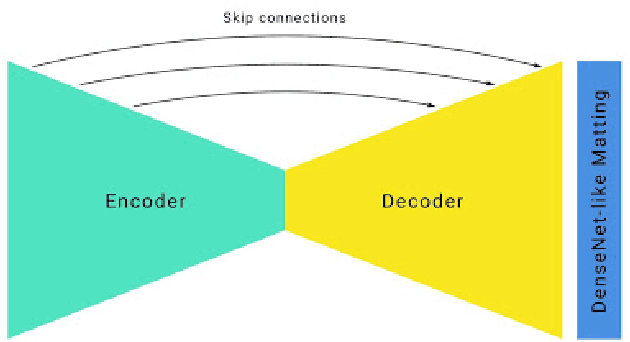

Real-time Hair Segmentation and Recoloring on Mobile GPUs

Jul 15, 2019

Abstract:We present a novel approach for neural network-based hair segmentation from a single camera input specifically designed for real-time, mobile application. Our relatively small neural network produces a high-quality hair segmentation mask that is well suited for AR effects, e.g. virtual hair recoloring. The proposed model achieves real-time inference speed on mobile GPUs (30-100+ FPS, depending on the device) with high accuracy. We also propose a very realistic hair recoloring scheme. Our method has been deployed in major AR application and is used by millions of users.

BlazeFace: Sub-millisecond Neural Face Detection on Mobile GPUs

Jul 14, 2019

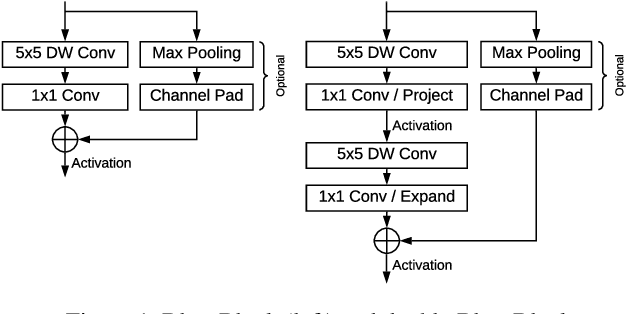

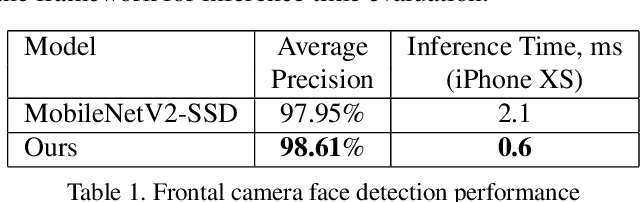

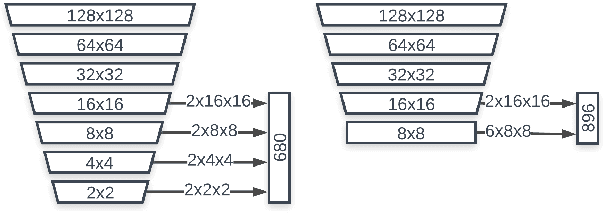

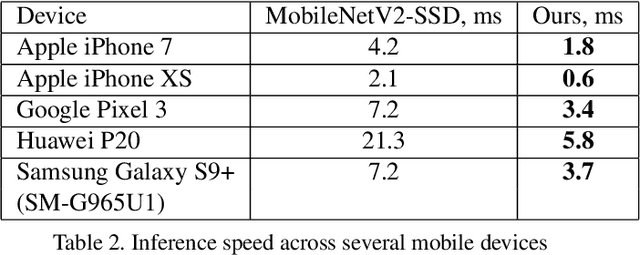

Abstract:We present BlazeFace, a lightweight and well-performing face detector tailored for mobile GPU inference. It runs at a speed of 200-1000+ FPS on flagship devices. This super-realtime performance enables it to be applied to any augmented reality pipeline that requires an accurate facial region of interest as an input for task-specific models, such as 2D/3D facial keypoint or geometry estimation, facial features or expression classification, and face region segmentation. Our contributions include a lightweight feature extraction network inspired by, but distinct from MobileNetV1/V2, a GPU-friendly anchor scheme modified from Single Shot MultiBox Detector (SSD), and an improved tie resolution strategy alternative to non-maximum suppression.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge