Siargey Pisarchyk

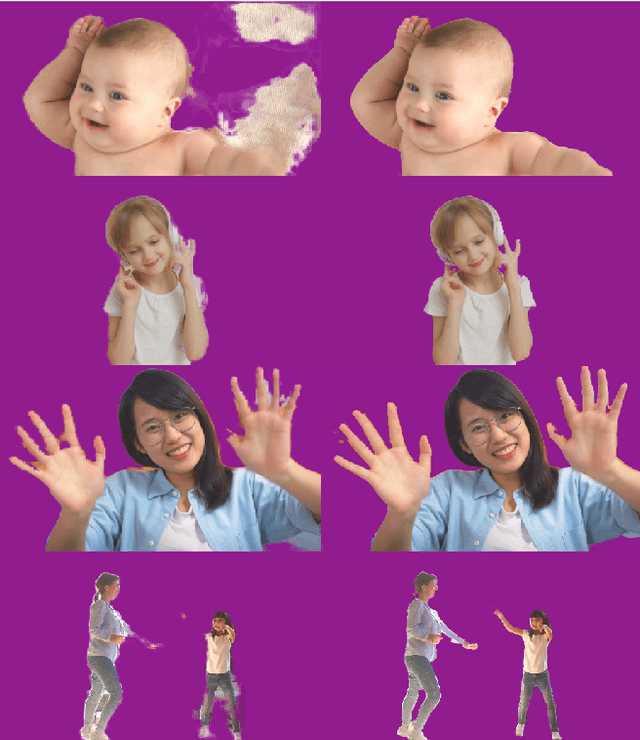

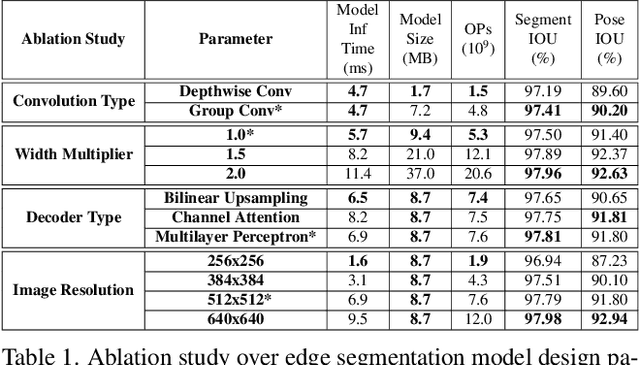

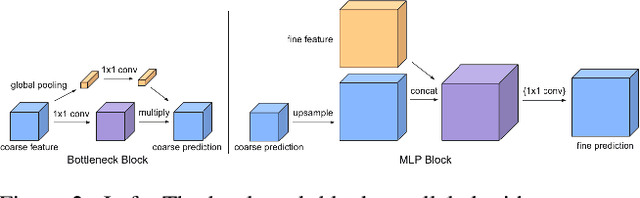

Efficient Heterogeneous Video Segmentation at the Edge

Aug 24, 2022

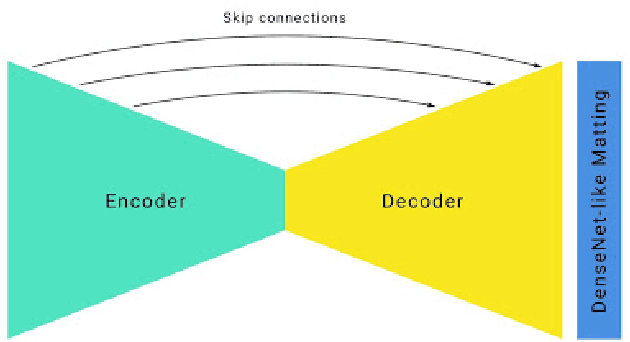

Abstract:We introduce an efficient video segmentation system for resource-limited edge devices leveraging heterogeneous compute. Specifically, we design network models by searching across multiple dimensions of specifications for the neural architectures and operations on top of already light-weight backbones, targeting commercially available edge inference engines. We further analyze and optimize the heterogeneous data flows in our systems across the CPU, the GPU and the NPU. Our approach has empirically factored well into our real-time AR system, enabling remarkably higher accuracy with quadrupled effective resolutions, yet at much shorter end-to-end latency, much higher frame rate, and even lower power consumption on edge platforms.

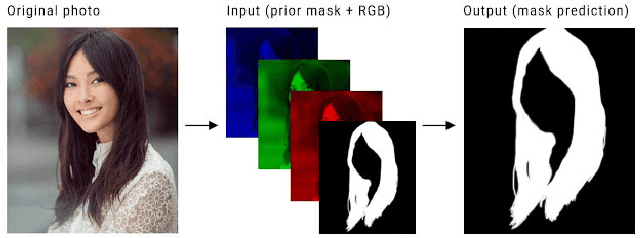

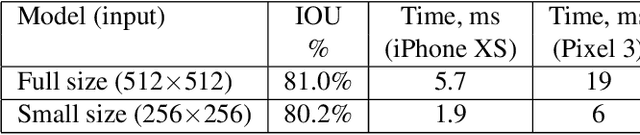

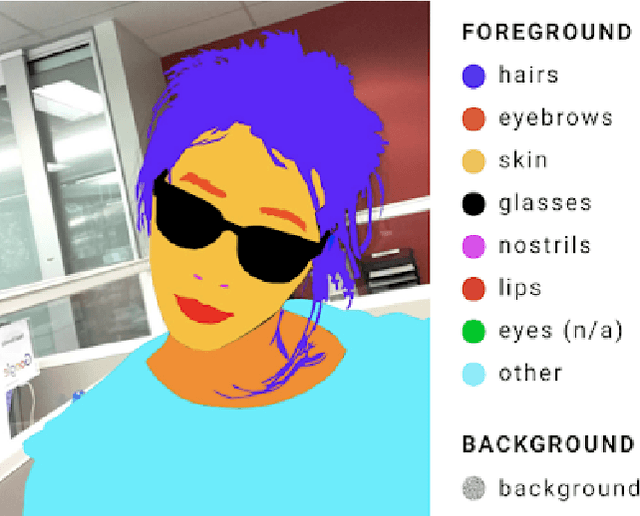

Real-time Hair Segmentation and Recoloring on Mobile GPUs

Jul 15, 2019

Abstract:We present a novel approach for neural network-based hair segmentation from a single camera input specifically designed for real-time, mobile application. Our relatively small neural network produces a high-quality hair segmentation mask that is well suited for AR effects, e.g. virtual hair recoloring. The proposed model achieves real-time inference speed on mobile GPUs (30-100+ FPS, depending on the device) with high accuracy. We also propose a very realistic hair recoloring scheme. Our method has been deployed in major AR application and is used by millions of users.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge