Tung D. Ta

CADKnitter: Compositional CAD Generation from Text and Geometry Guidance

Dec 12, 2025

Abstract:Crafting computer-aided design (CAD) models has long been a painstaking and time-intensive task, demanding both precision and expertise from designers. With the emergence of 3D generation, this task has been transformed: the focus has shifted from visual fidelity to functional utility, and editable CAD designs have become possible. Prior works have achieved early success in single-part CAD generation, which is not well-suited for real-world applications, as multiple parts need to be assembled under semantic and geometric constraints. In this paper, we propose CADKnitter, a compositional CAD generation framework with a geometry-guided diffusion sampling strategy. CADKnitter is able to generate a complementary CAD part that follows both the geometric constraints of the given CAD model and the semantic constraints of the desired design text prompt. We also curate a dataset, called KnitCAD, containing over 310,000 samples of CAD models, along with textual prompts and assembly metadata that provide semantic and geometric constraints. Extensive experiments demonstrate that our proposed method outperforms other state-of-the-art baselines by a clear margin.

MobiDock: Design and Control of A Modular Self Reconfigurable Bimanual Mobile Manipulator via Robotic Docking

Oct 31, 2025

Abstract:Multi-robot systems, particularly mobile manipulators, face challenges in control coordination and dynamic stability when working together. To address this issue, this study proposes MobiDock, a modular self-reconfigurable mobile manipulator system that allows two independent robots to physically connect and form a unified mobile bimanual platform. This process helps transform a complex multi-robot control problem into the management of a simpler, single system. The system utilizes an autonomous docking strategy based on computer vision with AprilTag markers and a new threaded screw-lock mechanism. Experimental results show that the docked configuration demonstrates better performance in dynamic stability and operational efficiency compared to two independently cooperating robots. Specifically, the unified system has lower Root Mean Square (RMS) Acceleration and Jerk values, higher angular precision, and completes tasks significantly faster. These findings confirm that physical reconfiguration is a powerful design principle that simplifies cooperative control, improving stability and performance for complex tasks in real-world environments.
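The RMS acceleration and jerk metrics mentioned in this abstract can be illustrated with a minimal sketch. The abstract does not describe how the signals were sampled or filtered, so the uniform sampling interval `dt`, the forward-difference jerk estimate, and all function names below are assumptions made for illustration only.

```python
import math


def rms(values):
    """Root mean square of a sequence of numbers."""
    return math.sqrt(sum(v * v for v in values) / len(values))


def finite_difference(samples, dt):
    """Forward-difference derivative of uniformly sampled data."""
    return [(b - a) / dt for a, b in zip(samples, samples[1:])]


def rms_acceleration_and_jerk(acceleration, dt):
    """Return (RMS acceleration, RMS jerk) for one acceleration axis.

    Jerk is approximated as the time derivative of acceleration.
    This is an assumed, simplified pipeline, not the authors' method.
    """
    jerk = finite_difference(acceleration, dt)
    return rms(acceleration), rms(jerk)


# Hypothetical acceleration samples (m/s^2) recorded every 0.5 s.
acc_rms, jerk_rms = rms_acceleration_and_jerk([0.0, 1.0, 0.0, -1.0], dt=0.5)
```

Lower values of both quantities indicate smoother motion, which is the sense in which the abstract compares the docked and undocked configurations.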

Hybrid Gripper Finger Enabling In-Grasp Friction Modulation Using Inflatable Silicone Pockets

Oct 31, 2025

Abstract:Grasping objects with diverse mechanical properties, such as heavy, slippery, or fragile items, remains a significant challenge in robotics. Conventional grippers often rely on applying high normal forces, which can cause damage to objects. To address this limitation, we present a hybrid gripper finger that combines a rigid structural shell with a soft, inflatable silicone pocket. The gripper finger can actively modulate its surface friction by controlling the internal air pressure of the silicone pocket. Results from fundamental experiments indicate that increasing the internal pressure leads to a proportional increase in the effective coefficient of friction. This enables the gripper to stably lift heavy and slippery objects without increasing the gripping force and to handle fragile or deformable objects, such as eggs, fruits, and paper cups, with minimal damage by increasing friction rather than applying excessive force. The experimental results demonstrate that the hybrid gripper finger with adaptable friction provides a robust and safer alternative to relying solely on high normal forces, thereby enhancing the gripper's flexibility in handling delicate, fragile, and diverse objects.
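The trade-off between friction and normal force described in this abstract can be sketched with the standard Coulomb friction model: a two-finger grip holds an object of mass m when the total friction force is at least the object's weight. The linear pressure-to-friction model and every numeric value below are hypothetical placeholders, not measurements from the paper.

```python
G = 9.80665  # standard gravity, m/s^2


def required_friction_coefficient(mass_kg, normal_force_n, contacts=2):
    """Minimum Coulomb friction coefficient needed to hold the object.

    Each contact supplies at most mu * N of friction, so the grip holds
    when contacts * mu * N >= m * g.
    """
    return mass_kg * G / (contacts * normal_force_n)


def effective_friction(pressure_kpa, mu_at_zero, slope_per_kpa):
    """Hypothetical linear model: friction rises with pocket pressure."""
    return mu_at_zero + slope_per_kpa * pressure_kpa


def can_hold(mass_kg, normal_force_n, pressure_kpa, mu_at_zero, slope_per_kpa):
    """True if the assumed friction at this pressure is enough to hold."""
    mu = effective_friction(pressure_kpa, mu_at_zero, slope_per_kpa)
    return mu >= required_friction_coefficient(mass_kg, normal_force_n)


# Same normal force, different pocket pressure (all values are made up).
holds_deflated = can_hold(1.0, G, 0.0, mu_at_zero=0.3, slope_per_kpa=0.01)
holds_inflated = can_hold(1.0, G, 30.0, mu_at_zero=0.3, slope_per_kpa=0.01)
```

Under these assumed numbers the grip fails when the pocket is deflated and holds when it is inflated, with no change in normal force.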

RoboDesign1M: A Large-scale Dataset for Robot Design Understanding

Mar 09, 2025

Abstract:Robot design is a complex and time-consuming process that requires specialized expertise. Gaining a deeper understanding of robot design data can enable various applications, including automated design generation, retrieving example designs from text, and developing AI-powered design assistants. While recent advancements in foundation models present promising approaches to addressing these challenges, progress in this field is hindered by the lack of large-scale design datasets. In this paper, we introduce RoboDesign1M, a large-scale dataset comprising 1 million samples. Our dataset features multimodal data collected from scientific literature, covering various robotics domains. We propose a semi-automated data collection pipeline, enabling efficient and diverse data acquisition. To assess the effectiveness of RoboDesign1M, we conduct extensive experiments across multiple tasks, including design image generation, visual question answering about designs, and design image retrieval. The results demonstrate that our dataset serves as a challenging new benchmark for design understanding tasks and has the potential to advance research in this field. RoboDesign1M will be released to support further developments in AI-driven robotic design automation.

SplineFormer: An Explainable Transformer-Based Approach for Autonomous Endovascular Navigation

Jan 08, 2025

Abstract:Endovascular navigation is a crucial aspect of minimally invasive procedures, where precise control of curvilinear instruments like guidewires is critical for successful interventions. A key challenge in this task is accurately predicting the evolving shape of the guidewire as it navigates through the vasculature, which presents complex deformations due to interactions with the vessel walls. Traditional segmentation methods often fail to provide accurate real-time shape predictions, limiting their effectiveness in highly dynamic environments. To address this, we propose SplineFormer, a new transformer-based architecture, designed specifically to predict the continuous, smooth shape of the guidewire in an explainable way. By leveraging the transformer's ability, our network effectively captures the intricate bending and twisting of the guidewire, representing it as a spline for greater accuracy and smoothness. We integrate our SplineFormer into an end-to-end robot navigation system by leveraging the condensed information. The experimental results demonstrate that our SplineFormer is able to perform endovascular navigation autonomously and achieves a 50% success rate when cannulating the brachiocephalic artery on the real robot.

Hybrid Gripper with Passive Pneumatic Soft Joints for Grasping Deformable Thin Objects

Oct 10, 2024

Abstract:Grasping a variety of objects remains a key challenge in the development of versatile robotic systems. The human hand is remarkably dexterous, capable of grasping and manipulating objects with diverse shapes, mechanical properties, and textures. Inspired by how humans use two fingers to pick up thin and large objects such as fabric or sheets of paper, we aim to develop a gripper optimized for grasping such deformable objects. Observing how the soft and flexible fingertip joints of the hand approach and grasp thin materials, we propose a hybrid gripper design that incorporates both soft and rigid components. The gripper utilizes a soft pneumatic ring wrapped around a rigid revolute joint to create a flexible two-fingered gripper. Experiments were conducted to characterize and evaluate the gripper performance in handling sheets of paper and other objects. Compared to rigid grippers, the proposed design improves grasping efficiency and reduces the gripping distance by up to eightfold.

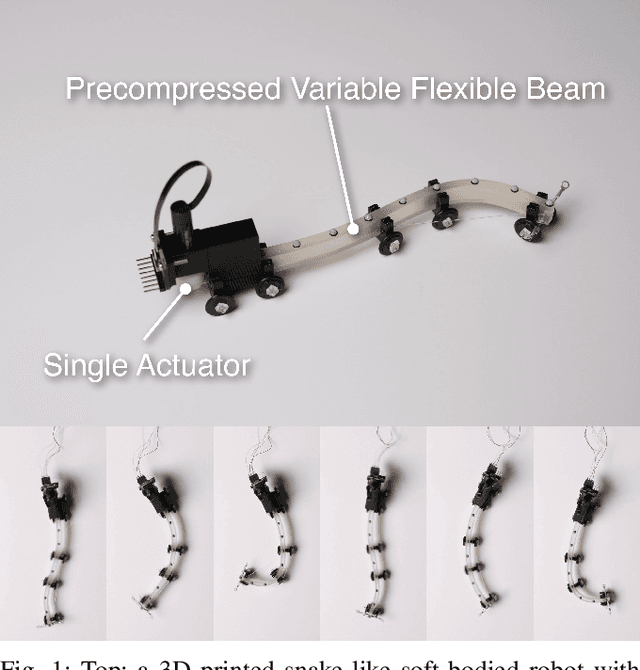

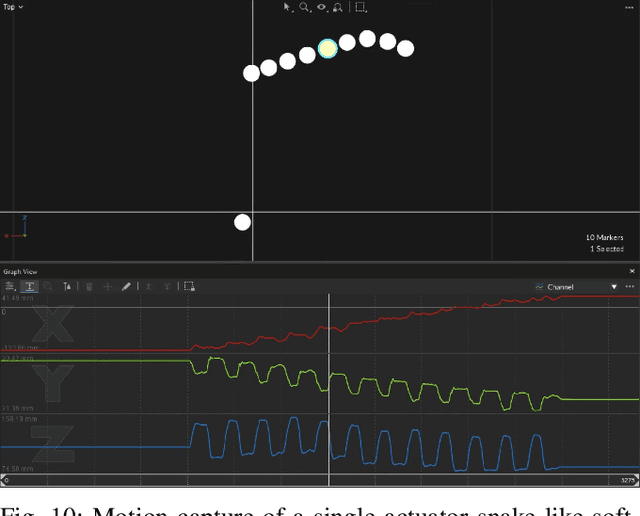

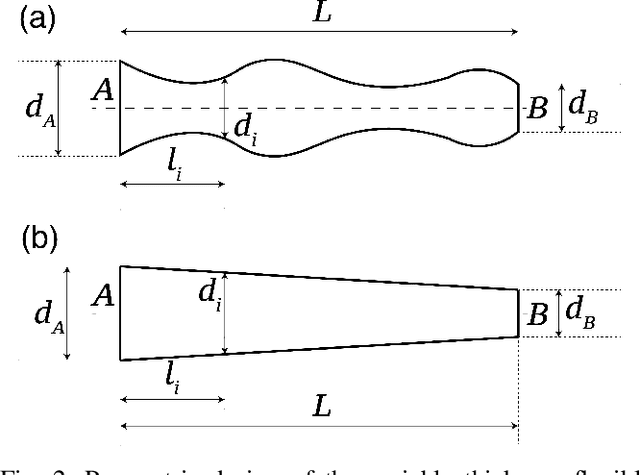

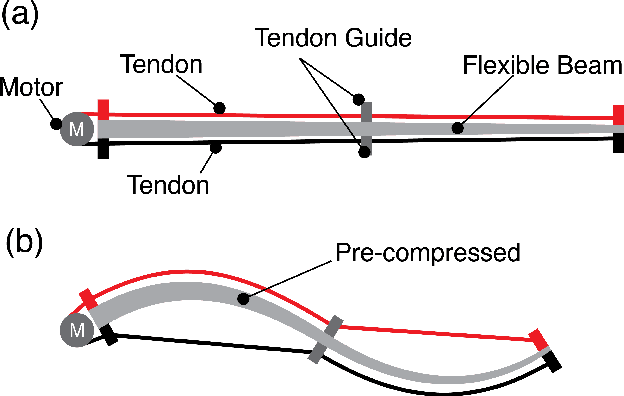

Single Actuator Undulation Soft-bodied Robots Using A Precompressed Variable Thickness Flexible Beam

Oct 08, 2024

Abstract:Soft robots, owing to the intrinsic flexibility of their bodies, can adaptively navigate unstructured environments. One of the most popular locomotion gaits that has been implemented in soft robots is undulation. The undulation motion in soft robots resembles the locomotion gait of stringy creatures such as snakes, eels, and C. elegans. Typically, the implementation of undulation locomotion on a soft robot requires many actuators to control each segment of the stringy body. The added weight of multiple actuators limits the navigating performance of soft-bodied robots. In this paper, we propose a simple tendon-driven flexible beam with only one actuator (a DC motor) that can generate a mechanical traveling wave along the beam to support the undulation locomotion of soft robots. The beam is precompressed along its axis by shortening the two tendons so that it forms an S-shape, which also pretensions the tendons. The motor winds and unwinds the tendons to deform the flexible beam and generate traveling waves along the body of the robot. We experiment with different pre-tensions to characterize the relationship between tendon pre-tension forces and the DC-motor winding/unwinding. Our proposal enables a simple implementation of undulation motion to support the locomotion of soft-bodied robots.

Robotic-CLIP: Fine-tuning CLIP on Action Data for Robotic Applications

Sep 26, 2024

Abstract:Vision language models have played a key role in extracting meaningful features for various robotic applications. Among these, Contrastive Language-Image Pretraining (CLIP) is widely used in robotic tasks that require both vision and natural language understanding. However, CLIP was trained solely on static images paired with text prompts and has not yet been fully adapted for robotic tasks involving dynamic actions. In this paper, we introduce Robotic-CLIP to enhance robotic perception capabilities. We first gather and label large-scale action data, and then build our Robotic-CLIP by fine-tuning CLIP on 309,433 videos (~7.4 million frames) of action data using contrastive learning. By leveraging action data, Robotic-CLIP inherits CLIP's strong image performance while gaining the ability to understand actions in robotic contexts. Intensive experiments show that our Robotic-CLIP outperforms other CLIP-based models across various language-driven robotic tasks. Additionally, we demonstrate the practical effectiveness of Robotic-CLIP in real-world grasping applications.
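For readers unfamiliar with the contrastive learning mentioned in this abstract, the following is a minimal sketch of a generic CLIP-style symmetric contrastive loss over a batch of paired frame and text embeddings. The abstract does not specify Robotic-CLIP's exact objective, batch construction, or hyperparameters, so the function names, the temperature value of 0.07, and the toy embeddings are assumptions for illustration only.

```python
import math


def normalize(vector):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def log_softmax(row):
    """Numerically stable log-softmax of a list of logits."""
    m = max(row)
    log_sum = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - log_sum for x in row]


def clip_style_loss(frame_embs, text_embs, temperature=0.07):
    """Symmetric cross-entropy over cosine-similarity logits.

    Row i of frame_embs is assumed to be paired with row i of text_embs;
    all other rows in the batch act as negatives.
    """
    frames = [normalize(v) for v in frame_embs]
    texts = [normalize(v) for v in text_embs]
    n = len(frames)
    logits = [
        [sum(a * b for a, b in zip(f, t)) / temperature for t in texts]
        for f in frames
    ]
    # Frame-to-text direction: each row should pick its own column.
    loss_f2t = -sum(log_softmax(logits[i])[i] for i in range(n)) / n
    # Text-to-frame direction: each column should pick its own row.
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    loss_t2f = -sum(log_softmax(columns[j])[j] for j in range(n)) / n
    return 0.5 * (loss_f2t + loss_t2f)


# Toy 2-D embeddings: correctly paired versus swapped captions.
matched = clip_style_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = clip_style_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
```

The loss is low when each frame embedding is most similar to its own caption and high when the pairing is wrong, which is the signal that fine-tuning on paired action data would optimize under this assumed objective.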
