Toon Calders

Interpretable and Fair Mechanisms for Abstaining Classifiers

Mar 24, 2025

Abstract:Abstaining classifiers have the option to refrain from providing a prediction for instances that are difficult to classify. The abstention mechanism is designed to maximize the classifier's performance on the accepted data while still guaranteeing a minimum number of predictions. In this setting, fairness concerns often arise when the abstention mechanism solely reduces errors for the majority groups of the data, resulting in increased performance differences across demographic groups. While several methods aim to reduce discrimination when abstaining, there is no mechanism that can do so in an explainable way. In this paper, we fill this gap by introducing the Interpretable and Fair Abstaining Classifier (IFAC), an algorithm that can reject predictions based on both their uncertainty and their unfairness. By rejecting possibly unfair predictions, our method reduces error and positive decision rate differences across demographic groups of the non-rejected data. Since the unfairness-based rejections are based on an interpretable-by-design method, i.e., rule-based fairness checks and situation testing, we create a transparent process that can empower human decision-makers to review the unfair predictions and make more just decisions for them. This explainable aspect is especially important in light of recent AI regulations, mandating that any high-risk decision task should be overseen by human experts to reduce discrimination risks.
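As a rough illustration of the uncertainty-based half of abstention only, the sketch below keeps the most confident predictions until a minimum coverage is reached and rejects the rest. It is not the IFAC algorithm: the function name `abstain_by_confidence`, the 0.5 decision threshold, and the use of `max(p, 1 - p)` as a confidence score are assumptions made for this example, and the rule-based fairness checks and situation testing described in the abstract are not modeled.

```python
import math


def abstain_by_confidence(probs, min_coverage):
    """Return one decision per instance: 0/1 if accepted, None if rejected.

    probs: predicted positive-class probabilities (hypothetical model output).
    min_coverage: fraction of instances that must receive a prediction.
    """
    n = len(probs)
    n_keep = math.ceil(min_coverage * n)  # minimum number of predictions to make
    # Assumed confidence score: distance of the probability from total uncertainty.
    confidence = [max(p, 1 - p) for p in probs]
    # Keep the n_keep most confident instances, reject the others.
    order = sorted(range(n), key=lambda i: confidence[i], reverse=True)
    keep = set(order[:n_keep])
    return [int(probs[i] >= 0.5) if i in keep else None for i in range(n)]


decisions = abstain_by_confidence([0.95, 0.55, 0.10, 0.48], min_coverage=0.5)
```

With a coverage of 0.5, the two borderline instances (0.55 and 0.48) are rejected. A fairness-aware mechanism would additionally have to inspect how rejections and errors are distributed across demographic groups.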

"Patriarchy Hurts Men Too." Does Your Model Agree? A Discussion on Fairness Assumptions

Aug 01, 2024

Abstract:The pipeline of a fair ML practitioner is generally divided into three phases: 1) Selecting a fairness measure. 2) Choosing a model that minimizes this measure. 3) Maximizing the model's performance on the data. In the context of group fairness, this approach often obscures implicit assumptions about how bias is introduced into the data. For instance, in binary classification, it is often assumed that the best model, with equal fairness, is the one with better performance. However, this belief already imposes specific properties on the process that introduced bias. More precisely, we are already assuming that the biasing process is a monotonic function of the fair scores, dependent solely on the sensitive attribute. We formally prove this claim regarding several implicit fairness assumptions. This leads, in our view, to two possible conclusions: either the behavior of the biasing process is more complex than mere monotonicity, which means we need to identify and reject our implicit assumptions in order to develop models capable of tackling more complex situations; or the bias introduced in the data behaves predictably, implying that many of the developed models are superfluous.

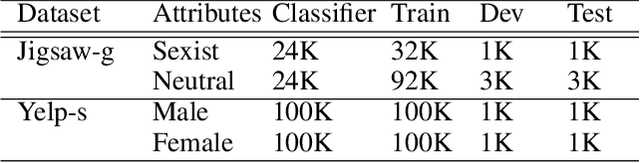

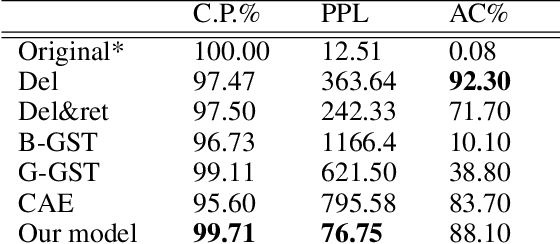

FairFlow: An Automated Approach to Model-based Counterfactual Data Augmentation For NLP

Jul 23, 2024

Abstract:Despite the evolution of language models, they continue to portray harmful societal biases and stereotypes inadvertently learned from training data. These inherent biases often result in detrimental effects in various applications. Counterfactual Data Augmentation (CDA), which seeks to balance demographic attributes in training data, has been a widely adopted approach to mitigate bias in natural language processing. However, many existing CDA approaches rely on word substitution techniques using manually compiled word-pair dictionaries. These techniques often lead to out-of-context substitutions, resulting in potential quality issues. The advancement of model-based techniques, on the other hand, has been challenged by the need for parallel training data. Works in this area resort to manually generated parallel data that are expensive to collect and are consequently limited in scale. This paper proposes FairFlow, an automated approach to generating parallel data for training counterfactual text generator models that limits the need for human intervention. Furthermore, we show that FairFlow significantly overcomes the limitations of dictionary-based word-substitution approaches whilst maintaining good performance.

Cherry on the Cake: Fairness is NOT an Optimization Problem

Jun 24, 2024

Abstract:Fair cake-cutting is a mathematical subfield that studies the problem of fairly dividing a resource among a number of participants. The so-called "cake," as an object, represents any resource that can be distributed among players. This concept is connected to supervised multi-label classification: any dataset can be thought of as a cake that needs to be distributed, where each label is a player that receives its share of the dataset. In particular, any efficient cake-cutting solution for the dataset is equivalent to an optimal decision function. Although we are not the first to demonstrate this connection, the important ramifications of this parallel seem to have been partially forgotten. We revisit these classical results and demonstrate how this connection can be prolifically used for fairness in machine learning problems. Understanding the set of achievable fair decisions is a fundamental step in finding optimal fair solutions and satisfying fairness requirements. By employing the tools of cake-cutting theory, we have been able to describe the behavior of optimal fair decisions, which, counterintuitively, often exhibit quite unfair properties. Specifically, in order to satisfy fairness constraints, it is sometimes preferable, in the name of optimality, to purposefully make mistakes and deny giving the positive label to deserving individuals in a community in favor of less worthy individuals within the same community. This practice is known in the literature as cherry-picking and has been described as "blatantly unfair."

How to be fair? A study of label and selection bias

Mar 21, 2024

Abstract:It is widely accepted that biased data leads to biased and thus potentially unfair models. Therefore, several measures for bias in data and model predictions have been proposed, as well as bias mitigation techniques whose aim is to learn models that are fair by design. Despite the myriad of mitigation techniques developed in the past decade, however, it is still poorly understood under what circumstances which methods work. Recently, Wick et al. showed, with experiments on synthetic data, that there exist situations in which bias mitigation techniques lead to more accurate models when measured on unbiased data. Nevertheless, in the absence of a thorough mathematical analysis, it remains unclear which techniques are effective under what circumstances. We propose to address this problem by establishing relationships between the type of bias and the effectiveness of a mitigation technique, where we categorize the mitigation techniques by the bias measure they optimize. In this paper we illustrate this principle for label and selection bias on the one hand, and demographic parity and "We're All Equal" on the other hand. Our theoretical analysis allows us to explain the results of Wick et al., and we also show that there are situations where minimizing fairness measures does not result in the fairest possible distribution.
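For readers unfamiliar with demographic parity, one of the measures named above, a minimal sketch follows. It computes the positive decision rate per group and reports the largest gap; the function name and the choice of "max minus min" as the summary statistic are assumptions for this illustration, not the paper's formalization.

```python
def demographic_parity_difference(predictions, groups):
    """Return (gap, rates): the largest difference in positive decision rate
    between any two groups, and the per-group rates.

    predictions: binary decisions (0 or 1), one per individual.
    groups: group label of each individual, aligned with predictions.
    """
    rates = {}
    for g in set(groups):
        preds = [p for p, gg in zip(predictions, groups) if gg == g]
        rates[g] = sum(preds) / len(preds)  # share of positive decisions in group g
    gap = max(rates.values()) - min(rates.values())
    return gap, rates


gap, rates = demographic_parity_difference(
    [1, 1, 0, 1, 0, 0],
    ["a", "a", "a", "b", "b", "b"],
)
```

Here group "a" receives a positive decision in two of three cases and group "b" in one of three, so the gap is one third. A gap of zero satisfies demographic parity, which, as the abstract argues, does not by itself guarantee the fairest possible distribution.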

Beyond Accuracy-Fairness: Stop evaluating bias mitigation methods solely on between-group metrics

Jan 24, 2024

Abstract:Artificial Intelligence (AI) finds widespread applications across various domains, sparking concerns about fairness in its deployment. While fairness in AI remains a central concern, the prevailing discourse often emphasizes outcome-based metrics without a nuanced consideration of the differential impacts within subgroups. Bias mitigation techniques not only affect the ranking of pairs of instances across sensitive groups, but often also significantly affect the ranking of instances within these groups. Such changes are hard to explain and raise concerns regarding the validity of the intervention. Unfortunately, these effects largely remain under the radar in the accuracy-fairness evaluation framework that is usually applied. This paper challenges the prevailing metrics for assessing bias mitigation techniques, arguing that they do not take into account within-group changes and that the resulting prediction labels fall short of reflecting real-world scenarios. We propose a paradigm shift: first, we should focus on generating the most precise ranking for each subgroup. Following this, individuals should be chosen from these rankings to meet both fairness standards and practical considerations.
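The two-step proposal (rank within each subgroup, then select from the rankings) can be sketched as follows. This is only one possible reading: the function name `select_per_group` and the use of fixed per-group quotas as the "fairness standard" are assumptions for the example, not something the abstract specifies.

```python
from collections import defaultdict


def select_per_group(candidates, quotas):
    """Rank candidates within each group by score, then pick the top ones.

    candidates: list of (candidate_id, group, score) tuples.
    quotas: dict mapping group -> number of individuals to select
            (a hypothetical stand-in for a fairness requirement).
    """
    by_group = defaultdict(list)
    for cid, group, score in candidates:
        by_group[group].append((score, cid))

    selected = {}
    for group, members in by_group.items():
        # Step 1: the within-group ranking, highest score first.
        ranked = sorted(members, key=lambda m: m[0], reverse=True)
        # Step 2: selection from the ranking according to the quota.
        selected[group] = [cid for _, cid in ranked[: quotas.get(group, 0)]]
    return selected


chosen = select_per_group(
    [("a", "g1", 0.9), ("b", "g1", 0.4), ("c", "g2", 0.7),
     ("d", "g2", 0.8), ("e", "g1", 0.6)],
    quotas={"g1": 2, "g2": 1},
)
```

Because selection happens after ranking, the order of individuals inside a group is never altered by the fairness step, which is the property the abstract argues current evaluations overlook.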

Model-based Counterfactual Generator for Gender Bias Mitigation

Nov 06, 2023

Abstract:Counterfactual Data Augmentation (CDA) has been one of the preferred techniques for mitigating gender bias in natural language models. CDA techniques have mostly employed word substitution based on dictionaries. Although such dictionary-based CDA techniques have been shown to significantly improve the mitigation of gender bias, in this paper we highlight some of their limitations, such as susceptibility to ungrammatical compositions and lack of generalization outside the set of predefined dictionary words. Model-based solutions can alleviate these problems, yet the lack of high-quality parallel training data hinders development in this direction. Therefore, we propose a combination of data processing techniques and a bi-objective training regime to develop a model-based solution for generating counterfactuals to mitigate gender bias. We implemented our proposed solution and performed an empirical evaluation which shows how our model alleviates the shortcomings of dictionary-based solutions.
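To make the two limitations concrete, here is a minimal sketch of the dictionary-based baseline that the abstract criticizes, not of the proposed model. The tiny word-pair dictionary `PAIRS` and the function name are invented for this illustration; real dictionaries are much larger.

```python
import re

# Hypothetical, deliberately small word-pair dictionary.
PAIRS = {
    "he": "she", "she": "he",
    "his": "her", "her": "his",
    "man": "woman", "woman": "man",
}


def swap_gendered_words(text, pairs=PAIRS):
    """Replace each dictionary word with its counterpart, keeping capitalization."""
    def repl(match):
        word = match.group(0)
        swapped = pairs.get(word.lower())
        if swapped is None:
            return word  # word not in the dictionary: left untouched
        return swapped.capitalize() if word[0].isupper() else swapped

    return re.sub(r"[A-Za-z]+", repl, text)


ungrammatical = swap_gendered_words("He thanked her.")   # "She thanked his."
unchanged = swap_gendered_words("The chairman left.")    # "The chairman left."
```

The first call produces an ungrammatical sentence because the dictionary cannot tell the object pronoun "her" from the possessive one. The second leaves "chairman" unchanged because it is not among the predefined words.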

How Far Can It Go?: On Intrinsic Gender Bias Mitigation for Text Classification

Jan 30, 2023

Abstract:To mitigate gender bias in contextualized language models, different intrinsic mitigation strategies have been proposed, alongside many bias metrics. Considering that the end use of these language models is for downstream tasks like text classification, it is important to understand whether, and to what extent, these intrinsic bias mitigation strategies translate to fairness in downstream tasks. In this work, we design a probe to investigate the effects that some of the major intrinsic gender bias mitigation strategies have on downstream text classification tasks. We discover that instead of resolving gender bias, intrinsic mitigation techniques and metrics are able to hide it in such a way that significant gender information is retained in the embeddings. Furthermore, we show that each mitigation technique is able to hide the bias from some of the intrinsic bias measures but not all, and each intrinsic bias measure can be fooled by some mitigation techniques, but not all. We confirm experimentally that none of the intrinsic mitigation techniques, used without any other fairness intervention, is able to consistently impact extrinsic bias. We recommend that intrinsic bias mitigation techniques be combined with other fairness interventions for downstream tasks.

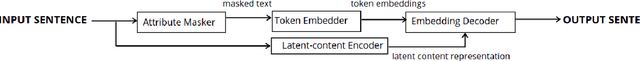

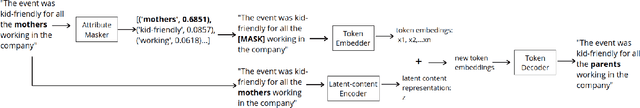

Text Style Transfer for Bias Mitigation using Masked Language Modeling

Jan 21, 2022

Abstract:It is well known that textual data on the internet and other digital platforms contain significant levels of bias and stereotypes. Although many such texts contain stereotypes and biases that inherently exist in natural language for reasons that are not necessarily malicious, there are crucial reasons to mitigate these biases. For one, these texts are used as training corpora for language models in salient applications like CV screening, search engines, and chatbots; such applications are turning out to produce discriminatory results. Also, several research findings have concluded that biased texts have significant effects on the target demographic groups. For instance, masculine-worded job advertisements tend to be less appealing to female applicants. In this paper, we present a text style transfer model that can be used to automatically debias textual data. Our style transfer model addresses limitations of many existing style transfer techniques, such as loss of content information, by combining latent content encoding with explicit keyword replacement. We will show that this technique produces better content preservation whilst maintaining good style transfer accuracy.

Measuring Fairness with Biased Rulers: A Survey on Quantifying Biases in Pretrained Language Models

Dec 14, 2021

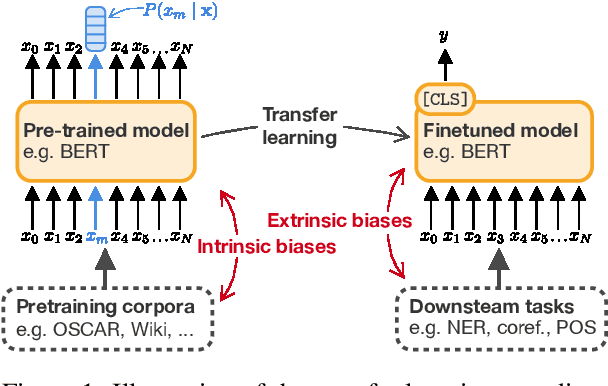

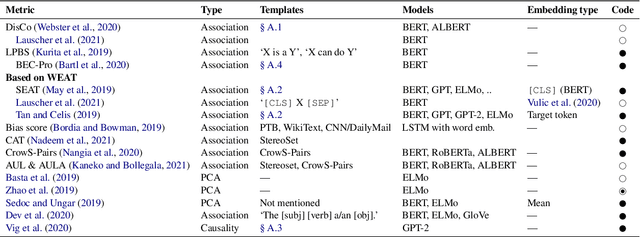

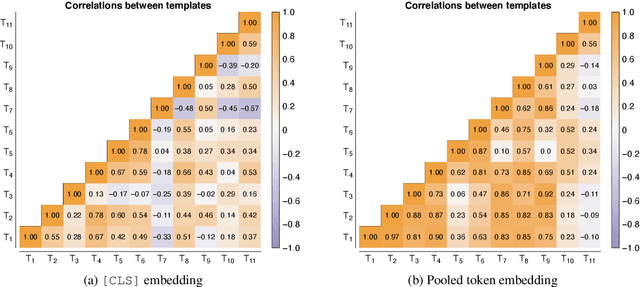

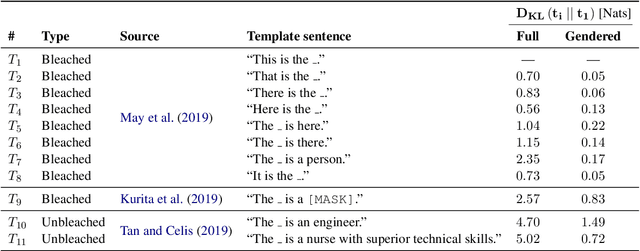

Abstract:An increasing awareness of biased patterns in natural language processing resources, like BERT, has motivated many metrics to quantify 'bias' and 'fairness'. But comparing the results of different metrics and the works that evaluate with such metrics remains difficult, if not outright impossible. We survey the existing literature on fairness metrics for pretrained language models and experimentally evaluate compatibility, covering biases both in language models and in their downstream tasks. We do this by a mixture of traditional literature survey and correlation analysis, as well as by running empirical evaluations. We find that many metrics are not compatible and highly depend on (i) templates, (ii) attribute and target seeds and (iii) the choice of embeddings. These results indicate that fairness or bias evaluation remains challenging, if not highly subjective, for contextualized language models. To improve future comparisons and fairness evaluations, we recommend avoiding embedding-based metrics and focusing on fairness evaluations in downstream tasks.
