Tomas Jenicek

Dark Side Augmentation: Generating Diverse Night Examples for Metric Learning

Sep 28, 2023

Abstract: Image retrieval methods based on CNN descriptors rely on metric learning from a large number of diverse examples of positive and negative image pairs. Domains, such as night-time images, with limited availability and variability of training data suffer from poor retrieval performance even with methods performing well on standard benchmarks. We propose to train a GAN-based synthetic-image generator, translating available day-time image examples into night images. Such a generator is used in metric learning as a form of augmentation, supplying training data to the scarce domain. Various types of generators are evaluated and analyzed. We contribute with a novel light-weight GAN architecture that enforces the consistency between the original and translated image through edge consistency. The proposed architecture also allows a simultaneous training of an edge detector that operates on both night and day images. To further increase the variability in the training examples and to maximize the generalization of the trained model, we propose a novel method of diverse anchor mining. The proposed method improves over the state-of-the-art results on a standard Tokyo 24/7 day-night retrieval benchmark while preserving the performance on Oxford and Paris datasets. This is achieved without the need of training image pairs of matching day and night images. The source code is available at https://github.com/mohwald/gandtr .

* 11 pages, 4 figures, 8 tables

Results and findings of the 2021 Image Similarity Challenge

Feb 08, 2022

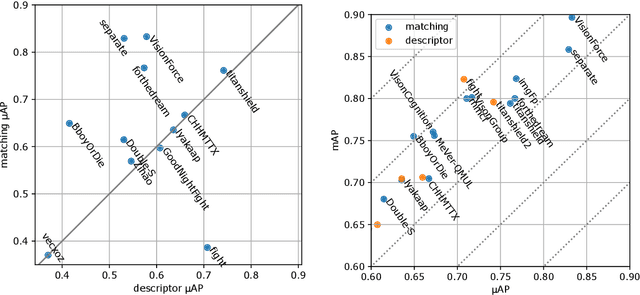

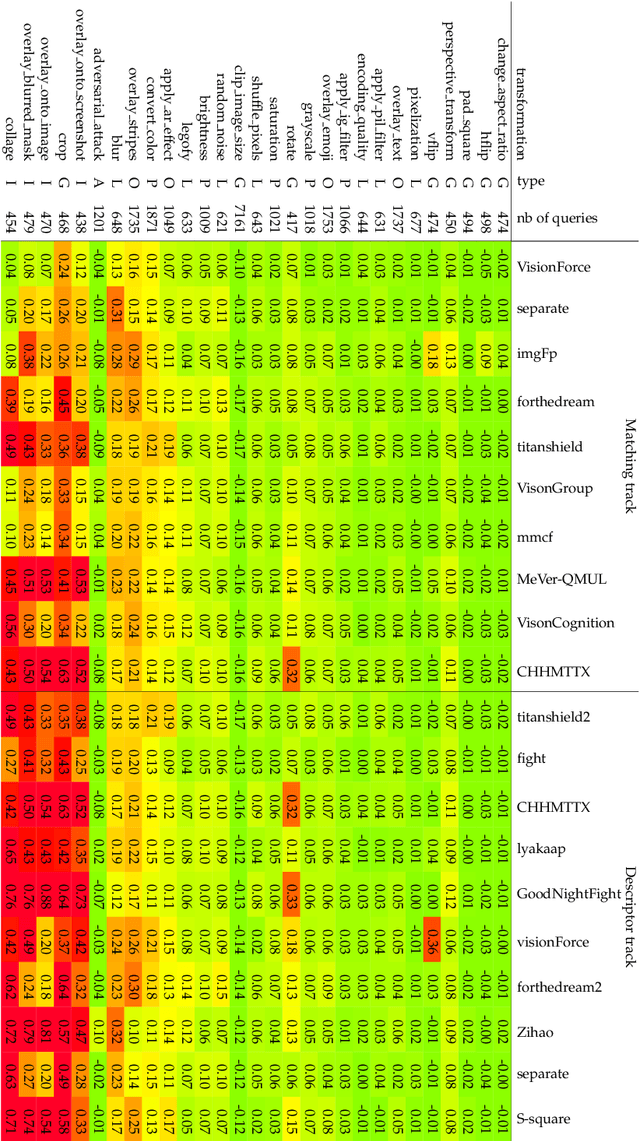

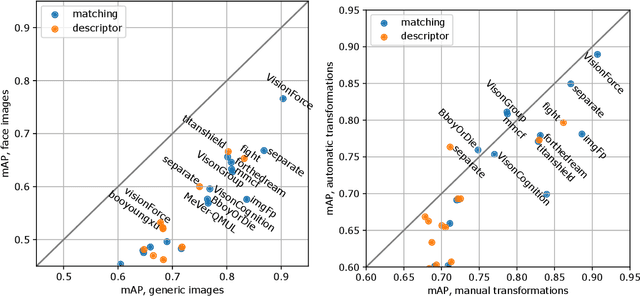

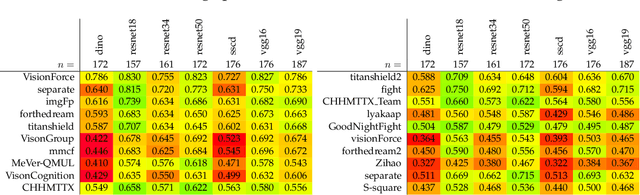

Abstract: The 2021 Image Similarity Challenge introduced a dataset to serve as a new benchmark to evaluate recent image copy detection methods. There were 200 participants in the competition. This paper presents a quantitative and qualitative analysis of the top submissions. It appears that the most difficult image transformations involve either severe image crops or hiding into unrelated images, combined with local pixel perturbations. The key algorithmic elements in the winning submissions are: training on strong augmentations, self-supervised learning, score normalization, explicit overlay detection, and global descriptor matching followed by pairwise image comparison.

The 2021 Image Similarity Dataset and Challenge

Jul 01, 2021

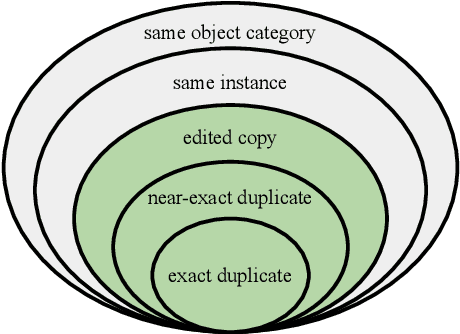

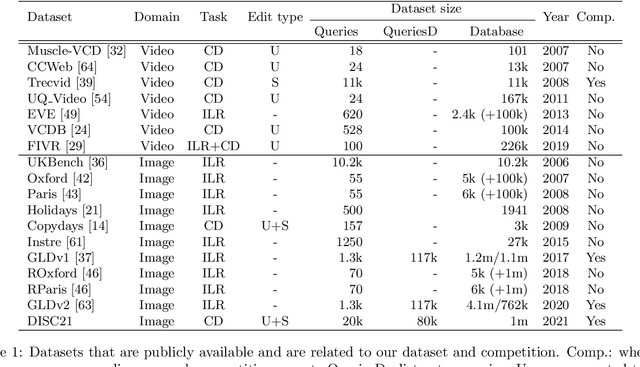

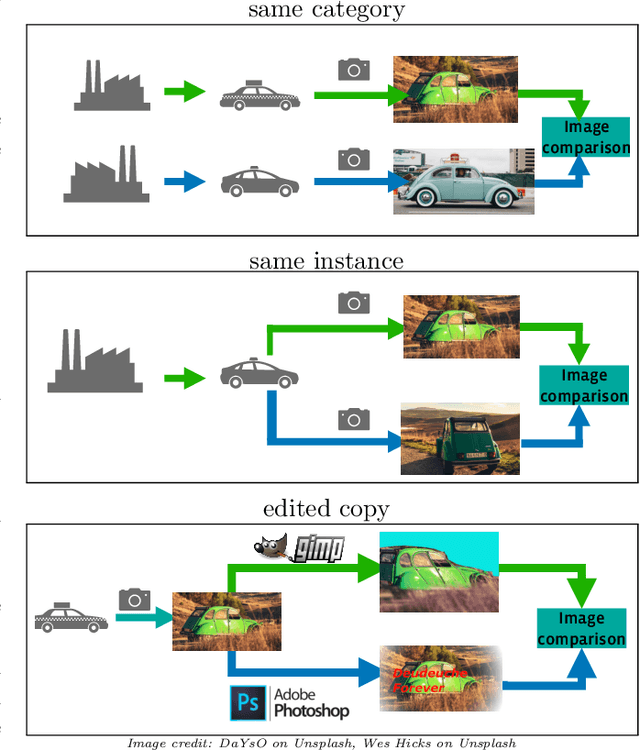

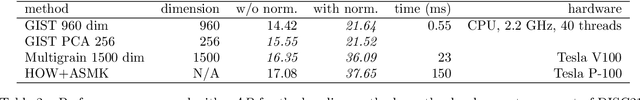

Abstract: This paper introduces a new benchmark for large-scale image similarity detection. This benchmark is used for the Image Similarity Challenge at NeurIPS'21 (ISC2021). The goal is to determine whether a query image is a modified copy of any image in a reference corpus of size 1 million. The benchmark features a variety of image transformations such as automated transformations, hand-crafted image edits and machine-learning based manipulations. This mimics real-life cases appearing in social media, for example for integrity-related problems dealing with misinformation and objectionable content. The strength of the image manipulations, and therefore the difficulty of the benchmark, is calibrated according to the performance of a set of baseline approaches. Both the query and reference set contain a majority of "distractor" images that do not match, which corresponds to a real-life needle-in-haystack setting, and the evaluation metric reflects that. We expect the DISC21 benchmark to promote image copy detection as an important and challenging computer vision task and refresh the state of the art.

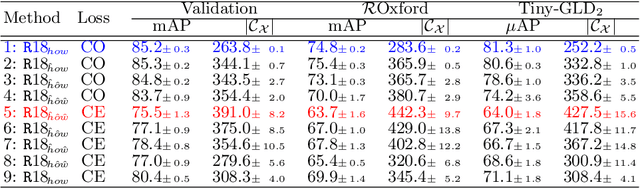

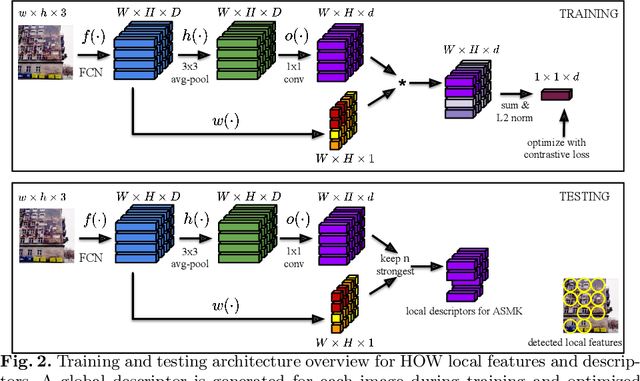

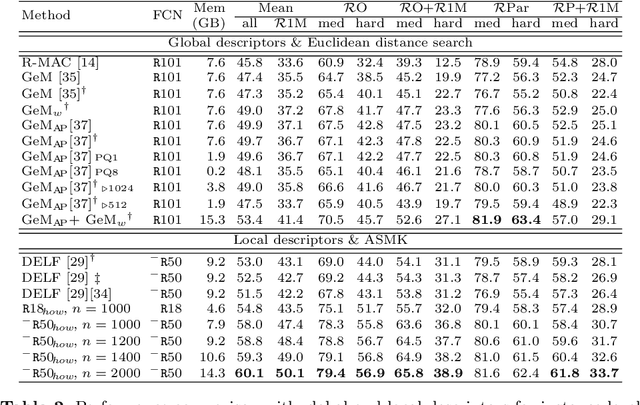

Learning and aggregating deep local descriptors for instance-level recognition

Jul 26, 2020

Abstract: We propose an efficient method to learn deep local descriptors for instance-level recognition. The training only requires examples of positive and negative image pairs and is performed as metric learning of sum-pooled global image descriptors. At inference, the local descriptors are provided by the activations of internal components of the network. We demonstrate why such an approach learns local descriptors that work well for image similarity estimation with classical efficient match kernel methods. The experimental validation studies the trade-off between performance and memory requirements of the state-of-the-art image search approach based on match kernels. Compared to existing local descriptors, the proposed ones perform better in two instance-level recognition tasks and keep memory requirements lower. We experimentally show that global descriptors are not effective enough at large scale and that local descriptors are essential. We achieve state-of-the-art performance, in some cases even with a backbone network as small as ResNet18.
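
As a rough illustration of the link between sum-pooled global descriptors and match kernels mentioned in this abstract, the sketch below checks a basic linear-algebra identity: the dot product of two sum-pooled descriptors equals the sum of all pairwise dot products between the underlying local descriptors. The function names, array shapes, and random inputs are assumptions made for illustration; none of this is taken from the paper's code, and the paper's own argument may differ.

```python
import numpy as np


def sum_pooled_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Dot product of sum-pooled global descriptors.

    a, b: arrays of shape (N, D) and (M, D) holding local descriptors.
    """
    return float(a.sum(axis=0) @ b.sum(axis=0))


def pairwise_kernel(a: np.ndarray, b: np.ndarray) -> float:
    """Sum of dot products over all pairs of local descriptors."""
    return float((a @ b.T).sum())


# Hypothetical inputs: 49 and 36 local descriptors of dimension 128.
rng = np.random.default_rng(0)
img_a = rng.standard_normal((49, 128))
img_b = rng.standard_normal((36, 128))

# Both quantities agree up to floating-point error.
print(np.isclose(sum_pooled_similarity(img_a, img_b),
                 pairwise_kernel(img_a, img_b)))
```

Under this identity, a loss applied to the global descriptor similarity acts on every pair of local descriptors at once, which is one way to see why training on pooled descriptors can shape the local ones.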

No Fear of the Dark: Image Retrieval under Varying Illumination Conditions

Aug 23, 2019

Abstract: Image retrieval under varying illumination conditions, such as day and night images, is addressed by image preprocessing, both hand-crafted and learned. Prior to extracting image descriptors by a convolutional neural network, images are photometrically normalised in order to reduce the descriptor sensitivity to illumination changes. We propose a learnable normalisation based on the U-Net architecture, which is trained on a combination of single-camera multi-exposure images and a newly constructed collection of similar views of landmarks during day and night. We experimentally show that both hand-crafted normalisation based on local histogram equalisation and the learnable normalisation outperform standard approaches in varying illumination conditions, while staying on par with the state-of-the-art methods on daylight illumination benchmarks, such as Oxford or Paris datasets.
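
To give a feel for hand-crafted photometric normalisation, here is a minimal sketch of plain global histogram equalisation on an 8-bit grayscale image. Note the simplification: the abstract refers to local histogram equalisation, which applies the same idea per image region; the global variant is used here only because it is shorter. The function name and the synthetic dark image are assumptions for illustration, not the paper's implementation.

```python
import numpy as np


def equalise_histogram(gray: np.ndarray) -> np.ndarray:
    """Global histogram equalisation of a uint8 grayscale image."""
    hist = np.bincount(gray.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]   # CDF value at the darkest intensity present
    total = cdf[-1]             # total number of pixels
    if total == cdf_min:        # constant image: nothing to stretch
        return gray.copy()
    # Map each intensity through the rescaled CDF to spread values over 0..255.
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255.0)
    lut = lut.clip(0, 255).astype(np.uint8)
    return lut[gray]


# Hypothetical under-exposed image: all intensities fall in 0..63.
rng = np.random.default_rng(0)
dark = rng.integers(0, 64, size=(32, 32), dtype=np.uint8)
normalised = equalise_histogram(dark)
print(dark.max(), normalised.min(), normalised.max())
```

After equalisation the intensities span the full 8-bit range, which reduces the gap between dark and well-lit views before descriptor extraction.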

Linking Art through Human Poses

Jul 08, 2019

Abstract: We address the discovery of composition transfer in artworks based on their visual content. Automated analysis of large art collections, which are growing as a result of art digitization among museums and galleries, is an important tool for art history and assists cultural heritage preservation. Modern image retrieval systems offer good performance on visually similar artworks, but fail in the cases of more abstract composition transfer. The proposed approach links artworks through a pose similarity of human figures depicted in images. Human figures are the subject of a large fraction of visual art from the Middle Ages to modernity and their distinctive poses were often a source of inspiration among artists. The method consists of two steps: fast pose matching and robust spatial verification. We experimentally show that explicit human pose matching is superior to standard content-based image retrieval methods on a manually annotated art composition transfer dataset.
