Thi Ngoc Tho Nguyen

Polyphonic audio event detection: multi-label or multi-class multi-task classification problem?

Jan 29, 2022

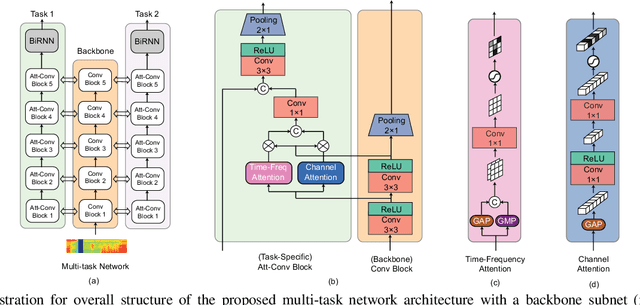

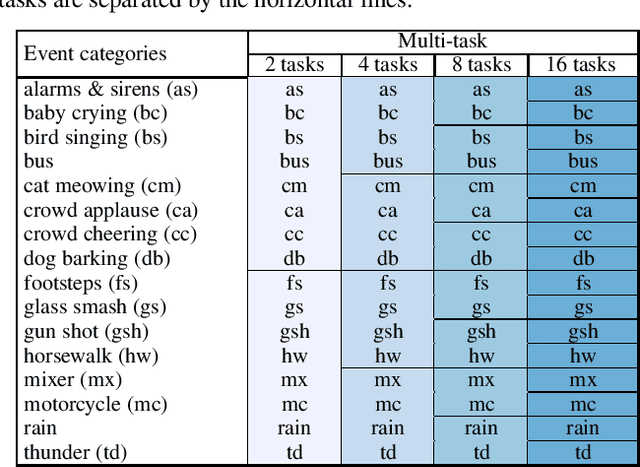

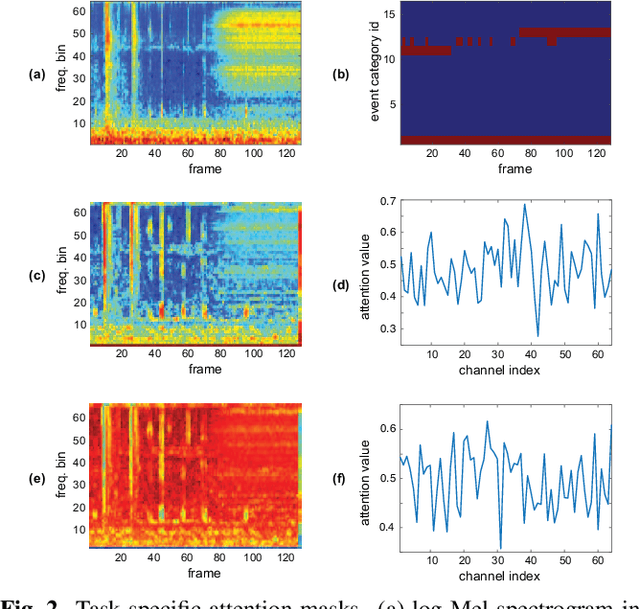

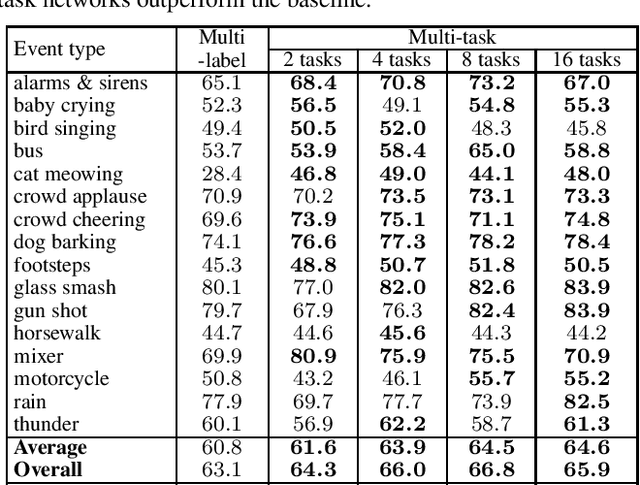

Abstract: Polyphonic events are the main error source of audio event detection (AED) systems. In the deep-learning context, the most common approach to dealing with event overlaps is to treat the AED task as a multi-label classification problem. By doing this, we inherently consider multiple one-vs.-rest classification problems, which are jointly solved by a single (i.e. shared) network. In this work, to better handle polyphonic mixtures, we propose to frame the task as a multi-class classification problem by considering each possible label combination as one class. To circumvent the large number of arising classes due to combinatorial explosion, we divide the event categories into multiple groups and construct a multi-task problem in a divide-and-conquer fashion, where each of the tasks is a multi-class classification problem. A network architecture is then devised for multi-class multi-task modelling. The network is composed of a backbone subnet and multiple task-specific subnets. The task-specific subnets are designed to learn time-frequency and channel attention masks to extract features for the task at hand from the common feature maps learned by the backbone. Experiments on the TUT-SED-Synthetic-2016 dataset with a high degree of event overlap show that the proposed approach results in more favorable performance than the common multi-label approach.
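The grouping idea in this abstract can be illustrated with a small sketch. It assumes a hypothetical split of six event categories into two groups of three and a simple bit-pattern indexing of label combinations; the abstract does not say how the groups are formed or how combinations are indexed, so both choices are illustrative only.

```python
from typing import List, Sequence


def encode_groups(multi_hot: Sequence[int], groups: Sequence[Sequence[int]]) -> List[int]:
    """Map a multi-hot label vector to one class index per group.

    Each group of k events becomes one multi-class task with 2**k classes,
    where the class index is the bit pattern of the active events (assumed encoding).
    """
    class_ids = []
    for group in groups:
        index = 0
        for bit_pos, event in enumerate(group):
            index |= (multi_hot[event] & 1) << bit_pos
        class_ids.append(index)
    return class_ids


def decode_groups(class_ids: Sequence[int], groups: Sequence[Sequence[int]], num_events: int) -> List[int]:
    """Invert encode_groups: recover the multi-hot vector from per-group class indices."""
    multi_hot = [0] * num_events
    for class_id, group in zip(class_ids, groups):
        for bit_pos, event in enumerate(group):
            multi_hot[event] = (class_id >> bit_pos) & 1
    return multi_hot


# Hypothetical setup: 6 event categories split into 2 groups of 3.
groups = [[0, 1, 2], [3, 4, 5]]
labels = [1, 0, 1, 0, 1, 0]          # events 0, 2 and 4 are active
class_ids = encode_groups(labels, groups)
classes_per_task = [2 ** len(g) for g in groups]
```

With this split, each task has 8 classes, whereas treating all six categories as a single multi-class problem would need 64 classes.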

SALSA-Lite: A Fast and Effective Feature for Polyphonic Sound Event Localization and Detection with Microphone Arrays

Nov 16, 2021

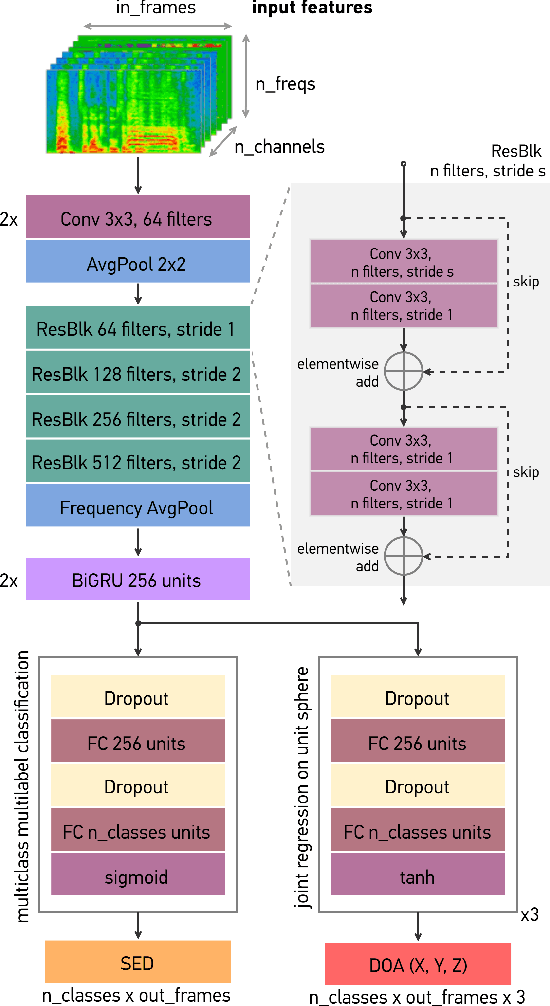

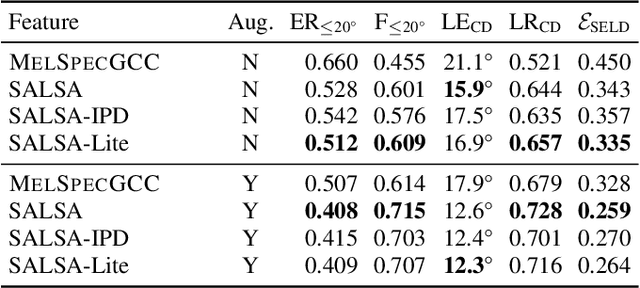

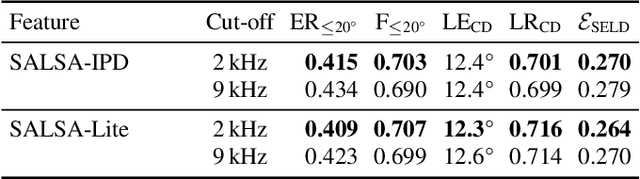

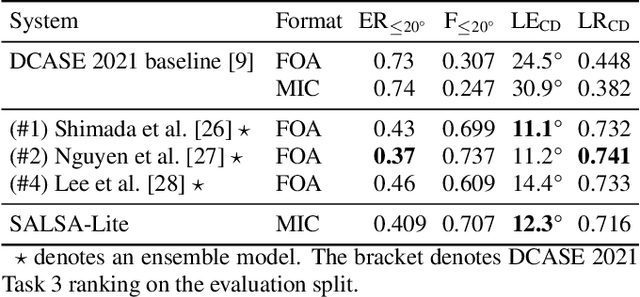

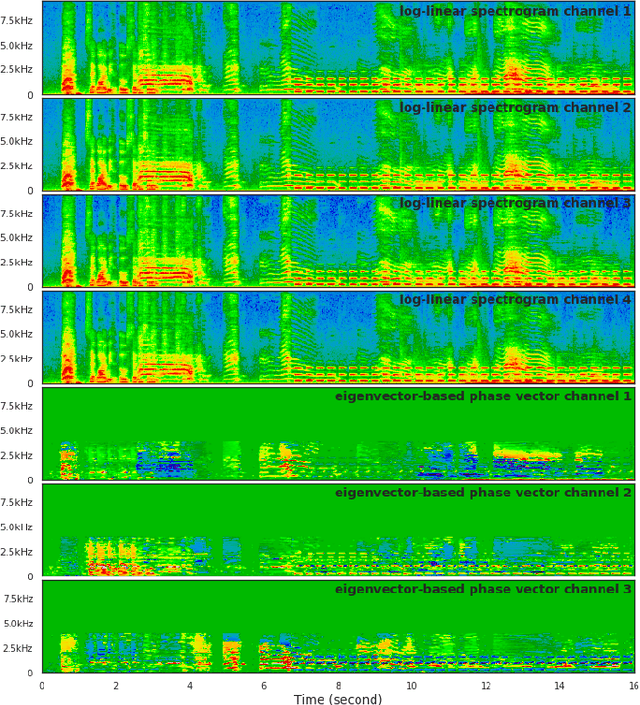

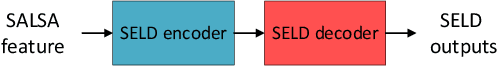

Abstract: Polyphonic sound event localization and detection (SELD) has many practical applications in acoustic sensing and monitoring. However, the development of real-time SELD has been limited by the demanding computational requirement of most recent SELD systems. In this work, we introduce SALSA-Lite, a fast and effective feature for polyphonic SELD using microphone array inputs. SALSA-Lite is a lightweight variation of a previously proposed SALSA feature for polyphonic SELD. SALSA, which stands for Spatial Cue-Augmented Log-Spectrogram, consists of multichannel log-spectrograms stacked channelwise with the normalized principal eigenvectors of the spectrotemporally corresponding spatial covariance matrices. In contrast to SALSA, which uses eigenvector-based spatial features, SALSA-Lite uses normalized inter-channel phase differences as spatial features, allowing a 30-fold speedup compared to the original SALSA feature. Experimental results on the TAU-NIGENS Spatial Sound Events 2021 dataset showed that the SALSA-Lite feature achieved competitive performance compared to the full SALSA feature, and significantly outperformed the traditional feature set of multichannel log-mel spectrograms with generalized cross-correlation spectra. Specifically, using SALSA-Lite features increased localization-dependent F1 score and class-dependent localization recall by 15% and 5%, respectively, compared to using multichannel log-mel spectrograms with generalized cross-correlation spectra.
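As a rough illustration of an inter-channel phase difference feature, the sketch below computes the phase difference between one STFT bin of a reference microphone and the same bin of another microphone, then divides out the frequency. The normalization by `2 * pi * f / c`, the sign convention, and the speed-of-sound constant are assumptions made for this example; the abstract only states that the phase differences are normalized.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for the example


def normalized_ipd(ref_bin: complex, other_bin: complex, freq_hz: float) -> float:
    """Phase difference between two channels at one bin, scaled by frequency.

    With the assumed normalization the result is a path-length difference in
    metres, so it no longer grows with frequency (below spatial aliasing).
    """
    if freq_hz <= 0:
        raise ValueError("freq_hz must be positive")
    phase_diff = cmath.phase(ref_bin.conjugate() * other_bin)
    return -SPEED_OF_SOUND * phase_diff / (2.0 * math.pi * freq_hz)


# A plane wave reaching the second microphone 0.1 ms after the reference.
delay_s = 1e-4
freq_hz = 1000.0
ref_bin = 1.0 + 0.0j
other_bin = ref_bin * cmath.exp(-2j * math.pi * freq_hz * delay_s)
feature = normalized_ipd(ref_bin, other_bin, freq_hz)
```

Because only a complex multiplication and a phase extraction are needed per bin, a feature of this kind avoids the eigendecomposition that the full SALSA feature requires.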

End-to-End Complex-Valued Multidilated Convolutional Neural Network for Joint Acoustic Echo Cancellation and Noise Suppression

Oct 11, 2021

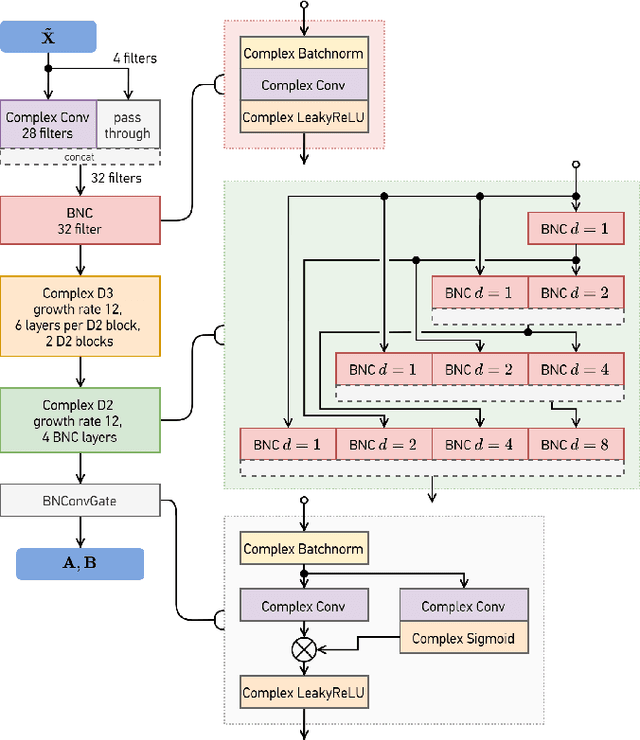

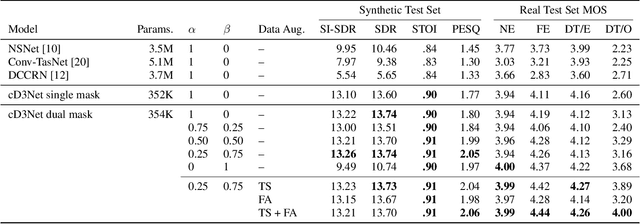

Abstract: Echo and noise suppression is an integral part of a full-duplex communication system. Many recent acoustic echo cancellation (AEC) systems rely on a separate adaptive filtering module for linear echo suppression and a neural module for residual echo suppression. However, not only do adaptive filtering modules require convergence and remain susceptible to changes in acoustic environments, but this two-stage framework also often introduces unnecessary delays to the AEC system when neural modules are already capable of both linear and nonlinear echo suppression. In this paper, we exploit the offset-compensating ability of complex time-frequency masks and propose an end-to-end complex-valued neural network architecture. The building block of the proposed model is a pseudocomplex extension based on the densely-connected multidilated DenseNet (D3Net) building block, resulting in a very small network of only 354K parameters. The architecture utilized the multi-resolution nature of the D3Net building blocks to eliminate the need for pooling, allowing the network to extract features using large receptive fields without any loss of output resolution. We also propose a dual-mask technique for joint echo and noise suppression with simultaneous speech enhancement. Evaluation on both synthetic and real test sets demonstrated promising results across multiple energy-based metrics and perceptual proxies.
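A general property of complex time-frequency masks is that they can change the phase of a bin as well as its magnitude. The sketch below contrasts an ideal complex mask with an ideal magnitude-only mask on a single bin. It is a toy illustration with made-up values, not the network or the dual-mask technique described in the abstract.

```python
import cmath


def ideal_complex_mask(target_bin: complex, mixture_bin: complex) -> complex:
    """Complex ratio mask: scales the magnitude and rotates the phase."""
    if mixture_bin == 0:
        raise ValueError("mixture_bin must be non-zero")
    return target_bin / mixture_bin


def ideal_magnitude_mask(target_bin: complex, mixture_bin: complex) -> float:
    """Real-valued mask: scales the magnitude only."""
    if mixture_bin == 0:
        raise ValueError("mixture_bin must be non-zero")
    return abs(target_bin) / abs(mixture_bin)


# Hypothetical bin values: the mixture differs from the target in gain and phase.
target = cmath.rect(1.0, 0.3)
mixture = cmath.rect(2.0, 1.1)

complex_estimate = ideal_complex_mask(target, mixture) * mixture
magnitude_estimate = ideal_magnitude_mask(target, mixture) * mixture
```

Here `complex_estimate` matches the target, while `magnitude_estimate` has the right magnitude but keeps the phase of the mixture.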

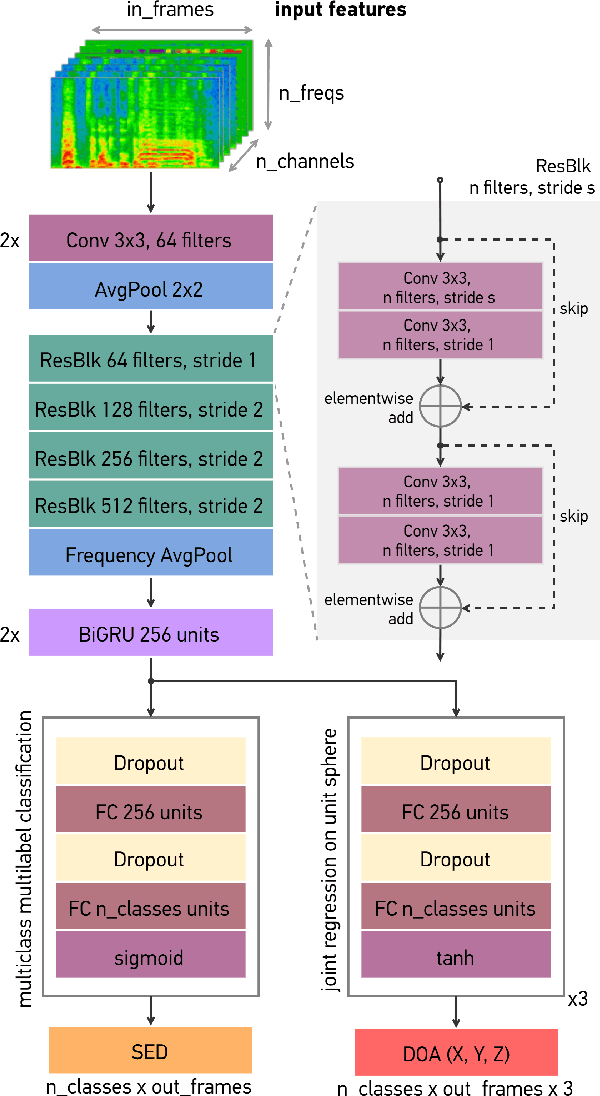

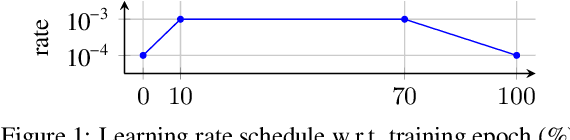

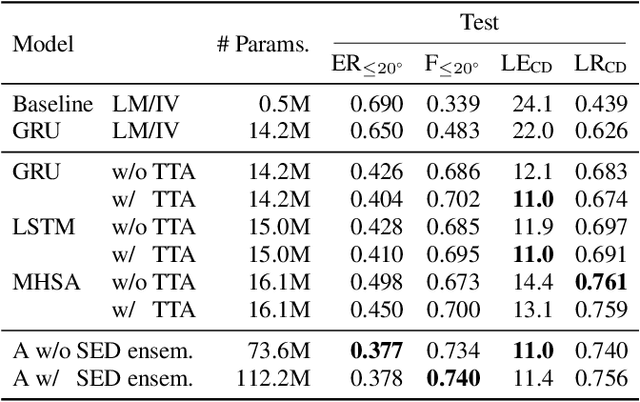

SALSA: Spatial Cue-Augmented Log-Spectrogram Features for Polyphonic Sound Event Localization and Detection

Oct 01, 2021

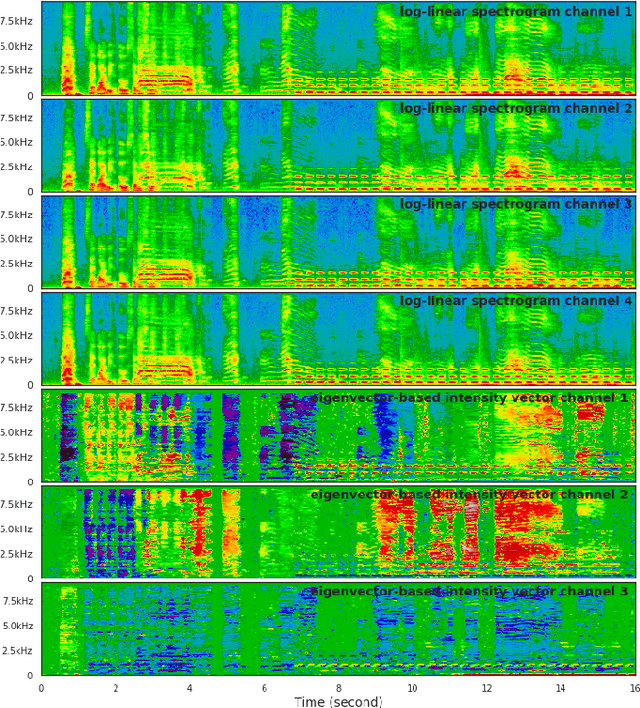

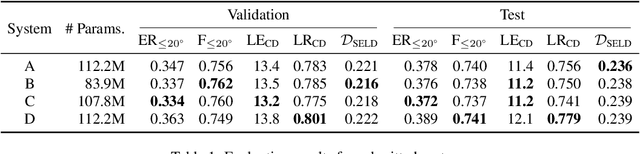

Abstract: Sound event localization and detection (SELD) consists of two subtasks, which are sound event detection and direction-of-arrival estimation. While sound event detection mainly relies on time-frequency patterns to distinguish different sound classes, direction-of-arrival estimation uses amplitude and/or phase differences between microphones to estimate source directions. As a result, it is often difficult to jointly optimize these two subtasks. We propose a novel feature called Spatial cue-Augmented Log-SpectrogrAm (SALSA) with exact time-frequency mapping between the signal power and the source directional cues, which is crucial for resolving overlapping sound sources. The SALSA feature consists of multichannel log-spectrograms stacked along with the normalized principal eigenvector of the spatial covariance matrix at each corresponding time-frequency bin. Depending on the microphone array format, the principal eigenvector can be normalized differently to extract amplitude and/or phase differences between the microphones. As a result, SALSA features are applicable for different microphone array formats such as first-order ambisonics (FOA) and multichannel microphone array (MIC). Experimental results on the TAU-NIGENS Spatial Sound Events 2021 dataset with directional interferences showed that SALSA features outperformed other state-of-the-art features. Specifically, the use of SALSA features in the FOA format increased the F1 score and localization recall by 6% each, compared to the multichannel log-mel spectrograms with intensity vectors. For the MIC format, using SALSA features increased F1 score and localization recall by 16% and 7%, respectively, compared to using multichannel log-mel spectrograms with generalized cross-correlation spectra. Our ensemble model trained on SALSA features ranked second in the team category of the SELD task in the 2021 DCASE Challenge.
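The principal eigenvector of a spatial covariance matrix can be sketched with plain power iteration. The example assumes a single source observed by three microphones, a covariance estimated by averaging outer products over a few snapshots, and a phase reference on channel 0. The format-specific normalizations mentioned in the abstract are not reproduced here, and all names and values are hypothetical.

```python
import cmath
from typing import List

Vector = List[complex]
Matrix = List[List[complex]]


def spatial_covariance(snapshots: List[Vector]) -> Matrix:
    """Average the outer product x x^H over the given multichannel snapshots."""
    m = len(snapshots[0])
    cov = [[0j] * m for _ in range(m)]
    for x in snapshots:
        for i in range(m):
            for j in range(m):
                cov[i][j] += x[i] * x[j].conjugate()
    return [[value / len(snapshots) for value in row] for row in cov]


def principal_eigenvector(cov: Matrix, iters: int = 100) -> Vector:
    """Power iteration; assumes the all-ones start is not orthogonal to the answer."""
    m = len(cov)
    v = [1 + 0j] * m
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(m)) for i in range(m)]
        norm = sum(abs(c) ** 2 for c in w) ** 0.5
        if norm == 0:
            raise ValueError("power iteration collapsed to the zero vector")
        v = [c / norm for c in w]
    # Remove the arbitrary global phase by making channel 0 real and positive
    # (assumes channel 0 has non-zero weight).
    ref = v[0] / abs(v[0])
    return [c / ref for c in v]


# Hypothetical single source: each snapshot is a complex gain times a steering vector.
steering = [1 + 0j, cmath.exp(-0.5j), cmath.exp(-1.0j)]
gains = [1 + 0j, 0.5j, -2 + 1j]
snapshots = [[g * a for a in steering] for g in gains]
eigvec = principal_eigenvector(spatial_covariance(snapshots))
```

For this rank-one case the result is the steering vector scaled to unit norm, so the inter-microphone phase differences can be read directly from its entries.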

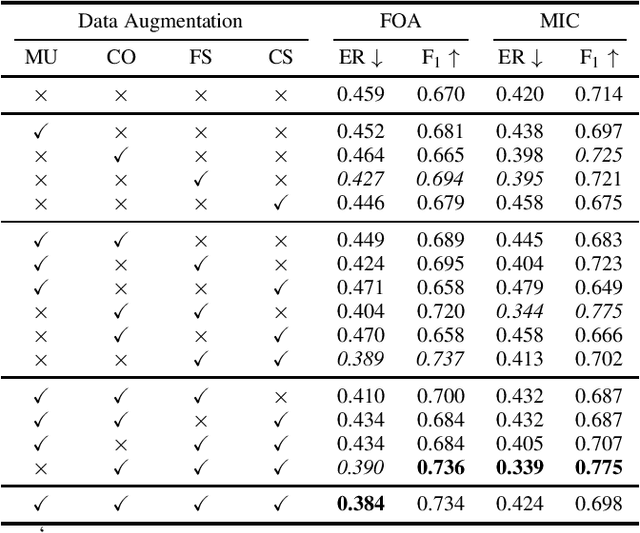

Improving Polyphonic Sound Event Detection on Multichannel Recordings with the Sørensen-Dice Coefficient Loss and Transfer Learning

Jul 22, 2021

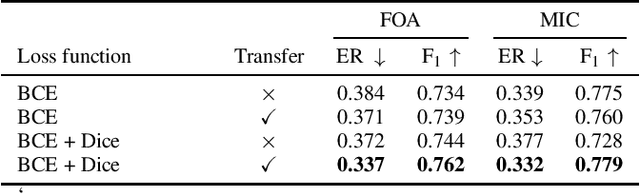

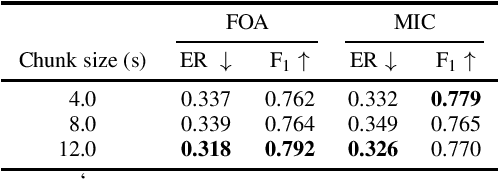

Abstract: The Sørensen-Dice coefficient has recently seen rising popularity as a loss function (also known as Dice loss) due to its robustness in tasks where the number of negative samples significantly exceeds that of positive samples, such as semantic segmentation, natural language processing, and sound event detection. Conventional training of polyphonic sound event detection systems with binary cross-entropy loss often results in suboptimal detection performance as the training is often overwhelmed by updates from negative samples. In this paper, we investigated the effect of the Dice loss, intra- and inter-modal transfer learning, data augmentation, and recording formats, on the performance of polyphonic sound event detection systems with multichannel inputs. Our analysis showed that polyphonic sound event detection systems trained with Dice loss consistently outperformed those trained with cross-entropy loss across different training settings and recording formats in terms of F1 score and error rate. We achieved further performance gains via the use of transfer learning and an appropriate combination of different data augmentation techniques.
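A common soft form of the Dice loss can be sketched as follows. The smoothing constant `eps` and the use of plain (unsquared) sums in the denominator are assumptions; the abstract does not state which variant the authors used.

```python
from typing import Sequence


def dice_loss(probs: Sequence[float], targets: Sequence[float], eps: float = 1e-7) -> float:
    """Soft Dice loss: 1 - 2 * sum(p * y) / (sum(p) + sum(y)), with smoothing."""
    if len(probs) != len(targets):
        raise ValueError("probs and targets must have the same length")
    intersection = sum(p * t for p, t in zip(probs, targets))
    total = sum(probs) + sum(targets)
    return 1.0 - (2.0 * intersection + eps) / (total + eps)


# One positive frame predicted with probability 0.5, plus confidently rejected negatives.
few_negatives = dice_loss([0.5, 0.0, 0.0], [1.0, 0.0, 0.0])
many_negatives = dice_loss([0.5] + [0.0] * 100, [1.0] + [0.0] * 100)
perfect = dice_loss([1.0, 0.0, 0.0, 0.0], [1.0, 0.0, 0.0, 0.0])
```

In this sketch, negatives that are predicted as exactly zero add nothing to either sum, so `few_negatives` and `many_negatives` are equal. This is one way to see why the loss is less dominated by the negative class than an averaged cross-entropy.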

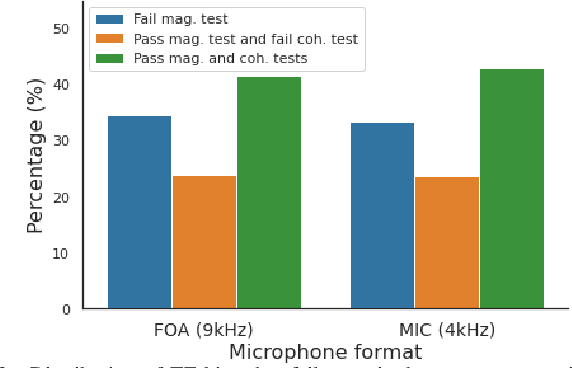

What Makes Sound Event Localization and Detection Difficult? Insights from Error Analysis

Jul 22, 2021

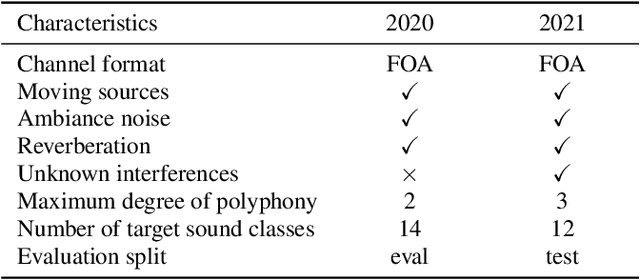

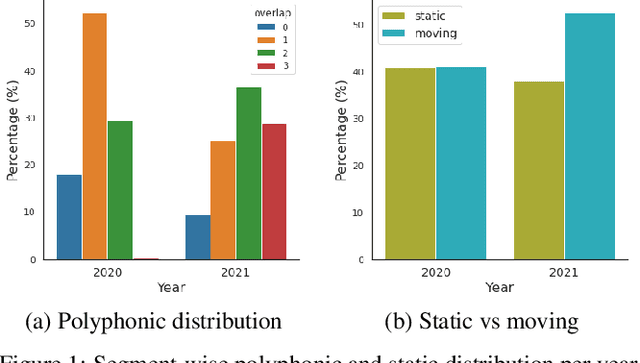

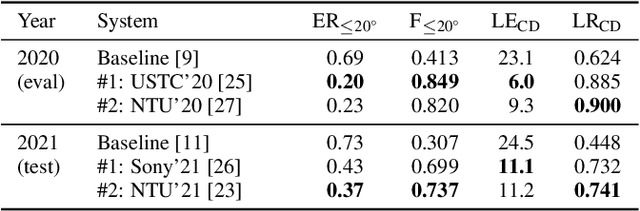

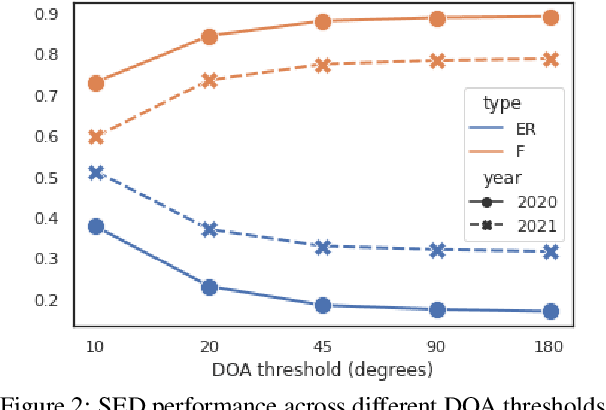

Abstract: Sound event localization and detection (SELD) is an emerging research topic that aims to unify the tasks of sound event detection and direction-of-arrival estimation. As a result, SELD inherits the challenges of both tasks, such as noise, reverberation, interference, polyphony, and non-stationarity of sound sources. Furthermore, SELD often faces an additional challenge of assigning correct correspondences between the detected sound classes and directions of arrival to multiple overlapping sound events. Previous studies have shown that unknown interferences in reverberant environments often cause major degradation in the performance of SELD systems. To further understand the challenges of the SELD task, we performed a detailed error analysis on two of our SELD systems, which both ranked second in the team category of DCASE SELD Challenge, one in 2020 and one in 2021. Experimental results indicate polyphony as the main challenge in SELD, due to the difficulty in detecting all sound events of interest. In addition, the SELD systems tend to make fewer errors for the polyphonic scenario that is dominant in the training set.

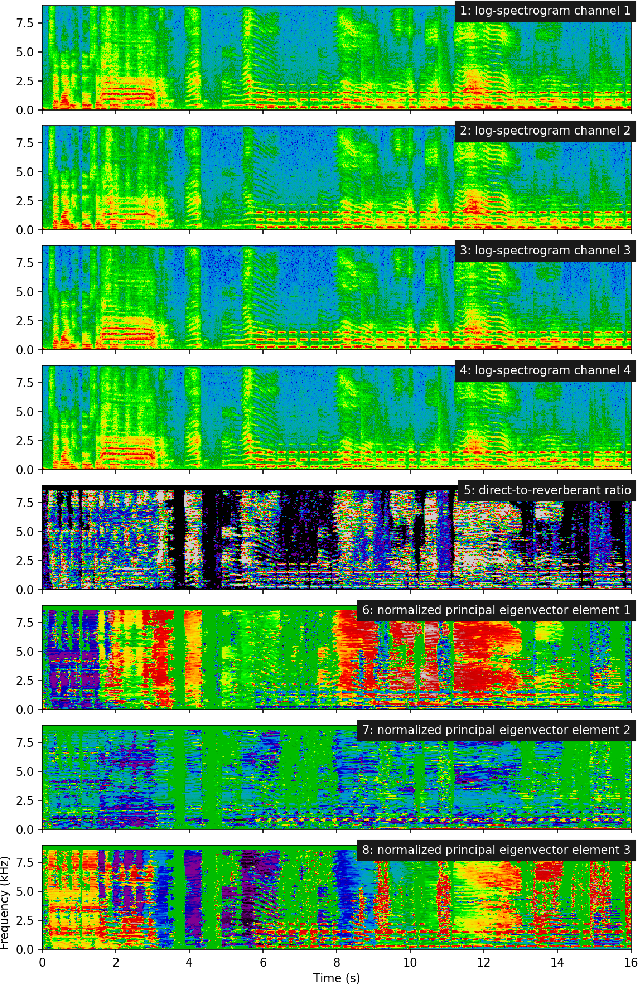

DCASE 2021 Task 3: Spectrotemporally-aligned Features for Polyphonic Sound Event Localization and Detection

Jun 29, 2021

Abstract: Sound event localization and detection consists of two subtasks, which are sound event detection and direction-of-arrival estimation. While sound event detection mainly relies on time-frequency patterns to distinguish different sound classes, direction-of-arrival estimation uses magnitude or phase differences between microphones to estimate source directions. Therefore, it is often difficult to train these two subtasks jointly. We propose a novel feature called spatial cue-augmented log-spectrogram (SALSA) with exact time-frequency mapping between the signal power and the source direction-of-arrival. The feature includes multichannel log-spectrograms stacked along with the estimated direct-to-reverberant ratio and a normalized version of the principal eigenvector of the spatial covariance matrix at each time-frequency bin on the spectrograms. Experimental results on the DCASE 2021 dataset for sound event localization and detection with directional interference showed that the deep learning-based models trained on this new feature outperformed the DCASE challenge baseline by a large margin. We combined several models with slightly different architectures that were trained on the new feature to further improve the system performance for the DCASE sound event localization and detection challenge.

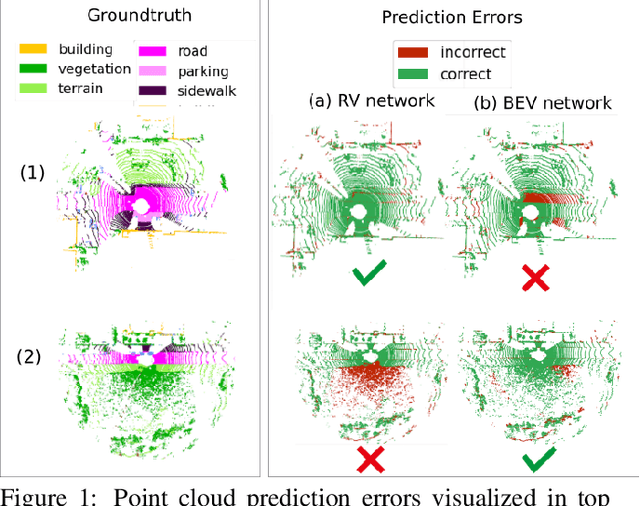

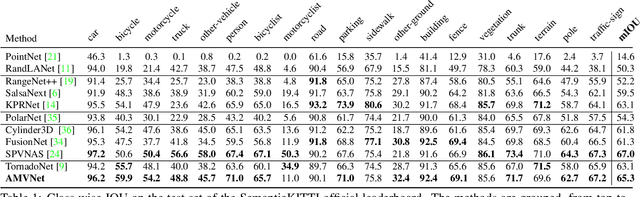

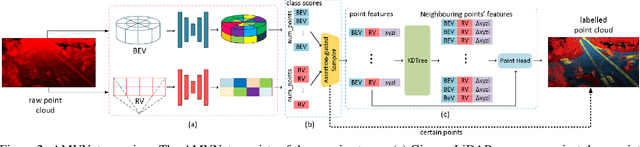

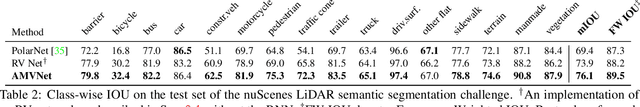

AMVNet: Assertion-based Multi-View Fusion Network for LiDAR Semantic Segmentation

Dec 09, 2020

Abstract: In this paper, we present an Assertion-based Multi-View Fusion network (AMVNet) for LiDAR semantic segmentation which aggregates the semantic features of individual projection-based networks using late fusion. Given class scores from different projection-based networks, we perform assertion-guided point sampling on score disagreements and pass a set of point-level features for each sampled point to a simple point head which refines the predictions. This modular-and-hierarchical late fusion approach provides the flexibility of having two independent networks with a minor overhead from a light-weight network. Such approaches are desirable for robotic systems, e.g. autonomous vehicles, for which the computational and memory resources are often limited. Extensive experiments show that AMVNet achieves state-of-the-art results in both the SemanticKITTI and nuScenes benchmark datasets and that our approach outperforms the baseline method of combining the class scores of the projection-based networks.
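One simple way to picture sampling on score disagreements is to keep only the points where two networks predict different classes. The sketch below assumes that "disagreement" means differing argmax classes between two per-point score lists; the abstract does not define the assertion criterion, so this is an illustrative reading rather than the AMVNet procedure.

```python
from typing import List, Sequence


def argmax(scores: Sequence[float]) -> int:
    """Index of the highest class score (first index wins ties)."""
    return max(range(len(scores)), key=lambda k: scores[k])


def disagreeing_points(
    scores_a: Sequence[Sequence[float]],
    scores_b: Sequence[Sequence[float]],
) -> List[int]:
    """Indices of points whose predicted class differs between the two networks."""
    return [
        i
        for i, (a, b) in enumerate(zip(scores_a, scores_b))
        if argmax(a) != argmax(b)
    ]


# Hypothetical class scores for three points and two classes from two networks.
scores_a = [[0.9, 0.1], [0.4, 0.6], [0.2, 0.8]]
scores_b = [[0.8, 0.2], [0.7, 0.3], [0.1, 0.9]]
to_refine = disagreeing_points(scores_a, scores_b)
```

Only the selected points would then be passed to a refinement head, which keeps the extra cost small when the two networks mostly agree.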
