Tali Treibitz

The Hatter Department of Marine Technologies within the Leon H. Charney School for Marine Sciences, University of Haifa

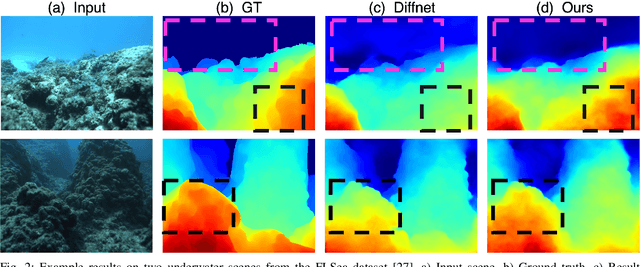

Osmosis: RGBD Diffusion Prior for Underwater Image Restoration

Mar 21, 2024

Abstract: Underwater image restoration is a challenging task because of strong water effects that increase dramatically with distance. This is worsened by the lack of ground-truth data of clean scenes without water. Diffusion priors have emerged as strong image restoration priors. However, they are often trained with a dataset of the desired restored output, which is not available in our case. To overcome this critical issue, we show how to leverage in-air images to train diffusion priors for underwater restoration. We also observe that color data alone is insufficient, and augment the prior with a depth channel. We train an unconditional diffusion model prior on the joint space of color and depth, using standard RGBD datasets of natural outdoor scenes in air. Using this prior together with a novel guidance method based on the underwater image formation model, we generate posterior samples of clean images, removing the water effects. Even though our prior did not see any underwater images during training, our method outperforms state-of-the-art baselines for image restoration on very challenging scenes. Data, models and code are published on the project page.
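The abstract does not spell out the image formation model it relies on. As a rough illustration, a form commonly used in the underwater literature writes each color channel as an attenuated direct signal plus range-dependent backscatter. The sketch below assumes that form for a single pixel; the function names and all coefficient values are hypothetical, and it is not the paper's guidance method. It shows why range (depth) matters: both terms depend on it.

```python
import math

def add_water(clean, z, beta_d, beta_b, b_inf):
    """Simulate one RGB pixel seen through z meters of water (assumed model)."""
    observed = []
    for c in range(3):
        direct = clean[c] * math.exp(-beta_d[c] * z)                # attenuated scene signal
        backscatter = b_inf[c] * (1.0 - math.exp(-beta_b[c] * z))   # veiling light
        observed.append(direct + backscatter)
    return observed

def remove_water(observed, z, beta_d, beta_b, b_inf):
    """Invert the same model when range and water parameters are known."""
    clean = []
    for c in range(3):
        backscatter = b_inf[c] * (1.0 - math.exp(-beta_b[c] * z))
        clean.append((observed[c] - backscatter) * math.exp(beta_d[c] * z))
    return clean

# Made-up per-channel (R, G, B) coefficients, for illustration only.
beta_d = (0.40, 0.12, 0.08)   # direct-signal attenuation, 1/m
beta_b = (0.35, 0.15, 0.10)   # backscatter coefficient, 1/m
b_inf = (0.05, 0.35, 0.45)    # backscatter color at infinite range

clean = (0.8, 0.5, 0.3)
observed = add_water(clean, 5.0, beta_d, beta_b, b_inf)
restored = remove_water(observed, 5.0, beta_d, beta_b, b_inf)
```

In practice neither the range nor the water parameters are known, which is what makes the restoration problem ill-posed and motivates a learned prior.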

Towards Monocular Shape from Refraction

May 31, 2023

Abstract: Refraction is a common physical phenomenon and has long been researched in computer vision. Objects imaged through a refractive object appear distorted in the image as a function of the shape of the interface between the media. This hinders many computer vision applications, but can be utilized for obtaining the geometry of the refractive interface. Previous approaches for refractive surface recovery largely relied on various priors or additional information like multiple images of the analyzed surface. In contrast, we claim that a simple energy function based on Snell's law enables the reconstruction of an arbitrary refractive surface geometry using just a single image and known background texture and geometry. In the case of a single point, Snell's law has two degrees of freedom, therefore to estimate a surface depth, we need additional information. We show that solving for an entire surface at once introduces implicit parameter-free spatial regularization and yields convincing results when an intelligent initial guess is provided. We demonstrate our approach through simulations and real-world experiments, where the reconstruction shows encouraging results in the single-frame monocular setting.

* 12 pages, 6 figures, The 32nd British Machine Vision Conference (BMVC)
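For the refraction abstract above, the per-point building block is Snell's law in vector form. The following is a minimal sketch of that law only; the function name `refract` and the example indices (1.0 for air, 1.33 for water) are illustrative choices, and the paper's energy function and whole-surface optimization are not reproduced here.

```python
import math

def refract(d, n, n1, n2):
    """Refract unit direction d at a surface with unit normal n.

    n must point toward the side the ray arrives from. n1 and n2 are the
    refractive indices before and after the interface. Returns the refracted
    unit direction, or None on total internal reflection.
    """
    eta = n1 / n2
    cos_i = -sum(di * ni for di, ni in zip(d, n))
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)   # Snell: sin_t = eta * sin_i
    if sin2_t > 1.0:
        return None
    cos_t = math.sqrt(1.0 - sin2_t)
    return tuple(eta * di + (eta * cos_i - cos_t) * ni for di, ni in zip(d, n))

# A ray hitting a flat air-to-water interface at 45 degrees.
s = math.sqrt(0.5)
into_water = refract((s, 0.0, -s), (0.0, 0.0, 1.0), 1.0, 1.33)

# A ray leaving water at 60 degrees exceeds the critical angle.
a = math.radians(60.0)
out_of_water = refract((math.sin(a), 0.0, -math.cos(a)), (0.0, 0.0, 1.0), 1.33, 1.0)
```

For a fixed incoming ray, many combinations of surface depth and normal produce a refracted ray that hits the known background, which is one way to see why a single point is under-constrained.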

SeaThru-NeRF: Neural Radiance Fields in Scattering Media

Apr 16, 2023

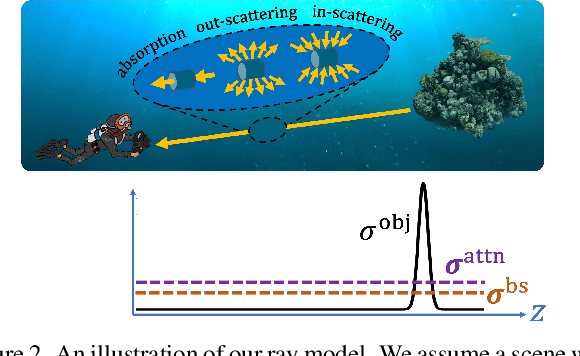

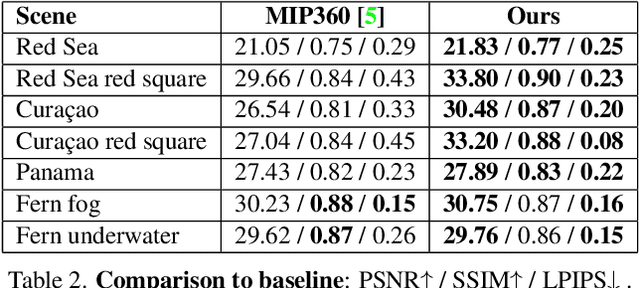

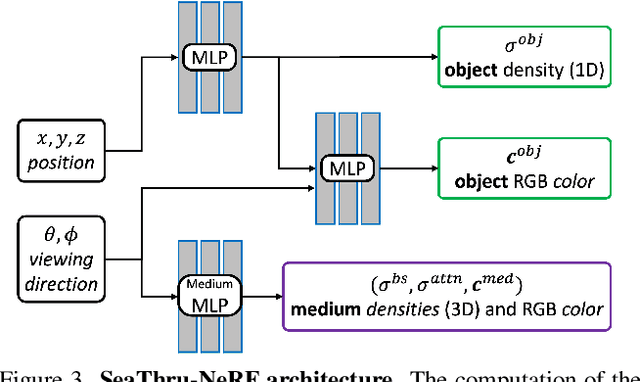

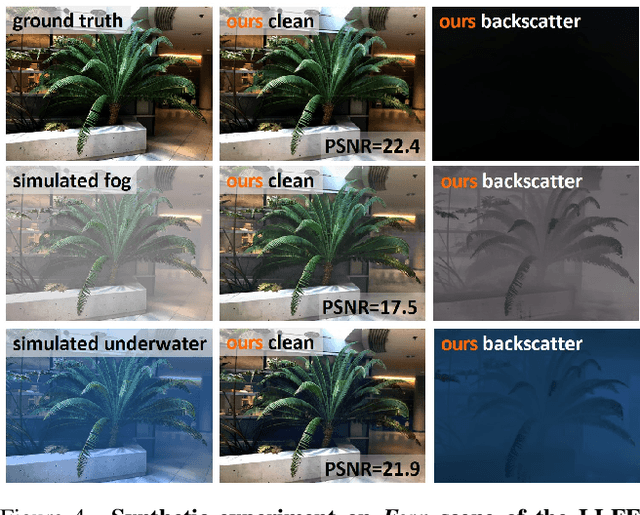

Abstract: Research on neural radiance fields (NeRFs) for novel view generation is exploding with new models and extensions. However, a question that remains unanswered is what happens in underwater or foggy scenes where the medium strongly influences the appearance of objects. Thus far, NeRF and its variants have ignored these cases. However, since the NeRF framework is based on volumetric rendering, it has inherent capability to account for the medium's effects, once modeled appropriately. We develop a new rendering model for NeRFs in scattering media, which is based on the SeaThru image formation model, and suggest a suitable architecture for learning both scene information and medium parameters. We demonstrate the strength of our method using simulated and real-world scenes, correctly rendering novel photorealistic views underwater. Even more excitingly, we can render clear views of these scenes, removing the medium between the camera and the scene and reconstructing the appearance and depth of far objects, which are severely occluded by the medium. Our code and unique datasets are available on the project's website.
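The volumetric rendering the abstract refers to can be illustrated with the standard NeRF compositing sum along one ray. This is a sketch of that standard quadrature only, not the paper's scattering-media model; the function name `render_ray` and the sample values (a thin gray haze in front of an opaque red surface) are made up for illustration.

```python
import math

def render_ray(sigmas, colors, deltas):
    """Composite samples along a ray with the standard NeRF quadrature.

    sigmas: densities per sample, colors: RGB per sample,
    deltas: distance covered by each sample.
    Returns the pixel color and the per-sample weights.
    """
    transmittance = 1.0            # fraction of light that survives so far
    pixel = [0.0, 0.0, 0.0]
    weights = []
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)   # opacity of this segment
        weight = transmittance * alpha
        weights.append(weight)
        for c in range(3):
            pixel[c] += weight * color[c]
        transmittance *= 1.0 - alpha
    return pixel, weights

# Ten low-density gray samples (haze) followed by one opaque red sample.
sigmas = [0.1] * 10 + [1e6]
colors = [(0.5, 0.5, 0.5)] * 10 + [(1.0, 0.0, 0.0)]
deltas = [0.5] * 11
pixel, weights = render_ray(sigmas, colors, deltas)
```

Even in this plain form, the low-density samples in front of the surface both dim it and add their own color, which is the behavior a medium-aware model has to account for explicitly.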

FLSea: Underwater Visual-Inertial and Stereo-Vision Forward-Looking Datasets

Feb 24, 2023

Abstract: Visibility underwater is challenging, and degrades as the distance between the subject and camera increases, making vision tasks in the forward-looking direction more difficult. We have collected underwater forward-looking stereo-vision and visual-inertial image sets in the Mediterranean and Red Sea. To our knowledge, there are no other public datasets in the underwater environment acquired with this camera-sensor orientation published with ground truth. These datasets are critical for the development of several underwater applications, including obstacle avoidance, visual odometry, 3D tracking, Simultaneous Localization and Mapping (SLAM) and depth estimation. The stereo datasets include synchronized stereo images in dynamic underwater environments with objects of known size. The visual-inertial datasets contain monocular images and IMU measurements, aligned with millisecond-resolution timestamps, and objects of known size that were placed in the scene. Both sensor configurations allow for scale estimation, with the calibrated baseline in the stereo setup and the IMU in the visual-inertial setup. Ground-truth depth maps were created offline for both dataset types using photogrammetry. The ground truth is validated with multiple known measurements placed throughout the imaged environment. There are 5 stereo and 8 visual-inertial datasets in total, each containing thousands of images, with a range of different underwater visibility and ambient light conditions, natural and man-made structures and dynamic camera motions. The forward-looking orientation of the camera makes these datasets unique and ideal for testing underwater obstacle-avoidance algorithms and for navigation close to the seafloor in dynamic environments. With our datasets, we hope to encourage the advancement of autonomous functionality for underwater vehicles in dynamic and/or shallow water environments.
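To make concrete how a calibrated stereo baseline fixes metric scale, here is a small sketch of the textbook relation between disparity and depth for a rectified pinhole stereo pair. The function name and the numbers are hypothetical and do not come from the datasets.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Metric depth for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity_px

# Hypothetical rig: 1000 px focal length, 10 cm baseline, 20 px disparity.
depth_m = depth_from_disparity(20.0, 1000.0, 0.1)
```

Because the baseline is known in meters, the resulting depth is in meters as well; a monocular camera alone gives depth only up to an unknown scale, which is where the IMU or objects of known size come in.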

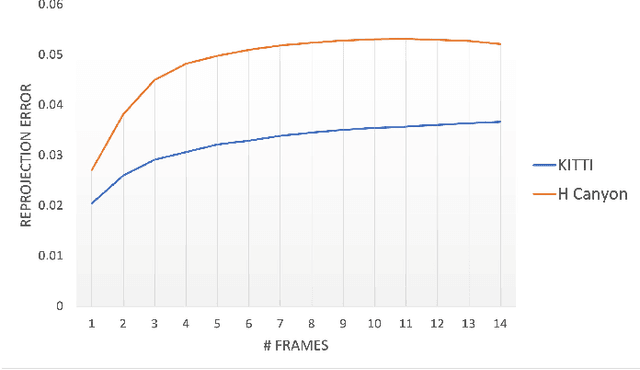

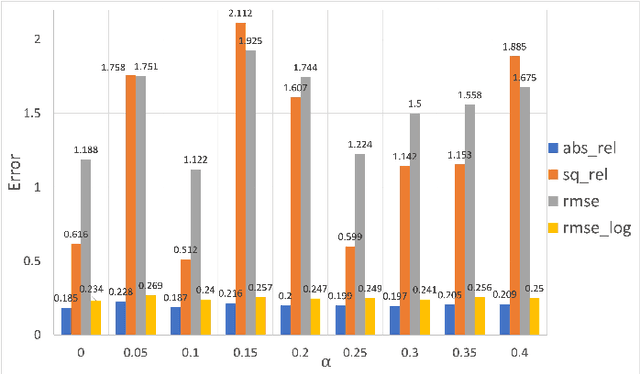

Self-Supervised Monocular Depth Underwater

Oct 06, 2022

Abstract: Depth estimation is critical for any robotic system. In recent years, depth estimation from monocular images has improved greatly; however, in the underwater environment, results still lag behind because of appearance changes caused by the medium. So far, little effort has been invested in overcoming this. Moreover, the use of high-resolution depth sensors is more limited underwater, which makes generating ground truth for learning methods another enormous obstacle. So far, unsupervised methods that tried to solve this have achieved very limited success, as they relied on domain transfer from datasets in air. We suggest training using subsequent frames, self-supervised by a reprojection loss, as was demonstrated successfully above water. We suggest several additions to the self-supervised framework to cope with the underwater environment and achieve state-of-the-art results on a challenging forward-looking underwater dataset.
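The idea behind a reprojection loss can be sketched as follows: a pixel in the target frame is lifted to 3D with its predicted depth, moved by the relative camera pose, projected into the neighboring frame, and the two intensities are compared. The code below is a simplified illustration under a pinhole model with nearest-neighbor sampling and grayscale images stored as nested lists. All names and values are assumptions, and the underwater-specific additions mentioned in the abstract are not shown.

```python
def reproject(u, v, depth, K, R, t):
    """Map pixel (u, v) with the given depth from the target to the source view.

    K = (fx, fy, cx, cy); R is a 3x3 rotation and t a translation taking
    target-frame points into the source frame.
    """
    fx, fy, cx, cy = K
    # Back-project to a 3D point in the target camera frame.
    p = ((u - cx) / fx * depth, (v - cy) / fy * depth, depth)
    # Rigid transform into the source camera frame.
    q = [sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]
    # Project onto the source image plane (assumes q[2] > 0).
    return fx * q[0] / q[2] + cx, fy * q[1] / q[2] + cy

def photometric_l1(target, source, depth, K, R, t):
    """Mean absolute difference between target pixels and the warped source."""
    h, w = len(target), len(target[0])
    total, count = 0.0, 0
    for v in range(h):
        for u in range(w):
            us, vs = reproject(u, v, depth[v][u], K, R, t)
            ui, vi = int(round(us)), int(round(vs))
            if 0 <= ui < w and 0 <= vi < h:      # skip pixels that leave the frame
                total += abs(target[v][u] - source[vi][ui])
                count += 1
    return total / count if count else 0.0

K = (500.0, 500.0, 160.0, 120.0)
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# A 10 cm sideways shift at 2 m depth moves the pixel by fx * 0.1 / 2 = 25 px.
shifted = reproject(100.0, 80.0, 2.0, K, identity, (0.1, 0.0, 0.0))
```

When depth and pose are correct and the scene is static, the warped source matches the target and the loss is small, so minimizing it can supervise depth without ground truth. Underwater, the medium changes a point's appearance with range, which breaks the brightness-constancy assumption this loss relies on.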

NAN: Noise-Aware NeRFs for Burst-Denoising

Apr 12, 2022

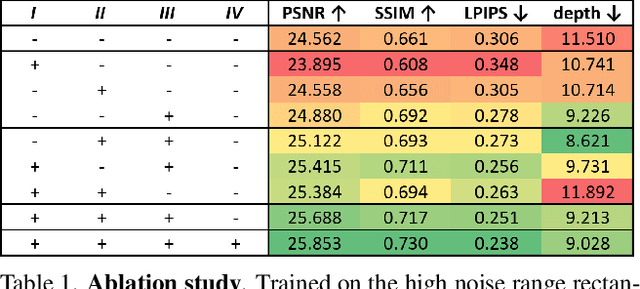

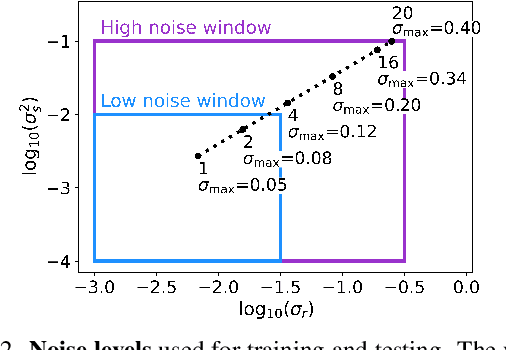

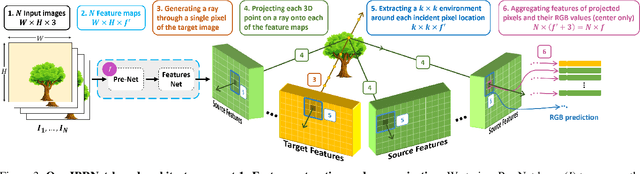

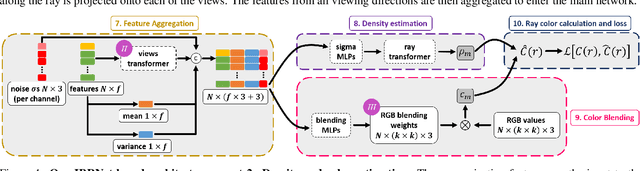

Abstract: Burst denoising is now more relevant than ever, as computational photography helps overcome sensitivity issues inherent in mobile phones and small cameras. A major challenge in burst-denoising is in coping with pixel misalignment, which was so far handled with rather simplistic assumptions of simple motion, or the ability to align in pre-processing. Such assumptions are not realistic in the presence of large motion and high levels of noise. We show that Neural Radiance Fields (NeRFs), originally suggested for physics-based novel-view rendering, can serve as a powerful framework for burst denoising. NeRFs have an inherent capability of handling noise as they integrate information from multiple images, but they are limited in doing so, mainly since they build on pixel-wise operations which are suitable to ideal imaging conditions. Our approach, termed NAN, leverages inter-view and spatial information in NeRFs to better deal with noise. It achieves state-of-the-art results in burst denoising and is especially successful in coping with large movement and occlusions, under very high levels of noise. With the rapid advances in accelerating NeRFs, it could provide a powerful platform for denoising in challenging environments.

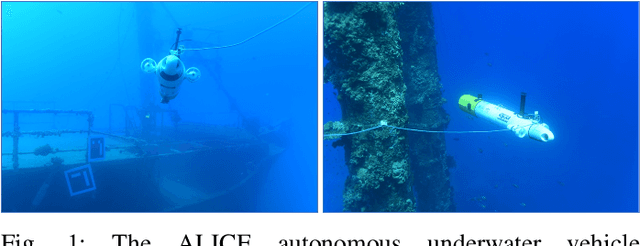

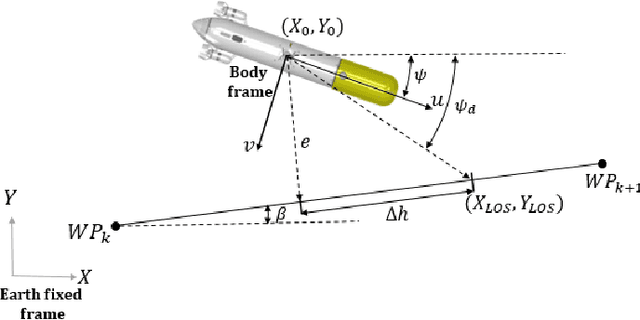

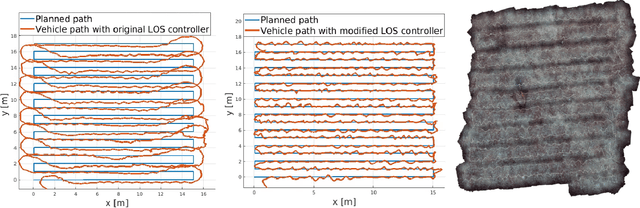

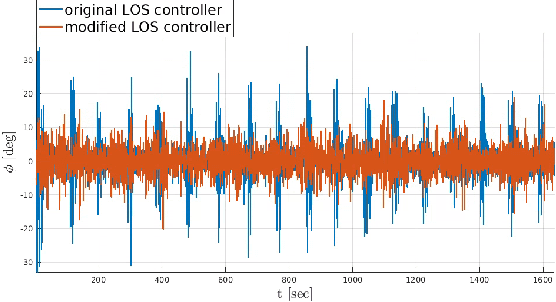

On the modification of the SPARUS II AUV for close range imaging survey platform

Nov 17, 2021

Abstract: Based on the need for high-resolution underwater visual surveys, this study presents the adaptation of an existing SPARUS II autonomous underwater vehicle (AUV) into a fully hovering AUV capable of performing autonomous, close-range imaging survey missions. This paper focuses on the enhancement of the AUV's maneuvering capability (enabling improved maneuvering control), the implementation of a state-of-the-art thruster allocation algorithm (allowing optimal thruster allocation and thruster redundancy), and the development of an upgraded path-following controller to facilitate the precise and delicate motions necessary for high-resolution imaging missions. To facilitate the vehicle's adaptation, a dynamic model is developed. The calibration process of the dynamic model coefficients, which were initially obtained using well-accepted formulas, by computational fluid dynamics and in real sea experiments is presented. The in-house development of a pressure-resistant imaging system is also presented. This system, which includes a stereo camera and high-power lighting strobes, was developed and fitted as a dedicated AUV payload. Finally, the performance of the platform is demonstrated in an actual seabed visual survey mission.

Underwater Single Image Color Restoration Using Haze-Lines and a New Quantitative Dataset

Nov 06, 2018

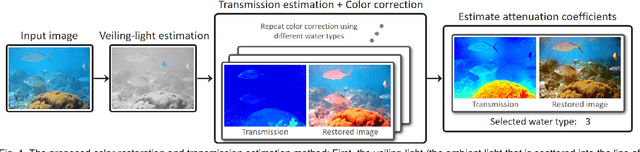

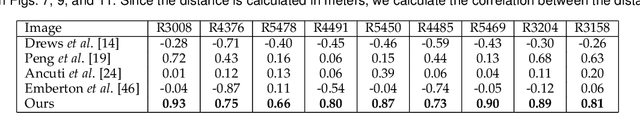

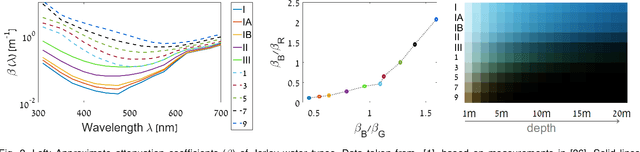

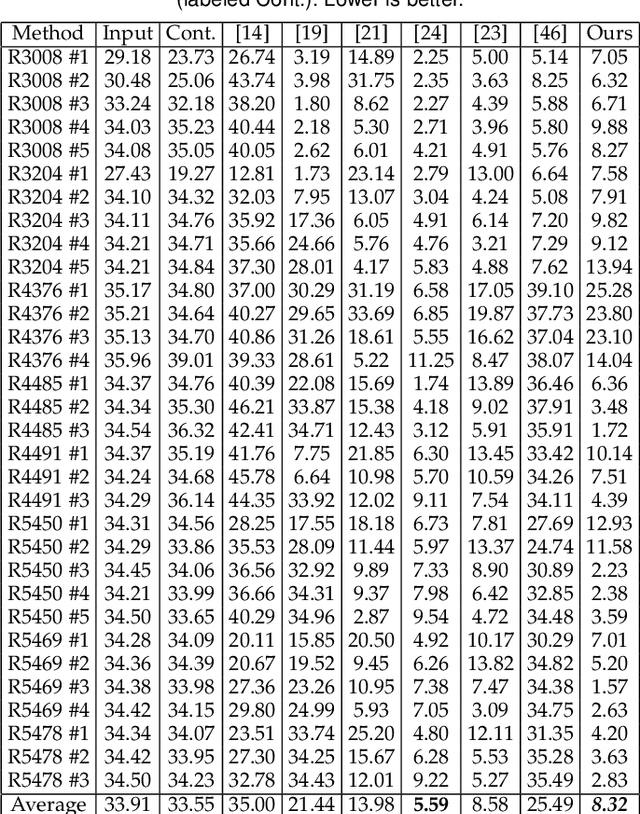

Abstract: Underwater images suffer from color distortion and low contrast, because light is attenuated while it propagates through water. Attenuation under water varies with wavelength, unlike terrestrial images where attenuation is assumed to be spectrally uniform. The attenuation depends both on the water body and the 3D structure of the scene, making color restoration difficult. Unlike existing single underwater image enhancement techniques, our method takes into account multiple spectral profiles of different water types. By estimating just two additional global parameters: the attenuation ratios of the blue-red and blue-green color channels, the problem is reduced to single image dehazing, where all color channels have the same attenuation coefficients. Since the water type is unknown, we evaluate different parameters out of an existing library of water types. Each type leads to a different restored image and the best result is automatically chosen based on color distribution. We collected a dataset of images taken in different locations with varying water properties, showing color charts in the scenes. Moreover, to obtain ground truth, the 3D structure of the scene was calculated based on stereo imaging. This dataset enables a quantitative evaluation of restoration algorithms on natural images and shows the advantage of our method.
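One way to see why two attenuation ratios are enough is to assume each channel's transmission decays exponentially with range, t_c = exp(-beta_c * z). That form is an assumption here, since the abstract does not state it. Under it, the red transmission follows from the blue transmission and the ratio of the two coefficients alone, without knowing the range. The sketch below checks this identity with made-up coefficients; the function names are illustrative, and this is not the haze-lines algorithm itself.

```python
import math

def transmission(beta, z):
    """Fraction of light surviving z meters with attenuation coefficient beta."""
    return math.exp(-beta * z)

def channel_from_blue(t_blue, beta_channel, beta_blue):
    """Recover another channel's transmission from the blue one and a ratio."""
    return t_blue ** (beta_channel / beta_blue)

# Made-up attenuation coefficients (1/m) and range (m), for illustration only.
beta_red, beta_green, beta_blue = 0.40, 0.12, 0.08
z = 4.0

t_blue = transmission(beta_blue, z)
t_red = channel_from_blue(t_blue, beta_red, beta_blue)
t_green = channel_from_blue(t_blue, beta_green, beta_blue)
```

Once the three channels are tied together this way, a single per-pixel transmission map remains unknown, which is the setting single-image dehazing methods already address.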
