Taekyun Lee

Fine-Tuning Masked Diffusion for Provable Self-Correction

Oct 01, 2025

Abstract: A natural desideratum for generative models is self-correction--detecting and revising low-quality tokens at inference. While Masked Diffusion Models (MDMs) have emerged as a promising approach for generative modeling in discrete spaces, their capacity for self-correction remains poorly understood. Prior attempts to incorporate self-correction into MDMs either require overhauling MDM architectures or training, or rely on imprecise proxies for token quality, limiting their applicability. Motivated by this, we introduce PRISM--Plug-in Remasking for Inference-time Self-correction of Masked Diffusions--a lightweight, model-agnostic approach that applies to any pretrained MDM. Theoretically, PRISM defines a self-correction loss that provably learns per-token quality scores, without RL or a verifier. These quality scores are computed in the same forward pass as the MDM and are used to detect low-quality tokens. Empirically, PRISM advances MDM inference across domains and scales: Sudoku; unconditional text (170M); and code with LLaDA (8B).
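As a rough illustration of how per-token quality scores could drive remasking at inference, here is a minimal sketch. It is not the PRISM implementation: the function name `remask_low_quality`, the fixed budget `k`, the "lowest score first" rule, and the placeholder `MASK_ID` are all assumptions made for this example, and the scores are hard-coded rather than produced by a model.

```python
from typing import List

MASK_ID = -1  # hypothetical mask token id, chosen for illustration only


def remask_low_quality(tokens: List[int], quality: List[float], k: int,
                       mask_id: int = MASK_ID) -> List[int]:
    """Return a copy of `tokens` with the k lowest-scoring unmasked positions remasked."""
    if len(tokens) != len(quality):
        raise ValueError("tokens and quality must have the same length")
    # Only positions that currently hold a real token are candidates.
    candidates = [i for i, t in enumerate(tokens) if t != mask_id]
    # Sort candidates by ascending quality score; ties keep left-to-right order.
    worst = sorted(candidates, key=lambda i: quality[i])[:max(k, 0)]
    out = list(tokens)
    for i in worst:
        out[i] = mask_id
    return out


# Toy sequence: position 2 is already masked; positions 4 and 1 score lowest.
tokens = [5, 9, MASK_ID, 3, 7]
quality = [0.9, 0.2, 0.0, 0.6, 0.1]
print(remask_low_quality(tokens, quality, k=2))  # [5, -1, -1, 3, -1]
```

In an actual sampler the remasked positions would be passed back to the MDM for another denoising step; how many tokens to remask, and when, is a design choice the abstract does not specify.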

Satellite Selection for In-Band Coexistence of Dense LEO Networks

Mar 19, 2025

Abstract: We study spectrum sharing between two dense low Earth orbit (LEO) satellite constellations, an incumbent primary system and a secondary system that must respect interference protection constraints on the primary system. In particular, we propose a secondary satellite selection framework and algorithm that maximizes capacity while guaranteeing that the time-average interference and absolute interference inflicted upon each primary ground user never exceed specified thresholds. We solve this NP-hard constrained combinatorial satellite selection problem through Lagrangian relaxation, decomposing it into simpler problems that can then be solved through subgradient methods. A high-fidelity simulation is developed based on public FCC filings and technical specifications of the Starlink and Kuiper systems. We use this case study to illustrate the effectiveness of our approach and to show that explicit protection is indeed necessary for healthy coexistence. We further demonstrate that deep learning models can be used to predict the primary satellite system associations, which helps the secondary system avoid inflicting excessive interference and maximize its own capacity.

Self-Supervised Graph Contrastive Pretraining for Device-level Integrated Circuits

Feb 13, 2025

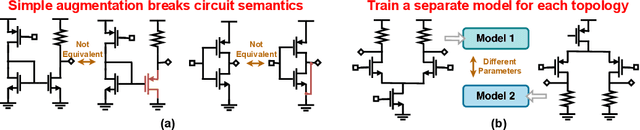

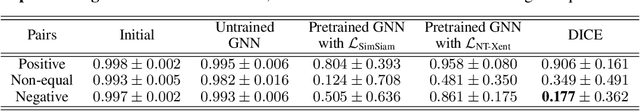

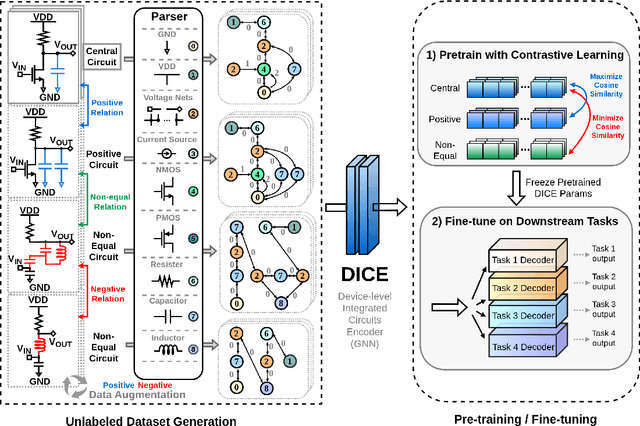

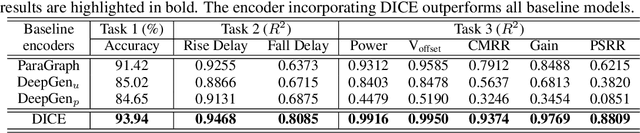

Abstract: Self-supervised graph representation learning has driven significant advancements in domains such as social network analysis, molecular design, and electronics design automation (EDA). However, prior work in EDA has mainly focused on the representation of gate-level digital circuits, failing to capture analog and mixed-signal circuits. To address this gap, we introduce DICE: Device-level Integrated Circuits Encoder, the first self-supervised pretrained graph neural network (GNN) model for any circuit expressed at the device level. DICE is a message-passing neural network (MPNN) trained through graph contrastive learning, and its pretraining process is simulation-free, incorporating two novel data augmentation techniques. Experimental results demonstrate that DICE achieves substantial performance gains across three downstream tasks, underscoring its effectiveness for both analog and digital circuits.

Importance Sampling via Score-based Generative Models

Feb 07, 2025

Abstract: Importance sampling, which involves sampling from a probability density function (PDF) proportional to the product of an importance weight function and a base PDF, is a powerful technique with applications in variance reduction, biased or customized sampling, data augmentation, and beyond. Inspired by the growing availability of score-based generative models (SGMs), we propose an entirely training-free importance sampling framework that relies solely on an SGM for the base PDF. Our key innovation is realizing the importance sampling process as a backward diffusion process, expressed in terms of the score function of the base PDF and the specified importance weight function--both readily available--eliminating the need for any additional training. We conduct a thorough analysis demonstrating the method's scalability and effectiveness across diverse datasets and tasks, including importance sampling for industrial and natural images with neural importance weight functions. The training-free aspect of our method is particularly compelling in real-world scenarios where a single base distribution underlies multiple biased sampling tasks, each requiring a different importance weight function. To the best of our knowledge, our approach is the first importance sampling framework to achieve this.
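The target density described in the first sentence can be written out explicitly. The symbols below ($p$ for the base PDF, $w$ for the importance weight function, $\tilde{p}$ for the target, $Z$ for the normalizing constant) are notation assumed here for illustration, not taken from the paper, and $w$ is assumed positive and differentiable:

```latex
\tilde{p}(x) = \frac{w(x)\,p(x)}{Z},
\qquad
Z = \int w(x)\,p(x)\,dx,
\qquad
\nabla_x \log \tilde{p}(x) = \nabla_x \log w(x) + \nabla_x \log p(x).
```

Because $Z$ does not depend on $x$, it drops out of the score, so at zero noise the target score is the base score plus the gradient of the log-weight. This identity holds only for the clean (un-noised) densities; how the corresponding term is obtained at intermediate diffusion times is the substance of the method and is not specified in the abstract.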

Generating High Dimensional User-Specific Wireless Channels using Diffusion Models

Sep 05, 2024

Abstract: Deep neural network (DNN)-based algorithms are emerging as an important tool for many physical and MAC layer functions in future wireless communication systems, including for large multi-antenna channels. However, training such models typically requires a large dataset of high-dimensional channel measurements, which are very difficult and expensive to obtain. This paper introduces a novel method for generating synthetic wireless channel data using diffusion-based models to produce user-specific channels that accurately reflect real-world wireless environments. Our approach employs a conditional denoising diffusion implicit model (cDDIM) framework, effectively capturing the relationship between user location and multi-antenna channel characteristics. We generate synthetic high-fidelity channel samples using user positions as conditional inputs, creating larger augmented datasets to overcome measurement scarcity. The utility of this method is demonstrated by training various downstream tasks such as channel compression and beam alignment. Our approach significantly improves over prior methods, such as adding noise or using generative adversarial networks (GANs), especially in scenarios with limited initial measurements.
