Jeffrey G. Andrews

Self-Nomination: Deep Learning for Decentralized CSI Feedback Reduction in MU-MIMO Systems

Apr 23, 2025

Abstract: This paper introduces a novel deep learning-based user-side feedback reduction framework, termed self-nomination. The goal of self-nomination is to reduce the number of users (UEs) feeding back channel state information (CSI) to the base station (BS) by letting each UE decide whether to feed back based on its estimated likelihood of being scheduled and its potential contribution to precoding in a multiuser MIMO (MU-MIMO) downlink. Unlike SNR- or SINR-based thresholding methods, the proposed approach uses rich spatial channel statistics and learns nontrivial correlation effects that affect eventual MU-MIMO scheduling decisions. To train the self-nomination network under an average feedback constraint, we propose two strategies: one based on direct optimization with gradient approximations, and another using policy gradient-based optimization with a stochastic Bernoulli policy to handle non-differentiable scheduling. The framework also supports proportional-fair scheduling by incorporating dynamic user weights. Numerical results confirm that the proposed self-nomination method significantly reduces CSI feedback overhead. Compared to baseline feedback methods, self-nomination can reduce feedback by as much as 65%, saving not only bandwidth but also allowing many UEs to avoid feedback altogether (and thus potentially enter a sleep mode). Self-nomination achieves these savings with negligible reduction in sum-rate or fairness.
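To make the policy-gradient strategy concrete, here is a minimal sketch of training a stochastic Bernoulli feedback policy with REINFORCE under a penalized feedback budget. Everything in it is a toy assumption, not the paper's network or scheduler: each UE sees a single scalar "gain" drawn uniformly from [0, 1], the policy is a two-parameter logistic model (`w`, `b`), the "scheduler" simply serves the strongest UE that fed back, and the average feedback constraint is handled by a fixed, hypothetical Lagrange multiplier `lam`. The function names `feedback_prob` and `train_self_nomination` are illustrative.

```python
import math
import random


def sigmoid(z: float) -> float:
    # Clamp the logit so math.exp never overflows.
    z = max(-60.0, min(60.0, z))
    return 1.0 / (1.0 + math.exp(-z))


def feedback_prob(w: float, b: float, gain: float) -> float:
    """Probability that a UE with normalized gain `gain` self-nominates (toy logistic policy)."""
    return sigmoid(w * (gain - 0.5) + b)


def train_self_nomination(num_users=8, steps=4000, lam=0.4, lr=0.1, seed=0):
    rng = random.Random(seed)
    w, b, baseline = 0.0, 0.0, 0.0
    for _ in range(steps):
        # Hypothetical i.i.d. uniform gains stand in for the UEs' channel statistics.
        gains = [rng.random() for _ in range(num_users)]
        probs = [feedback_prob(w, b, g) for g in gains]
        # Stochastic Bernoulli policy: each UE independently decides whether to feed back.
        acts = [1 if rng.random() < p else 0 for p in probs]
        # Toy (non-differentiable) scheduler: serve the strongest UE that fed back.
        served = max((g for g, a in zip(gains, acts) if a), default=0.0)
        # Fixed multiplier `lam` penalizes the fraction of UEs that fed back.
        reward = served - lam * sum(acts) / num_users
        advantage = reward - baseline
        baseline += 0.05 * (reward - baseline)  # running-mean baseline reduces variance
        # REINFORCE: d/d(logit) of log Bernoulli(a; p) equals (a - p).
        grad_w = sum((a - p) * (g - 0.5) for a, p, g in zip(acts, probs, gains))
        grad_b = sum(a - p for a, p in zip(acts, probs))
        w += lr * advantage * grad_w
        b += lr * advantage * grad_b
    return w, b
```

After training, strong UEs should be more likely to self-nominate than weak ones (`w > 0`), and the average feedback probability should fall below the untrained value of 0.5, because feeding back a weak UE only costs budget. The REINFORCE estimator is used here precisely because the scheduling step has no useful gradient.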

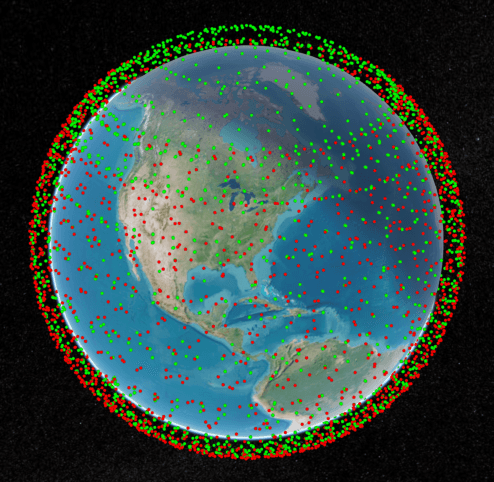

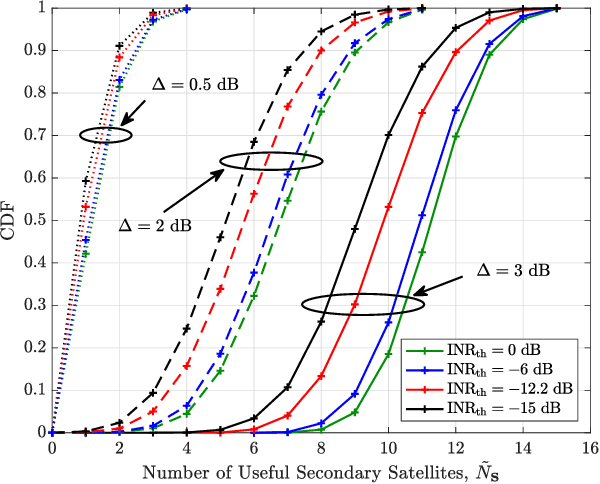

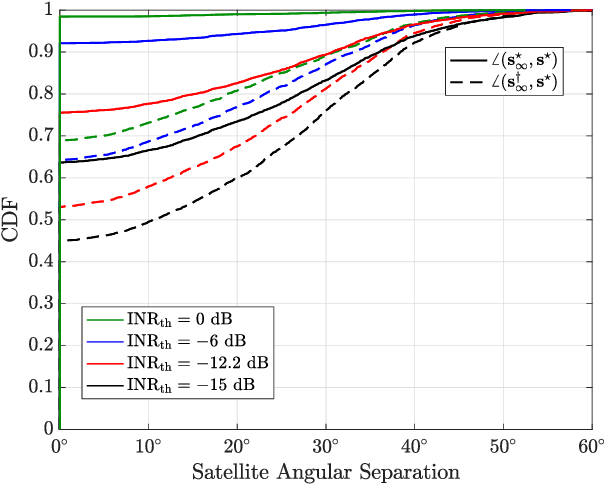

Satellite Selection for In-Band Coexistence of Dense LEO Networks

Mar 19, 2025

Abstract: We study spectrum sharing between two dense low-earth orbit (LEO) satellite constellations: an incumbent primary system and a secondary system that must respect interference protection constraints on the primary system. In particular, we propose a secondary satellite selection framework and algorithm that maximizes capacity while guaranteeing that the time-average interference and the absolute interference inflicted upon each primary ground user never exceed specified thresholds. We solve this NP-hard, constrained, combinatorial satellite selection problem using Lagrangian relaxation, which decomposes it into simpler problems that can then be solved with subgradient methods. A high-fidelity simulation is developed based on public FCC filings and technical specifications of the Starlink and Kuiper systems. We use this case study to illustrate the effectiveness of our approach and to show that explicit protection is indeed necessary for healthy coexistence. We further demonstrate that deep learning models can be used to predict the primary satellite system's associations, which helps the secondary system avoid inflicting excessive interference and maximize its own capacity.
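The Lagrangian relaxation idea can be sketched on a toy instance. Assume (hypothetically) that each secondary user picks one of a few candidate satellites, each choice has a known capacity and a known interference contribution at a single primary ground user, and there is one aggregate interference cap. Dualizing that cap with a multiplier `lam` makes the problem decouple per user, and `lam` is updated with a projected subgradient step. This handles only one instantaneous constraint, not the per-user time-average and absolute constraints of the paper, and `lagrangian_satellite_selection` is an illustrative name.

```python
def lagrangian_satellite_selection(capacity, interference, threshold, iters=50, step0=0.5):
    """Pick one candidate satellite per secondary user under one aggregate interference cap.

    capacity[u][s], interference[u][s]: hypothetical per-user, per-candidate values.
    Returns (best feasible choice found, its total capacity, final multiplier).
    """
    lam = 0.0
    best_choice, best_cap = None, float("-inf")
    for k in range(iters):
        # For a fixed lam the relaxed problem decouples: each user maximizes c - lam * i.
        choice = []
        for caps, ints in zip(capacity, interference):
            scores = [c - lam * i for c, i in zip(caps, ints)]
            choice.append(scores.index(max(scores)))
        total_int = sum(interference[u][s] for u, s in enumerate(choice))
        total_cap = sum(capacity[u][s] for u, s in enumerate(choice))
        # Relaxed solutions may violate the cap, so keep the best feasible one seen so far.
        if total_int <= threshold and total_cap > best_cap:
            best_choice, best_cap = choice, total_cap
        # Projected subgradient step on the dual variable, with a diminishing step size.
        lam = max(0.0, lam + step0 / (k + 1) * (total_int - threshold))
    return best_choice, best_cap, lam


choice, cap, lam = lagrangian_satellite_selection(
    capacity=[[5.0, 3.0], [4.0, 3.5], [6.0, 2.0]],
    interference=[[2.0, 0.5], [1.5, 0.2], [3.0, 0.4]],
    threshold=2.5,
)
```

On this toy instance the sketch returns `[1, 1, 1]` with capacity 8.5, while brute force finds the feasible selection `[1, 0, 1]` with capacity 9.0. That gap is typical of Lagrangian relaxation for combinatorial problems, which is why practical schemes usually pair the dual updates with a primal repair or refinement step.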

Generating High Dimensional User-Specific Wireless Channels using Diffusion Models

Sep 05, 2024

Abstract: Deep neural network (DNN)-based algorithms are emerging as an important tool for many physical and MAC layer functions in future wireless communication systems, including for large multi-antenna channels. However, training such models typically requires a large dataset of high-dimensional channel measurements, which are very difficult and expensive to obtain. This paper introduces a novel method for generating synthetic wireless channel data using diffusion-based models to produce user-specific channels that accurately reflect real-world wireless environments. Our approach employs a conditional denoising diffusion implicit model (cDDIM) framework, effectively capturing the relationship between user location and multi-antenna channel characteristics. We generate synthetic high-fidelity channel samples using user positions as conditional inputs, creating larger augmented datasets to overcome measurement scarcity. The utility of this method is demonstrated through its efficacy in training models for various downstream tasks, such as channel compression and beam alignment. Our approach significantly improves over prior methods, such as adding noise or using generative adversarial networks (GANs), especially in scenarios with limited initial measurements.
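For readers unfamiliar with DDIM, the sketch below shows the standard deterministic (eta = 0) DDIM sampling update with a conditioning input. The noise predictor `eps_model(x, t, position)` is a hypothetical placeholder for the trained, position-conditioned network. The channel is treated as a real vector (a complex channel could be stacked as real and imaginary parts), and the `alpha_bars` schedule is an arbitrary illustrative choice.

```python
import math


def ddim_step(x_t, eps_pred, alpha_bar_t, alpha_bar_prev):
    """One deterministic DDIM update (eta = 0) from step t to step t-1."""
    # Estimate the clean sample implied by the predicted noise.
    x0_pred = [
        (x - math.sqrt(1.0 - alpha_bar_t) * e) / math.sqrt(alpha_bar_t)
        for x, e in zip(x_t, eps_pred)
    ]
    # Move that estimate to the (lower) noise level of step t-1.
    return [
        math.sqrt(alpha_bar_prev) * x0 + math.sqrt(1.0 - alpha_bar_prev) * e
        for x0, e in zip(x0_pred, eps_pred)
    ]


def ddim_sample(x_T, alpha_bars, eps_model, position):
    """Run DDIM from the noisiest step down to alpha_bars[0] == 1.0 (clean sample)."""
    x = list(x_T)
    for t in range(len(alpha_bars) - 1, 0, -1):
        # Conditioning: the (hypothetical) noise predictor also sees the user position.
        eps = eps_model(x, t, position)
        x = ddim_step(x, eps, alpha_bars[t], alpha_bars[t - 1])
    return x
```

A quick sanity check: if `x_T` is built as `sqrt(alpha_bars[-1]) * x0 + sqrt(1 - alpha_bars[-1]) * eps` and the predictor is an oracle that always returns that same `eps`, every DDIM step stays on the same trajectory and the sampler returns `x0` up to rounding. With a learned predictor, each new position yields a new synthetic channel sample.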

Site-Specific Beam Alignment in 6G via Deep Learning

Mar 24, 2024

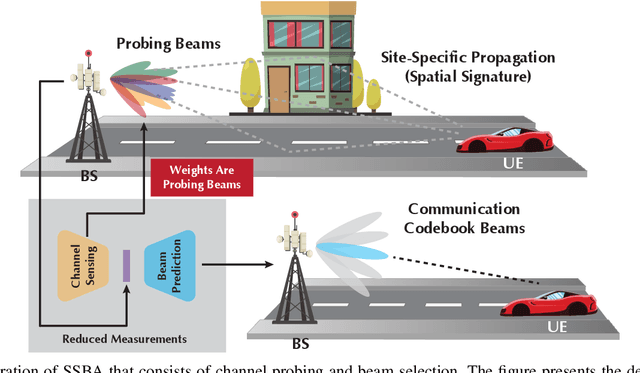

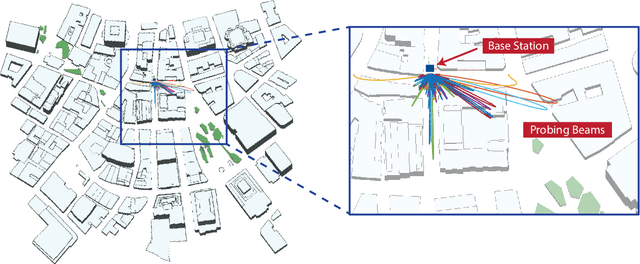

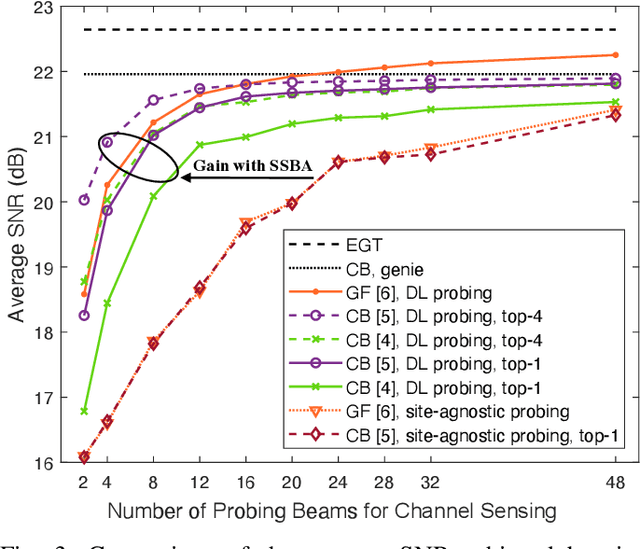

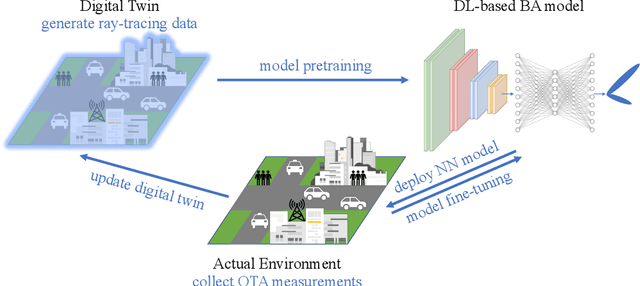

Abstract: Beam alignment (BA) in modern millimeter wave standards such as 5G NR and WiGig (802.11ay) is based on exhaustive and/or hierarchical beam searches over pre-defined codebooks of wide and narrow beams. This approach is slow and bandwidth/power-intensive, and is a considerable hindrance to the wide deployment of millimeter wave bands. A new approach is needed as we move towards 6G. BA is a promising use case for deep learning (DL) in the 6G air interface, offering the possibility of automated custom tuning of the BA procedure for each cell based on its unique propagation environment and user equipment (UE) location patterns. We overview and advocate for such an approach in this paper, which we term site-specific beam alignment (SSBA). SSBA largely eliminates wasteful searches and allows UEs to be found much more quickly and reliably, without many of the drawbacks of other machine learning-aided approaches. We first overview and demonstrate new results on SSBA, then identify the key open challenges facing SSBA.
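To show why exhaustive search is costly, here is a sketch of the baseline the abstract criticizes (not SSBA itself): an exhaustive sweep over a DFT codebook, which spends one measurement per beam. It assumes a noiseless single-path channel and a half-wavelength uniform linear array (ULA); the function names are illustrative.

```python
import cmath
import math


def ula_response(num_ant, u):
    """Half-wavelength ULA response for direction cosine u = sin(theta)."""
    return [cmath.exp(1j * math.pi * n * u) for n in range(num_ant)]


def dft_codebook(num_ant):
    """DFT beams steered to u_k = 2k/N - 1, each normalized to unit power."""
    scale = 1.0 / math.sqrt(num_ant)
    return [
        [scale * v for v in ula_response(num_ant, 2.0 * k / num_ant - 1.0)]
        for k in range(num_ant)
    ]


def exhaustive_beam_sweep(h, codebook):
    """Measure every beam; return (best index, beamforming gains, number of measurements)."""
    gains = [abs(sum(w.conjugate() * x for w, x in zip(beam, h))) ** 2 for beam in codebook]
    best = max(range(len(gains)), key=gains.__getitem__)
    return best, gains, len(codebook)


N = 16
h = ula_response(N, 2.0 * 5 / N - 1.0)  # single path aligned with beam 5
best, gains, n_meas = exhaustive_beam_sweep(h, dft_codebook(N))
```

The sweep finds beam 5 with gain `N`, but it needs `N` measurements, and that cost grows with array size. A site-specific learned procedure aims to probe far fewer beams by exploiting the cell's propagation environment and UE location patterns.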

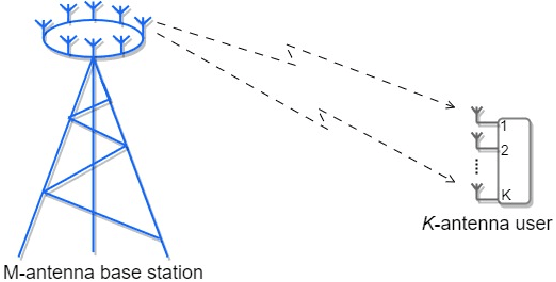

End-to-End Deep Learning for TDD MIMO Systems in the 6G Upper Midbands

Feb 01, 2024

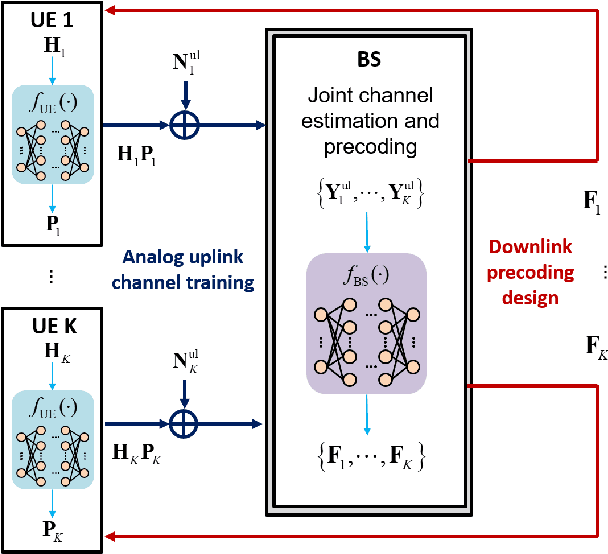

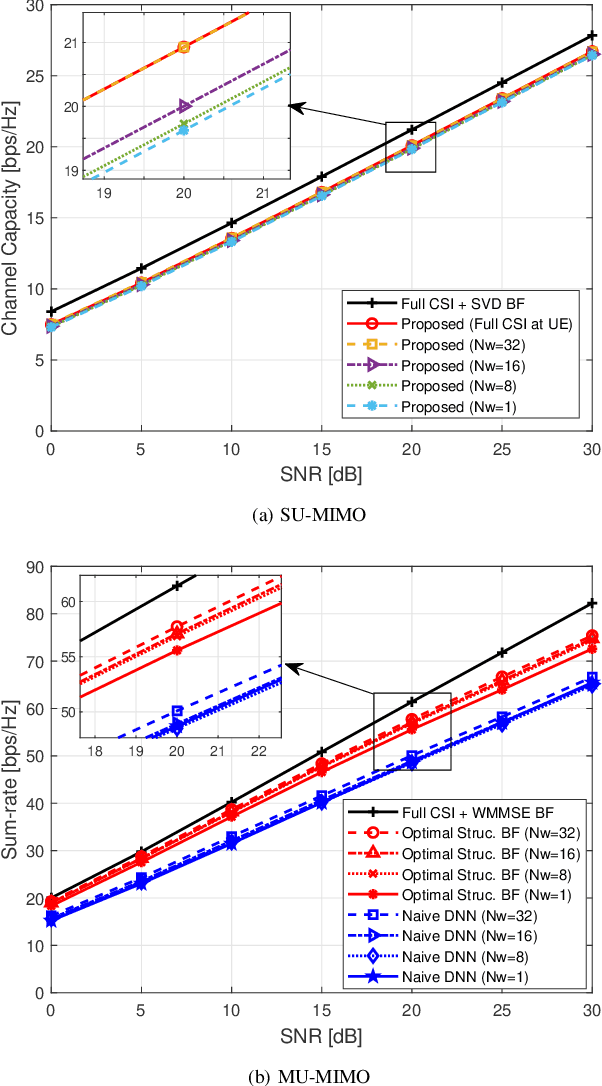

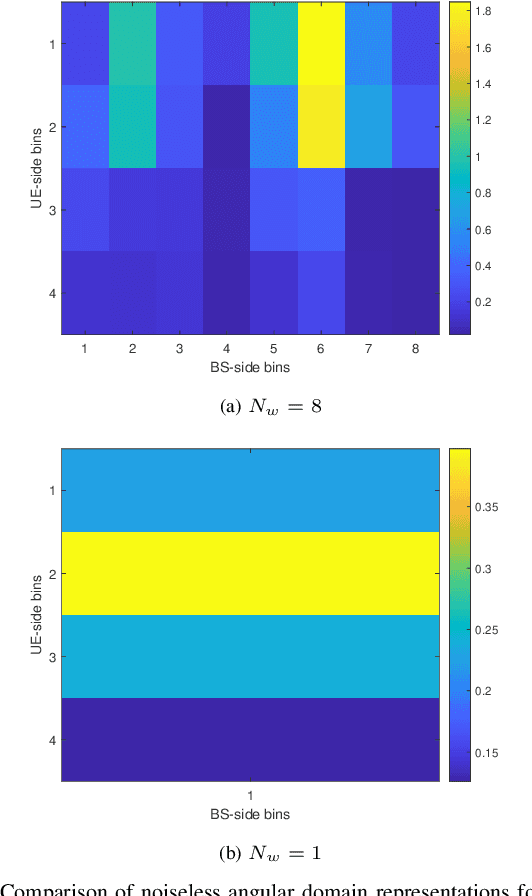

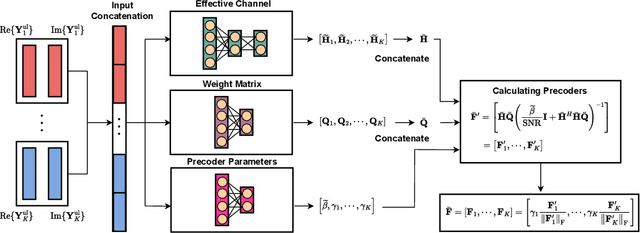

Abstract: This paper proposes and analyzes novel deep learning methods for downlink (DL) single-user multiple-input multiple-output (SU-MIMO) and multi-user MIMO (MU-MIMO) systems operating in time division duplex (TDD) mode. A motivating application is the 6G upper midbands (7-24 GHz), where the base station (BS) antenna arrays are large, user equipment (UE) array sizes are moderate, and theoretically optimal approaches are practically infeasible for several reasons. To deal with uplink (UL) pilot overhead and low signal power issues, we introduce the channel-adaptive pilot, as part of an analog channel state information feedback mechanism. Deep neural network (DNN)-generated pilots are used to linearly transform the UL channel matrix into lower-dimensional latent vectors. Meanwhile, the BS employs a second DNN that processes the received UL pilots to directly generate near-optimal DL precoders. Training is end-to-end, which exploits synergies between the two DNNs. For MU-MIMO precoding, we propose a DNN structure inspired by theoretically optimum linear precoding. The proposed methods are evaluated against genie-aided upper bounds and conventional approaches, using realistic upper midband datasets. Numerical results demonstrate the potential of our approach to achieve significantly increased sum-rate, particularly at moderate to high signal-to-noise ratio (SNR) and when UL pilot overhead is constrained.
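The "linear transform into lower-dimensional latent vectors" can be illustrated with a simple signal model: if the UE transmits `L` pilot symbols shaped by a pilot matrix `P` (`N_ue x L`, with `L < N_ue`), the BS observes `Y = H P` (`N_bs x L`) instead of a full `N_bs x N_ue` channel sounding. In this sketch, `P` is a fixed, hypothetical stand-in for the DNN-generated pilots, noise is omitted, and the orientation convention (`H` as `N_bs x N_ue`) is an assumption that may differ from the paper's.

```python
def matmul(a, b):
    """Plain complex matrix product of nested lists (rows x cols)."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def received_pilots(h_ul, pilots):
    """BS observation Y = H P (noise omitted).

    h_ul:   N_bs x N_ue uplink channel.
    pilots: N_ue x L pilot matrix with L < N_ue (hypothetical stand-in for the DNN output).
    Returns Y, an N_bs x L matrix: one latent column per pilot symbol.
    """
    return matmul(h_ul, pilots)


H = [[1, 1j], [2, 0], [0, 1], [1j, 1]]  # 4 BS antennas x 2 UE antennas
P = [[0.6], [0.8j]]                     # a single pilot symbol (L = 1)
Y = received_pilots(H, P)
```

Here one pilot symbol replaces two, and the BS-side network would map `Y` directly to a precoder. Because `P` can depend on the UE's channel knowledge, a well-chosen `P` can retain the directions that matter for precoding despite the compression.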

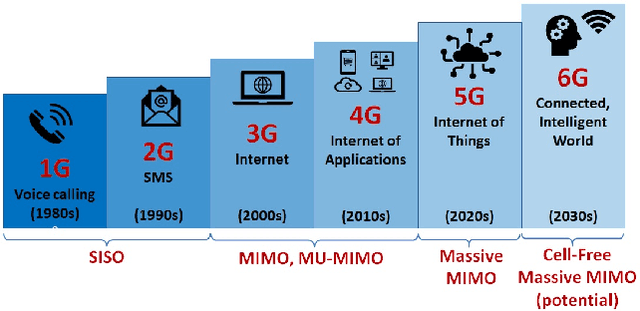

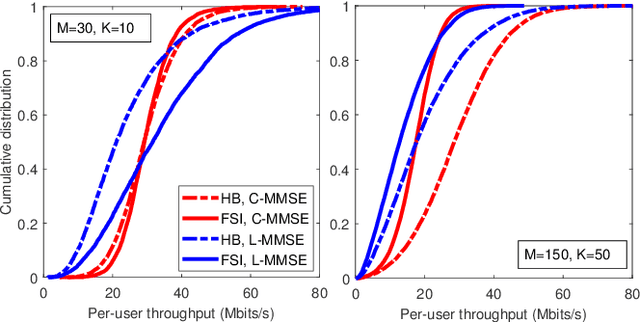

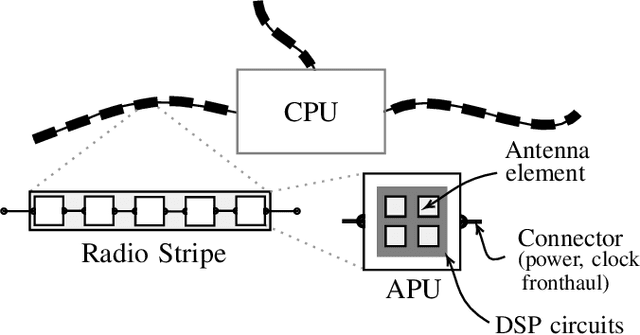

Ultra-Dense Cell-Free Massive MIMO for 6G: Technical Overview and Open Questions

Jan 08, 2024

Abstract: Ultra-dense cell-free massive multiple-input multiple-output (CF-MMIMO) has emerged as a promising technology expected to meet the future ubiquitous connectivity requirements and ever-growing data traffic demands in 6G. This article provides a contemporary overview of ultra-dense CF-MMIMO networks and addresses important unresolved questions on their future deployment. We first present a comprehensive survey of state-of-the-art research on CF-MMIMO and ultra-dense networks. Then, we discuss the key challenges of CF-MMIMO under ultra-dense scenarios, such as low-complexity architecture and processing, low-complexity/scalable resource allocation, fronthaul limitation, massive access, synchronization, and channel acquisition. Finally, we answer key open questions, considering different design comparisons and discussing suitable methods for dealing with the key challenges of ultra-dense CF-MMIMO. The discussion aims to provide a valuable roadmap for interesting future research directions in this area, facilitating the development of CF-MMIMO for 6G.

Feasibility Analysis of In-Band Coexistence in Dense LEO Satellite Communication Systems

Dec 01, 2023

Abstract: This work provides a rigorous methodology for assessing the feasibility of spectrum sharing between large low-earth orbit (LEO) satellite constellations. For concreteness, we focus on the existing Starlink system and the soon-to-be-launched Kuiper system, which is prohibited from inflicting excessive interference onto the incumbent Starlink ground users. We carefully model and study the potential downlink interference between the two systems and investigate how strategic satellite selection may be used by Kuiper to serve its ground users while also protecting Starlink ground users. We then extend this notion of satellite selection to the case where Kuiper has limited knowledge of Starlink's serving satellite. Our findings reveal that there is always the potential for both very high and extremely low interference, depending on which Starlink and Kuiper satellites are being used to serve their users. Consequently, we show that Kuiper can protect Starlink ground users with high probability by strategically selecting which of its satellites are used to serve its ground users. Simultaneously, Kuiper is capable of delivering near-maximal downlink SINR to its own ground users. This highlights a feasible route to the coexistence of two dense LEO satellite systems, even in scenarios where one system has limited knowledge of the other's serving satellites.
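A probabilistic version of this selection rule can be sketched as follows: when the secondary system only knows a probability distribution over the primary user's serving satellite, it picks the candidate with the highest own SINR among those whose probability of pushing the primary user's interference-to-noise ratio (INR) above a threshold is acceptably small. The per-pair INR table, the serving-satellite probabilities, the threshold, and the function name are all hypothetical inputs, not values from the paper.

```python
def select_protecting_satellite(own_sinr_db, inr_db, serving_probs,
                                inr_threshold_db, max_violation_prob):
    """Return the index of the secondary satellite to use, or None if none is safe.

    own_sinr_db[k]:   SINR the secondary user would get from candidate satellite k.
    inr_db[k][m]:     INR at the primary user if it is served by primary satellite m.
    serving_probs[m]: belief that the primary user is served by satellite m.
    """
    best = None
    for k, sinr in enumerate(own_sinr_db):
        # Probability of violating the INR threshold, averaged over the unknown serving satellite.
        p_violate = sum(p for p, inr in zip(serving_probs, inr_db[k]) if inr > inr_threshold_db)
        if p_violate <= max_violation_prob and (best is None or sinr > own_sinr_db[best]):
            best = k
    return best


sinr = [15.0, 12.0, 9.0]
inr = [[-5.0, -20.0], [-15.0, -8.0], [-18.0, -16.0]]
probs = [0.7, 0.3]
```

With a strict violation budget (0.1) only candidate 2 is safe, while relaxing the budget to 0.3 admits candidate 1, which offers a higher SINR. With full knowledge of the serving satellite (`probs = [1.0, 0.0]`), the rule reduces to a deterministic interference check.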

One-bit mmWave MIMO Channel Estimation using Deep Generative Networks

Nov 16, 2022

Abstract: As future wireless systems trend towards higher carrier frequencies and large antenna arrays, receivers with one-bit analog-to-digital converters (ADCs) are being explored owing to their reduced power consumption. However, the combination of large antenna arrays and one-bit ADCs makes channel estimation challenging. In this paper, we formulate channel estimation from a limited number of one-bit quantized pilot measurements as an inverse problem and reconstruct the channel by optimizing the input vector of a pre-trained deep generative model with the objective of maximizing a novel correlation-based loss function. We observe that deep generative priors adapted to the underlying channel model significantly outperform Bernoulli-Gaussian Generalized Approximate Message Passing (BG-GAMP), while a single generative model that uses a conditional input to distinguish between Line-of-Sight (LOS) and Non-Line-of-Sight (NLOS) channel realizations outperforms BG-GAMP on LOS channels and achieves comparable performance on NLOS channels in terms of the normalized channel reconstruction error.
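Two ingredients in this abstract are easy to state precisely: a one-bit quantizer applied to complex pilot measurements, and the normalized channel reconstruction error used for evaluation. The sketch below covers only these two pieces; it does not implement the paper's correlation-based loss or generative prior. The unit-power output scaling (`1/sqrt(2)`) is a common convention assumed here.

```python
import math


def one_bit_quantize(y):
    """Keep only the signs of the real and imaginary parts (unit-power output)."""
    scale = 1.0 / math.sqrt(2.0)
    return [
        complex(1.0 if v.real >= 0 else -1.0, 1.0 if v.imag >= 0 else -1.0) * scale
        for v in y
    ]


def nmse(h_hat, h):
    """Normalized reconstruction error ||h_hat - h||^2 / ||h||^2."""
    err = sum(abs(a - b) ** 2 for a, b in zip(h_hat, h))
    ref = sum(abs(b) ** 2 for b in h)
    return err / ref


y = [0.3 - 2j, -1 + 0.5j]
q = one_bit_quantize(y)
```

Scaling every measurement by a positive constant leaves the quantized output unchanged, so one-bit measurements carry no amplitude information. This is one reason estimators in this setting are naturally judged by direction or correlation, or are paired with a separate gain estimate.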

Joint Uplink-Downlink Capacity and Coverage Optimization via Site-Specific Learning of Antenna Settings

Oct 27, 2022

Abstract: We propose a novel framework for optimizing antenna parameter settings in a heterogeneous cellular network. We formulate an optimization problem for both coverage and capacity, in both the downlink (DL) and the uplink (UL), that configures the tilt angle, vertical half-power beamwidth (HPBW), and horizontal HPBW of each cell's antenna array across the network. The novel data-driven framework proposed for this non-convex problem, inspired by Bayesian optimization (BO) and differential evolution algorithms, is sample-efficient and converges quickly while scaling to large networks. By jointly optimizing DL and UL performance, we take into account the different signal power and interference characteristics of these two links, allowing a graceful trade-off between coverage and capacity in each one. Our experiments on a state-of-the-art 5G NR cellular system-level simulator developed by AT&T Labs show that the proposed algorithm consistently and significantly outperforms the 3GPP default settings, random search, and conventional BO. In one realistic setting, compared to conventional BO, our approach increases the average sum-log-rate by over 60% while decreasing the outage probability by over 80%. Compared to the 3GPP default settings, the gains from our approach are considerably larger. The results also indicate that the practically important combination of DL throughput and UL coverage can be greatly improved by joint UL-DL optimization.
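The differential evolution component can be sketched as classic DE/rand/1/bin over bounded antenna parameters. This is only the DE ingredient and not the paper's combined BO-inspired framework. The objective `toy_objective` is a hypothetical smooth stand-in for a simulator-based coverage/capacity metric (lower is better), and the bounds and "optimal" settings (tilt 6°, vertical HPBW 10°, horizontal HPBW 65°) are illustrative only.

```python
import random


def differential_evolution(objective, bounds, pop_size=20, generations=100, F=0.7, CR=0.9, seed=0):
    """DE/rand/1/bin minimization. Returns (best x, best value, best initial value)."""
    rng = random.Random(seed)
    dim = len(bounds)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    scores = [objective(x) for x in pop]
    initial_best = min(scores)
    for _ in range(generations):
        for i in range(pop_size):
            # Three distinct donors, all different from the target vector i.
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            j_rand = rng.randrange(dim)  # guarantees at least one mutated coordinate
            trial = []
            for d, (lo, hi) in enumerate(bounds):
                if rng.random() < CR or d == j_rand:
                    v = pop[a][d] + F * (pop[b][d] - pop[c][d])  # mutation
                else:
                    v = pop[i][d]  # binomial crossover keeps the parent's value
                trial.append(min(hi, max(lo, v)))  # clip to the feasible range
            s = objective(trial)
            if s <= scores[i]:  # greedy selection
                pop[i], scores[i] = trial, s
    best = min(range(pop_size), key=scores.__getitem__)
    return pop[best], scores[best], initial_best


def toy_objective(x):
    """Hypothetical stand-in: x = (tilt, vertical HPBW, horizontal HPBW) in degrees."""
    return ((x[0] - 6.0) / 10.0) ** 2 + ((x[1] - 10.0) / 10.0) ** 2 + ((x[2] - 65.0) / 50.0) ** 2


bounds = [(0.0, 15.0), (5.0, 20.0), (30.0, 120.0)]
best_x, best_f, init_f = differential_evolution(toy_objective, bounds)
```

Greedy selection guarantees the best score never gets worse. The catch for antenna tuning is that each evaluation of a real objective is a costly system-level simulation, which is why a sample-efficient, BO-style surrogate is attractive alongside DE's population-based search.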

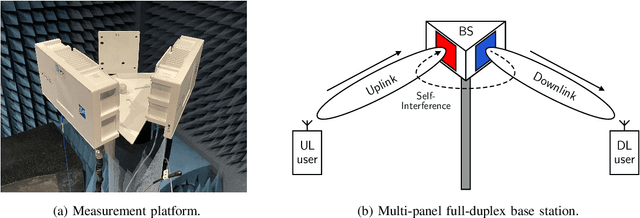

Spatial and Statistical Modeling of Multi-Panel Millimeter Wave Self-Interference

Oct 14, 2022

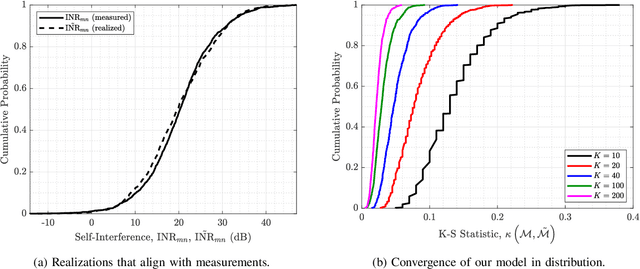

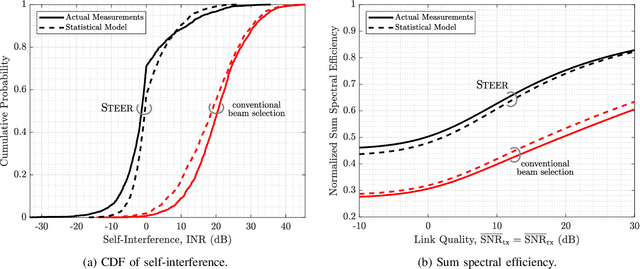

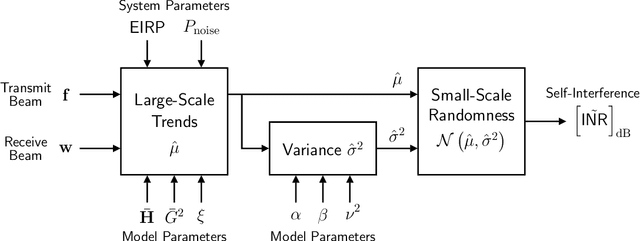

Abstract: Characterizing self-interference is essential to the design and evaluation of in-band full-duplex communication systems. Until now, little has been understood about this coupling in full-duplex systems operating at millimeter wave (mmWave) frequencies, and the highly idealized models proposed for such systems have been shown not to align with practice. This work presents the first spatial and statistical model of multi-panel mmWave self-interference backed by measurements, enabling engineers to draw realizations that exhibit the large-scale and small-scale spatial characteristics observed in our nearly 6.5 million measurements. Core to our model is its use of system and model parameters having real-world meaning, which, through proper parameterization, facilitates extending the model to systems beyond our own phased array platform. We demonstrate this by collecting nearly 13 million additional measurements, which show that our model can generalize to two other system configurations. We assess our model by comparing it against actual measurements to confirm that it aligns spatially and in distribution with real-world self-interference. In addition, using both measurements and our model of self-interference, we evaluate an existing beamforming-based full-duplex mmWave solution to illustrate that our model can be reliably used to design new solutions and validate the performance improvements they may offer.
