Seunggeun Kim

Fine-Tuning Masked Diffusion for Provable Self-Correction

Oct 01, 2025

Abstract: A natural desideratum for generative models is self-correction--detecting and revising low-quality tokens at inference. While Masked Diffusion Models (MDMs) have emerged as a promising approach for generative modeling in discrete spaces, their capacity for self-correction remains poorly understood. Prior attempts to incorporate self-correction into MDMs either require overhauling MDM architectures/training or rely on imprecise proxies for token quality, limiting their applicability. Motivated by this, we introduce PRISM--Plug-in Remasking for Inference-time Self-correction of Masked Diffusions--a lightweight, model-agnostic approach that applies to any pretrained MDM. Theoretically, PRISM defines a self-correction loss that provably learns per-token quality scores, without RL or a verifier. These quality scores are computed in the same forward pass as the MDM and used to detect low-quality tokens. Empirically, PRISM advances MDM inference across domains and scales: Sudoku; unconditional text (170M); and code with LLaDA (8B).
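The remasking idea in this abstract can be illustrated with a minimal sketch. It assumes per-token quality scores in [0, 1] are already available; the `MASK` symbol, the `threshold` and `max_remask` parameters, and the function name are hypothetical and are not taken from the paper, which does not spell out its inference rule here.

```python
MASK = "[MASK]"  # hypothetical mask symbol, not from the paper


def remask_low_quality(tokens, quality, threshold=0.5, max_remask=None):
    """Return a copy of `tokens` with low-scoring positions replaced by MASK.

    tokens:     list of token strings (already-masked positions are skipped)
    quality:    per-token scores in [0, 1], higher meaning better (assumed)
    threshold:  tokens scoring strictly below this are remasking candidates
    max_remask: optional cap; the lowest-scoring candidates are chosen first
    """
    if len(tokens) != len(quality):
        raise ValueError("tokens and quality must have the same length")

    candidates = [
        i for i, (tok, q) in enumerate(zip(tokens, quality))
        if tok != MASK and q < threshold
    ]
    candidates.sort(key=lambda i: quality[i])  # worst tokens first
    if max_remask is not None:
        candidates = candidates[:max_remask]

    chosen = set(candidates)
    return [MASK if i in chosen else tok for i, tok in enumerate(tokens)]
```

In a sampler, the remasked positions would then be re-predicted by the MDM in a later denoising step; that loop is omitted because the abstract does not describe it.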

PPAAS: PVT and Pareto Aware Analog Sizing via Goal-conditioned Reinforcement Learning

Jul 22, 2025

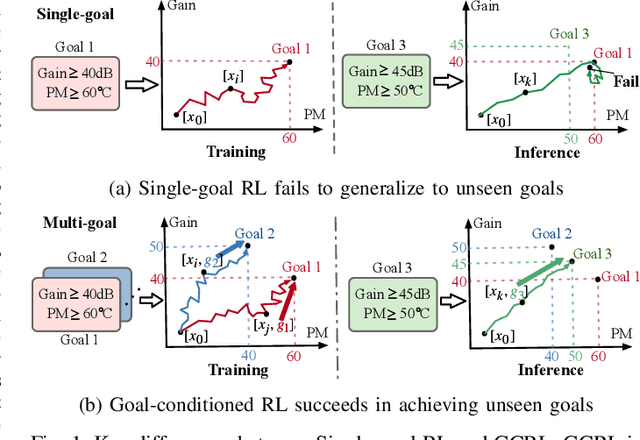

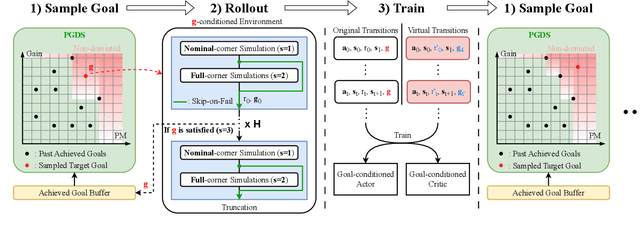

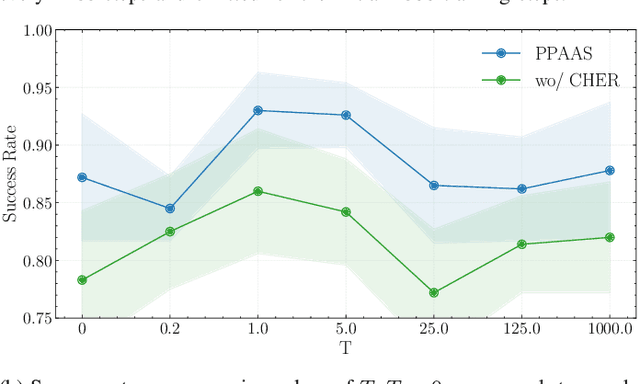

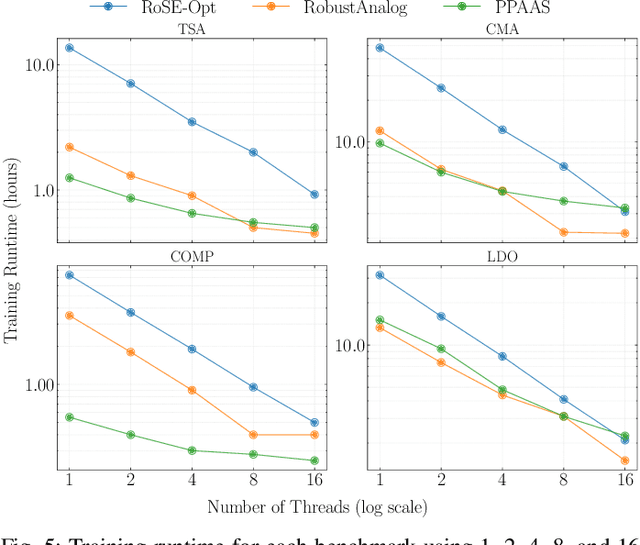

Abstract: Device sizing is a critical yet challenging step in analog and mixed-signal circuit design, requiring careful optimization to meet diverse performance specifications. This challenge is further amplified under process, voltage, and temperature (PVT) variations, which cause circuit behavior to shift across different corners. While reinforcement learning (RL) has shown promise in automating sizing for fixed targets, training a generalized policy that can adapt to a wide range of design specifications under PVT variations requires many more training samples and resources. To address these challenges, we propose a goal-conditioned RL framework that enables efficient policy training for analog device sizing across PVT corners, with strong generalization capability. To improve sample efficiency, we introduce Pareto-front Dominance Goal Sampling, which constructs an automatic curriculum by sampling goals from the Pareto frontier of previously achieved goals. This strategy is further enhanced by integrating Conservative Hindsight Experience Replay, which assigns relabeled goals with conservative virtual rewards to stabilize training and accelerate convergence. To reduce simulation overhead, our framework incorporates a Skip-on-Fail simulation strategy, which skips full-corner simulations when nominal-corner simulation fails to meet target specifications. Experiments on benchmark circuits demonstrate ~1.6× improvement in sample efficiency and ~4.1× improvement in simulation efficiency compared to existing sizing methods. Code and benchmarks are publicly available at https://github.com/SeunggeunKimkr/PPAAS
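Two ideas from this abstract, sampling goals from the Pareto frontier of achieved goals and skipping full-corner simulation after a nominal-corner failure, can be sketched as follows. This is an illustrative sketch, not the PPAAS implementation: it assumes every objective is to be maximized, and `simulate`, `meets_spec`, and all function names are hypothetical placeholders supplied by the caller.

```python
import random


def dominates(a, b):
    """True if point a is at least as good as b everywhere and better somewhere."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(points):
    """Keep the points that no other point dominates (all objectives maximized)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


def sample_goal(achieved_goals, rng=random):
    """Draw the next training goal uniformly from the frontier of achieved goals."""
    return rng.choice(pareto_front(achieved_goals))


def evaluate_skip_on_fail(simulate, sizing, corners, meets_spec):
    """Simulate the nominal corner first; run the rest only if it passes.

    corners[0] is assumed to be the nominal corner. Returns (results, ok),
    where results maps each simulated corner to its simulation output.
    """
    nominal, *others = corners
    results = {nominal: simulate(sizing, nominal)}
    if not meets_spec(results[nominal]):
        return results, False  # skip the remaining corners entirely
    for corner in others:
        results[corner] = simulate(sizing, corner)
    return results, all(meets_spec(r) for r in results.values())
```

The saving comes from the early return: a sizing that fails at the nominal corner costs one simulation instead of one per corner.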

UniMoCo: Unified Modality Completion for Robust Multi-Modal Embeddings

May 17, 2025

Abstract: Current research has explored vision-language models for multi-modal embedding tasks, such as information retrieval, visual grounding, and classification. However, real-world scenarios often involve diverse modality combinations between queries and targets, such as text and image to text, text and image to text and image, and text to text and image. These diverse combinations pose significant challenges for existing models, as they struggle to align all modality combinations within a unified embedding space during training, which degrades performance at inference. To address this limitation, we propose UniMoCo, a novel vision-language model architecture designed for multi-modal embedding tasks. UniMoCo introduces a modality-completion module that generates visual features from textual inputs, ensuring modality completeness for both queries and targets. Additionally, we develop a specialized training strategy to align embeddings from both original and modality-completed inputs, ensuring consistency within the embedding space. This enables the model to robustly handle a wide range of modality combinations across embedding tasks. Experiments show that UniMoCo outperforms previous methods while demonstrating consistent robustness across diverse settings. More importantly, we identify and quantify the inherent bias in conventional approaches caused by the imbalance of modality combinations in training data, which can be mitigated through our modality-completion paradigm. The code is available at https://github.com/HobbitQia/UniMoCo.

Self-Supervised Graph Contrastive Pretraining for Device-level Integrated Circuits

Feb 13, 2025

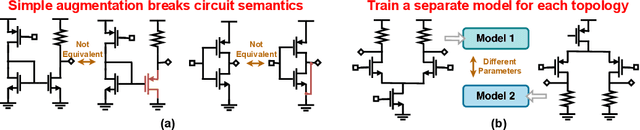

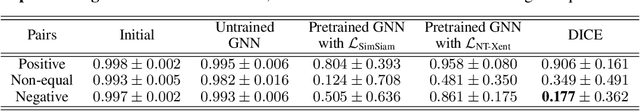

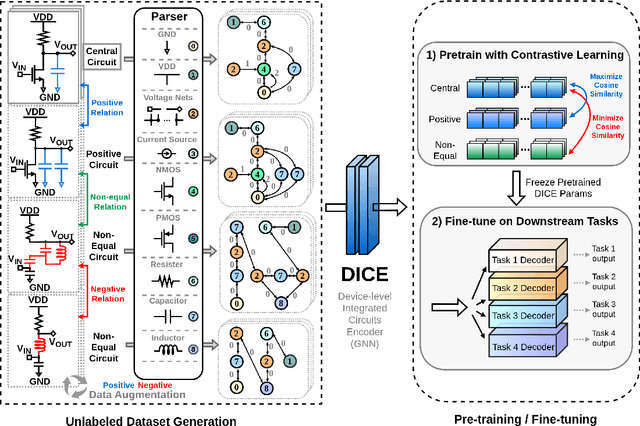

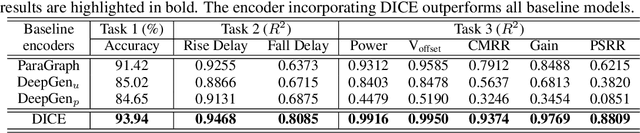

Abstract: Self-supervised graph representation learning has driven significant advancements in domains such as social network analysis, molecular design, and electronics design automation (EDA). However, prior works in EDA have mainly focused on the representation of gate-level digital circuits, failing to capture analog and mixed-signal circuits. To address this gap, we introduce DICE: Device-level Integrated Circuits Encoder, the first self-supervised pretrained graph neural network (GNN) model for any circuit expressed at the device level. DICE is a message-passing neural network (MPNN) trained through graph contrastive learning, and its pretraining process is simulation-free, incorporating two novel data augmentation techniques. Experimental results demonstrate that DICE achieves substantial performance gains across three downstream tasks, underscoring its effectiveness for both analog and digital circuits.
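Contrastive pretraining of the kind mentioned in this abstract is commonly driven by an InfoNCE-style objective. The abstract does not state which loss DICE uses, so the sketch below is only an assumption-laden illustration: it takes precomputed, nonzero graph embeddings as plain lists, treats `positives[i]` as the augmented view of `anchors[i]`, and uses the other positives in the batch as negatives. The function names and the `temperature` default are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity of two nonzero vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchors, positives, temperature=0.5):
    """Mean InfoNCE loss over a batch of embedding pairs.

    For anchor i, positives[i] is the matching view; every other
    positives[j] in the batch serves as a negative.
    """
    n = len(anchors)
    total = 0.0
    for i in range(n):
        logits = [cosine(anchors[i], positives[j]) / temperature for j in range(n)]
        # Numerically stable log-sum-exp over all candidates.
        peak = max(logits)
        log_denominator = peak + math.log(sum(math.exp(l - peak) for l in logits))
        total += log_denominator - logits[i]
    return total / n
```

The loss is small when each anchor is most similar to its own augmented view and grows when a negative is more similar than the true pair.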

M3: Mamba-assisted Multi-Circuit Optimization via MBRL with Effective Scheduling

Nov 25, 2024

Abstract: Recent advancements in reinforcement learning (RL) for analog circuit optimization have demonstrated significant potential for improving sample efficiency and generalization across diverse circuit topologies and target specifications. However, challenges remain, such as high computational overhead and the need for bespoke models for each circuit. To address them, we propose M3, a novel Model-based RL (MBRL) method employing the Mamba architecture and effective scheduling. The Mamba architecture, known as a strong alternative to the transformer architecture, enables multi-circuit optimization with distinct parameters and target specifications. The effective scheduling strategy enhances sample efficiency by adjusting crucial MBRL training parameters. To the best of our knowledge, M3 is the first method for multi-circuit optimization that leverages both the Mamba architecture and an MBRL with effective scheduling. As a result, it significantly improves sample efficiency compared to existing RL methods.

LLM-Enhanced Bayesian Optimization for Efficient Analog Layout Constraint Generation

Jun 07, 2024

Abstract: Analog layout synthesis faces significant challenges due to its dependence on manual processes, considerable time requirements, and performance instability. Current Bayesian Optimization (BO)-based techniques for analog layout synthesis, despite their potential for automation, suffer from slow convergence and extensive data needs, limiting their practical application. This paper presents the LLANA framework, a novel approach that leverages Large Language Models (LLMs) to enhance BO by exploiting the few-shot learning abilities of LLMs for more efficient generation of analog design-dependent parameter constraints. Experimental results demonstrate that LLANA not only achieves performance comparable to state-of-the-art (SOTA) BO methods but also enables a more effective exploration of the analog circuit design space, thanks to the LLM's superior contextual understanding and learning efficiency. The code is available at https://github.com/dekura/LLANA.
