Tadashi Nomoto

The Fewer Splits are Better: Deconstructing Readability in Sentence Splitting

Feb 02, 2023

Abstract: In this work, we focus on sentence splitting, a subfield of text simplification, motivated largely by an unproven idea that if you divide a sentence into pieces, it should become easier to understand. Our primary goal in this paper is to find out whether this is true. In particular, we ask, does it matter whether we break a sentence into two or three? We report on our findings based on Amazon Mechanical Turk. More specifically, we introduce a Bayesian modeling framework to further investigate to what degree a particular way of splitting the complex sentence affects readability, along with a number of other parameters adopted from diverse perspectives, including clinical linguistics and cognitive linguistics. The Bayesian modeling experiment provides clear evidence that bisecting the sentence leads to enhanced readability to a degree greater than what we create by trisection.

Extended Multilingual Protest News Detection -- Shared Task 1, CASE 2021 and 2022

Nov 21, 2022

Abstract: We report results of the CASE 2022 Shared Task 1 on Multilingual Protest Event Detection. This task is a continuation of CASE 2021 and consists of four subtasks: i) document classification, ii) sentence classification, iii) event sentence coreference identification, and iv) event extraction. The CASE 2022 extension consists of expanding the test data with more data in previously available languages, namely, English, Hindi, Portuguese, and Spanish, and adding new test data in Mandarin, Turkish, and Urdu for Subtask 1, document classification. The training data from CASE 2021 in English, Portuguese, and Spanish were utilized. Therefore, predicting document labels in Hindi, Mandarin, Turkish, and Urdu occurs in a zero-shot setting. The CASE 2022 workshop accepts reports on systems developed for predicting test data of CASE 2021 as well. We observe that the best systems submitted by CASE 2022 participants achieve between 79.71 and 84.06 F1-macro for new languages in a zero-shot setting. The winning approaches are mainly ensembling models and merging data in multiple languages. The best two submissions on CASE 2021 data outperform submissions from last year for Subtask 1 and Subtask 2 in all languages. Only the following scenarios were not outperformed by new submissions on CASE 2021: Subtask 3 Portuguese and Subtask 4 English.

The Causal News Corpus: Annotating Causal Relations in Event Sentences from News

Apr 25, 2022

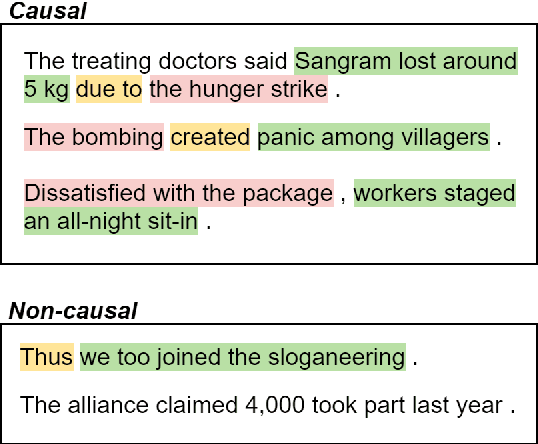

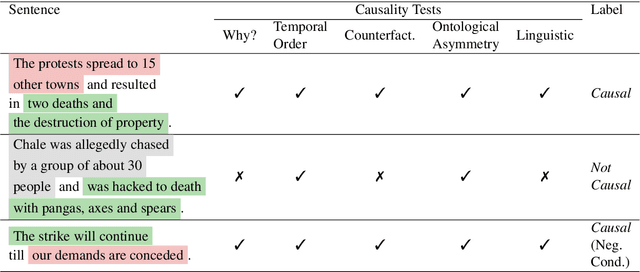

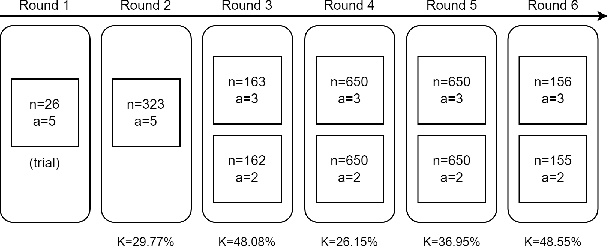

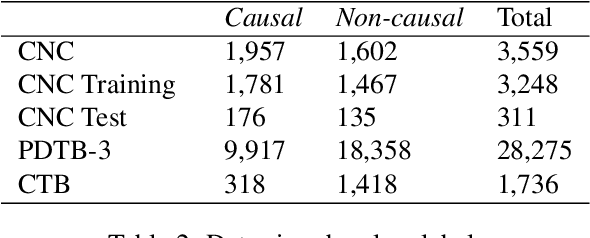

Abstract: Despite the importance of understanding causality, corpora addressing causal relations are limited. There is a discrepancy between existing annotation guidelines of event causality and conventional causality corpora that focus more on linguistics. Many guidelines restrict themselves to include only explicit relations or clause-based arguments. Therefore, we propose an annotation schema for event causality that addresses these concerns. We annotated 3,559 event sentences from protest event news with labels on whether they contain causal relations or not. Our corpus is known as the Causal News Corpus (CNC). A neural network built upon a state-of-the-art pre-trained language model performed well with 81.20% F1 score on the test set, and 83.46% in 5-fold cross-validation. CNC is transferable across two external corpora: CausalTimeBank (CTB) and Penn Discourse Treebank (PDTB). Leveraging each of these external datasets for training, we achieved up to approximately 64% F1 on the CNC test set without additional fine-tuning. CNC also served as an effective training and pre-training dataset for the two external corpora. Lastly, we demonstrate the difficulty of our task to the layman in a crowd-sourced annotation exercise. Our annotated corpus is publicly available, providing a valuable resource for causal text mining researchers.

Learning to Simplify with Data Hopelessly Out of Alignment

Apr 02, 2022

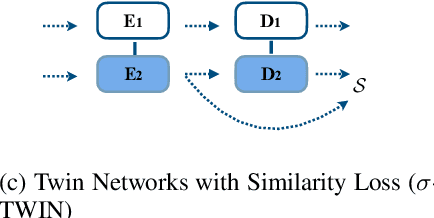

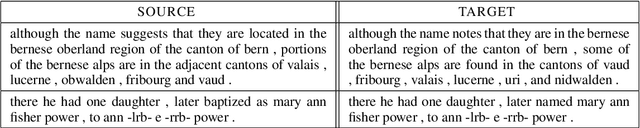

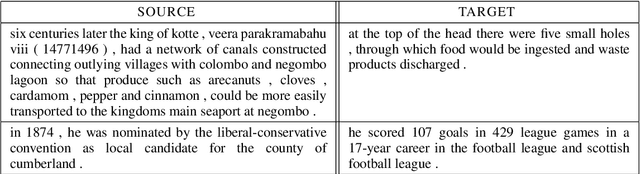

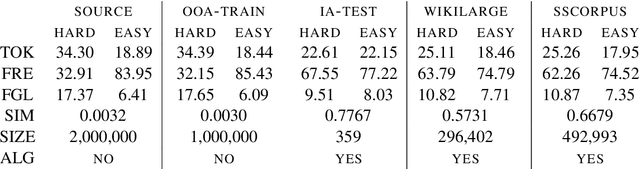

Abstract: We consider whether it is possible to do text simplification without relying on a "parallel" corpus, one that is made up of sentence-by-sentence alignments of complex and ground truth simple sentences. To this end, we introduce a number of concepts, some new and some not, including what we call Conjoined Twin Networks, Flip-Flop Auto-Encoders (FFA) and Adversarial Networks (GAN). A comparison is made between Jensen-Shannon (JS-GAN) and Wasserstein GAN, to see how they impact performance, with stronger results for the former. An experiment we conducted with a large dataset derived from Wikipedia showed the solid superiority of Twin Networks equipped with FFA and JS-GAN over the current best performing system. Furthermore, we discuss where we stand in relation to fully supervised methods in the past literature, and highlight with examples qualitative differences that exist among simplified sentences generated by supervision-free systems.
