Suzhi Bi

Robust MIMO Semantic Communication with Imperfect CSI via Knowledge Distillation

Sep 04, 2025

Abstract: Semantic communication (SemComm) has emerged as a new communication paradigm. To enhance efficiency, multiple-input multiple-output (MIMO) technology has been further integrated into SemComm systems. However, existing MIMO SemComm systems assume perfect channel matrix estimation for channel-adaptive joint source-channel coding (JSCC), which is impractical due to hardware and pilot overhead constraints. In this paper, we propose a semantic image transmission system with channel matrix and channel noise adaptation, named HANA-JSCC, to cope with channel estimation errors in MIMO systems. We propose a channel matrix adaptor that collaborates with the channel codec to adapt to misaligned channel state information (CSI), thereby mitigating the impact of estimation errors. Since the relationship between the estimated channel matrix and the true channel matrix is ill-posed (one-to-many), we further introduce a two-stage training strategy with knowledge distillation to overcome the convergence difficulties caused by this ill-posedness. Compared with state-of-the-art benchmarks, HANA-JSCC achieves $0.40\sim0.54$ dB higher average performance across various noise and estimation error levels on various datasets.
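
To make the imperfect-CSI setting concrete, the sketch below uses a common textbook error model, not one specified in the abstract: the true channel $H$ has i.i.d. $\mathcal{CN}(0,1)$ (Rayleigh) entries and the estimate is $\hat{H} = H + E$, where $E$ has i.i.d. $\mathcal{CN}(0,\sigma_e^2)$ entries. The helper names (`rayleigh_channel`, `estimate_channel`, `nmse`) and the error variance are hypothetical; the script only checks that the empirical normalized MSE of the estimate approaches $\sigma_e^2$.

```python
import math
import random


def complex_gaussian(rng, variance):
    """One circularly symmetric complex Gaussian sample CN(0, variance)."""
    std = math.sqrt(variance / 2.0)
    return complex(rng.gauss(0.0, std), rng.gauss(0.0, std))


def rayleigh_channel(rng, n_rx, n_tx):
    """True MIMO channel H with i.i.d. CN(0, 1) entries (Rayleigh fading)."""
    return [[complex_gaussian(rng, 1.0) for _ in range(n_tx)] for _ in range(n_rx)]


def estimate_channel(rng, H, err_var):
    """Assumed estimation model: H_hat = H + E, with i.i.d. CN(0, err_var) error entries."""
    return [[h + complex_gaussian(rng, err_var) for h in row] for row in H]


def nmse(trials, n_rx, n_tx, err_var, seed=0):
    """Empirical E||H_hat - H||_F^2 / E||H||_F^2 over many independent channel draws."""
    rng = random.Random(seed)
    err_energy = chan_energy = 0.0
    for _ in range(trials):
        H = rayleigh_channel(rng, n_rx, n_tx)
        H_hat = estimate_channel(rng, H, err_var)
        for row, row_hat in zip(H, H_hat):
            for h, h_hat in zip(row, row_hat):
                err_energy += abs(h_hat - h) ** 2
                chan_energy += abs(h) ** 2
    return err_energy / chan_energy


print(nmse(trials=2000, n_rx=4, n_tx=4, err_var=0.1))  # should be close to 0.1
```

Under this assumed model, the true channel given an estimate is still random, $H \mid \hat{H} \sim \mathcal{CN}\big(\hat{H}/(1+\sigma_e^2),\ \sigma_e^2/(1+\sigma_e^2)\big)$ entrywise, which illustrates why mapping $\hat{H}$ back to a single $H$ is a one-to-many (ill-posed) problem.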

Transferable Deployment of Semantic Edge Inference Systems via Unsupervised Domain Adaption

Apr 16, 2025

Abstract: This paper investigates deploying semantic edge inference systems for performing a common image classification task. In particular, each system consists of multiple Internet of Things (IoT) devices that first locally encode the sensing data into semantic features and then transmit them to an edge server for subsequent data fusion and task inference. The inference accuracy is determined by efficient training of the feature encoder/decoder using labeled data samples. Due to differences in the sensing data and communication channel distributions, deploying the system in a new environment may incur high costs in annotating data labels and re-training the encoder/decoder models. To achieve cost-effective transferable system deployment, we propose an efficient Domain Adaptation method for Semantic Edge INference systems (DASEIN) that can maintain high inference accuracy in a new environment without the need for labeled samples. Specifically, DASEIN exploits the task-relevant data correlation between different deployment scenarios by leveraging the techniques of unsupervised domain adaptation and knowledge distillation. It devises an efficient two-step adaptation procedure that sequentially aligns the data distributions and adapts to the channel variations. Numerical results show that, under a substantial change in sensing data distributions, the proposed DASEIN outperforms the best-performing benchmark method by 7.09% and 21.33% in inference accuracy when the new environment has similar or 25 dB lower channel signal-to-noise power ratios (SNRs), respectively. This verifies the effectiveness of the proposed method in adapting to both data and channel distributions in practical transfer deployment applications.
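
A standard ingredient of unsupervised domain adaptation is a discrepancy measure between source and target feature distributions that needs no target labels. The sketch below computes a biased estimate of the squared maximum mean discrepancy (MMD) with a Gaussian kernel and shows that a naive mean-shift alignment of hypothetical 2-D target features reduces it. This is a generic illustration, not DASEIN's actual alignment loss; the feature distributions, the kernel bandwidth, and the helper names are assumptions.

```python
import math
import random


def gaussian_kernel(x, y, bandwidth):
    """k(x, y) = exp(-||x - y||^2 / (2 * bandwidth^2))."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * bandwidth ** 2))


def mmd2(source, target, bandwidth=1.0):
    """Biased estimate of the squared MMD between two sets of feature vectors."""
    def mean_kernel(xs, ys):
        total = sum(gaussian_kernel(x, y, bandwidth) for x in xs for y in ys)
        return total / (len(xs) * len(ys))

    return (mean_kernel(source, source) + mean_kernel(target, target)
            - 2.0 * mean_kernel(source, target))


def mean_align(source, target):
    """Shift target features so their mean matches the source mean (no labels used)."""
    dim = len(source[0])
    mu_s = [sum(x[d] for x in source) / len(source) for d in range(dim)]
    mu_t = [sum(x[d] for x in target) / len(target) for d in range(dim)]
    return [[x[d] - mu_t[d] + mu_s[d] for d in range(dim)] for x in target]


rng = random.Random(0)
# Hypothetical features: the new environment shifts each feature dimension by +2.
source = [[rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)] for _ in range(100)]
target = [[rng.gauss(2.0, 1.0), rng.gauss(2.0, 1.0)] for _ in range(100)]
aligned = mean_align(source, target)
print(mmd2(source, target), mmd2(source, aligned))  # the second value should be much smaller
```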

Scalable Multi-task Edge Sensing via Task-oriented Joint Information Gathering and Broadcast

Apr 16, 2025

Abstract: Recent advances in edge computing technology enable significant sensing performance improvements in Internet of Things (IoT) networks. In particular, an edge server (ES) is responsible for gathering sensing data from distributed sensing devices and immediately executing different sensing tasks to accommodate the heterogeneous service demands of mobile users. However, as the number of users surges and the sensing tasks become increasingly compute-intensive, the huge amount of computation workload and data transmission may overwhelm an edge system with limited resources. Accordingly, we propose in this paper a scalable edge sensing framework for multi-task execution, in the sense that the computation workload and communication overhead of the ES do not increase with the number of downstream users or tasks. By exploiting task-relevant correlations, the proposed scheme implements a unified encoder at the ES, which produces a common low-dimensional message from the sensing data and broadcasts it to all users to execute their individual tasks. To achieve high sensing accuracy, we extend the well-known information bottleneck theory to a multi-task scenario to jointly optimize the information gathering and broadcast processes. We also develop an efficient two-step training procedure to optimize the parameters of the neural network-based codecs deployed in the edge sensing system. Experimental results show that the proposed scheme significantly outperforms the considered representative benchmark methods in multi-task inference accuracy. Moreover, the proposed scheme scales with network size: it maintains an almost constant computation delay, with less than 1% degradation in inference performance, when the number of users increases fourfold.
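
One way to read the multi-task information bottleneck is as a tradeoff between compressing the sensing data $X$ into the common message $Z$ and keeping $Z$ informative about every task label $Y_k$. The sketch below assumes the Lagrangian $I(X;Z) - \beta \sum_k I(Z;Y_k)$ (a natural extension, not necessarily the paper's exact objective) and evaluates it for two hypothetical deterministic encoders on a toy discrete source, using exact mutual-information computations.

```python
import math


def mutual_information(joint):
    """I(A;B) in nats for a joint pmf given as a 2-D list, joint[a][b] = P(A=a, B=b)."""
    p_a = [sum(row) for row in joint]
    p_b = [sum(col) for col in zip(*joint)]
    mi = 0.0
    for i, row in enumerate(joint):
        for j, p in enumerate(row):
            if p > 0.0:
                mi += p * math.log(p / (p_a[i] * p_b[j]))
    return mi


def joint_pmf(f, g, support):
    """Joint pmf of (f(X), g(X)) when X is uniform over `support`."""
    f_vals = sorted({f(x) for x in support})
    g_vals = sorted({g(x) for x in support})
    table = [[0.0] * len(g_vals) for _ in f_vals]
    for x in support:
        table[f_vals.index(f(x))][g_vals.index(g(x))] += 1.0 / len(support)
    return table


def multitask_ib_objective(encoder, tasks, support, beta):
    """Assumed objective I(X;Z) - beta * sum_k I(Z;Y_k) for a deterministic Z = encoder(X)."""
    compression = mutual_information(joint_pmf(lambda x: x, encoder, support))
    relevance = sum(mutual_information(joint_pmf(encoder, task, support)) for task in tasks)
    return compression - beta * relevance


def identity_encoder(x):
    return x  # keeps all of X, so the common message serves both tasks


def task1_encoder(x):
    return x // 2  # keeps only what task 1 needs


def task1_label(x):
    return x // 2


def task2_label(x):
    return x % 2


support = range(4)  # toy sensing data X, uniform over {0, 1, 2, 3}
tasks = [task1_label, task2_label]
for beta in (0.5, 2.0):
    print(beta,
          multitask_ib_objective(identity_encoder, tasks, support, beta),
          multitask_ib_objective(task1_encoder, tasks, support, beta))
```

With a large $\beta$, the identity encoder, whose message serves both tasks, attains the lower objective; with a small $\beta$, compression dominates and the task-1-only encoder wins. In learned codecs these mutual-information terms are typically intractable and are replaced by variational bounds.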

Traversing Distortion-Perception Tradeoff using a Single Score-Based Generative Model

Mar 26, 2025

Abstract: The distortion-perception (DP) tradeoff reveals a fundamental conflict between distortion metrics (e.g., MSE and PSNR) and perceptual quality. Recent research has increasingly concentrated on evaluating denoising algorithms within the DP framework. However, existing algorithms either prioritize perceptual quality at the expense of acceptable distortion, or focus on minimizing MSE for faithful restoration. When the goal shifts or the noisy measurements vary, adapting to different points on the DP plane requires retraining or even redesigning the model. Inspired by recent advances in solving inverse problems using score-based generative models, we explore the potential of flexibly and optimally traversing DP tradeoffs using a single pre-trained score-based model. Specifically, we introduce a variance-scaled reverse diffusion process and theoretically characterize its marginal distribution. We then prove that the proposed sampling process is an optimal solution to the DP tradeoff for conditional Gaussian distributions. Experimental results on two-dimensional and image datasets illustrate that a single score network can effectively and flexibly traverse the DP tradeoff for general denoising problems.
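
The two endpoints of the DP plane can be checked in closed form for scalar Gaussian denoising, assuming $X \sim \mathcal{N}(0,\sigma_x^2)$ and $Y = X + N$ with $N \sim \mathcal{N}(0,\sigma_n^2)$. The sketch below evaluates the hypothetical estimator $\hat{X} = \mathbb{E}[X\mid Y] + s\,\sigma_{X\mid Y} Z$ with $Z \sim \mathcal{N}(0,1)$: $s=0$ is the MMSE estimate and $s=1$ is posterior sampling, whose output distribution matches the prior. Perception is measured here by the 2-Wasserstein distance between $\hat{X}$ and $X$. It does not implement the paper's variance-scaled reverse diffusion, and the intermediate values of $s$ are not claimed to lie on the optimal DP curve.

```python
import math


def gaussian_denoising_dp(sigma_x, sigma_n, s):
    """Return (MSE, W2 perception index) of X_hat = E[X|Y] + s * std(X|Y) * Z.

    Assumes X ~ N(0, sigma_x^2), Y = X + N with N ~ N(0, sigma_n^2), and Z ~ N(0, 1)
    independent of (X, Y). All quantities are exact closed forms.
    """
    post_var = sigma_x**2 * sigma_n**2 / (sigma_x**2 + sigma_n**2)  # Var(X | Y)
    mmse_est_var = sigma_x**2 - post_var                              # Var(E[X | Y])
    mse = (1.0 + s**2) * post_var                                     # E(X - X_hat)^2
    out_std = math.sqrt(mmse_est_var + s**2 * post_var)               # std of X_hat
    # W2 distance between the zero-mean Gaussians N(0, sigma_x^2) and N(0, out_std^2).
    w2 = abs(sigma_x - out_std)
    return mse, w2


for s in (0.0, 0.5, 1.0):
    print(s, gaussian_denoising_dp(sigma_x=1.0, sigma_n=1.0, s=s))
```

As $s$ grows from 0 to 1, the MSE rises from the MMSE value to twice that value while the perception index falls to zero, which is the conflict the DP tradeoff formalizes.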

Digital Semantic Communications: An Alternating Multi-Phase Training Strategy with Mask Attack

Aug 09, 2024

Abstract: Semantic communication (SemComm) has emerged as a new paradigm. Most existing SemComm systems transmit continuously distributed signals in an analog fashion. However, the analog paradigm is not compatible with current digital communication frameworks. In this paper, we propose an alternating multi-phase training strategy (AMP) to enable the joint training of the networks in the encoder and decoder through non-differentiable digital processes. AMP contains three training phases, aimed at feature extraction (FE), robustness enhancement (RE), and training-testing alignment (TTA), respectively. In particular, in the FE phase, we learn the representation ability of semantic information by training the encoder and decoder end-to-end in an analog manner. When digital communication is taken into consideration, the domain shift between digital and analog signals demands fine-tuning of the encoder and decoder. To cope with joint training through the non-differentiable digital processes, we propose, in the RE phase, alternating between updating the decoder individually and jointly training the codec. To boost robustness further, we investigate a mask attack (MATK) in RE that simulates an evident and severe bit-flipping effect in a differentiable manner. To address the training-testing inconsistency introduced by MATK, we employ an additional TTA phase that fine-tunes the decoder without MATK. Combining AMP with an information restoration network, we propose a digital SemComm system for image transmission, named AMP-SC. Compared with the representative benchmark, AMP-SC achieves $0.82 \sim 1.65$ dB higher average reconstruction performance on various representative datasets at different scales and over a wide range of signal-to-noise ratios.
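
The abstract does not spell out how MATK is defined, so the following is only a hypothetical reading of a differentiable bit-flipping attack: bits are mapped to antipodal symbols $\pm 1$, and a random mask $m \sim \text{Bernoulli}(p)$ multiplies each entry by $1-2m$. The operation stays a plain elementwise product, so gradients can pass through it even when it is applied to continuous (pre-quantization) encoder outputs. The function names and the flip probability are assumptions.

```python
import random


def mask_attack(symbols, flip_prob, rng):
    """Hypothetical mask attack: flip the sign of each entry with probability flip_prob.

    `symbols` are antipodal (+1/-1) or continuous encoder outputs. Each entry is
    multiplied by (1 - 2*m), m ~ Bernoulli(flip_prob), so the output is an
    elementwise product whose derivative w.r.t. each input entry is +1 or -1.
    """
    attacked = []
    for x in symbols:
        m = 1.0 if rng.random() < flip_prob else 0.0
        attacked.append(x * (1.0 - 2.0 * m))
    return attacked


def bits_to_symbols(bits):
    """Map bits {0, 1} to antipodal symbols {+1, -1}."""
    return [1.0 - 2.0 * b for b in bits]


rng = random.Random(0)
bits = [rng.randrange(2) for _ in range(100_000)]
symbols = bits_to_symbols(bits)
attacked = mask_attack(symbols, flip_prob=0.1, rng=rng)
flip_rate = sum(a != s for a, s in zip(attacked, symbols)) / len(symbols)
print(flip_rate)  # empirical flip rate, close to 0.1
```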

Compression before Fusion: Broadcast Semantic Communication System for Heterogeneous Tasks

Jan 31, 2024

Abstract: Semantic communication has emerged as a new paradigm in 6G, shifting away from conventional syntax-oriented communications. Recently, wireless broadcast technology has been introduced into semantic communication systems to achieve higher communication efficiency. Nevertheless, existing broadcast semantic communication systems target a general representation within one stage and fail to balance the inference accuracy among users. In this paper, the broadcast encoding process is decomposed into compression and fusion to improve communication efficiency with adaptation to tasks and channels. In particular, we propose multiple task-channel-aware sub-encoders (TCEs) and a channel-aware feature fusion sub-encoder (CFE) for compression and fusion, respectively. In the TCEs, multiple local-channel-aware attention blocks are employed to extract and compress task-relevant information for each user. In the CFE, we introduce a global-channel-aware fine-tuning block to merge these compressed task-relevant signals into a compact broadcast signal. Notably, we retrieve the bottleneck in the proposed system, named DeepBroadcast, and leverage information bottleneck theory to further optimize the parameter tuning of the TCEs and the CFE. We substantiate our approach through experiments on a range of heterogeneous tasks over additive white Gaussian noise (AWGN), Rayleigh fading, and Rician fading channels. Simulation results show that the proposed DeepBroadcast outperforms state-of-the-art methods.

DASECount: Domain-Agnostic Sample-Efficient Wireless Indoor Crowd Counting via Few-shot Learning

Nov 18, 2022

Abstract: Accurate indoor crowd counting (ICC) is a key enabler of many smart home/office applications. In this paper, we propose a Domain-Agnostic and Sample-Efficient wireless indoor crowd Counting (DASECount) framework that attains robust cross-domain detection accuracy given very limited data samples in new domains. DASECount leverages the few-shot learning (FSL) paradigm, which consists of two major stages: source domain meta-training and target domain meta-testing. Specifically, in the meta-training stage, we design and train two separate convolutional neural network (CNN) modules on the source domain dataset to fully capture the implicit amplitude and phase features of channel state information (CSI) measurements related to human activities. A subsequent knowledge distillation procedure is designed to iteratively update the CNN parameters for better generalization performance. In the meta-testing stage, we use part of the CNN modules to extract low-dimensional features from the high-dimensional target domain CSI data. With the obtained low-dimensional CSI features, we can use very few target domain data samples (e.g., 5-shot samples) to train a lightweight logistic regression (LR) classifier and attain very high cross-domain ICC accuracy. Experimental results show that the proposed DASECount method achieves over 92.68%, and on average 96.37%, detection accuracy in a 0-8 people counting task under various domain setups, significantly outperforming the other representative benchmark methods considered.
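
The meta-testing step, training a lightweight logistic regression (LR) classifier on a handful of low-dimensional features, can be sketched with a plain softmax (multinomial) LR trained by full-batch gradient descent. The 2-D features below are synthetic stand-ins for the CNN-extracted CSI features, the three classes stand in for crowd-count labels, and the learning rate and epoch count are arbitrary choices; none of these come from the paper.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def class_scores(W, b, x):
    """Linear scores W[c] . x + b[c] for every class c."""
    return [sum(w * xi for w, xi in zip(W[c], x)) + b[c] for c in range(len(W))]


def train_lr(features, labels, num_classes, lr=0.2, epochs=1000):
    """Multinomial logistic regression trained by full-batch gradient descent on cross-entropy."""
    dim = len(features[0])
    W = [[0.0] * dim for _ in range(num_classes)]
    b = [0.0] * num_classes
    n = len(features)
    for _ in range(epochs):
        grad_W = [[0.0] * dim for _ in range(num_classes)]
        grad_b = [0.0] * num_classes
        for x, y in zip(features, labels):
            probs = softmax(class_scores(W, b, x))
            for c in range(num_classes):
                err = probs[c] - (1.0 if c == y else 0.0)  # d(cross-entropy)/d(score_c)
                grad_b[c] += err
                for j in range(dim):
                    grad_W[c][j] += err * x[j]
        for c in range(num_classes):
            b[c] -= lr * grad_b[c] / n
            for j in range(dim):
                W[c][j] -= lr * grad_W[c][j] / n
    return W, b


def predict(W, b, x):
    """Index of the class with the highest score."""
    scores = class_scores(W, b, x)
    return max(range(len(scores)), key=scores.__getitem__)


# Synthetic 5-shot support set: three hypothetical count classes with 2-D features.
rng = random.Random(0)
centers = {0: (0.0, 0.0), 1: (3.0, 0.0), 2: (0.0, 3.0)}
features, labels = [], []
for label, (cx, cy) in centers.items():
    for _ in range(5):
        features.append((cx + rng.gauss(0.0, 0.3), cy + rng.gauss(0.0, 0.3)))
        labels.append(label)

W, b = train_lr(features, labels, num_classes=3)
print([predict(W, b, c) for c in centers.values()])
```

Because the classifier only has a few parameters per class, five samples per class can be enough once the features are low-dimensional and well separated, which is the premise of the meta-testing stage.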

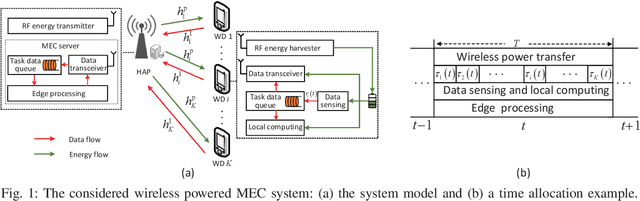

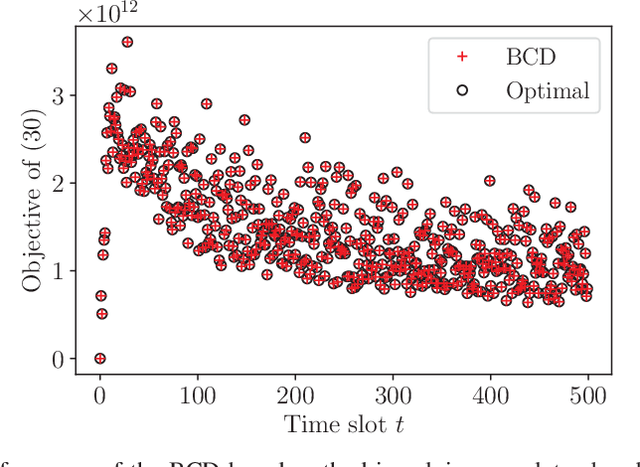

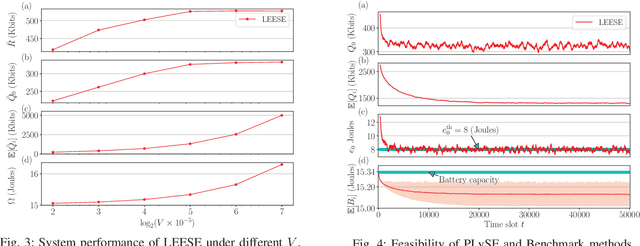

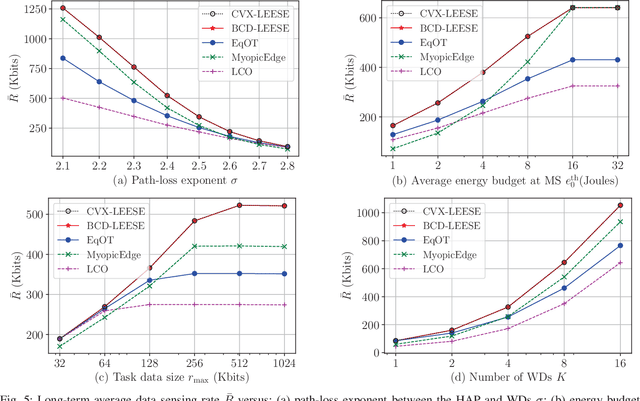

Energy-Efficient Online Data Sensing and Processing in Wireless Powered Edge Computing Systems

Nov 04, 2021

Abstract: This paper focuses on developing an energy-efficient online data processing strategy for wireless powered mobile edge computing (MEC) systems under stochastic fading channels. In particular, we consider a hybrid access point (HAP) that transmits RF energy to multiple wireless devices (WDs) and processes the sensing data offloaded from them. Under an average power constraint at the HAP, we aim to maximize the long-term average data sensing rate of the WDs while maintaining task data queue stability. We formulate the problem as a multi-stage stochastic optimization that controls the energy transfer and task data processing in sequential time slots. Without knowledge of future channel fading, it is very challenging to determine the sequential control actions, which are tightly coupled by the battery and data buffer dynamics. To solve the problem, we propose an online algorithm named LEESE that applies the perturbed Lyapunov optimization technique to decompose the multi-stage stochastic problem into per-slot deterministic optimization problems. We show that each per-slot problem can be equivalently transformed into a convex optimization problem. To facilitate online implementation in large-scale MEC systems, instead of solving the per-slot problem with off-the-shelf convex algorithms, we propose a block coordinate descent (BCD)-based method that produces close-to-optimal solutions in less than 0.04% of the computation delay required by off-the-shelf convex algorithms. Simulation results demonstrate that the proposed LEESE algorithm can provide a 21.9% higher data sensing rate than the representative benchmark methods considered, while incurring sub-millisecond computation delay suitable for real-time control under fading channels.
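
The Lyapunov decomposition can be illustrated with a generic drift-plus-penalty controller, which is simpler than the perturbed version used by LEESE: there is no battery, no energy transfer, and only a single queue. In this hypothetical model, a data queue $Q$ stores sensed bits, a virtual queue $Z$ enforces the average power budget, and in each slot the controller maximizes $(V - Q)a + Q\log_2(1 + hp) - Zp$ over the sensing rate $a \in [0, a_{\max}]$ and processing power $p \in [0, p_{\max}]$, given the current channel gain $h$. All names, parameters, the $\log_2(1+hp)$ service rate, and the exponential (Rayleigh power) channel model are assumptions.

```python
import math
import random


def drift_plus_penalty_step(Q, Z, h, V, a_max, p_max):
    """One slot of a generic drift-plus-penalty controller (hypothetical model).

    Maximizes (V - Q) * a over a in [0, a_max] and Q * log2(1 + h * p) - Z * p
    over p in [0, p_max], where Q is the data backlog and Z the virtual power queue.
    """
    a = a_max if Q < V else 0.0  # sensing rate: threshold rule on the backlog
    if Q <= 0.0 or h <= 0.0:
        p = 0.0  # nothing to process, or no usable channel
    elif Z <= 0.0:
        p = p_max  # power budget not currently binding
    else:
        # Stationary point of the concave objective, clipped to [0, p_max].
        p = min(max(Q / (Z * math.log(2.0)) - 1.0 / h, 0.0), p_max)
    return a, p


def simulate(T=20_000, V=50.0, a_max=3.0, p_max=5.0, p_avg=1.0, seed=0):
    """Run the controller over T slots with i.i.d. exponential channel power gains (mean 1)."""
    rng = random.Random(seed)
    Q = Z = 0.0
    total_a = total_p = max_Q = 0.0
    for _ in range(T):
        h = rng.expovariate(1.0)
        a, p = drift_plus_penalty_step(Q, Z, h, V, a_max, p_max)
        served = math.log2(1.0 + h * p)  # bits processed in this slot (assumed rate model)
        Q = max(Q - served, 0.0) + a     # data queue update
        Z = max(Z + p - p_avg, 0.0)      # virtual queue for the average power constraint
        total_a += a
        total_p += p
        max_Q = max(max_Q, Q)
    return total_a / T, total_p / T, max_Q, Z


avg_rate, avg_power, max_backlog, final_Z = simulate()
print(avg_rate, avg_power, max_backlog)
```

By construction $Z$ grows whenever the power budget is exceeded, so the time-average power can exceed the budget by at most $Z(T)/T$, and the threshold rule for $a$ keeps the backlog below $V + a_{\max}$. A larger $V$ favors the sensing rate at the cost of a larger backlog.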

Online Cognitive Data Sensing and Processing Optimization in Energy-harvesting Edge Computing Systems

Jun 27, 2021

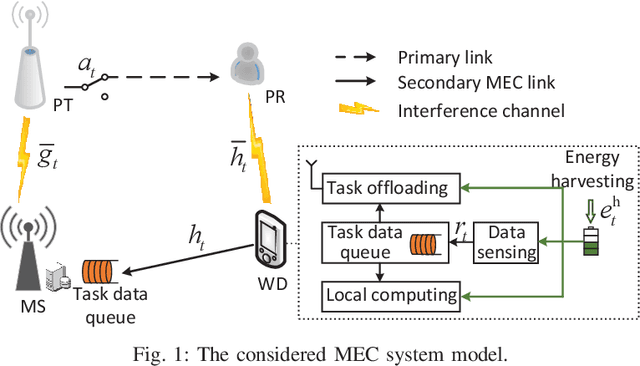

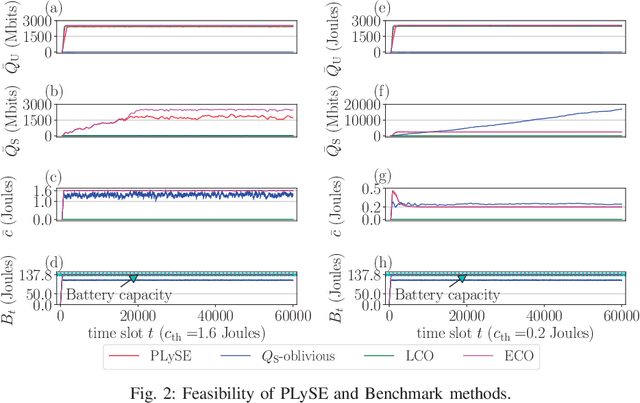

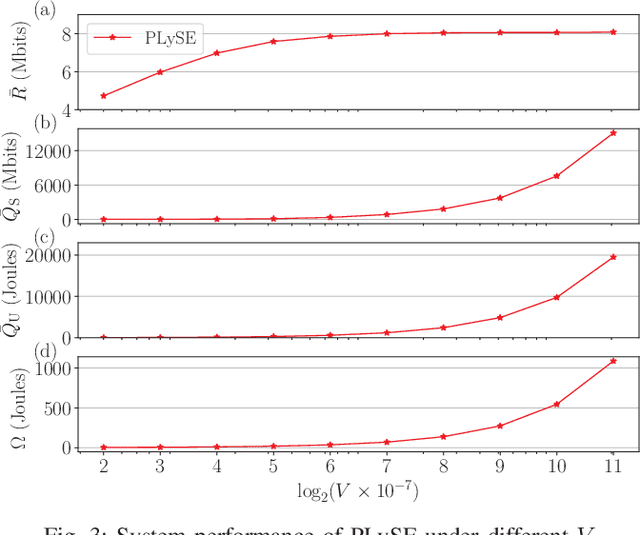

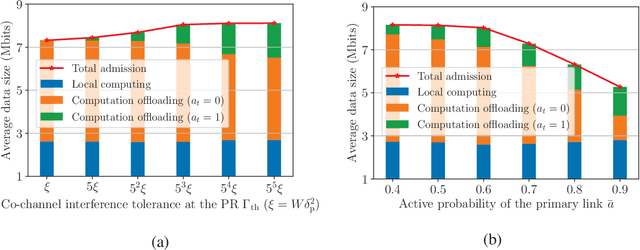

Abstract: Mobile edge computing (MEC) has recently become a prevailing technique to alleviate the intensive computation burden in Internet of Things (IoT) networks. However, limited device battery capacity and scarce spectrum resources significantly restrict the data processing performance of MEC-enabled IoT networks. To address these two performance limitations, we consider in this paper an MEC-enabled IoT system with an energy harvesting (EH) wireless device (WD) that opportunistically accesses the licensed spectrum of an overlaid primary communication link for task offloading. We aim to maximize the long-term average sensing rate of the WD subject to the quality of service (QoS) requirement of the primary link, the average power constraint of the MEC server (MS), and the data queue stability of both the MS and the WD. We formulate the problem as a multi-stage stochastic optimization and propose an online algorithm named PLySE that applies the perturbed Lyapunov optimization technique to decompose the original problem into per-slot deterministic optimization problems. For each per-slot problem, we derive the closed-form optimal solution of data sensing and processing control to facilitate low-complexity real-time implementation. Interestingly, our analysis finds that the optimal solution exhibits a threshold-based structure. Simulation results corroborate our analysis and demonstrate a data sensing rate improvement of more than 46.7% for the proposed PLySE over representative benchmark methods.

Optimal Model Placement and Online Model Splitting for Device-Edge Co-Inference

May 28, 2021

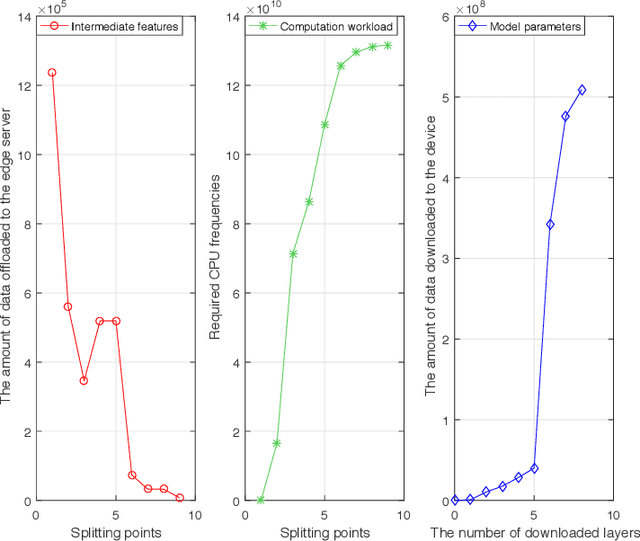

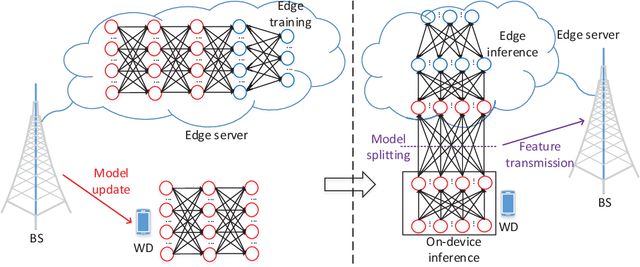

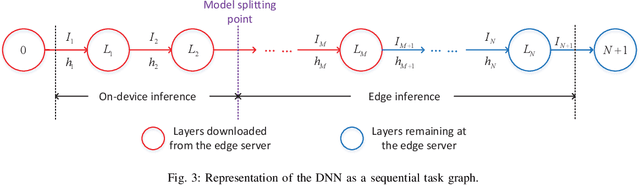

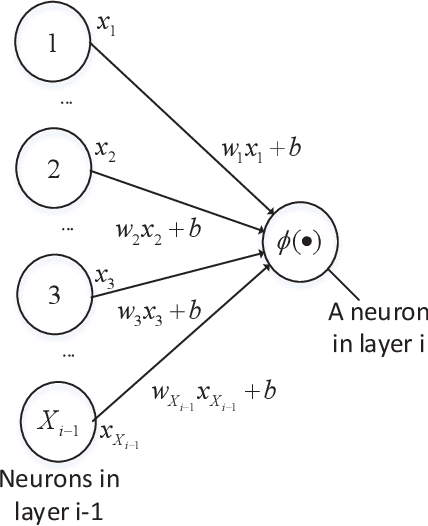

Abstract: Device-edge co-inference opens up new possibilities for resource-constrained wireless devices (WDs) to execute deep neural network (DNN)-based applications with heavy computation workloads. In particular, the WD executes the first few layers of the DNN and sends the intermediate features to the edge server, which processes the remaining layers. The model splitting decision determines a tradeoff between local computation cost and communication overhead. In practice, the DNN model is re-trained and updated periodically at the edge server. Once the DNN parameters are regenerated, part of the updated model must be placed at the WD to facilitate on-device inference. In this paper, we study the joint optimization of the model placement and online model splitting decisions to minimize the energy-and-time cost of device-edge co-inference in the presence of wireless channel fading. The problem is challenging because the model placement and model splitting decisions are strongly coupled while operating on two different time scales. We first tackle online model splitting by formulating an optimal stopping problem, where the finite horizon of the problem is determined by the model placement decision. In addition to deriving the optimal model splitting rule based on backward induction, we further investigate a simple one-stage look-ahead rule, for which we are able to obtain analytical expressions of the model splitting decision. This analysis allows us to efficiently optimize the model placement decision on a larger time scale. In particular, we obtain a closed-form model placement solution for the fully-connected multilayer perceptron with an equal number of neurons in each layer. Simulation results validate the superior performance of the joint optimal model placement and splitting with various DNN structures.
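
The optimal stopping view of online model splitting can be sketched with a toy cost model. Assume a hypothetical layer-wise profile: `local_cost[k]` is the cumulative on-device cost of computing up to split stage k, `feature_bits[k]` is the size of the features sent if the DNN is split at stage k, and the offloading cost is `feature_bits[k] / log2(1 + h)` for a channel gain `h` drawn i.i.d. from a discrete distribution at every stage. Backward induction gives the optimal expected cost, and the one-stage look-ahead rule splits as soon as offloading now costs no more than the expected cost of offloading at the next stage. None of these cost expressions come from the paper.

```python
import math


def offload_cost(k, h, local_cost, feature_bits):
    """Hypothetical cost of splitting at stage k: local compute so far plus transmission time."""
    return local_cost[k] + feature_bits[k] / math.log2(1.0 + h)


def expected_offload_costs(channel_pmf, local_cost, feature_bits):
    """E_h[cost of splitting at stage k] for every k, i.e. the cost of each fixed split point."""
    return [
        sum(p * offload_cost(k, h, local_cost, feature_bits) for h, p in channel_pmf)
        for k in range(len(local_cost))
    ]


def optimal_stopping_value(channel_pmf, local_cost, feature_bits):
    """Optimal expected cost via backward induction: V_k = E_h[min(cost_k(h), V_{k+1})]."""
    n = len(local_cost)
    # Finite horizon: the split is forced at the last stage.
    value = expected_offload_costs(channel_pmf, local_cost, feature_bits)[n - 1]
    for k in range(n - 2, -1, -1):
        value = sum(p * min(offload_cost(k, h, local_cost, feature_bits), value)
                    for h, p in channel_pmf)
    return value


def lookahead_value(channel_pmf, local_cost, feature_bits):
    """Expected cost of the one-stage look-ahead rule: split at k if cost_k(h) <= E[cost_{k+1}]."""
    n = len(local_cost)
    expected = expected_offload_costs(channel_pmf, local_cost, feature_bits)
    value = expected[n - 1]
    for k in range(n - 2, -1, -1):
        total = 0.0
        for h, p in channel_pmf:
            cost_now = offload_cost(k, h, local_cost, feature_bits)
            total += p * (cost_now if cost_now <= expected[k + 1] else value)
        value = total
    return value


# Hypothetical 4-stage profile: features shrink with depth while local cost accumulates.
local_cost = [0.0, 1.0, 2.5, 4.0]
feature_bits = [8.0, 4.0, 2.0, 1.0]
channel_pmf = [(0.5, 0.5), (3.0, 0.5)]  # (gain, probability): a bad and a good channel state

print("optimal:", optimal_stopping_value(channel_pmf, local_cost, feature_bits))
print("look-ahead:", lookahead_value(channel_pmf, local_cost, feature_bits))
print("best fixed split:", min(expected_offload_costs(channel_pmf, local_cost, feature_bits)))
```

For this profile, the look-ahead rule is slightly costlier than the backward-induction rule, while both beat every fixed split point, because they react to the channel realized at each stage.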
