Shuoyao Wang

Robust MIMO Semantic Communication with Imperfect CSI via Knowledge Distillation

Sep 04, 2025

Abstract: Semantic communication (SemComm) has emerged as a new communication paradigm. To enhance efficiency, multiple-input multiple-output (MIMO) technology has been further integrated into SemComm systems. However, existing MIMO SemComm systems assume perfect channel matrix estimation for channel-adaptive joint source-channel coding, which is impractical due to hardware and pilot overhead constraints. In this paper, we propose a semantic image transmission system with channel matrix and channel noise adaptation, named HANA-JSCC, to cope with channel estimation errors in MIMO systems. We propose a channel matrix adaptor that collaborates with the channel codec to adapt to misaligned channel state information (CSI), thereby mitigating the impact of estimation errors. Since the mapping from the estimated channel matrix to the true channel matrix is ill-posed (one-to-many), we further introduce a two-stage training strategy with knowledge distillation to overcome the convergence difficulties this ill-posedness causes. Compared with state-of-the-art benchmarks, HANA-JSCC achieves $0.40\sim0.54$ dB higher average performance across various noise and estimation error levels on several datasets.
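The abstract hinges on the gap between the estimated and the true channel matrix. A common way to model imperfect CSI in simulation, assumed here rather than taken from the paper, is additive Gaussian estimation error, $\hat{\mathbf{H}} = \mathbf{H} + \Delta\mathbf{H}$ with i.i.d. $\mathcal{CN}(0, \sigma_e^2)$ entries. The minimal NumPy sketch below draws a Rayleigh MIMO channel, corrupts it this way, and sends a symbol vector through $\mathbf{y} = \mathbf{H}\mathbf{x} + \mathbf{n}$. The function names and parameter values are hypothetical. Because many true channels lie within the error ball around a single estimate, recovering $\mathbf{H}$ from $\hat{\mathbf{H}}$ is one-to-many.

```python
import numpy as np

def complex_gaussian(rng, shape, var):
    """Draw i.i.d. CN(0, var) samples: real and imaginary parts each have variance var/2."""
    scale = np.sqrt(var / 2.0)
    return scale * (rng.standard_normal(shape) + 1j * rng.standard_normal(shape))

def imperfect_csi(rng, n_rx, n_tx, sigma_e2):
    """Return (H, H_hat): a Rayleigh channel and its noisy estimate H + dH (assumed error model)."""
    H = complex_gaussian(rng, (n_rx, n_tx), 1.0)                # true channel, unit-power entries
    H_hat = H + complex_gaussian(rng, (n_rx, n_tx), sigma_e2)   # estimate available to the codec
    return H, H_hat

def mimo_transmit(rng, H, x, noise_var):
    """Pass symbols x through y = H x + n with CN(0, noise_var) noise."""
    return H @ x + complex_gaussian(rng, (H.shape[0],), noise_var)

rng = np.random.default_rng(0)
H, H_hat = imperfect_csi(rng, n_rx=64, n_tx=64, sigma_e2=0.1)
nmse = np.sum(np.abs(H_hat - H) ** 2) / np.sum(np.abs(H) ** 2)
print(f"normalized estimation error: {nmse:.3f}")  # close to sigma_e2 = 0.1 for large matrices

x = complex_gaussian(rng, (64,), 1.0)
y = mimo_transmit(rng, H, x, noise_var=0.01)
```

A CSI-adaptive codec trained only on `H` would see `H_hat` at test time; that train/test mismatch is the situation the abstract's adaptor and distillation stage are meant to handle.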

Aligning Beam with Imbalanced Multi-modality: A Generative Federated Learning Approach

Apr 21, 2025

Abstract: As vehicle intelligence advances, multi-modal sensing-aided communication emerges as a key enabler for reliable Vehicle-to-Everything (V2X) connectivity through precise environmental characterization. As centralized learning may suffer from data privacy risks, model heterogeneity, and communication overhead, federated learning (FL) has been introduced to support V2X. However, the practical deployment of FL faces critical challenges: model performance degradation from label imbalance across vehicles, and training instability induced by modality disparities among sensor-equipped agents. To overcome these limitations, we propose a generative FL approach for beam selection (GFL4BS). Our solution features two core innovations: 1) an adaptive zero-shot multi-modal generator coupled with spectral-regularized loss functions to enhance the expressiveness of synthetic data, compensating for both label scarcity and missing modalities; 2) a hybrid training paradigm integrating feature fusion with decentralized optimization to ensure training resilience while minimizing communication costs. Experimental evaluations demonstrate significant improvements over baselines, achieving 16.2% higher accuracy than the current state of the art under severe label imbalance while maintaining a success rate above 70% even when two agents lack both LiDAR and RGB camera inputs.
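Federated learning lets vehicles train locally and share only model parameters. The paper's hybrid paradigm is more elaborate, but the standard aggregation step such work typically starts from is FedAvg: the server averages client parameters weighted by local sample counts. The pure-Python sketch below shows only that generic step; representing a model as a flat list of floats is a simplifying assumption, and nothing here is GFL4BS itself.

```python
from typing import List, Sequence

def fedavg(client_params: Sequence[Sequence[float]], num_samples: Sequence[int]) -> List[float]:
    """Weighted average of client parameter vectors (FedAvg aggregation).

    Each client's weight is its share of the total number of training samples,
    so vehicles with more local data pull the global model harder.
    """
    if len(client_params) != len(num_samples) or not client_params:
        raise ValueError("need one sample count per client and at least one client")
    total = sum(num_samples)
    dim = len(client_params[0])
    global_params = [0.0] * dim
    for params, n in zip(client_params, num_samples):
        if len(params) != dim:
            raise ValueError("all clients must share the same parameter shape")
        weight = n / total
        for i, p in enumerate(params):
            global_params[i] += weight * p
    return global_params

# Two hypothetical vehicles: the second holds three times as much labelled data.
print(fedavg([[1.0, 0.0], [5.0, 4.0]], [100, 300]))  # [4.0, 3.0]
```

Sample-count weighting is also where label imbalance bites: a vehicle with many samples concentrated on a few beam indices dominates the average.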

Scalable Multi-task Edge Sensing via Task-oriented Joint Information Gathering and Broadcast

Apr 16, 2025

Abstract: Recent advances in edge computing technology enable significant improvements in the sensing performance of Internet of Things (IoT) networks. In particular, an edge server (ES) is responsible for gathering sensing data from distributed sensing devices and immediately executing different sensing tasks to accommodate the heterogeneous service demands of mobile users. However, as the number of users surges and the sensing tasks become increasingly compute-intensive, the huge computation workload and data transmission volume may overwhelm an edge system with limited resources. Accordingly, in this paper we propose a scalable edge sensing framework for multi-task execution, in the sense that the computation workload and communication overhead of the ES do not increase with the number of downstream users or tasks. By exploiting task-relevant correlations, the proposed scheme implements a unified encoder at the ES, which produces a common low-dimensional message from the sensing data and broadcasts it to all users to execute their individual tasks. To achieve high sensing accuracy, we extend the well-known information bottleneck theory to a multi-task scenario to jointly optimize the information gathering and broadcast processes. We also develop an efficient two-step training procedure to optimize the parameters of the neural network-based codecs deployed in the edge sensing system. Experimental results show that the proposed scheme significantly outperforms representative benchmark methods in multi-task inference accuracy. Moreover, the proposed scheme scales with network size, maintaining almost constant computation delay with less than 1% degradation in inference performance when the number of users increases fourfold.
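A multi-task information bottleneck trades off how useful the broadcast message $Z$ is for each task against how much it retains about the raw sensing data $X$. A common tractable surrogate, assumed here and not necessarily the paper's exact objective, is the variational IB loss $\mathcal{L} = \sum_k \mathrm{CE}_k + \beta\,\mathrm{KL}\big(q(z \mid x)\,\|\,\mathcal{N}(0, I)\big)$, whose Gaussian KL term has a closed form. The sketch below evaluates that loss for one sample, given hypothetical per-task cross-entropies and an encoder's mean and log-variance outputs.

```python
import math
from typing import Sequence

def gaussian_kl_to_standard(mu: Sequence[float], logvar: Sequence[float]) -> float:
    """Closed-form KL( N(mu, diag(exp(logvar))) || N(0, I) ), summed over dimensions."""
    return sum(0.5 * (math.exp(lv) + m * m - 1.0 - lv) for m, lv in zip(mu, logvar))

def multitask_vib_loss(task_ce: Sequence[float], mu: Sequence[float],
                       logvar: Sequence[float], beta: float) -> float:
    """Sum of per-task cross-entropies plus beta times the rate (KL) term.

    One shared message z serves every task, so the KL term is counted once
    no matter how many tasks decode from it.
    """
    return sum(task_ce) + beta * gaussian_kl_to_standard(mu, logvar)

# A message distribution that exactly matches the prior carries no rate penalty.
print(gaussian_kl_to_standard([0.0, 0.0], [0.0, 0.0]))  # 0.0
# Hypothetical numbers: three tasks decoding from a 2-D message.
print(multitask_vib_loss([0.7, 0.4, 1.1], mu=[1.0, -0.5], logvar=[0.0, -1.0], beta=0.01))
```

Raising `beta` pushes the shared message toward fewer bits, which is the knob that trades communication overhead against multi-task accuracy in this simplified view.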

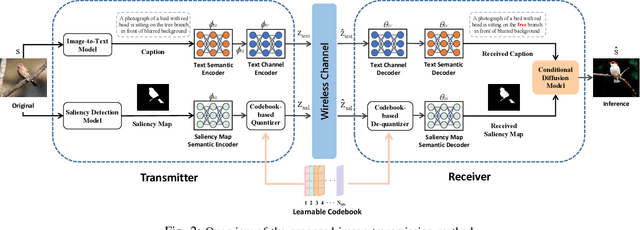

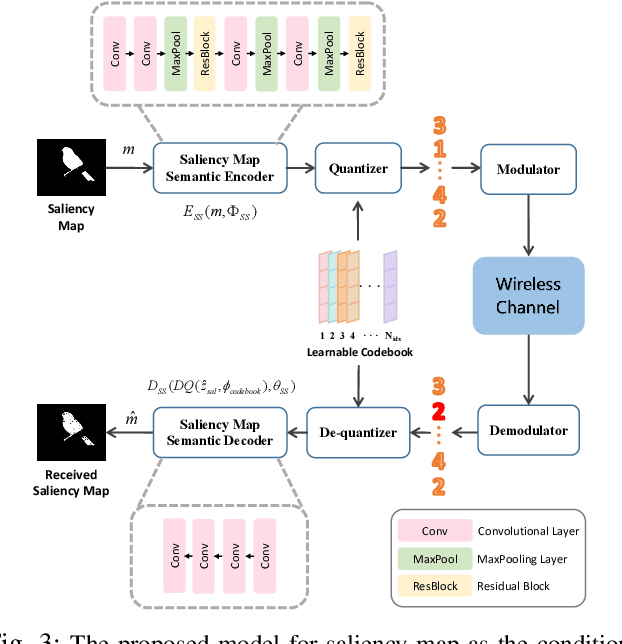

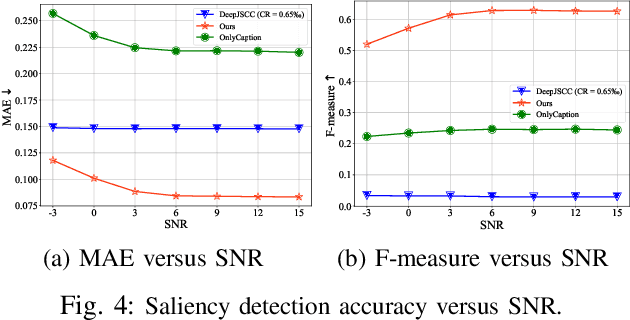

Low-Rate Semantic Communication with Codebook-based Conditional Generative Models

Apr 07, 2025

Abstract: Generative semantic communication models are reshaping semantic communication frameworks by moving beyond pixel-wise optimization to align with human perception. However, many existing approaches prioritize image-level perceptual quality, often neglecting alignment with downstream tasks, which can lead to suboptimal semantic representations. This paper introduces an Ultra-Low Bitrate Semantic Communication (ULBSC) system that employs a conditional generative model and a learnable condition codebook. By integrating saliency conditions and image-level semantic information, the proposed method enables high-perceptual-quality and controllable task-oriented image transmission. Recognizing shared patterns among objects, we propose a codebook-assisted condition transmission method, integrated with joint source-channel coding (JSCC)-based text transmission, to establish ULBSC. The codebook serves as a knowledge base, reducing communication costs to achieve an ultra-low bitrate while enhancing robustness against noise and inaccuracies in saliency detection. Simulation results indicate that, under ultra-low bitrate conditions with an average compression ratio of 0.57‰, the proposed system delivers superior visual quality compared to traditional JSCC techniques and achieves higher saliency similarity between the generated and source images than state-of-the-art generative semantic communication methods.
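The bitrate saving from a condition codebook comes from sending an index instead of a feature vector: if transmitter and receiver share a codebook of $K$ entries, each condition costs $\lceil \log_2 K \rceil$ bits. The sketch below shows plain nearest-neighbour vector quantization with a fixed, hypothetical codebook; the paper's codebook is learned, and its exact lookup rule may differ.

```python
import math
from typing import List, Sequence, Tuple

def quantize(vector: Sequence[float], codebook: Sequence[Sequence[float]]) -> Tuple[int, int]:
    """Return (index of nearest codeword by squared Euclidean distance, bits needed per index)."""
    best_idx = min(
        range(len(codebook)),
        key=lambda k: sum((v - c) ** 2 for v, c in zip(vector, codebook[k])),
    )
    bits = max(1, math.ceil(math.log2(len(codebook))))
    return best_idx, bits

def dequantize(index: int, codebook: Sequence[Sequence[float]]) -> List[float]:
    """Receiver side: look the shared codeword back up."""
    return list(codebook[index])

codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical 4-entry codebook
idx, bits = quantize([0.9, 0.2], codebook)
print(idx, bits, dequantize(idx, codebook))  # 1 2 [1.0, 0.0]
```

The same mechanism suggests why a codebook can add robustness: a small perturbation of the condition usually still maps to the same codeword.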

Predictive Target-to-User Association in Complex Scenarios via Hybrid-Field ISAC Signaling

Jan 18, 2025

Abstract: This paper presents a novel and robust target-to-user (T2U) association framework to support reliable vehicle-to-infrastructure (V2I) networks that potentially operate within the hybrid field (near-field and far-field). To address the challenges posed by complex vehicle maneuvers and user association ambiguity, an interacting multiple-model filtering scheme is developed, which combines coordinated turn and constant velocity models for predictive beamforming. Building upon this foundation, a lightweight association scheme leverages user-specific integrated sensing and communication (ISAC) signaling while employing probabilistic data association to manage clutter measurements in dense traffic. Numerical results validate that the proposed framework significantly outperforms conventional methods in terms of both tracking accuracy and association reliability.
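An interacting multiple-model (IMM) filter runs one Kalman-type filter per motion model and mixes them by mode probability. The sketch below shows the two ingredients named in the abstract for a planar state $[x, v_x, y, v_y]$: the constant-velocity (CV) and coordinated-turn (CT, known turn rate $\omega$) transition matrices, plus the IMM mode-probability prediction $\bar{\mu}_j = \sum_i \pi_{ij} \mu_i$. The sampling interval, turn rate, and Markov switching matrix $\pi$ are hypothetical values, and the rest of the filter (covariance mixing, measurement updates, data association) is omitted.

```python
import numpy as np

def f_cv(T: float) -> np.ndarray:
    """Constant-velocity transition for state [x, vx, y, vy] over interval T."""
    return np.array([[1, T, 0, 0],
                     [0, 1, 0, 0],
                     [0, 0, 1, T],
                     [0, 0, 0, 1]], dtype=float)

def f_ct(T: float, omega: float) -> np.ndarray:
    """Coordinated-turn transition with known turn rate omega (rad/s); velocity rotates by omega*T."""
    s, c = np.sin(omega * T), np.cos(omega * T)
    return np.array([[1, s / omega,       0, -(1 - c) / omega],
                     [0, c,               0, -s],
                     [0, (1 - c) / omega, 1, s / omega],
                     [0, s,               0, c]], dtype=float)

def predict_mode_probs(mu: np.ndarray, Pi: np.ndarray) -> np.ndarray:
    """IMM mode-probability prediction: mu_bar[j] = sum_i Pi[i, j] * mu[i]."""
    return Pi.T @ mu

T, omega = 0.1, 0.3                      # hypothetical sampling interval (s) and turn rate (rad/s)
state = np.array([0.0, 10.0, 0.0, 0.0])  # moving along +x at 10 m/s
print(f_cv(T) @ state)                   # straight-line prediction
print(f_ct(T, omega) @ state)            # prediction that bends toward +y
Pi = np.array([[0.95, 0.05],             # rows: current model (CV, CT); columns: next model
               [0.10, 0.90]])
print(predict_mode_probs(np.array([0.8, 0.2]), Pi))
```

As $\omega \to 0$ the CT matrix reduces to the CV matrix, so the two models disagree only when the vehicle actually turns, which is when the mode probabilities shift.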

Digital Semantic Communications: An Alternating Multi-Phase Training Strategy with Mask Attack

Aug 09, 2024

Abstract: Semantic communication (SemComm) has emerged as a new communication paradigm. Most existing SemComm systems transmit continuously distributed signals in an analog fashion. However, the analog paradigm is not compatible with current digital communication frameworks. In this paper, we propose an alternating multi-phase training strategy (AMP) to enable joint training of the encoder and decoder networks through non-differentiable digital processes. AMP contains three training phases, aiming at feature extraction (FE), robustness enhancement (RE), and training-testing alignment (TTA), respectively. In particular, in the FE phase, we learn representations of semantic information by training the encoder and decoder end to end in an analog manner. When digital communication is taken into consideration, the domain shift between digital and analog transmission necessitates fine-tuning the encoder and decoder. To cope with joint training through the non-differentiable digital processes, in the RE phase we alternate between updating the decoder individually and jointly training the codec. To further boost robustness, we investigate a mask attack (MATK) in the RE phase that simulates an evident and severe bit-flipping effect in a differentiable manner. To address the training-testing inconsistency introduced by MATK, we employ an additional TTA phase that fine-tunes the decoder without MATK. Combining AMP with an information restoration network, we propose a digital SemComm system for image transmission, named AMP-SC. Compared with the representative benchmark, AMP-SC achieves $0.82 \sim 1.65$ dB higher average reconstruction performance on various representative datasets at different scales and over a wide range of signal-to-noise ratios.
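A digital link flips bits, and a hard flip offers no useful gradient. One simple way to write a flip that stays differentiable with respect to the transmitted values, assumed here for illustration and not claimed to be the paper's MATK, is to map bits to bipolar symbols $s \in \{-1, +1\}$ and multiply by $(1 - 2m)$, where $m$ is a random binary mask with flip probability $p$. Entries with $m = 1$ change sign, all others pass unchanged, and the operation is linear in $s$. All function names below are hypothetical.

```python
import random
from typing import List, Sequence

def sample_flip_mask(n: int, p: float, rng: random.Random) -> List[int]:
    """Bernoulli(p) mask: 1 marks a position whose bit is flipped."""
    return [1 if rng.random() < p else 0 for _ in range(n)]

def mask_attack(symbols: Sequence[float], mask: Sequence[int]) -> List[float]:
    """Flip bipolar symbols where mask == 1 via s * (1 - 2m).

    The output is a fixed linear function of s, so the derivative of each
    output with respect to its input symbol is simply 1 - 2m, unlike a hard
    threshold-and-flip.
    """
    return [s * (1 - 2 * m) for s, m in zip(symbols, mask)]

def bits_to_bipolar(bits: Sequence[int]) -> List[float]:
    """Map bit 0 -> +1.0 and bit 1 -> -1.0."""
    return [1.0 - 2.0 * b for b in bits]

rng = random.Random(0)
s = bits_to_bipolar([0, 1, 1, 0, 1, 0, 0, 1])
m = sample_flip_mask(len(s), p=0.25, rng=rng)   # hypothetical flip probability
print(m, mask_attack(s, m))
```

Setting `p` well above the link's real bit error rate gives the "evident and severe" corruption the abstract describes, at the cost of the train/test mismatch that the TTA phase then removes.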

Compression before Fusion: Broadcast Semantic Communication System for Heterogeneous Tasks

Jan 31, 2024

Abstract: Semantic communication has emerged as a paradigm shift in 6G away from conventional syntax-oriented communications. Recently, wireless broadcast technology has been introduced to support semantic communication systems toward higher communication efficiency. Nevertheless, existing broadcast semantic communication systems target a general representation within one stage and fail to balance inference accuracy among users. In this paper, the broadcast encoding process is decomposed into compression and fusion to improve communication efficiency with adaptation to tasks and channels. Particularly, we propose multiple task-channel-aware sub-encoders (TCEs) and a channel-aware feature fusion sub-encoder (CFE) for compression and fusion, respectively. In the TCEs, multiple local-channel-aware attention blocks are employed to extract and compress task-relevant information for each user. In the CFE, we introduce a global-channel-aware fine-tuning block to merge these compressed task-relevant signals into a compact broadcast signal. Notably, we identify the bottleneck in the proposed system, DeepBroadcast, and leverage information bottleneck theory to further optimize the parameter tuning of the TCEs and CFE. We substantiate our approach through experiments on a range of heterogeneous tasks over additive white Gaussian noise (AWGN), Rayleigh fading, and Rician fading channels. Simulation results show that the proposed DeepBroadcast outperforms state-of-the-art methods.
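The experiments use AWGN, Rayleigh fading, and Rician fading channels. For reference, here is a minimal flat-fading sketch of all three as $y = h x + n$: AWGN fixes $h = 1$, Rayleigh draws $h \sim \mathcal{CN}(0, 1)$, and Rician adds a line-of-sight component weighted by the K-factor, $h = \sqrt{K/(K+1)} + \sqrt{1/(K+1)}\, g$ with $g \sim \mathcal{CN}(0, 1)$. The unit-power normalization, zero line-of-sight phase, default K-factor, and SNR parameterization are assumptions; the abstract does not state the paper's channel settings.

```python
import math
import random

def fading_coefficient(kind: str, rng: random.Random, k_factor: float = 3.0) -> complex:
    """Draw one flat-fading coefficient with E|h|^2 = 1 for 'awgn', 'rayleigh' or 'rician'."""
    if kind == "awgn":
        return 1.0 + 0j
    g = complex(rng.gauss(0, math.sqrt(0.5)), rng.gauss(0, math.sqrt(0.5)))  # CN(0, 1)
    if kind == "rayleigh":
        return g
    if kind == "rician":
        los = math.sqrt(k_factor / (k_factor + 1))   # deterministic line-of-sight part
        nlos = math.sqrt(1 / (k_factor + 1))         # scattered part
        return los + nlos * g
    raise ValueError(f"unknown channel kind: {kind}")

def transmit(x: complex, kind: str, snr_db: float, rng: random.Random) -> complex:
    """y = h x + n for a unit-power symbol x at the given SNR (dB)."""
    h = fading_coefficient(kind, rng)
    noise_var = 10 ** (-snr_db / 10)
    std = math.sqrt(noise_var / 2)
    n = complex(rng.gauss(0, std), rng.gauss(0, std))
    return h * x + n

rng = random.Random(42)
for kind in ("awgn", "rayleigh", "rician"):
    power = sum(abs(fading_coefficient(kind, rng)) ** 2 for _ in range(20000)) / 20000
    print(kind, round(power, 2))  # each close to 1.0
print(transmit(1 + 0j, "rician", snr_db=10.0, rng=rng))
```

Normalizing every channel to unit average power keeps the SNR comparable across the three cases, so differences in results reflect fading statistics rather than gain.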

Enhancement or Super-Resolution: Learning-based Adaptive Video Streaming with Client-Side Video Processing

Jan 20, 2022

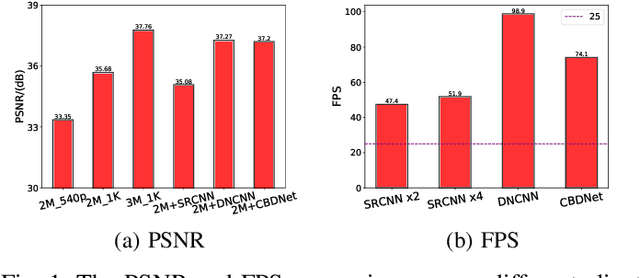

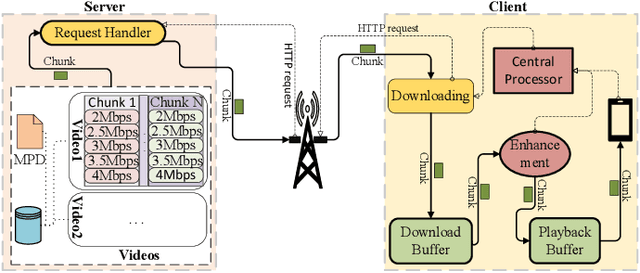

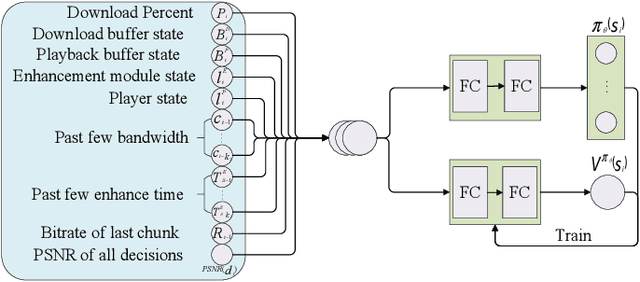

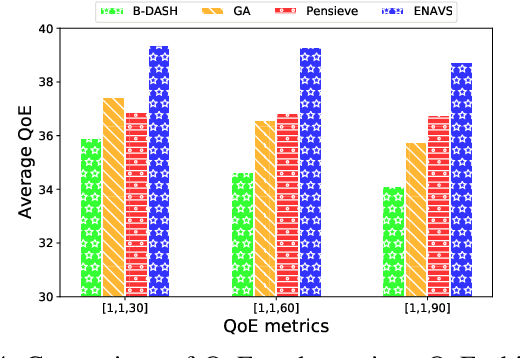

Abstract: The rapid development of multimedia and communication technology has created an urgent need for high-quality video streaming. However, robust video streaming under fluctuating network conditions and heterogeneous client computing capabilities remains a challenge. In this paper, we consider an enhancement-enabled video streaming network under a time-varying wireless network and limited computation capacity. "Enhancement" means that the client can improve the quality of the downloaded video segments via image processing modules. We aim to design a joint bitrate adaptation and client-side enhancement algorithm that maximizes the quality of experience (QoE). We formulate the problem as a Markov decision process (MDP) and propose a deep reinforcement learning (DRL)-based framework, named ENAVS. As video streaming quality is mainly affected by video compression, we demonstrate that the video enhancement algorithm outperforms the super-resolution algorithm in terms of signal-to-noise ratio and frames per second, making it a better choice for client-side processing in video streaming. Finally, we implement ENAVS and report extensive testbed results under real-world bandwidth traces and videos. The results show that ENAVS delivers 5% to 14% higher QoE than conventional adaptive bitrate (ABR) streaming under the same bandwidth and computing power conditions.
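The abstract does not spell out ENAVS's reward, but DRL-based adaptive bitrate work commonly scores a session with a linear QoE: summed per-segment quality, minus a rebuffering penalty, minus a smoothness penalty on quality changes. The sketch below assumes that form and adds a hypothetical per-segment `enhancement_gain` to stand in for client-side enhancement. The penalty weights are placeholders, not values from the paper.

```python
from typing import Sequence

def session_qoe(quality: Sequence[float], rebuffer_s: Sequence[float],
                enhancement_gain: Sequence[float], rebuf_penalty: float = 4.0,
                smooth_penalty: float = 1.0) -> float:
    """Linear QoE: sum of (enhanced) quality - rebuffering penalty - quality-switch penalty.

    quality[n]          quality score of segment n as downloaded
    rebuffer_s[n]       stall time (seconds) incurred while fetching segment n
    enhancement_gain[n] hypothetical quality gain from client-side enhancement of segment n
    """
    if not (len(quality) == len(rebuffer_s) == len(enhancement_gain)):
        raise ValueError("per-segment inputs must have equal length")
    effective = [q + g for q, g in zip(quality, enhancement_gain)]
    total_quality = sum(effective)
    stall_cost = rebuf_penalty * sum(rebuffer_s)
    switch_cost = smooth_penalty * sum(abs(b - a) for a, b in zip(effective, effective[1:]))
    return total_quality - stall_cost - switch_cost

print(session_qoe([1.0, 2.0, 2.0], [0.0, 0.5, 0.0], [0.0, 0.0, 0.0]))  # 2.0
print(session_qoe([1.0, 2.0, 2.0], [0.0, 0.5, 0.0], [0.5, 0.5, 0.5]))  # 3.5
```

In this simplified view, the joint decision is made per segment: the bitrate sets `quality` and, through download time, `rebuffer_s`, while spending client compute on enhancement sets `enhancement_gain`.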

Interpretable Multimodal Learning for Intelligent Regulation in Online Payment Systems

Jun 10, 2020

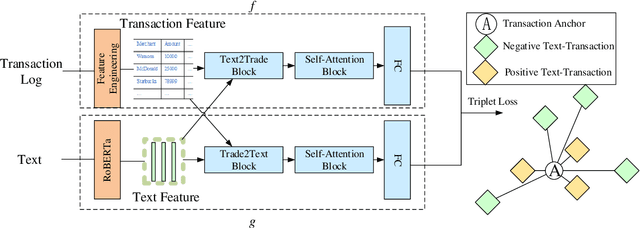

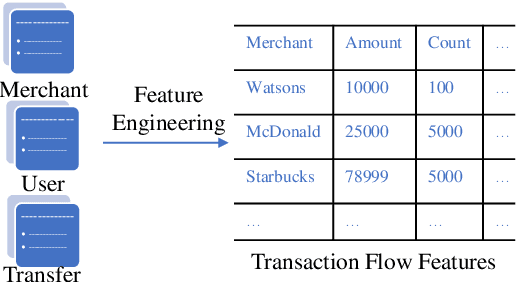

Abstract: With the explosive growth of transaction activities in online payment systems, effective and real-time regulation has become a critical problem for payment service providers. Thanks to the rapid development of artificial intelligence (AI), AI-enabled regulation emerges as a promising solution. One main challenge of AI-enabled regulation is how to utilize multimedia information, i.e., multimodal signals, in Financial Technology (FinTech). Inspired by the attention mechanism in natural language processing, we propose a novel cross-modal and intra-modal attention network (CIAN) to investigate the relation between text and transactions. More specifically, we integrate the text and transaction information to enhance text-trade joint embedding learning, which pulls positive pairs together and pushes negative pairs away from each other. Another challenge of intelligent regulation is the interpretability of complicated machine learning models. To meet the requirements of financial regulation, we design a CIAN-Explainer to interpret how the attention mechanism interacts with the original features, which is formulated as a low-rank matrix approximation problem. With real datasets from the largest online payment system, WeChat Pay of Tencent, we conduct experiments to validate the practical application value of CIAN, where our method outperforms state-of-the-art methods.
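CIAN-Explainer is formulated as a low-rank matrix approximation problem. In its unconstrained Frobenius-norm form, $\min_{\operatorname{rank}(\mathbf{B}) \le r} \|\mathbf{A} - \mathbf{B}\|_F$, the optimum is the truncated SVD (Eckart-Young theorem). The NumPy sketch below applies it to a hypothetical attention-weight matrix; the paper's actual formulation may add constraints that this sketch omits.

```python
import numpy as np

def low_rank_approx(A: np.ndarray, r: int) -> np.ndarray:
    """Best rank-r approximation of A in Frobenius norm via truncated SVD (Eckart-Young)."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    r = min(r, s.size)
    return (U[:, :r] * s[:r]) @ Vt[:r, :]

rng = np.random.default_rng(1)
# Hypothetical attention weights: 6 text tokens x 5 transaction features, softmax over features.
logits = rng.standard_normal((6, 5))
attn = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)

approx = low_rank_approx(attn, r=2)
err = np.linalg.norm(attn - approx)  # Frobenius norm of the residual
print(np.linalg.matrix_rank(approx), round(float(err), 4))
```

The residual equals the root-sum-square of the discarded singular values, and the retained singular vectors summarize the dominant text-to-feature interaction patterns, which is one plausible reading of how a low-rank explainer aids interpretation.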
