Sukru Burc Eryilmaz

ScaleFold: Reducing AlphaFold Initial Training Time to 10 Hours

Apr 17, 2024

Abstract: AlphaFold2 has been hailed as a breakthrough in protein folding. It can rapidly predict protein structures with lab-grade accuracy. However, its implementation does not include the necessary training code. OpenFold is the first trainable public reimplementation of AlphaFold. The AlphaFold training procedure is prohibitively time-consuming and yields diminishing returns when scaled to more compute resources. In this work, we conducted a comprehensive analysis of the AlphaFold training procedure based on OpenFold and identified inefficient communications and overhead-dominated computations as the key factors that prevented AlphaFold training from scaling effectively. We introduced ScaleFold, a systematic training method that incorporates optimizations targeting these factors. ScaleFold successfully scaled AlphaFold training to 2080 NVIDIA H100 GPUs with high resource utilization. In the MLPerf HPC v3.0 benchmark, ScaleFold finished the OpenFold benchmark in 7.51 minutes, a speedup of over $6\times$ relative to the baseline. For training the AlphaFold model from scratch, ScaleFold completed the pretraining in 10 hours, a significant improvement over the seven days required by the original AlphaFold pretraining baseline.

Device and System Level Design Considerations for Analog-Non-Volatile-Memory Based Neuromorphic Architectures

May 06, 2016

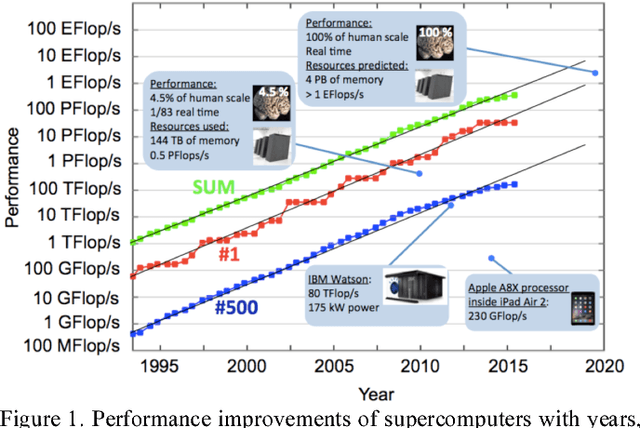

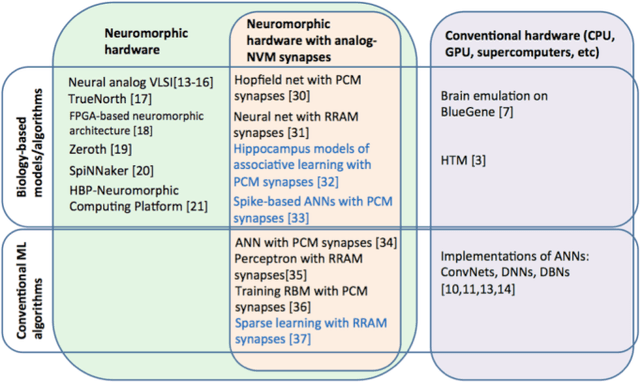

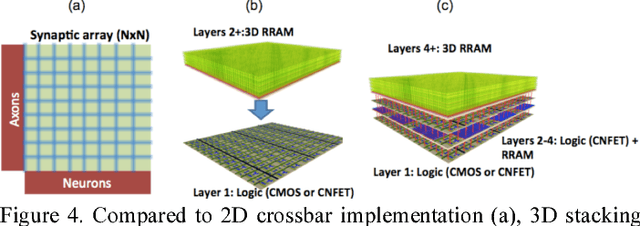

Abstract: This paper gives an overview of recent progress in the brain-inspired computing field, with a focus on implementation using emerging memories as electronic synapses. Design considerations and challenges, such as requirements and design targets for multilevel states, device variability, programming energy, array-level connectivity, fan-in/fan-out, wire energy, and IR drop, are presented. Wires are increasingly important in design decisions, especially for large systems, and cycle-to-cycle variations have a large impact on learning performance.

* 4 pages, In Electron Devices Meeting (IEDM), 2015 IEEE International (pp. 4.1). IEEE. Original paper can be found here: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7409622. Abstract can be found here: http://ieeexplore.ieee.org/xpl/articleDetails.jsp?arnumber=7409622&refinements%3D4224410500%26filter%3DAND%28p_IS_Number%3A7409598%29

Brain-like associative learning using a nanoscale non-volatile phase change synaptic device array

Jul 14, 2014

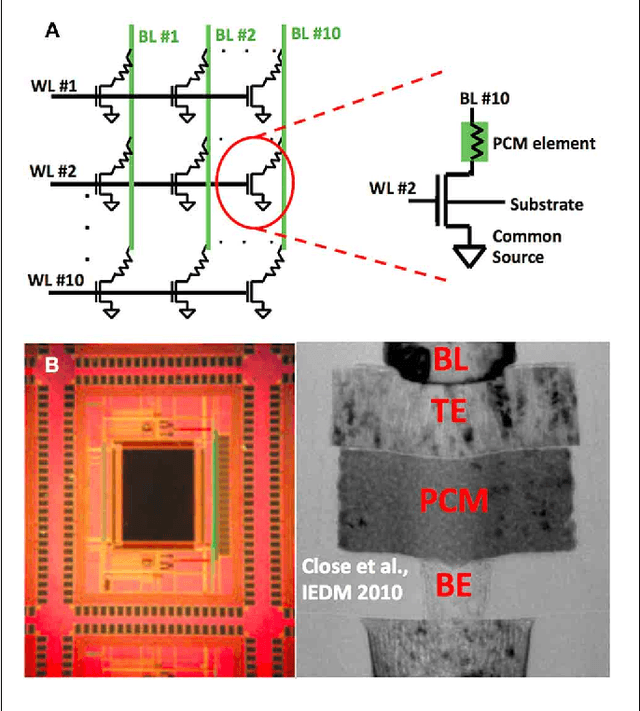

Abstract: Recent advances in neuroscience, together with nanoscale electronic device technology, have generated great interest in realizing brain-like computing hardware using emerging nanoscale memory devices as synaptic elements. Although experimental work has demonstrated the operation of nanoscale synaptic elements at the single-device level, network-level studies have been limited to simulations. In this work, we experimentally demonstrate array-level associative learning using phase change synaptic devices connected in a grid-like configuration similar to the organization of the biological brain. Implementing Hebbian learning with phase change memory cells, the synaptic grid was able to store presented patterns and recall missing patterns in an associative, brain-like fashion. We found that the system is robust to device variations, and that large variations in cell resistance states can be accommodated by increasing the number of training epochs. We illustrated the tradeoff between the variation tolerance of the network and the overall energy consumption, and found that energy consumption decreases significantly for lower variation tolerance.

* Original article can be found here: http://journal.frontiersin.org/Journal/10.3389/fnins.2014.00205/abstract

Supervised classification-based stock prediction and portfolio optimization

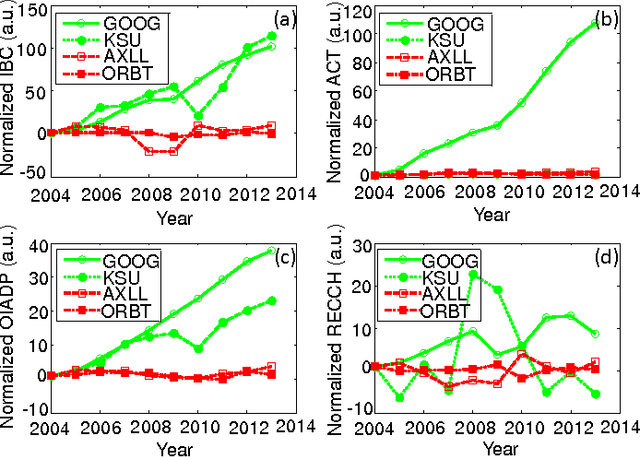

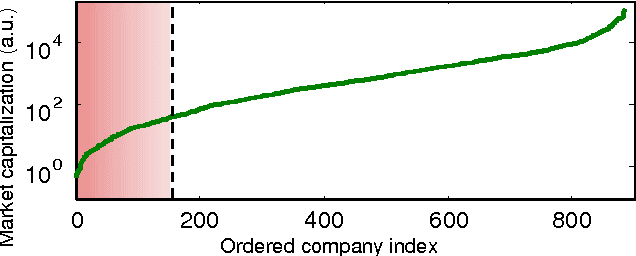

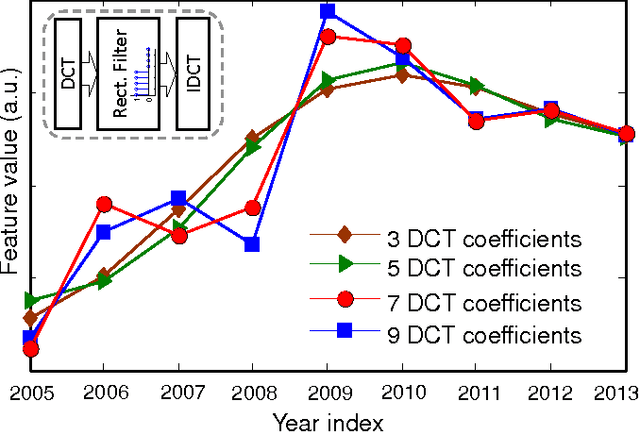

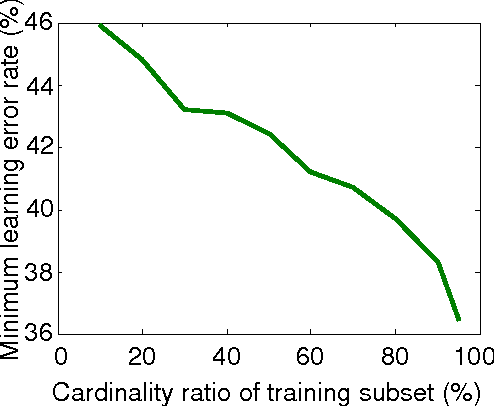

Jun 03, 2014

Abstract: As the number of publicly traded companies and the amount of their financial data grow rapidly, it is highly desirable to automate tracking, analysis, and eventually stock selection. There have been a few works focusing on estimating the stock prices of individual companies. However, many of those have worked with a very small number of financial parameters. In this work, we apply machine learning techniques to automated stock picking, using a larger number of financial parameters for individual companies than previous studies. Our approaches are based on the supervision of prediction parameters using company fundamentals, time-series properties, and correlation information between different stocks. We examined a variety of supervised learning techniques and found that using stock fundamentals is a useful approach for the classification problem when combined with the high-dimensional data handling capabilities of support vector machines. In an out-of-sample test, the portfolio our system suggests by predicting the behavior of stocks achieves on average 3% larger growth than the overall market over a 3-month period.
