Chung Lam

Training a Probabilistic Graphical Model with Resistive Switching Electronic Synapses

Oct 10, 2016

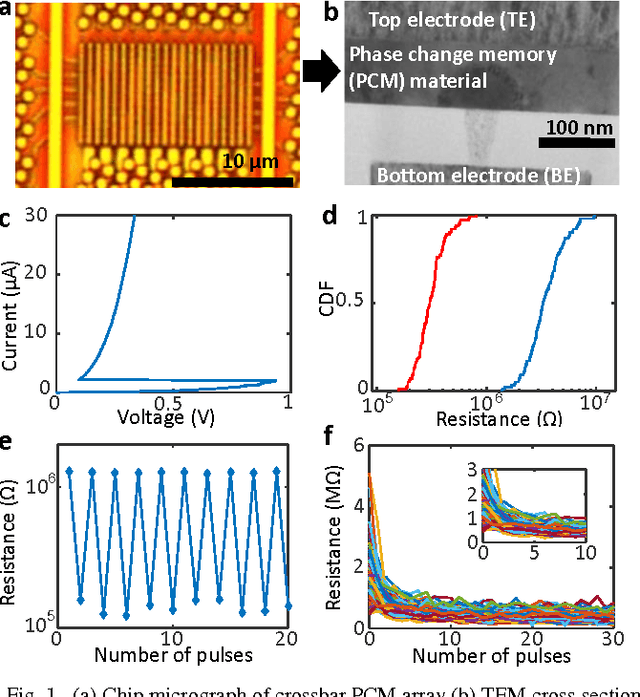

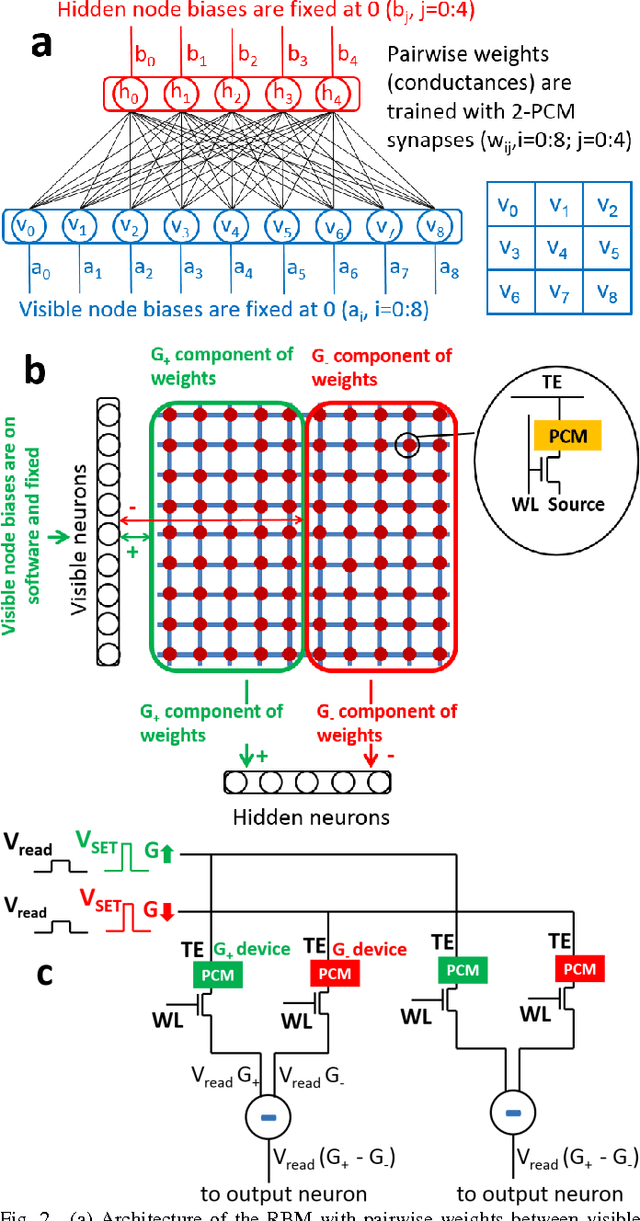

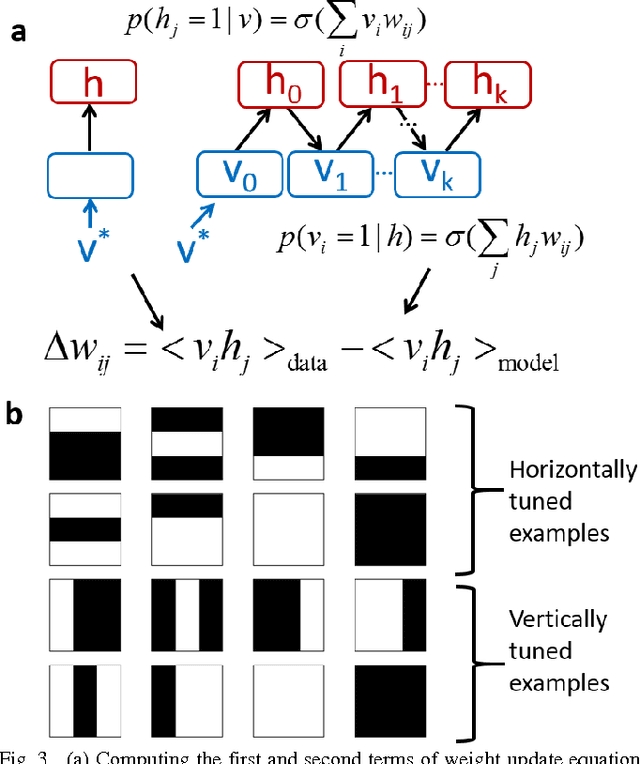

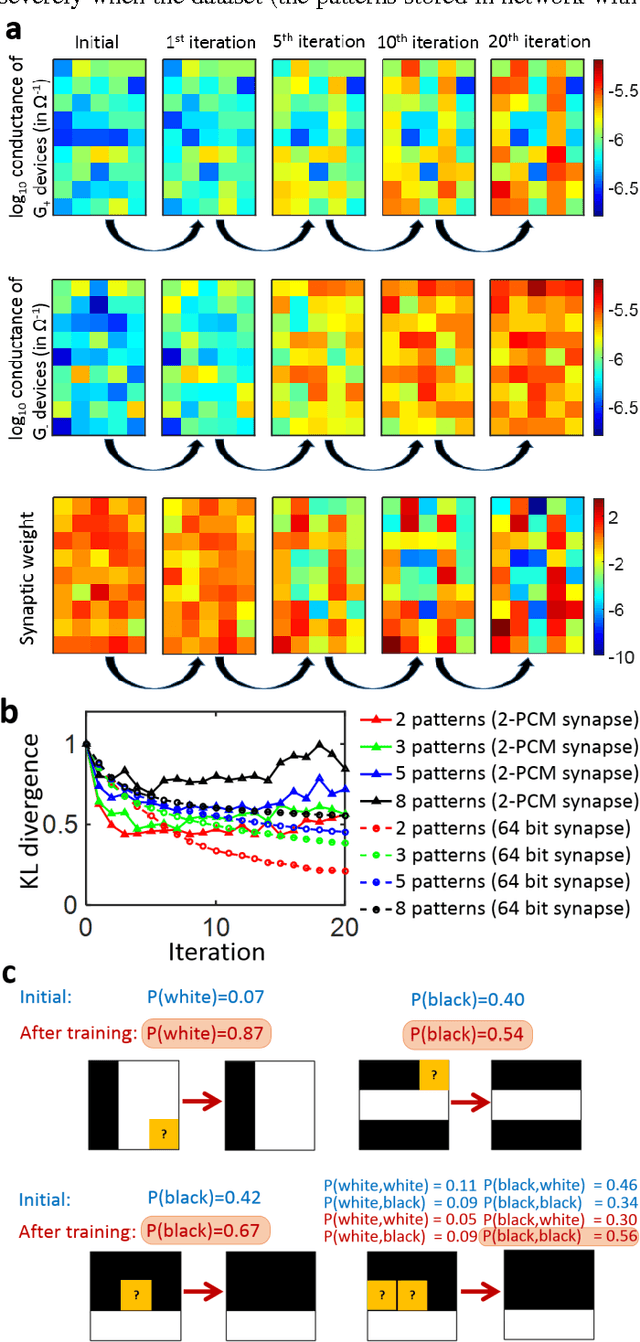

Abstract: Current large-scale implementations of deep learning and data mining require thousands of processors and massive amounts of off-chip memory, and consume gigajoules of energy. Emerging memory technologies such as nanoscale two-terminal resistive switching memory devices offer a compact, scalable, and low-power alternative that permits on-chip co-located processing and memory in a fine-grained distributed parallel architecture. Here we report the first use of resistive switching memory devices for implementing and training a Restricted Boltzmann Machine (RBM), a generative probabilistic graphical model that is a key component for unsupervised learning in deep networks. We experimentally demonstrate a 45-synapse RBM realized with 90 resistive switching phase change memory (PCM) elements trained with a bio-inspired variant of the Contrastive Divergence (CD) algorithm, implementing Hebbian and anti-Hebbian weight updates. The resistive PCM devices show a two-fold to ten-fold reduction in error rate on a missing-pixel pattern completion task after training over 30 epochs, compared to the untrained case. Measured programming energy consumption is 6.1 nJ per epoch with the resistive switching PCM devices, roughly 150 times lower than conventional processor-memory systems. We analyze and discuss the dependence of learning performance on cycle-to-cycle variations as well as on the number of gradual levels in the PCM analog memory devices.
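The Hebbian and anti-Hebbian updates mentioned in the abstract can be illustrated with a minimal software sketch of one-step Contrastive Divergence (CD-1). This is not the authors' bio-inspired variant or their hardware scheme. It makes several assumptions: a 9 x 5 visible/hidden split chosen only because it gives 45 synapses, ideal floating-point weights instead of PCM conductances, no bias terms, and a learning rate picked for illustration. All function and constant names are hypothetical.

```python
import math
import random

# Assumed layer sizes: 9 * 5 = 45 synapses (the split is not stated in the abstract).
N_VISIBLE, N_HIDDEN = 9, 5


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sample_hidden(v, w, rng):
    """Sample binary hidden units given the visible units."""
    probs = [sigmoid(sum(v[i] * w[i][j] for i in range(N_VISIBLE)))
             for j in range(N_HIDDEN)]
    return [1 if rng.random() < p else 0 for p in probs]


def sample_visible(h, w, rng):
    """Sample binary visible units given the hidden units."""
    probs = [sigmoid(sum(h[j] * w[i][j] for j in range(N_HIDDEN)))
             for i in range(N_VISIBLE)]
    return [1 if rng.random() < p else 0 for p in probs]


def cd1_step(v0, w, rng, lr=0.1):
    """Apply one CD-1 update to w in place and return the reconstruction."""
    h0 = sample_hidden(v0, w, rng)   # data phase
    v1 = sample_visible(h0, w, rng)  # reconstruction
    h1 = sample_hidden(v1, w, rng)   # reconstruction phase
    for i in range(N_VISIBLE):
        for j in range(N_HIDDEN):
            hebbian = v0[i] * h0[j]        # strengthens co-active data pairs
            anti_hebbian = v1[i] * h1[j]   # weakens co-active reconstruction pairs
            w[i][j] += lr * (hebbian - anti_hebbian)
    return v1


# Usage: train on a single 9-pixel pattern for 30 epochs.
rng = random.Random(0)
weights = [[0.0] * N_HIDDEN for _ in range(N_VISIBLE)]
pattern = [1, 0, 1, 0, 1, 0, 1, 0, 1]
for _ in range(30):
    cd1_step(pattern, weights, rng)
```

In a device implementation each weight change would be applied as a programming pulse with a limited number of gradual levels, which this idealized sketch does not model.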

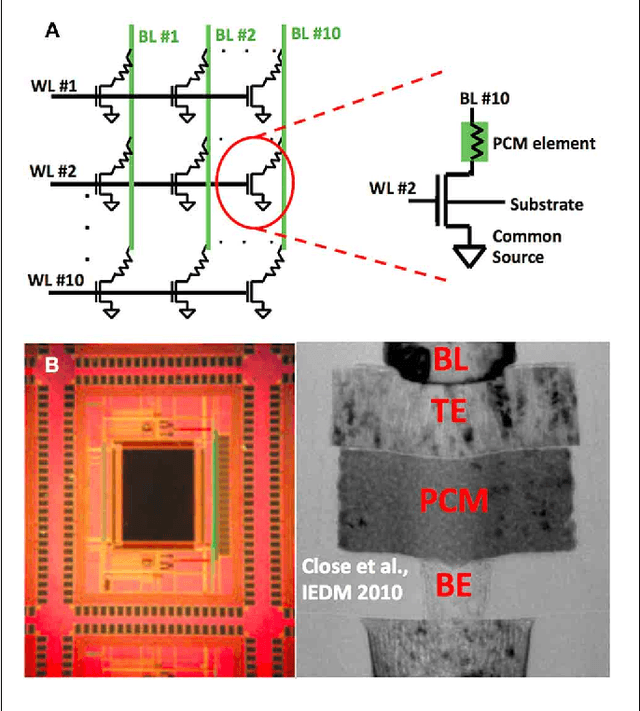

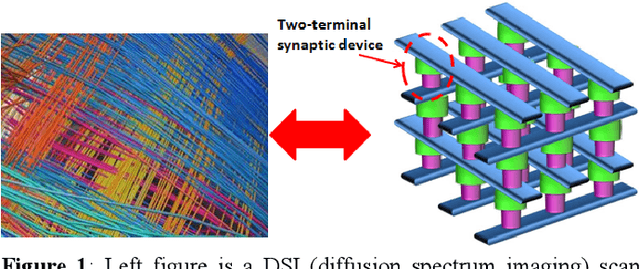

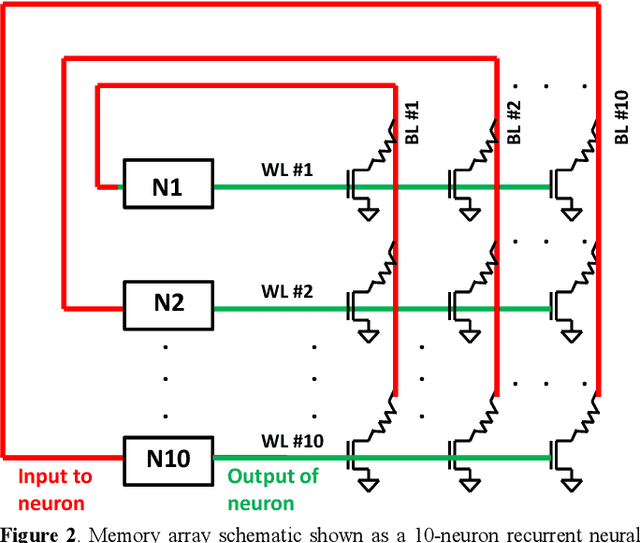

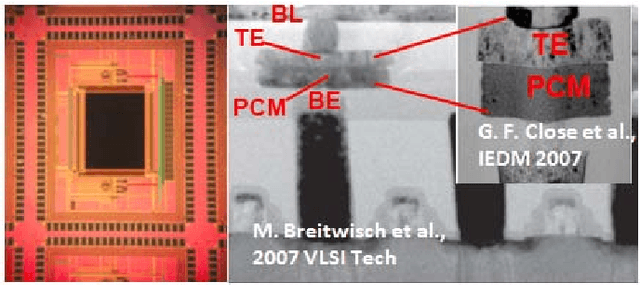

Brain-like associative learning using a nanoscale non-volatile phase change synaptic device array

Jul 14, 2014

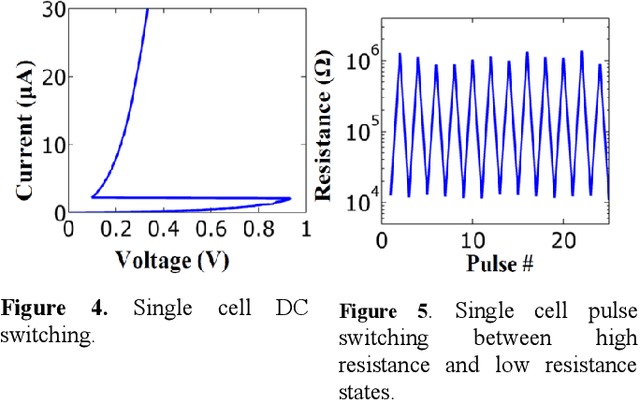

Abstract: Recent advances in neuroscience, together with nanoscale electronic device technology, have generated great interest in realizing brain-like computing hardware using emerging nanoscale memory devices as synaptic elements. Although experimental work has demonstrated the operation of nanoscale synaptic elements at the single-device level, network-level studies have been limited to simulations. In this work, we demonstrate experimentally array-level associative learning using phase change synaptic devices connected in a grid-like configuration similar to the organization of the biological brain. Implementing Hebbian learning with phase change memory cells, the synaptic grid was able to store presented patterns and recall missing patterns in an associative, brain-like fashion. We found that the system is robust to device variations, and that large variations in cell resistance states can be accommodated by increasing the number of training epochs. We illustrated the tradeoff between variation tolerance of the network and the overall energy consumption, and found that energy consumption is decreased significantly for lower variation tolerance.

* Original article can be found here: http://journal.frontiersin.org/Journal/10.3389/fnins.2014.00205/abstract
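The store-and-recall behavior described in the abstract can be sketched in software as gradual Hebbian learning on a fully connected grid. This is a simplified illustration, not the experimental procedure: the step size, saturation level, and firing threshold are assumed values, and `hebbian_train` and `recall` are hypothetical names.

```python
def hebbian_train(patterns, n, epochs, step=0.1, w_max=1.0):
    """Strengthen w[i][j] by one gradual step whenever units i and j are co-active."""
    w = [[0.0] * n for _ in range(n)]
    for _ in range(epochs):
        for p in patterns:
            for i in range(n):
                for j in range(n):
                    if i != j and p[i] and p[j]:
                        # Saturating increment, loosely analogous to a
                        # partial programming step on a synaptic device.
                        w[i][j] = min(w_max, w[i][j] + step)
    return w


def recall(partial, w, threshold):
    """Complete a partial pattern: a unit fires if it is already active
    or if its weighted input reaches the threshold."""
    n = len(partial)
    completed = []
    for i in range(n):
        drive = sum(w[i][j] * partial[j] for j in range(n))
        completed.append(1 if partial[i] or drive >= threshold else 0)
    return completed


# Usage: store one 10-unit pattern, then present it with one active unit missing.
stored = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
cue = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
weights = hebbian_train([stored], n=10, epochs=5)
completed = recall(cue, weights, threshold=0.75)
```

With these assumed values, a single epoch leaves the weights too small for the missing unit to cross the threshold, while five epochs are enough. That mirrors, in a very reduced form, the idea that additional training epochs let the network reach reliable recall.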

Experimental Demonstration of Array-level Learning with Phase Change Synaptic Devices

Jun 03, 2014

Abstract: The computational performance of the biological brain has long attracted significant interest and has inspired operating principles, algorithms, and architectures for computing and signal processing. In this work, we focus on hardware implementation of brain-like learning in a brain-inspired architecture. We demonstrate, in hardware, that 2-D crossbar arrays of phase change synaptic devices can achieve associative learning and perform pattern recognition. Device and array-level studies using an experimental 10x10 array of phase change synaptic devices have shown that pattern recognition is robust against synaptic resistance variations and that large variations can be tolerated by increasing the number of training iterations. Our measurements show that an increase in initial variation from 9% to 60% causes the required number of training iterations to increase from 1 to 11.

* IEDM 2013
