Sukhpal Singh Gill

Stock Market Price Prediction: A Hybrid LSTM and Sequential Self-Attention based Approach

Aug 07, 2023

Abstract:One of the most enticing research areas is the stock market, and projecting stock prices may help investors profit by making the best decisions at the correct time. Deep learning strategies have emerged as a critical technique in the field of the financial market. The stock market is influenced by two aspects. The first is geopolitical, social, and global events, on the basis of which price trends could be affected. The second focuses purely on historical price trends and seasonality, which allow us to forecast stock prices. In this paper, our aim is to focus on the second aspect and build a model that predicts future prices with minimal errors. In order to provide better prediction results of stock price, we propose a new model named Long Short-Term Memory (LSTM) with Sequential Self-Attention Mechanism (LSTM-SSAM). Finally, we conduct extensive experiments on three stock datasets: SBIN, HDFCBANK, and BANKBARODA. The experimental results prove the effectiveness and feasibility of the proposed model compared to existing models. The experimental findings demonstrate that the proposed model gives the best results on the root-mean-squared error (RMSE) and R-squared (R2) evaluation indicators.
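The two evaluation indicators named in this abstract, RMSE and R2, can be illustrated with a minimal sketch. This uses the standard textbook definitions and is not taken from the paper; the function names `rmse` and `r_squared` and the sample values are assumptions made for illustration only.

```python
import math


def rmse(actual, predicted):
    """Root-mean-squared error between two equal-length sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared_errors) / len(actual))


def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    if ss_tot == 0:
        raise ValueError("R2 is undefined when all actual values are equal")
    return 1 - ss_res / ss_tot


if __name__ == "__main__":
    # Hypothetical closing prices and forecasts, for illustration only.
    prices = [100.0, 102.0, 101.0, 105.0]
    forecasts = [99.0, 103.0, 101.5, 104.0]
    print("RMSE:", rmse(prices, forecasts))
    print("R2:", r_squared(prices, forecasts))
```

A lower RMSE and an R2 closer to 1 indicate a closer fit between forecast and observed prices.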

A Meta-learning based Stacked Regression Approach for Customer Lifetime Value Prediction

Aug 07, 2023

Abstract:Companies across the globe are keen on targeting potential high-value customers in an attempt to expand revenue, and this can be achieved only by understanding their customers better. Customer Lifetime Value (CLV) is the total monetary value of transactions/purchases made by a customer with the business over an intended period of time and is used as a means to estimate future customer interactions. CLV finds application in a number of distinct business domains such as Banking, Insurance, Online-entertainment, Gaming, and E-Commerce. The existing distribution-based models and basic recency, frequency, and monetary (RFM) based models face a limitation in terms of handling a wide variety of input features. Moreover, the more advanced deep learning approaches could be superfluous and add an undesirable element of complexity in certain application areas. We, therefore, propose a system that is effective and comprehensive yet simple and interpretable. With that in mind, we develop a meta-learning-based stacked regression model which combines the predictions from bagging and boosting models, each of which is found to perform well individually. Empirical tests have been carried out on an openly available Online Retail dataset to evaluate various models and show the efficacy of the proposed approach.

ChatGPT: Vision and Challenges

May 08, 2023

Abstract:Artificial intelligence (AI) and machine learning have changed the nature of scientific inquiry in recent years. Of these, the development of virtual assistants has accelerated greatly in the past few years, with ChatGPT becoming a prominent AI language model. In this study, we examine the foundations, vision, and research challenges of ChatGPT. This article delves into the background and development of the technology behind it, as well as its popular applications. Moreover, we discuss the advantages of bringing everything together through ChatGPT and Internet of Things (IoT). Further, we speculate on the future of ChatGPT by considering various possibilities for study and development, such as energy-efficiency, cybersecurity, enhancing its applicability to additional technologies (Robotics and Computer Vision), strengthening human-AI communications, and bridging the technological gap. Finally, we discuss the important ethics and current trends of ChatGPT.

HUNTER: AI based Holistic Resource Management for Sustainable Cloud Computing

Oct 28, 2021

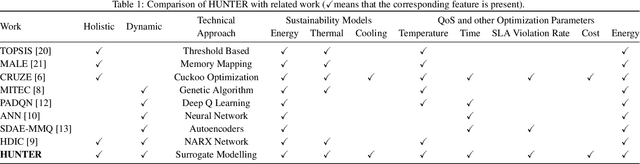

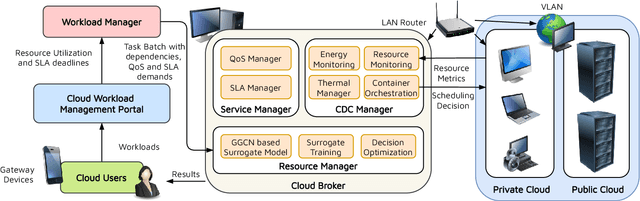

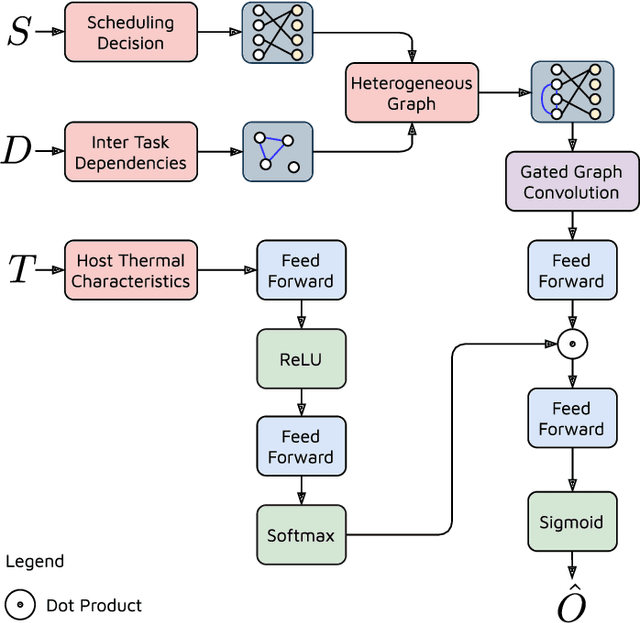

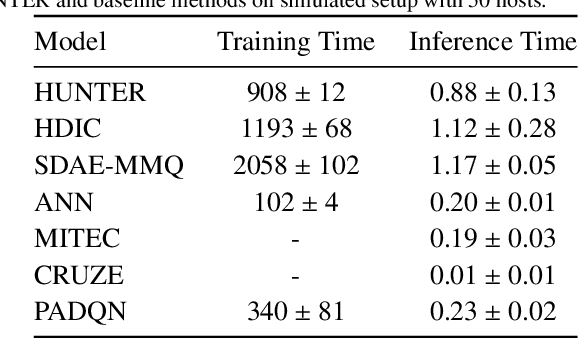

Abstract:The worldwide adoption of cloud data centers (CDCs) has given rise to the ubiquitous demand for hosting application services on the cloud. Further, contemporary data-intensive industries have seen a sharp upsurge in the resource requirements of modern applications. This has led to the provisioning of an increased number of cloud servers, giving rise to higher energy consumption and, consequently, sustainability concerns. Traditional heuristics and reinforcement learning based algorithms for energy-efficient cloud resource management address the scalability and adaptability related challenges to a limited extent. Existing work often fails to capture dependencies across thermal characteristics of hosts, resource consumption of tasks and the corresponding scheduling decisions. This leads to poor scalability and an increase in the compute resource requirements, particularly in environments with non-stationary resource demands. To address these limitations, we propose an artificial intelligence (AI) based holistic resource management technique for sustainable cloud computing called HUNTER. The proposed model formulates the goal of optimizing energy efficiency in data centers as a multi-objective scheduling problem, considering three important models: energy, thermal and cooling. HUNTER utilizes a Gated Graph Convolution Network as a surrogate model for approximating the Quality of Service (QoS) for a system state and generating optimal scheduling decisions. Experiments on simulated and physical cloud environments using the CloudSim toolkit and the COSCO framework show that HUNTER outperforms state-of-the-art baselines in terms of energy consumption, SLA violation, scheduling time, cost and temperature by up to 12, 35, 43, 54 and 3 percent respectively.

Quantum Artificial Intelligence for the Science of Climate Change

Jul 28, 2021

Abstract:Climate change has become one of the biggest global problems, increasingly compromising the Earth's habitability. Recent developments such as the extraordinary heat waves in California & Canada, and the devastating floods in Germany point to the role of climate change in the ever-increasing frequency of extreme weather. Numerical modelling of the weather and climate has seen tremendous improvements in the last five decades, yet stringent limitations remain to be overcome. Spatially and temporally localized forecasting is the need of the hour for effective adaptation measures towards minimizing the loss of life and property. Artificial Intelligence-based methods are demonstrating promising results in improving predictions, but are still limited by the availability of the hardware and software required to process the vast deluge of data at the scale of the planet Earth. Quantum computing is an emerging paradigm that has found potential applicability in several fields. In this opinion piece, we argue that new developments in Artificial Intelligence algorithms designed for quantum computers - also known as Quantum Artificial Intelligence (QAI) - may provide the key breakthroughs necessary to furthering the science of climate change. The resultant improvements in weather and climate forecasts are expected to cascade to numerous societal benefits.

Deep learning for improved global precipitation in numerical weather prediction systems

Jun 20, 2021

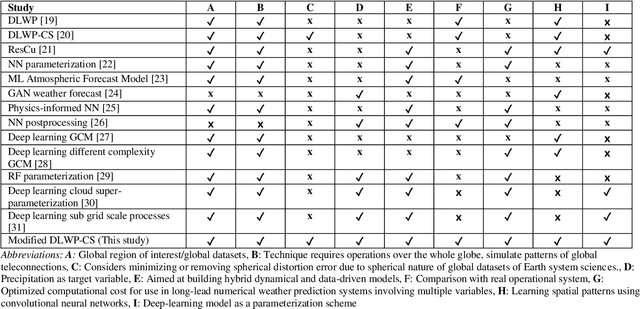

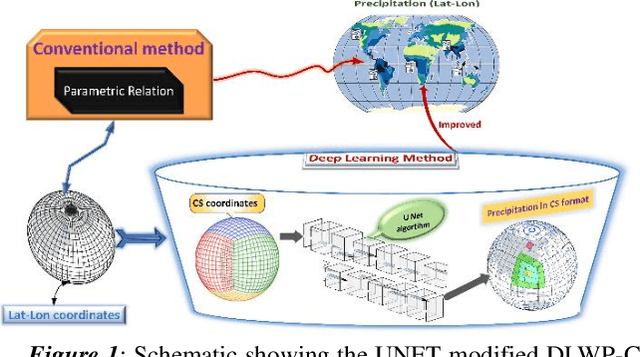

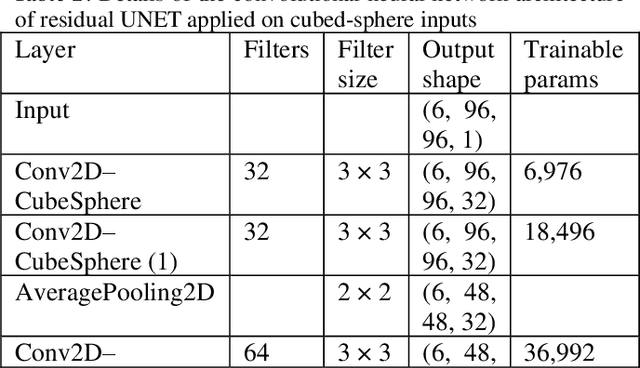

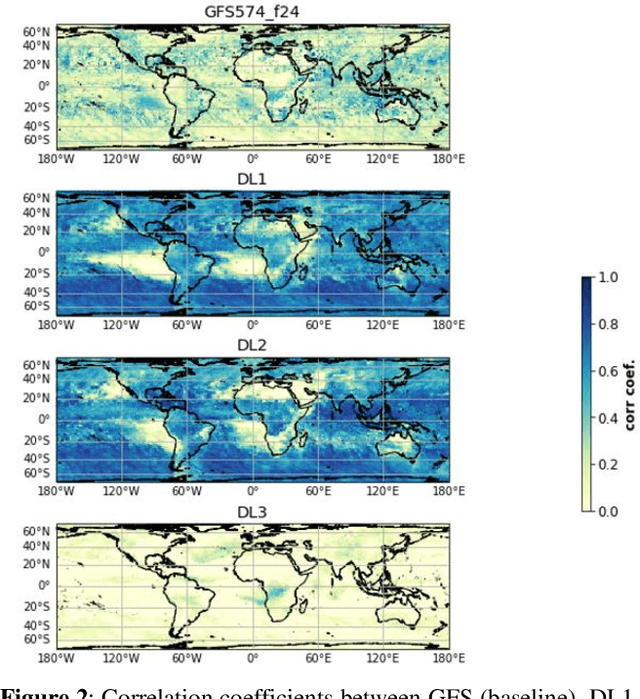

Abstract:The formation of precipitation in state-of-the-art weather and climate models is an important process. The understanding of its relationship with other variables can lead to endless benefits, particularly for the world's monsoon regions dependent on rainfall as a support for livelihood. Various factors play a crucial role in the formation of rainfall, and those physical processes are leading to significant biases in the operational weather forecasts. We use the UNET architecture of a deep convolutional neural network with residual learning as a proof of concept to learn global data-driven models of precipitation. The models are trained on reanalysis datasets projected on the cubed-sphere projection to minimize errors due to spherical distortion. The results are compared with the operational dynamical model used by the India Meteorological Department. The theoretical deep learning-based model shows a doubling of the grid-point as well as area-averaged skill, measured in Pearson correlation coefficients, relative to the operational system. This study is a proof-of-concept showing that residual learning-based UNET can unravel physical relationships to target precipitation, and those physical constraints can be used in the dynamical operational models towards improved precipitation forecasts. Our results pave the way for the development of online, hybrid models in the future.
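The skill measure mentioned in this abstract, the Pearson correlation coefficient, can be sketched as follows. This is the standard definition rather than the authors' evaluation code; the function name `pearson_correlation` and the sample values are assumptions made for illustration only.

```python
import math


def pearson_correlation(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("inputs must have equal length of at least 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of products of deviations from the means (unnormalized covariance).
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return covariance / (spread_x * spread_y)


if __name__ == "__main__":
    # Hypothetical observed and forecast rainfall at one grid point (mm/day).
    observed = [0.0, 2.5, 10.0, 4.0, 0.5]
    forecast = [0.2, 3.0, 8.0, 5.0, 0.0]
    print("Pearson r:", pearson_correlation(observed, forecast))
```

Values near 1 indicate that the forecast varies closely with the observations, values near 0 indicate little linear relationship, and values near -1 indicate an inverse relationship.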
