Rizos Sakellariou

Graph Structure Learning with Temporal Graph Information Bottleneck for Inductive Representation Learning

Aug 20, 2025

Abstract: Temporal graph learning is crucial for dynamic networks where nodes and edges evolve over time and new nodes continuously join the system. Inductive representation learning in such settings faces two major challenges: effectively representing unseen nodes and mitigating noisy or redundant graph information. We propose GTGIB, a versatile framework that integrates Graph Structure Learning (GSL) with Temporal Graph Information Bottleneck (TGIB). We design a novel two-step GSL-based structural enhancer to enrich and optimize node neighborhoods and demonstrate its effectiveness and efficiency through theoretical proofs and experiments. The TGIB refines the optimized graph by extending the information bottleneck principle to temporal graphs, regularizing both edges and features based on our derived tractable TGIB objective function via variational approximation, enabling stable and efficient optimization. GTGIB-based models are evaluated to predict links on four real-world datasets; they outperform existing methods in all datasets under the inductive setting, with significant and consistent improvement in the transductive setting.

Trajectory Encoding Temporal Graph Networks

Apr 15, 2025

Abstract: Temporal Graph Networks (TGNs) have demonstrated significant success in dynamic graph tasks such as link prediction and node classification. Both tasks arise in transductive settings, where the model predicts links among known nodes, and in inductive settings, where it generalises learned patterns to previously unseen nodes. Existing TGN designs face a dilemma under these dual scenarios. Anonymous TGNs, which rely solely on temporal and structural information, offer strong inductive generalisation but struggle to distinguish known nodes. In contrast, non-anonymous TGNs leverage node features to excel in transductive tasks yet fail to adapt to new nodes. To address this challenge, we propose Trajectory Encoding TGN (TETGN). Our approach introduces automatically expandable node identifiers (IDs) as learnable temporal positional features and performs message passing over these IDs to capture each node's historical context. By integrating this trajectory-aware module with a standard TGN using multi-head attention, TETGN effectively balances transductive accuracy with inductive generalisation. Experimental results on three real-world datasets show that TETGN significantly outperforms strong baselines on both link prediction and node classification tasks, demonstrating its ability to unify the advantages of anonymous and non-anonymous models for dynamic graph learning.
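The difference between the transductive and inductive settings can be illustrated with a minimal sketch. It assumes a temporal graph stored as `(source, destination, timestamp)` tuples and a chronological train/test cut. The function name `split_by_setting` and the toy events are hypothetical and are not taken from the TETGN paper.

```python
from typing import List, Set, Tuple

Edge = Tuple[int, int, float]  # (source, destination, timestamp)


def split_by_setting(edges: List[Edge], cutoff: float):
    """Split test edges into transductive and inductive subsets.

    Edges with timestamp <= cutoff form the training set. A test edge is
    transductive if both endpoints were seen in training, and inductive
    if at least one endpoint is new.
    """
    train = [e for e in edges if e[2] <= cutoff]
    test = [e for e in edges if e[2] > cutoff]
    # Every node that appears in at least one training edge.
    seen: Set[int] = {n for s, d, _ in train for n in (s, d)}
    transductive = [e for e in test if e[0] in seen and e[1] in seen]
    inductive = [e for e in test if e[0] not in seen or e[1] not in seen]
    return train, transductive, inductive


# Hypothetical toy interaction log: node 3 first appears after the cutoff.
events = [(0, 1, 1.0), (1, 2, 2.0), (0, 2, 3.0), (2, 3, 4.0)]
train, trans, induct = split_by_setting(events, cutoff=2.0)
print(trans)   # [(0, 2, 3.0)]
print(induct)  # [(2, 3, 4.0)]
```

Under this assumed protocol, a model is scored separately on the two test subsets, which is how a gap between transductive accuracy and inductive generalisation becomes visible.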

A Survey of Link Prediction in Temporal Networks

Feb 28, 2025

Abstract: Temporal networks have gained significant prominence in the past decade for modelling dynamic interactions within complex systems. A key challenge in this domain is Temporal Link Prediction (TLP), which aims to forecast future connections by analysing historical network structures across various applications including social network analysis. While existing surveys have addressed specific aspects of TLP, they typically lack a comprehensive framework that distinguishes between representation and inference methods. This survey bridges this gap by introducing a novel taxonomy that explicitly examines representation and inference from existing methods, providing a novel classification of approaches for TLP. We analyse how different representation techniques capture temporal and structural dynamics, examining their compatibility with various inference methods for both transductive and inductive prediction tasks. Our taxonomy not only clarifies the methodological landscape but also reveals promising unexplored combinations of existing techniques. This taxonomy provides a systematic foundation for emerging challenges in TLP, including model explainability and scalable architectures for complex temporal networks.
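As a minimal illustration of the TLP task and of the split between representation and inference, the sketch below builds an adjacency-set representation from historical edges and then scores unconnected node pairs by their number of common neighbours. This classical heuristic is chosen only for brevity and is not a method proposed by the survey. The function name `common_neighbour_scores` and the toy history are hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def common_neighbour_scores(history):
    """Score each unconnected node pair by its count of common neighbours.

    `history` is an iterable of (source, destination, timestamp) edges
    observed so far. Timestamps are ignored by this static heuristic.
    """
    # Representation step: undirected adjacency sets built from history.
    adj = defaultdict(set)
    for s, d, _ in history:
        adj[s].add(d)
        adj[d].add(s)
    # Inference step: a higher score suggests a more likely future link.
    scores = {}
    for u, v in combinations(sorted(adj), 2):
        if v not in adj[u]:
            scores[(u, v)] = len(adj[u] & adj[v])
    return scores


# Hypothetical history forming a 4-cycle: 0-1, 1-2, 2-3, 3-0.
history = [(0, 1, 1.0), (1, 2, 2.0), (0, 3, 3.0), (2, 3, 4.0)]
scores = common_neighbour_scores(history)
print(scores)  # {(0, 2): 2, (1, 3): 2}
```

Replacing the adjacency sets with a time-aware representation, or the counting rule with a learned scorer, changes one step without touching the other, which is the kind of combination the taxonomy is meant to expose.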

HUNTER: AI based Holistic Resource Management for Sustainable Cloud Computing

Oct 28, 2021

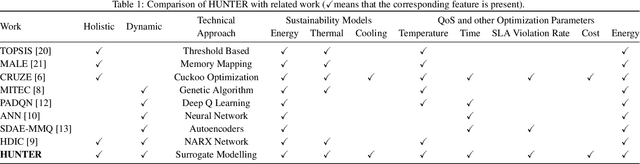

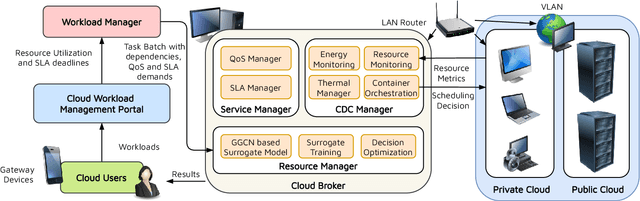

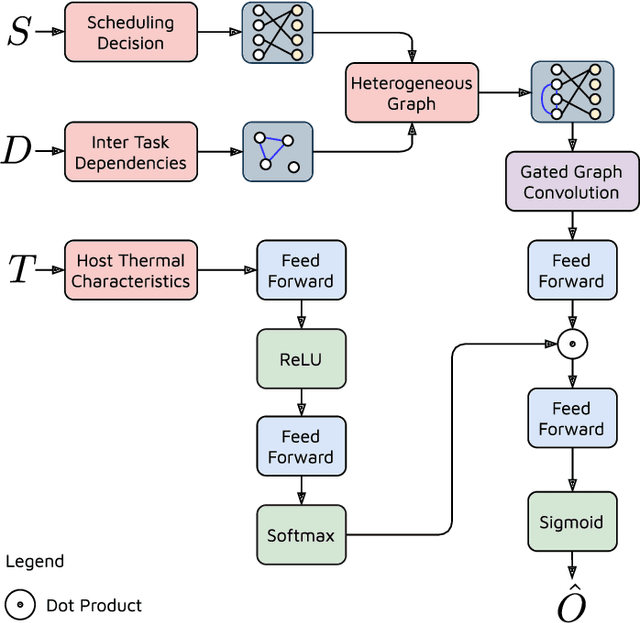

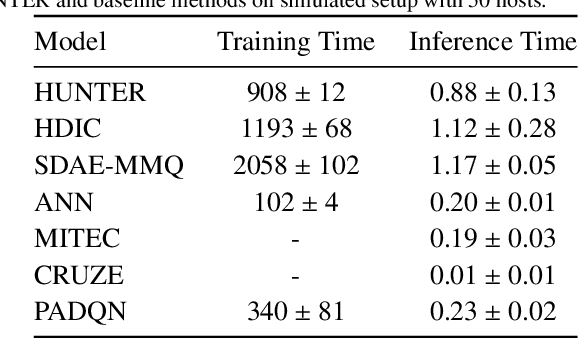

Abstract: The worldwide adoption of cloud data centers (CDCs) has given rise to the ubiquitous demand for hosting application services on the cloud. Further, contemporary data-intensive industries have seen a sharp upsurge in the resource requirements of modern applications. This has led to the provisioning of an increased number of cloud servers, giving rise to higher energy consumption and, consequently, sustainability concerns. Traditional heuristics and reinforcement learning based algorithms for energy-efficient cloud resource management address the scalability and adaptability related challenges to a limited extent. Existing work often fails to capture dependencies across thermal characteristics of hosts, resource consumption of tasks and the corresponding scheduling decisions. This leads to poor scalability and an increase in the compute resource requirements, particularly in environments with non-stationary resource demands. To address these limitations, we propose an artificial intelligence (AI) based holistic resource management technique for sustainable cloud computing called HUNTER. The proposed model formulates the goal of optimizing energy efficiency in data centers as a multi-objective scheduling problem, considering three important models: energy, thermal and cooling. HUNTER utilizes a Gated Graph Convolution Network as a surrogate model for approximating the Quality of Service (QoS) for a system state and generating optimal scheduling decisions. Experiments on simulated and physical cloud environments using the CloudSim toolkit and the COSCO framework show that HUNTER outperforms state-of-the-art baselines in terms of energy consumption, SLA violation, scheduling time, cost and temperature by up to 12, 35, 43, 54 and 3 percent respectively.
