Manmeet Singh

CloudSense: A Model for Cloud Type Identification using Machine Learning from Radar data

May 08, 2024

Abstract: Knowing the type of precipitating cloud is crucial for radar-based quantitative estimates of precipitation. We propose a novel model called CloudSense, which uses machine learning to accurately identify the type of precipitating clouds over the complex terrain of the Western Ghats (WGs) of India. CloudSense uses vertical reflectivity profiles collected during July-August 2018 from an X-band radar to classify clouds into four categories, namely stratiform, mixed stratiform-convective, convective, and shallow clouds. The machine learning (ML) model used in CloudSense was trained on a dataset balanced by the Synthetic Minority Oversampling Technique (SMOTE), with features selected based on physical characteristics relevant to the different cloud types. Among the various ML models evaluated, the Light Gradient Boosting Machine (LightGBM) demonstrates superior performance in classifying cloud types, with a balanced accuracy (BAC) of 0.8 and an F1-Score of 0.82. CloudSense results are also compared against conventional radar algorithms, and we find that CloudSense performs better. For 200 samples tested, the radar algorithm achieved a BAC of 0.69 and an F1-Score of 0.68, whereas CloudSense achieved a BAC and F1-Score of 0.77. Our results show that an ML-based approach can provide more accurate cloud detection and classification, which would be useful for improving precipitation estimates over the complex terrain of the WGs.
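The two scores quoted in this abstract can be illustrated with a minimal sketch. It assumes that BAC denotes balanced accuracy (the mean of per-class recall) and that the F1-Score is macro-averaged over the four cloud classes; the abstract does not state the averaging scheme, so that choice is an assumption. The confusion matrix below is made-up illustrative data, not a result from the paper.

```python
def per_class_recall_precision(cm):
    """Return (recalls, precisions) for a square confusion matrix.

    cm[i][j] is the number of samples whose true class is i and whose
    predicted class is j.
    """
    n = len(cm)
    recalls, precisions = [], []
    for k in range(n):
        true_pos = cm[k][k]
        actual = sum(cm[k])                         # samples truly in class k
        predicted = sum(cm[i][k] for i in range(n))  # samples predicted as class k
        recalls.append(true_pos / actual if actual else 0.0)
        precisions.append(true_pos / predicted if predicted else 0.0)
    return recalls, precisions


def balanced_accuracy(cm):
    """Mean of per-class recall, so rare classes weigh as much as common ones."""
    recalls, _ = per_class_recall_precision(cm)
    return sum(recalls) / len(recalls)


def macro_f1(cm):
    """Unweighted mean of per-class F1 (assumed averaging scheme)."""
    recalls, precisions = per_class_recall_precision(cm)
    f1_scores = [
        2 * p * r / (p + r) if (p + r) else 0.0
        for p, r in zip(precisions, recalls)
    ]
    return sum(f1_scores) / len(f1_scores)


# Hypothetical 4-class confusion matrix (rows: true class, columns: predicted),
# ordered as stratiform, mixed, convective, shallow. Illustrative numbers only.
toy_cm = [
    [40, 5, 3, 2],
    [6, 30, 4, 0],
    [2, 3, 45, 0],
    [1, 0, 1, 8],
]

if __name__ == "__main__":
    print("BAC:", round(balanced_accuracy(toy_cm), 3))
    print("macro F1:", round(macro_f1(toy_cm), 3))
```

Balanced accuracy is a natural companion to plain accuracy when classes are imbalanced, which is the same concern that motivates balancing the training set with SMOTE.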

Mind meets machine: Unravelling GPT-4's cognitive psychology

Mar 20, 2023

Abstract: Commonsense reasoning is a basic ingredient of human intelligence, enabling conclusions to be deduced from observations of the surroundings. Large language models (LLMs) are emerging as potent tools increasingly capable of performing human-level tasks. The recent development of GPT-4 and its demonstrated success in tasks that are complex for humans, such as medical exams and bar exams, has led to increased confidence that LLMs can become perfect instruments of intelligence. Although the GPT-4 paper has shown performance on some common sense reasoning tasks, a comprehensive assessment of GPT-4 on such tasks, particularly on existing well-established datasets, is missing. In this study, we evaluate GPT-4's performance on a set of common sense reasoning questions from the widely used CommonsenseQA dataset, along with tools from cognitive psychology. In doing so, we examine how GPT-4 processes and integrates common sense knowledge with contextual information, providing insight into the underlying cognitive processes that enable it to generate common sense responses. We show that GPT-4 exhibits a high level of accuracy in answering common sense questions, outperforming its predecessors, GPT-3 and GPT-3.5. The accuracy of GPT-4 on CommonsenseQA is 83 %, while the original study reported a human accuracy of 89 % on the same data. Although GPT-4 falls short of human performance, this is a substantial improvement over the 56.5 % achieved by the language model used in the original CommonsenseQA study. Our results strengthen the existing assessments of, and confidence in, GPT-4's common sense reasoning abilities, which have significant potential to revolutionize the field of AI by bridging the gap between human and machine reasoning.

DeepClouds.ai: Deep learning enabled computationally cheap direct numerical simulations

Aug 18, 2022

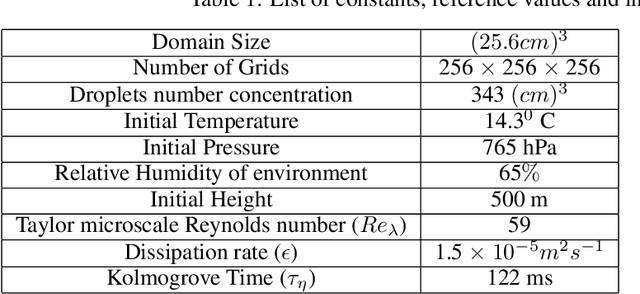

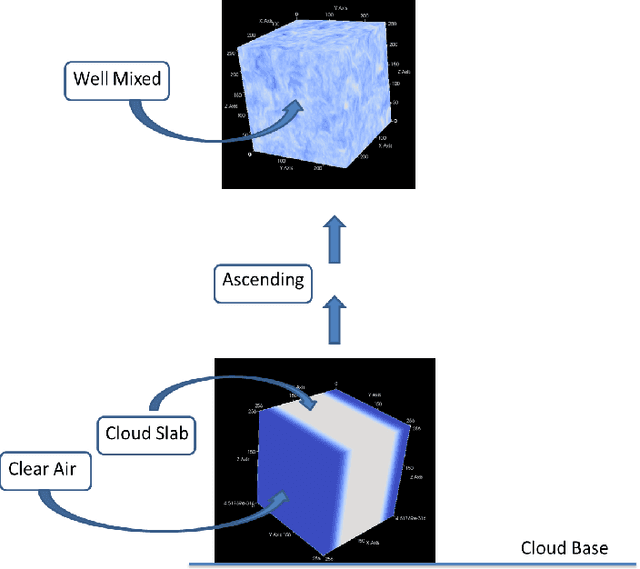

Abstract: Simulation of turbulent flows, especially at the edges of clouds in the atmosphere, is an inherently challenging task. To date, the best available computational method for such experiments is Direct Numerical Simulation (DNS). DNS involves solving the non-linear partial differential equations for fluid flows, known as the Navier-Stokes equations, on discretized grid boxes in three-dimensional space. It is a valuable paradigm that has guided numerical weather prediction models in computing rainfall formation. However, DNS cannot be performed for large domains of practical utility to the weather forecast community. Here, we introduce DeepClouds.ai, a 3D-UNET that simulates the outputs of a rising cloud DNS experiment. The problem of increasing the domain size in DNS is addressed by mapping an inner 3D cube to the complete 3D cube from the output of the DNS discretized grid simulation. Our approach effectively captures turbulent flow dynamics without having to solve the complex dynamical core. The baseline shows that the deep learning-based simulation is comparable to the partial differential equation-based model as measured by various score metrics. This framework can be used to further the science of turbulence and cloud flows by enabling simulations over large physical domains in the atmosphere. It would lead to cascading societal benefits through improved weather predictions via advanced parameterization schemes.

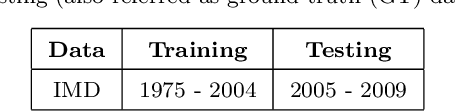

On the modern deep learning approaches for precipitation downscaling

Jul 02, 2022

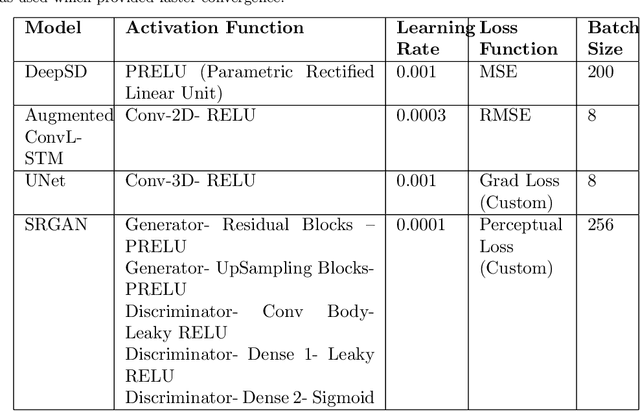

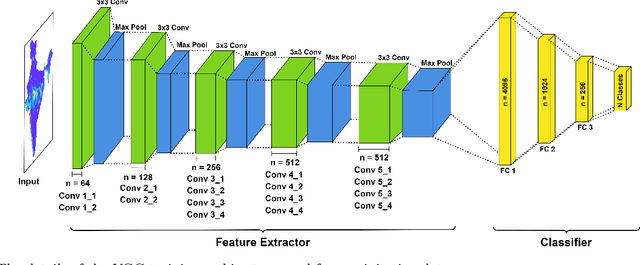

Abstract: Deep Learning (DL) based downscaling has recently become a popular tool in the earth sciences. Increasingly, different DL approaches are being adopted to downscale coarser precipitation data and generate more accurate and reliable estimates at local (~few km or even smaller) scales. Although several studies have adopted dynamical or statistical downscaling of precipitation, their accuracy is limited by the availability of ground truth. A key challenge in gauging the accuracy of such methods is comparing the downscaled data to point-scale observations, which are often unavailable at such small scales. In this work, we carry out DL-based downscaling to estimate local precipitation data from the India Meteorological Department (IMD), which was created by approximating the value from station location to a grid point. To test the efficacy of different DL approaches, we apply four different methods of downscaling and evaluate their performance. The considered approaches are (i) Deep Statistical Downscaling (DeepSD), (ii) augmented Convolutional Long Short Term Memory (ConvLSTM), (iii) a fully convolutional network (U-NET), and (iv) a Super-Resolution Generative Adversarial Network (SR-GAN). A custom VGG network, used in the SR-GAN, is developed in this work using precipitation data. The results indicate that SR-GAN is the best method for precipitation data downscaling. The downscaled data is validated against precipitation values at IMD stations. This DL method offers a promising alternative to statistical downscaling.

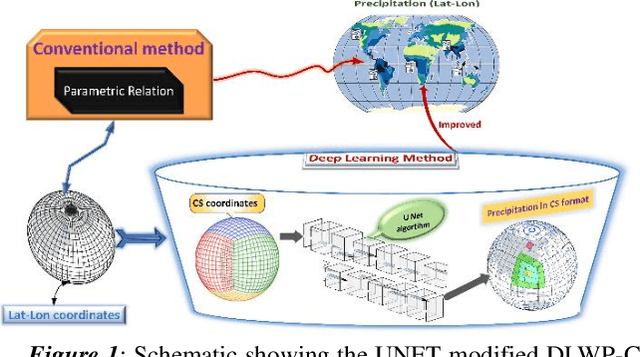

Short-range forecasts of global precipitation using deep learning-augmented numerical weather prediction

Jun 24, 2022

Abstract: Precipitation governs Earth's hydroclimate, and its daily spatiotemporal fluctuations have major socioeconomic effects. Advances in numerical weather prediction (NWP) have been measured by the improvement of forecasts for various physical fields such as temperature and pressure; however, large biases exist in precipitation prediction. We augment the output of the well-known NWP model CFSv2 with deep learning to create a hybrid model that improves short-range global precipitation at 1-, 2-, and 3-day lead times. To hybridise, we address the sphericity of the global data by using a modified DLWP-CS architecture, which transforms all the fields to a cubed-sphere projection. Dynamical model precipitation and surface temperature outputs are fed into the modified DLWP-CS (UNET) to forecast ground truth precipitation. While CFSv2's average bias is +5 to +7 mm/day over land, the multivariate deep learning model decreases it to within -1 to +1 mm/day. Hurricane Katrina in 2005, Hurricane Ivan in 2004, the China floods in 2010, the India floods in 2005, and Myanmar storm Nargis in 2008 are used to confirm the substantial enhancement in skill for the hybrid dynamical-deep learning model. CFSv2 typically shows a moderate to large bias in the spatial pattern and overestimates the precipitation at short-range time scales. The proposed deep learning augmented NWP model can address these biases and vastly improve the spatial pattern and magnitude of predicted precipitation. Deep learning enhanced CFSv2 reduces mean bias by 8x over important land regions at 1-day lead time compared to CFSv2. The spatio-temporal deep learning system opens pathways to further the precision and accuracy of global short-range precipitation forecasts.

GLOBUS: GLObal Building heights for Urban Studies

May 24, 2022

Abstract: Urban weather and climate studies continue to be important as extreme events cause economic loss and impact public health. Weather models seek to represent urban areas, but these representations are oversimplified due to limited data availability, especially of building information. This paper introduces GLObal Building heights for Urban Studies (GLOBUS), a novel Level of Detail-1 (LoD-1) building dataset derived from a Deep Neural Network (DNN). GLOBUS uses open-source datasets as predictors: Advanced Land Observation Satellite (ALOS) Digital Surface Model (DSM) normalized using Shuttle Radar Topography Mission (SRTM) Digital Elevation Model (DEM), Landscan population density, and building footprints. The building information from GLOBUS can be ingested in Numerical Weather Prediction (NWP) and urban energy-water balance models to study localized phenomena such as the Urban Heat Island (UHI) effect. GLOBUS has been trained and validated using the United States Geological Survey (USGS) 3DEP Light Detection and Ranging (LiDAR) data. We used data from 5 US cities for training, and the model was validated over 6 cities. Performance metrics are computed at a spatial resolution of 300 meters. The Root Mean Squared Error (RMSE) and Mean Absolute Percentage Error (MAPE) were 5.15 meters and 28.8 %, respectively. The standard deviation and histogram of building heights over a 300-meter grid are well represented using GLOBUS.
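For readers unfamiliar with the two error metrics reported above, the following is a minimal sketch of how RMSE and MAPE are conventionally computed. The function names and the sample heights are hypothetical and chosen for illustration; they are not taken from the GLOBUS code or data.

```python
import math


def rmse(predicted, reference):
    """Root Mean Squared Error, in the same units as the inputs (e.g. meters)."""
    squared_errors = [(p - r) ** 2 for p, r in zip(predicted, reference)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def mape(predicted, reference):
    """Mean Absolute Percentage Error, in percent.

    Every reference value must be non-zero, since each error is divided
    by the corresponding reference value.
    """
    relative_errors = [abs(p - r) / abs(r) for p, r in zip(predicted, reference)]
    return 100.0 * sum(relative_errors) / len(relative_errors)


# Made-up building heights in meters for a handful of grid cells.
reference_heights = [10.0, 20.0, 35.0, 8.0]
predicted_heights = [12.0, 18.0, 30.0, 9.0]

if __name__ == "__main__":
    print("RMSE (m):", round(rmse(predicted_heights, reference_heights), 2))
    print("MAPE (%):", round(mape(predicted_heights, reference_heights), 1))
```

RMSE penalizes large absolute errors, which tall buildings tend to dominate, while MAPE expresses error relative to each reference height, so the two together give a more complete picture than either alone.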

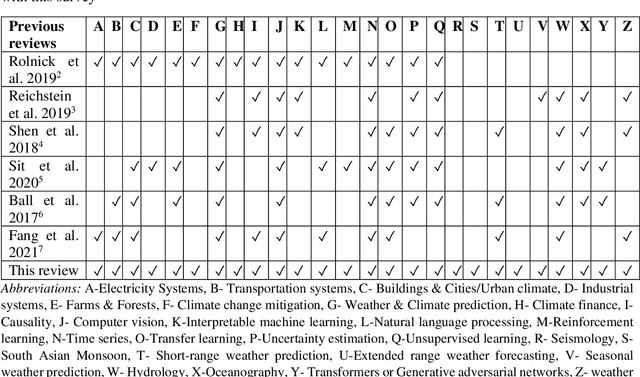

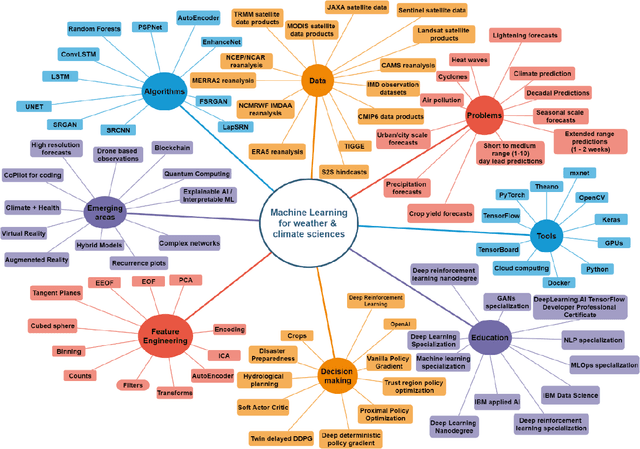

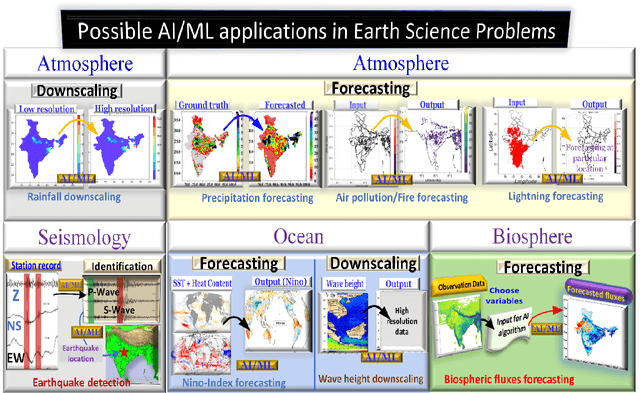

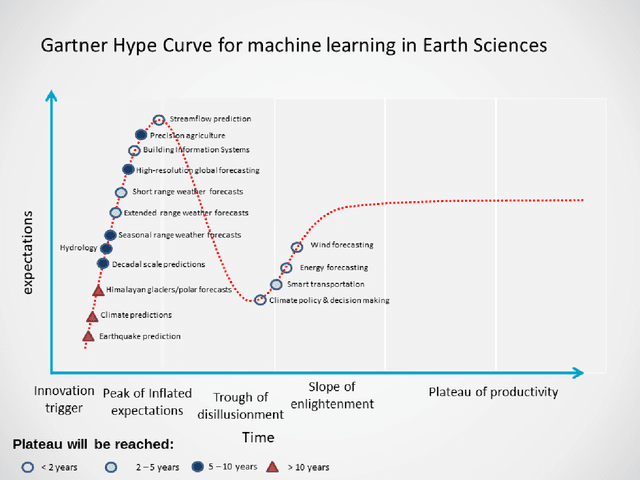

Machine learning for Earth System Science: A survey, status and future directions for South Asia

Dec 24, 2021

Abstract: This survey focuses on current problems in Earth system science where machine learning algorithms can be applied. It provides an overview of previous work, ongoing work at the Ministry of Earth Sciences, Government of India, and future applications of ML algorithms to some significant earth science problems. We provide a comparison of previous work with this survey, a mind map of multidimensional areas related to machine learning, and a Gartner hype cycle for machine learning in Earth system science (ESS). We mainly focus on the critical components of the Earth sciences, including the atmosphere, ocean, seismology, and biosphere, and cover AI/ML applications to statistical downscaling and forecasting problems.

Quantum Artificial Intelligence for the Science of Climate Change

Jul 28, 2021

Abstract: Climate change has become one of the biggest global problems, increasingly compromising the Earth's habitability. Recent developments such as the extraordinary heat waves in California and Canada and the devastating floods in Germany point to the role of climate change in the ever-increasing frequency of extreme weather. Numerical modelling of the weather and climate has seen tremendous improvements in the last five decades, yet stringent limitations remain to be overcome. Spatially and temporally localized forecasting is the need of the hour for effective adaptation measures that minimize the loss of life and property. Artificial Intelligence-based methods are demonstrating promising results in improving predictions, but are still limited by the availability of the hardware and software required to process the vast deluge of data at the scale of planet Earth. Quantum computing is an emerging paradigm that has found potential applicability in several fields. In this opinion piece, we argue that new developments in Artificial Intelligence algorithms designed for quantum computers (also known as Quantum Artificial Intelligence, QAI) may provide the key breakthroughs necessary to further the science of climate change. The resultant improvements in weather and climate forecasts are expected to cascade into numerous societal benefits.

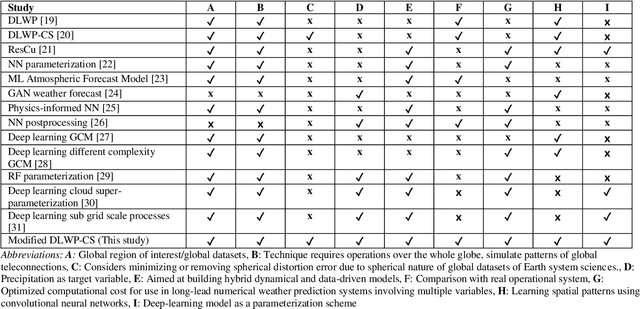

Deep learning for improved global precipitation in numerical weather prediction systems

Jun 20, 2021

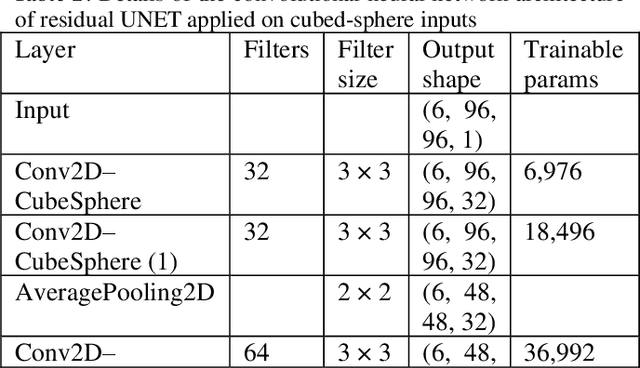

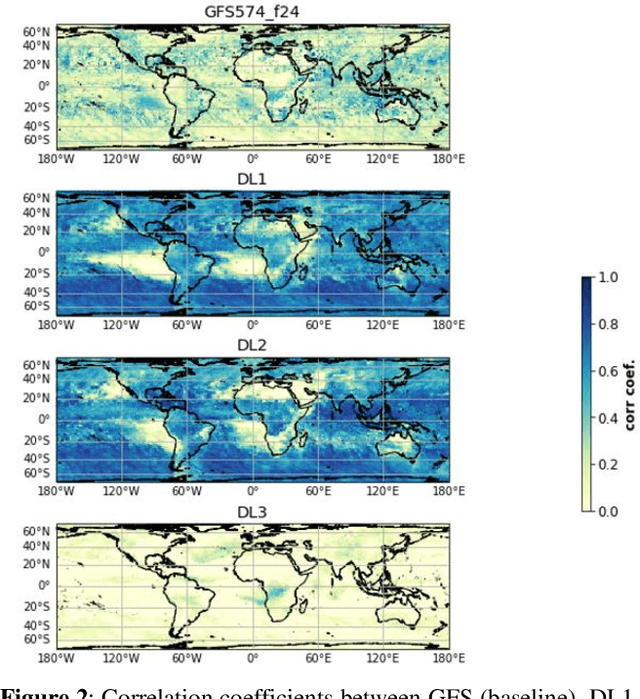

Abstract: The formation of precipitation is an important process in state-of-the-art weather and climate models. Understanding its relationship with other variables can bring substantial benefits, particularly for the world's monsoon regions, which depend on rainfall to support livelihoods. Various factors play a crucial role in the formation of rainfall, and these physical processes lead to significant biases in operational weather forecasts. We use the UNET architecture of a deep convolutional neural network with residual learning as a proof of concept to learn global data-driven models of precipitation. The models are trained on reanalysis datasets projected onto the cubed sphere to minimize errors due to spherical distortion. The results are compared with the operational dynamical model used by the India Meteorological Department. The theoretical deep learning-based model shows a doubling of both grid-point and area-averaged skill, measured by Pearson correlation coefficients, relative to the operational system. This study is a proof of concept showing that a residual learning-based UNET can unravel physical relationships to target precipitation, and that those physical constraints can be used in dynamical operational models towards improved precipitation forecasts. Our results pave the way for the development of online, hybrid models in the future.
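The skill measure named in this abstract, the Pearson correlation coefficient, can be sketched as follows. This is a generic textbook implementation, not the authors' evaluation code; the two short series are made-up values standing in for a forecast and an observed precipitation time series at a single grid point.

```python
import math


def pearson_correlation(forecast, observed):
    """Pearson correlation coefficient between two equal-length series.

    Returns a value in [-1, 1]. Both series must have non-zero variance,
    otherwise the denominator is zero.
    """
    n = len(forecast)
    mean_f = sum(forecast) / n
    mean_o = sum(observed) / n
    covariance = sum((f - mean_f) * (o - mean_o) for f, o in zip(forecast, observed))
    var_f = sum((f - mean_f) ** 2 for f in forecast)
    var_o = sum((o - mean_o) ** 2 for o in observed)
    return covariance / math.sqrt(var_f * var_o)


# Made-up daily precipitation values (mm/day) at one grid point.
observed_series = [0.0, 2.5, 10.0, 4.0, 0.5]
forecast_series = [0.5, 3.0, 7.0, 5.0, 1.0]

if __name__ == "__main__":
    print("r =", round(pearson_correlation(forecast_series, observed_series), 3))
```

Computing this coefficient per grid point over time gives a grid-point skill map, while applying it to spatially averaged series gives an area-averaged skill; which of these the study used where is described only at the level of detail given in the abstract.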

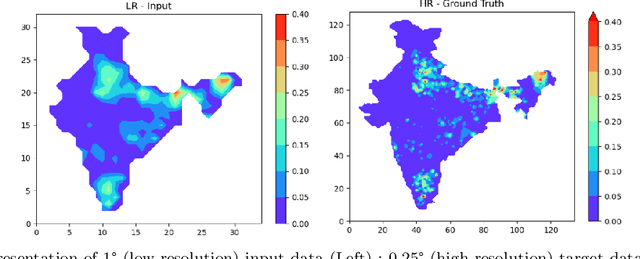

Deep-learning based down-scaling of summer monsoon rainfall data over Indian region

Dec 08, 2020

Abstract: Downscaling is necessary to generate high-resolution observation data for validating climate model forecasts or for operationally monitoring rainfall at the micro-regional level. Dynamical and statistical downscaling models are often used to obtain high-resolution gridded data over larger domains. As rainfall variability depends on complex spatio-temporal processes leading to non-linear or chaotic spatio-temporal variations, no single downscaling method can be considered efficient enough. For data with complex topographies, quasi-periodicities, and non-linearities, deep learning (DL) based methods provide an efficient solution for downscaling rainfall data for regional climate forecasting and real-time rainfall observation at high spatial resolutions. In this work, we applied three deep learning-based algorithms derived from super-resolution convolutional neural network (SRCNN) methods to precipitation data, in particular IMD and TRMM data, to produce 4x higher-resolution downscaled rainfall data during the summer monsoon season. Among the three algorithms employed here, namely SRCNN, stacked SRCNN, and DeepSD, the best spatial distribution of rainfall amplitude and the minimum root-mean-square error are produced by DeepSD-based downscaling. Hence, the DeepSD algorithm is advocated for future use. We found that spatial discontinuity in rainfall amplitude and intensity patterns is the main obstacle in the downscaling of precipitation. Furthermore, we applied these methods to model data postprocessing, in particular to ERA5 data. Downscaled ERA5 rainfall data show a much better distribution of spatial covariance and temporal variance when compared with observations.
