Sudip Das

Reflective Teacher: Semi-Supervised Multimodal 3D Object Detection in Bird's-Eye-View via Uncertainty Measure

Dec 05, 2024

Abstract:Applying pseudo labeling techniques has been found to be advantageous in semi-supervised 3D object detection (SSOD) in Bird's-Eye-View (BEV) for autonomous driving, particularly where labeled data is limited. In the literature, Exponential Moving Average (EMA) has been used to adjust the weights of the teacher network from those of the student network. However, this induces catastrophic forgetting in the teacher network. In this work, we address this issue by introducing a novel concept of Reflective Teacher, where the student is trained on both labeled and pseudo labeled data while its knowledge is progressively passed to the teacher through a regularizer to ensure retention of previous knowledge. Additionally, we propose Geometry Aware BEV Fusion (GA-BEVFusion) for efficient alignment of multi-modal BEV features, thus reducing the disparity between the two modalities, camera and LiDAR. This helps to map the precise geometric information embedded among LiDAR points reliably with the spatial priors for extraction of semantic information from camera images. Our experiments on the nuScenes and Waymo datasets demonstrate: 1) improved performance over state-of-the-art methods in both fully supervised and semi-supervised settings; 2) Reflective Teacher achieves equivalent performance with only 25% and 22% of labeled data for the nuScenes and Waymo datasets respectively, in contrast to other fully supervised methods that utilize the full labeled dataset.
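For readers unfamiliar with the EMA baseline that the abstract contrasts against, the following is a minimal sketch of a generic teacher update by exponential moving average. It does not implement the Reflective Teacher regularizer, whose form the abstract does not give. The function name `ema_update`, the `decay` value, and the toy weight dictionaries are all illustrative assumptions.

```python
def ema_update(teacher, student, decay=0.99):
    """Generic EMA teacher update (hypothetical helper, not the paper's method).

    Each teacher weight moves a small step towards the matching student weight:
        teacher <- decay * teacher + (1 - decay) * student
    """
    return {
        name: decay * teacher[name] + (1.0 - decay) * student[name]
        for name in teacher
    }


# Toy weights standing in for real network parameters (assumed values).
teacher = {"w": 1.0, "b": 0.0}
student = {"w": 0.0, "b": 1.0}

# One update step with an exaggerated step size so the change is visible.
teacher = ema_update(teacher, student, decay=0.9)
print(teacher)
```

With a decay close to 1 the teacher changes slowly. Because the old teacher weights are repeatedly blended with the student's, earlier knowledge in the teacher can fade over many steps, which is the forgetting issue the abstract raises.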

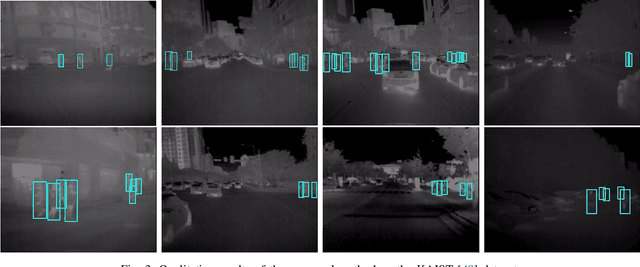

Revisiting Modality Imbalance In Multimodal Pedestrian Detection

Feb 24, 2023

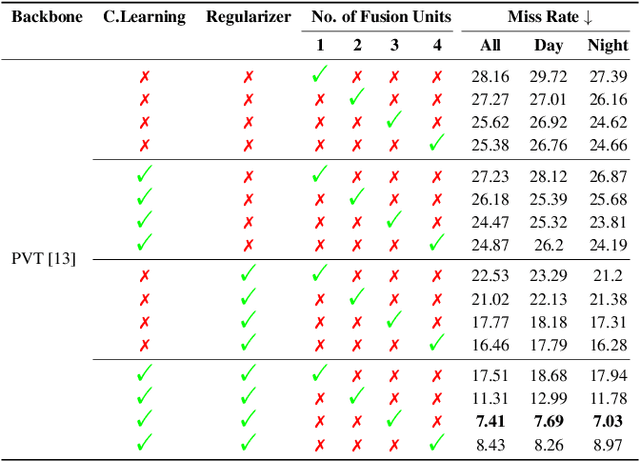

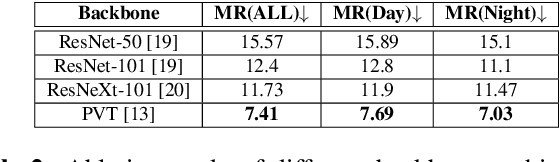

Abstract:Multimodal learning, particularly for pedestrian detection, has recently received emphasis due to its capability to function equally well in several critical autonomous driving scenarios such as low-light, night-time, and adverse weather conditions. However, in most cases, the training distribution largely emphasizes the contribution of one specific input, which makes the network biased towards one modality. Hence, the generalization of such models becomes a significant problem when the input modality that was non-dominant during training contributes more at inference time. Here, we introduce a novel training setup with a regularizer in the multimodal architecture to resolve the problem of this disparity between the modalities. Specifically, our regularizer term helps to make the feature fusion method more robust by treating both feature extractors as equally important during training to extract the multimodal distribution, which is referred to as removing the imbalance problem. Furthermore, our concept of decoupling the output stream helps the detection task by mutually sharing the spatially sensitive information. Extensive experiments with the proposed method on the KAIST and UTokyo datasets show improvement over the respective state-of-the-art performance.

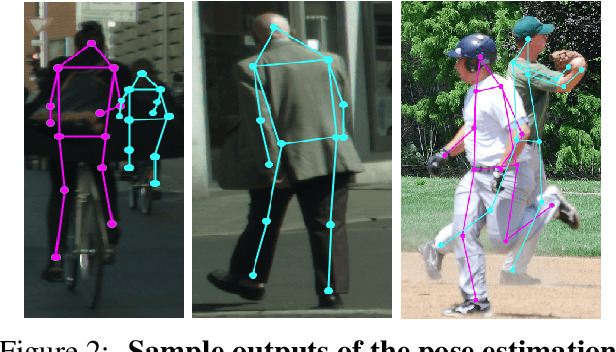

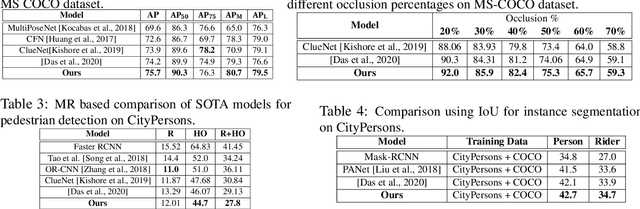

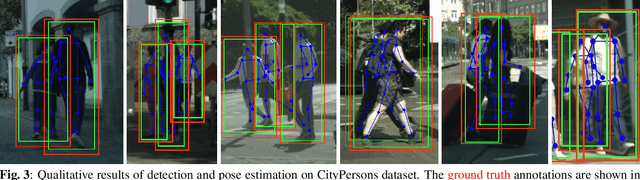

Deep Multi-Task Networks For Occluded Pedestrian Pose Estimation

Jun 15, 2022

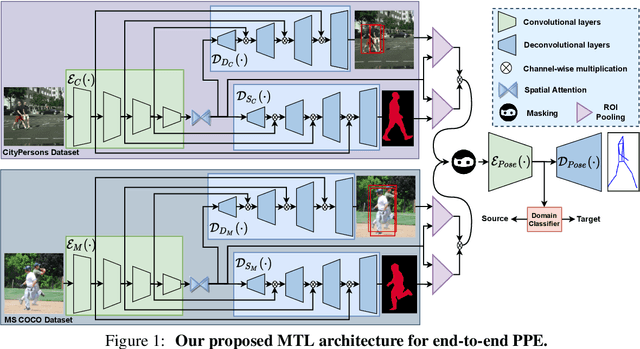

Abstract:Most of the existing works on pedestrian pose estimation do not consider estimating the pose of occluded pedestrians, as the annotations of the occluded parts are not available in relevant automotive datasets. For example, CityPersons, a well-known dataset for pedestrian detection in automotive scenes, does not provide pose annotations, whereas MS-COCO, a non-automotive dataset, contains human pose annotations. In this work, we propose a multi-task framework to extract pedestrian features through detection and instance segmentation tasks performed separately on these two distributions. Thereafter, an encoder learns pose-specific features using an unsupervised instance-level domain adaptation method for the pedestrian instances from both distributions. The proposed framework has improved state-of-the-art performances of pose estimation, pedestrian detection, and instance segmentation.

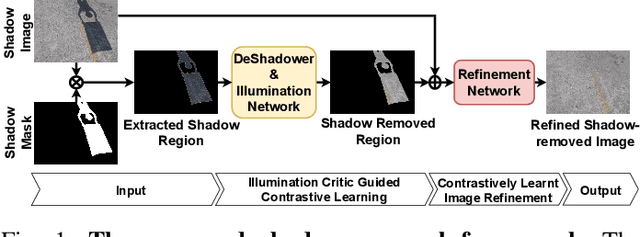

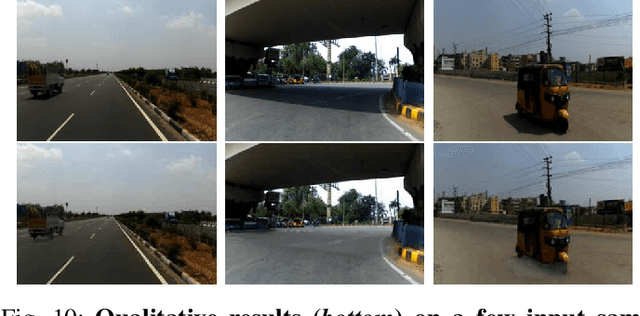

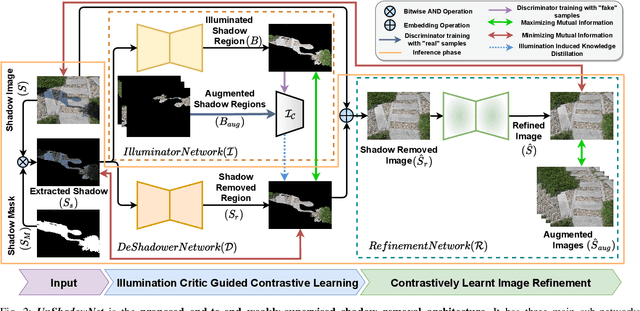

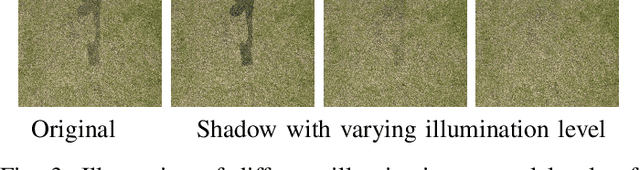

UnShadowNet: Illumination Critic Guided Contrastive Learning For Shadow Removal

Mar 29, 2022

Abstract:Shadows are frequently encountered natural phenomena that significantly hinder the performance of computer vision perception systems in practical settings, e.g., autonomous driving. A solution to this would be to eliminate shadow regions from the images before they are processed by the perception system. Yet, training such a solution requires pairs of aligned shadowed and non-shadowed images, which are difficult to obtain. We introduce a novel weakly supervised shadow removal framework, UnShadowNet, trained using contrastive learning. It comprises a DeShadower network responsible for removal of the extracted shadow under the guidance of an Illumination network, which is trained adversarially by the illumination critic, and a Refinement network to further remove artifacts. We show that UnShadowNet can also be easily extended to a fully-supervised setup to exploit the ground-truth when available. UnShadowNet outperforms existing state-of-the-art approaches on three publicly available shadow datasets (ISTD, adjusted ISTD, SRD) in both the weakly and fully supervised setups.

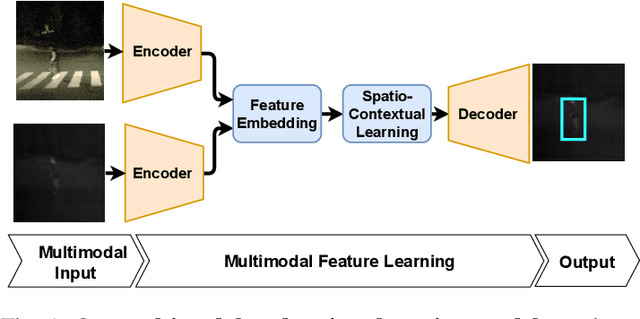

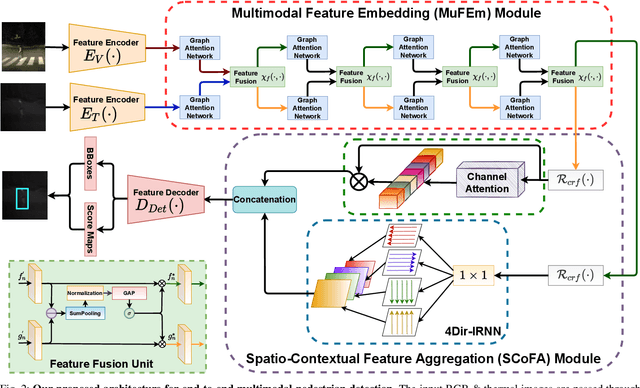

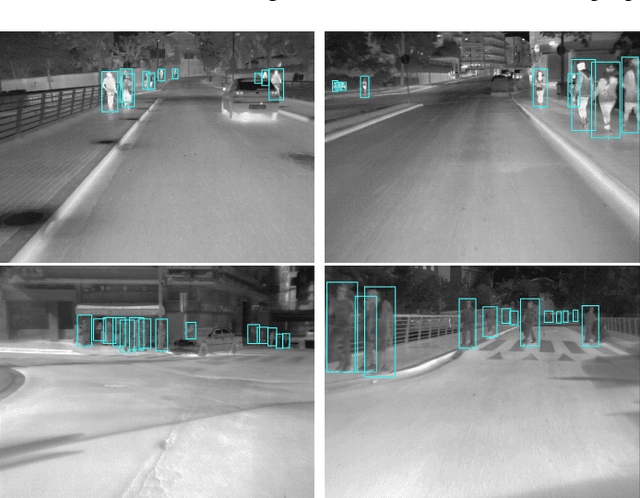

Spatio-Contextual Deep Network Based Multimodal Pedestrian Detection For Autonomous Driving

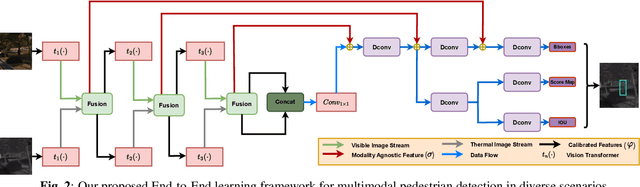

May 26, 2021

Abstract:Pedestrian Detection is the most critical module of an Autonomous Driving system. Although a camera is commonly used for this purpose, its quality degrades severely in low-light night time driving scenarios. On the other hand, the quality of a thermal camera image remains unaffected in similar conditions. This paper proposes an end-to-end multimodal fusion model for pedestrian detection using RGB and thermal images. Its novel spatio-contextual deep network architecture is capable of exploiting the multimodal input efficiently. It consists of two distinct deformable ResNeXt-50 encoders for feature extraction from the two modalities. Fusion of these two encoded features takes place inside a multimodal feature embedding module (MuFEm) consisting of several groups of a pair of Graph Attention Network and a feature fusion unit. The output of the last feature fusion unit of MuFEm is subsequently passed to two CRFs for their spatial refinement. Further enhancement of the features is achieved by applying channel-wise attention and extraction of contextual information with the help of four RNNs traversing in four different directions. Finally, these feature maps are used by a single-stage decoder to generate the bounding box of each pedestrian and the score map. We have performed extensive experiments of the proposed framework on three publicly available multimodal pedestrian detection benchmark datasets, namely KAIST, CVC-14, and UTokyo. The results on each of them improved the respective state-of-the-art performance. A short video giving an overview of this work along with its qualitative results can be seen at https://youtu.be/FDJdSifuuCs.
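The abstract mentions channel-wise attention without describing its form. As a rough illustration of the general idea only, here is a squeeze-and-excitation style gate in NumPy. This swapped-in formulation, the function name `channel_attention`, the weight shapes, and the random inputs are assumptions, not details taken from the paper.

```python
import numpy as np


def channel_attention(features, w1, w2):
    """Squeeze-and-excitation style channel gating (illustrative assumption).

    features: array of shape (C, H, W)
    w1:       array of shape (C // r, C), bottleneck projection
    w2:       array of shape (C, C // r), expansion back to C channels
    """
    # Squeeze: global average pool each channel to one scalar.
    squeezed = features.mean(axis=(1, 2))            # shape (C,)
    # Excite: small two-layer MLP with ReLU, then a sigmoid gate per channel.
    hidden = np.maximum(w1 @ squeezed, 0.0)          # shape (C // r,)
    gates = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))     # shape (C,), values in (0, 1)
    # Rescale every channel of the feature map by its gate.
    return features * gates[:, None, None]


# Random toy inputs: 8 channels, 4x4 spatial map, reduction ratio r = 4.
rng = np.random.default_rng(0)
features = rng.standard_normal((8, 4, 4))
w1 = rng.standard_normal((2, 8))
w2 = rng.standard_normal((8, 2))

out = channel_attention(features, w1, w2)
print(out.shape)
```

Each gate lies strictly between 0 and 1, so the operation can only attenuate channels relative to one another; in a trained network the weights `w1` and `w2` would be learned rather than sampled.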

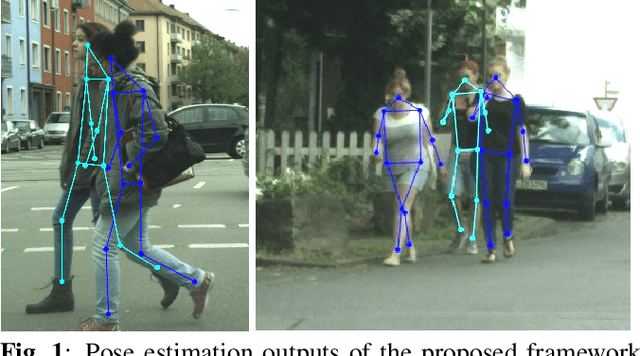

An End-to-End Framework for Unsupervised Pose Estimation of Occluded Pedestrians

Feb 15, 2020

Abstract:Pose estimation in the wild is a challenging problem, particularly in situations of (i) occlusions of varying degrees and (ii) crowded outdoor scenes. Most of the existing studies of pose estimation did not report the performance in similar situations. Moreover, pose annotations for occluded parts of human figures have not been provided in any of the relevant standard datasets, which in turn creates further difficulties for studies of pose estimation of the entire figure of occluded humans. Well-known pedestrian detection datasets such as CityPersons contain samples of outdoor scenes but do not include pose annotations. Here, we propose a novel multi-task framework for end-to-end training towards full pose estimation of pedestrians, including in situations of any kind of occlusion. To tackle this problem when training the network, we make use of a pose estimation dataset, MS-COCO, and employ unsupervised adversarial instance-level domain adaptation for estimating the entire pose of occluded pedestrians. The experimental studies show that the proposed framework outperforms the SOTA results for pose estimation, instance segmentation and pedestrian detection in cases of heavy occlusions (HO) and reasonable + heavy occlusions (R + HO) on the two benchmark datasets.

Scale-Invariant Multi-Oriented Text Detection in Wild Scene Images

Feb 15, 2020

Abstract:Automatic detection of scene texts in the wild is a challenging problem, particularly due to the difficulties in handling (i) occlusions of varying percentages, (ii) widely different scales and orientations, (iii) severe degradations in the image quality, etc. In this article, we propose a fully convolutional neural network architecture consisting of a novel Feature Representation Block (FRB) capable of efficient abstraction of information. The proposed network has been trained using curriculum learning with respect to difficulties in image samples and gradual pixel-wise blurring. It is capable of detecting texts of different scales and orientations affected by blurring from multiple possible sources, non-uniform illumination, as well as partial occlusions of varying percentages. Text detection performance of the proposed framework on various benchmark sample databases including ICDAR 2015, ICDAR 2017 MLT, COCO-Text and MSRA-TD500 improves the respective state-of-the-art results significantly. Source code of the proposed architecture will be made available on GitHub.
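The abstract does not specify how the blurring is scheduled during curriculum learning. Purely as an illustration, one possible reading is a blur strength that grows linearly over training so that early epochs see clean images and later epochs see harder, blurrier ones. The function `blur_sigma`, the linear ramp, and the `max_sigma` value below are hypothetical choices, not the authors' schedule.

```python
def blur_sigma(epoch, total_epochs, max_sigma=3.0):
    """Hypothetical curriculum: blur strength rises linearly from 0 to max_sigma.

    epoch is zero-based; the returned value could be used as the standard
    deviation of a Gaussian blur applied to training images in that epoch.
    """
    if total_epochs <= 1:
        return max_sigma
    # Fraction of training completed, clamped to [0, 1].
    progress = min(max(epoch / (total_epochs - 1), 0.0), 1.0)
    return max_sigma * progress


# Blur strength for each epoch of a 10-epoch run (easy to hard).
schedule = [blur_sigma(e, 10) for e in range(10)]
print(schedule)
```

A linear ramp is only the simplest option; step-wise or sample-difficulty-based schedules would fit the same description.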

Seek and You Will Find: A New Optimized Framework for Efficient Detection of Pedestrian

Dec 21, 2019

Abstract:Studies of object detection and localization, particularly pedestrian detection, have received considerable attention in recent times due to their several prospective applications such as surveillance, driving assistance, autonomous cars, etc. Also, a significant trend in the latest research studies in related problem areas is the use of sophisticated Deep Learning based approaches to improve the benchmark performance on various standard datasets. A trade-off between the speed (number of video frames processed per second) and detection accuracy has often been reported in the existing literature. In this article, we present a new but simple deep learning based strategy for pedestrian detection that improves this trade-off. Since training of similar models using publicly available sample datasets failed to improve the detection performance to any significant extent, particularly for the instances of pedestrians of smaller sizes, we have developed a new sample dataset consisting of more than 80K annotated pedestrian figures in videos recorded under varying traffic conditions. Performance of the proposed model has been obtained on the test samples of the new dataset and two other existing datasets, namely Caltech Pedestrian Dataset (CPD) and CityPerson Dataset (CD). Our proposed system shows nearly 16% improvement over the existing state-of-the-art result.
