Sudhakar Sah

CompressNAS : A Fast and Efficient Technique for Model Compression using Decomposition

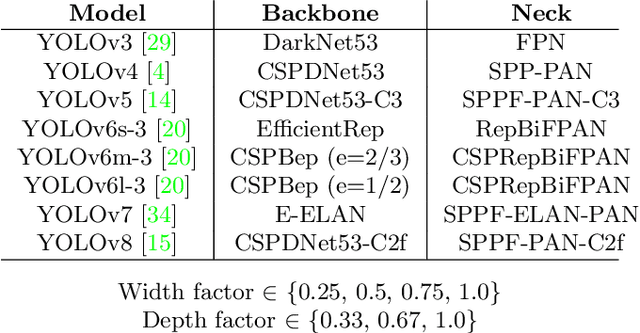

Nov 12, 2025Abstract:Deep Convolutional Neural Networks (CNNs) are increasingly difficult to deploy on microcontrollers (MCUs) and lightweight NPUs (Neural Processing Units) due to their growing size and compute demands. Low-rank tensor decomposition, such as Tucker factorization, is a promising way to reduce parameters and operations with reasonable accuracy loss. However, existing approaches select ranks locally and often ignore global trade-offs between compression and accuracy. We introduce CompressNAS, a MicroNAS-inspired framework that treats rank selection as a global search problem. CompressNAS employs a fast accuracy estimator to evaluate candidate decompositions, enabling efficient yet exhaustive rank exploration under memory and accuracy constraints. In ImageNet, CompressNAS compresses ResNet-18 by 8x with less than 4% accuracy drop; on COCO, we achieve 2x compression of YOLOv5s without any accuracy drop and 2x compression of YOLOv5n with a 2.5% drop. Finally, we present a new family of compressed models, STResNet, with competitive performance compared to other efficient models.

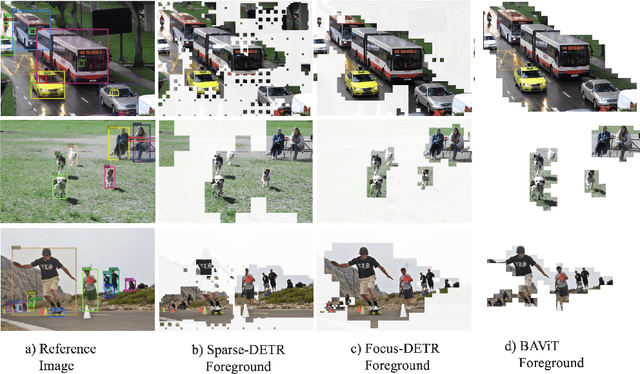

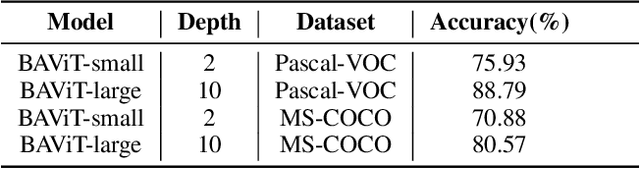

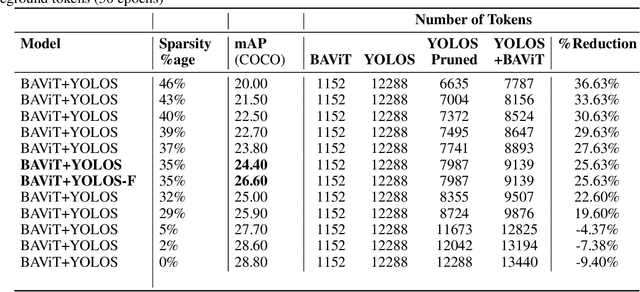

Token Pruning using a Lightweight Background Aware Vision Transformer

Oct 12, 2024

Abstract:High runtime memory and high latency puts significant constraint on Vision Transformer training and inference, especially on edge devices. Token pruning reduces the number of input tokens to the ViT based on importance criteria of each token. We present a Background Aware Vision Transformer (BAViT) model, a pre-processing block to object detection models like DETR/YOLOS aimed to reduce runtime memory and increase throughput by using a novel approach to identify background tokens in the image. The background tokens can be pruned completely or partially before feeding to a ViT based object detector. We use the semantic information provided by segmentation map and/or bounding box annotation to train a few layers of ViT to classify tokens to either foreground or background. Using 2 layers and 10 layers of BAViT, background and foreground tokens can be separated with 75% and 88% accuracy on VOC dataset and 71% and 80% accuracy on COCO dataset respectively. We show a 2 layer BAViT-small model as pre-processor to YOLOS can increase the throughput by 30% - 40% with a mAP drop of 3% without any sparse fine-tuning and 2% with sparse fine-tuning. Our approach is specifically targeted for Edge AI use cases.

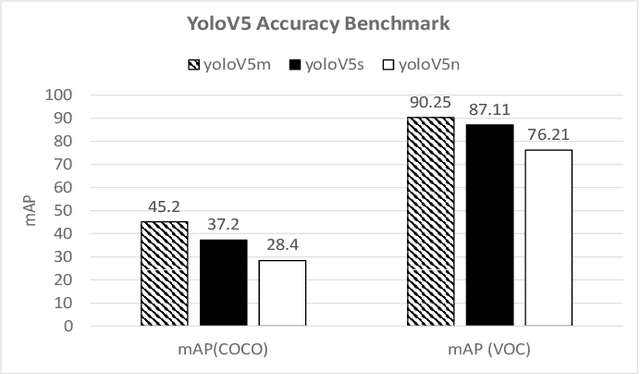

ActNAS : Generating Efficient YOLO Models using Activation NAS

Oct 11, 2024Abstract:Activation functions introduce non-linearity into Neural Networks, enabling them to learn complex patterns. Different activation functions vary in speed and accuracy, ranging from faster but less accurate options like ReLU to slower but more accurate functions like SiLU or SELU. Typically, same activation function is used throughout an entire model architecture. In this paper, we conduct a comprehensive study on the effects of using mixed activation functions in YOLO-based models, evaluating their impact on latency, memory usage, and accuracy across CPU, NPU, and GPU edge devices. We also propose a novel approach that leverages Neural Architecture Search (NAS) to design YOLO models with optimized mixed activation functions.The best model generated through this method demonstrates a slight improvement in mean Average Precision (mAP) compared to baseline model (SiLU), while it is 22.28% faster and consumes 64.15% less memory on the reference NPU device.

MCUBench: A Benchmark of Tiny Object Detectors on MCUs

Sep 27, 2024Abstract:We introduce MCUBench, a benchmark featuring over 100 YOLO-based object detection models evaluated on the VOC dataset across seven different MCUs. This benchmark provides detailed data on average precision, latency, RAM, and Flash usage for various input resolutions and YOLO-based one-stage detectors. By conducting a controlled comparison with a fixed training pipeline, we collect comprehensive performance metrics. Our Pareto-optimal analysis shows that integrating modern detection heads and training techniques allows various YOLO architectures, including legacy models like YOLOv3, to achieve a highly efficient tradeoff between mean Average Precision (mAP) and latency. MCUBench serves as a valuable tool for benchmarking the MCU performance of contemporary object detectors and aids in model selection based on specific constraints.

QGen: On the Ability to Generalize in Quantization Aware Training

Apr 19, 2024

Abstract:Quantization lowers memory usage, computational requirements, and latency by utilizing fewer bits to represent model weights and activations. In this work, we investigate the generalization properties of quantized neural networks, a characteristic that has received little attention despite its implications on model performance. In particular, first, we develop a theoretical model for quantization in neural networks and demonstrate how quantization functions as a form of regularization. Second, motivated by recent work connecting the sharpness of the loss landscape and generalization, we derive an approximate bound for the generalization of quantized models conditioned on the amount of quantization noise. We then validate our hypothesis by experimenting with over 2000 models trained on CIFAR-10, CIFAR-100, and ImageNet datasets on convolutional and transformer-based models.

Accelerating Deep Neural Networks via Semi-Structured Activation Sparsity

Sep 27, 2023Abstract:The demand for efficient processing of deep neural networks (DNNs) on embedded devices is a significant challenge limiting their deployment. Exploiting sparsity in the network's feature maps is one of the ways to reduce its inference latency. It is known that unstructured sparsity results in lower accuracy degradation with respect to structured sparsity but the former needs extensive inference engine changes to get latency benefits. To tackle this challenge, we propose a solution to induce semi-structured activation sparsity exploitable through minor runtime modifications. To attain high speedup levels at inference time, we design a sparse training procedure with awareness of the final position of the activations while computing the General Matrix Multiplication (GEMM). We extensively evaluate the proposed solution across various models for image classification and object detection tasks. Remarkably, our approach yields a speed improvement of $1.25 \times$ with a minimal accuracy drop of $1.1\%$ for the ResNet18 model on the ImageNet dataset. Furthermore, when combined with a state-of-the-art structured pruning method, the resulting models provide a good latency-accuracy trade-off, outperforming models that solely employ structured pruning techniques.

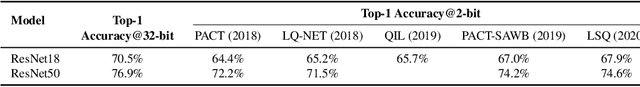

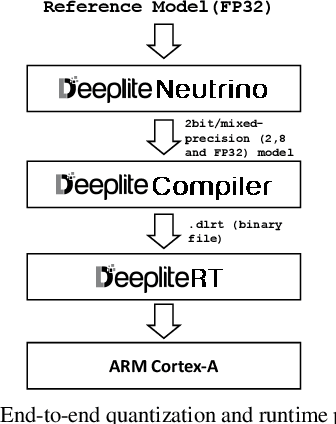

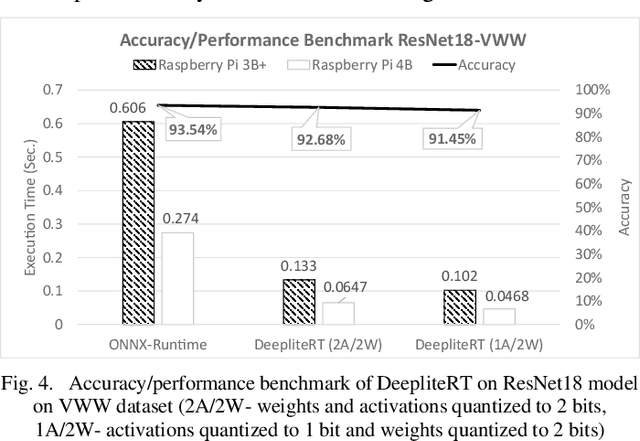

DeepliteRT: Computer Vision at the Edge

Sep 19, 2023

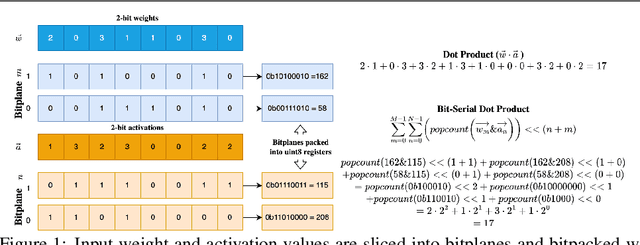

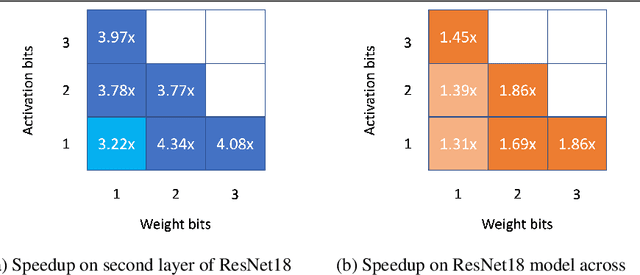

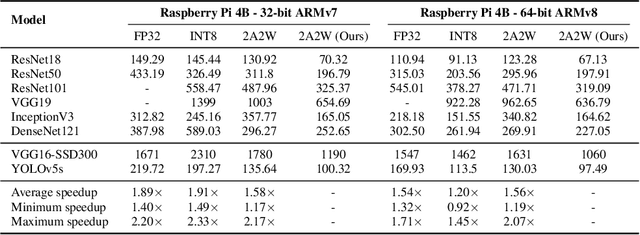

Abstract:The proliferation of edge devices has unlocked unprecedented opportunities for deep learning model deployment in computer vision applications. However, these complex models require considerable power, memory and compute resources that are typically not available on edge platforms. Ultra low-bit quantization presents an attractive solution to this problem by scaling down the model weights and activations from 32-bit to less than 8-bit. We implement highly optimized ultra low-bit convolution operators for ARM-based targets that outperform existing methods by up to 4.34x. Our operator is implemented within Deeplite Runtime (DeepliteRT), an end-to-end solution for the compilation, tuning, and inference of ultra low-bit models on ARM devices. Compiler passes in DeepliteRT automatically convert a fake-quantized model in full precision to a compact ultra low-bit representation, easing the process of quantized model deployment on commodity hardware. We analyze the performance of DeepliteRT on classification and detection models against optimized 32-bit floating-point, 8-bit integer, and 2-bit baselines, achieving significant speedups of up to 2.20x, 2.33x and 2.17x, respectively.

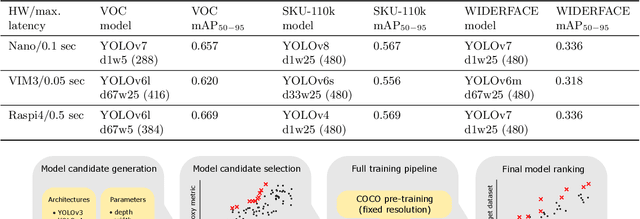

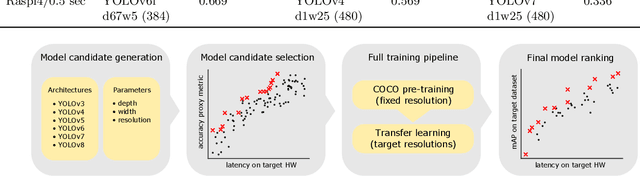

YOLOBench: Benchmarking Efficient Object Detectors on Embedded Systems

Jul 26, 2023

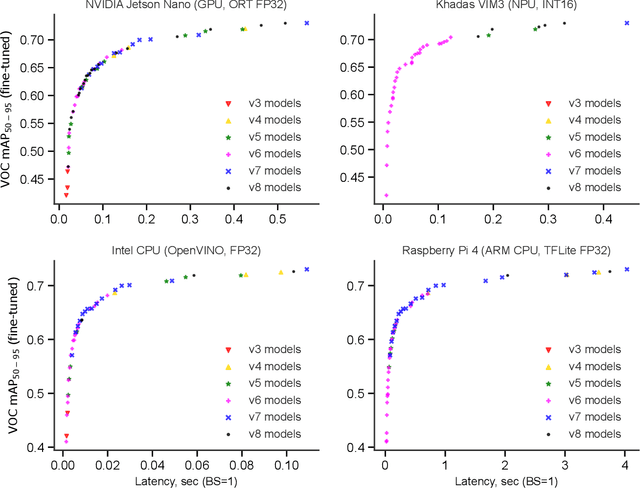

Abstract:We present YOLOBench, a benchmark comprised of 550+ YOLO-based object detection models on 4 different datasets and 4 different embedded hardware platforms (x86 CPU, ARM CPU, Nvidia GPU, NPU). We collect accuracy and latency numbers for a variety of YOLO-based one-stage detectors at different model scales by performing a fair, controlled comparison of these detectors with a fixed training environment (code and training hyperparameters). Pareto-optimality analysis of the collected data reveals that, if modern detection heads and training techniques are incorporated into the learning process, multiple architectures of the YOLO series achieve a good accuracy-latency trade-off, including older models like YOLOv3 and YOLOv4. We also evaluate training-free accuracy estimators used in neural architecture search on YOLOBench and demonstrate that, while most state-of-the-art zero-cost accuracy estimators are outperformed by a simple baseline like MAC count, some of them can be effectively used to predict Pareto-optimal detection models. We showcase that by using a zero-cost proxy to identify a YOLO architecture competitive against a state-of-the-art YOLOv8 model on a Raspberry Pi 4 CPU. The code and data are available at https://github.com/Deeplite/deeplite-torch-zoo

DeepGEMM: Accelerated Ultra Low-Precision Inference on CPU Architectures using Lookup Tables

Apr 18, 2023

Abstract:A lot of recent progress has been made in ultra low-bit quantization, promising significant improvements in latency, memory footprint and energy consumption on edge devices. Quantization methods such as Learned Step Size Quantization can achieve model accuracy that is comparable to full-precision floating-point baselines even with sub-byte quantization. However, it is extremely challenging to deploy these ultra low-bit quantized models on mainstream CPU devices because commodity SIMD (Single Instruction, Multiple Data) hardware typically supports no less than 8-bit precision. To overcome this limitation, we propose DeepGEMM, a lookup table based approach for the execution of ultra low-precision convolutional neural networks on SIMD hardware. The proposed method precomputes all possible products of weights and activations, stores them in a lookup table, and efficiently accesses them at inference time to avoid costly multiply-accumulate operations. Our 2-bit implementation outperforms corresponding 8-bit integer kernels in the QNNPACK framework by up to 1.74x on x86 platforms.

Accelerating Deep Learning Model Inference on Arm CPUs with Ultra-Low Bit Quantization and Runtime

Jul 18, 2022

Abstract:Deep Learning has been one of the most disruptive technological advancements in recent times. The high performance of deep learning models comes at the expense of high computational, storage and power requirements. Sensing the immediate need for accelerating and compressing these models to improve on-device performance, we introduce Deeplite Neutrino for production-ready optimization of the models and Deeplite Runtime for deployment of ultra-low bit quantized models on Arm-based platforms. We implement low-level quantization kernels for Armv7 and Armv8 architectures enabling deployment on the vast array of 32-bit and 64-bit Arm-based devices. With efficient implementations using vectorization, parallelization, and tiling, we realize speedups of up to 2x and 2.2x compared to TensorFlow Lite with XNNPACK backend on classification and detection models, respectively. We also achieve significant speedups of up to 5x and 3.2x compared to ONNX Runtime for classification and detection models, respectively.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge