Steve McLaughlin

Real-time, low-cost multi-person 3D pose estimation

Oct 11, 2021

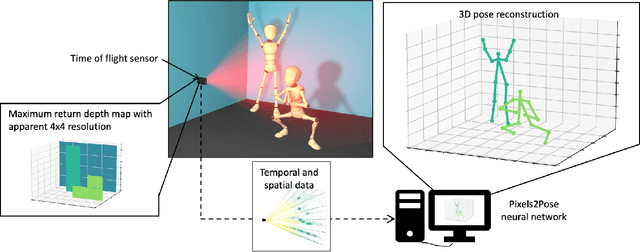

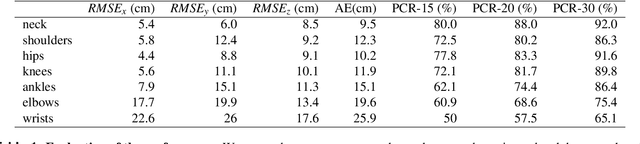

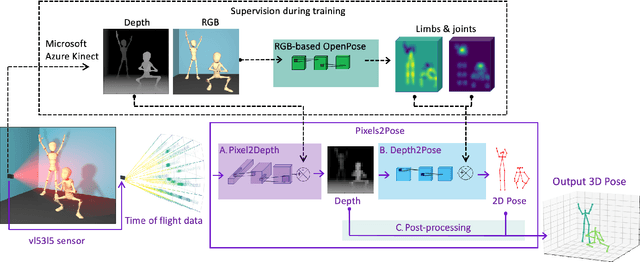

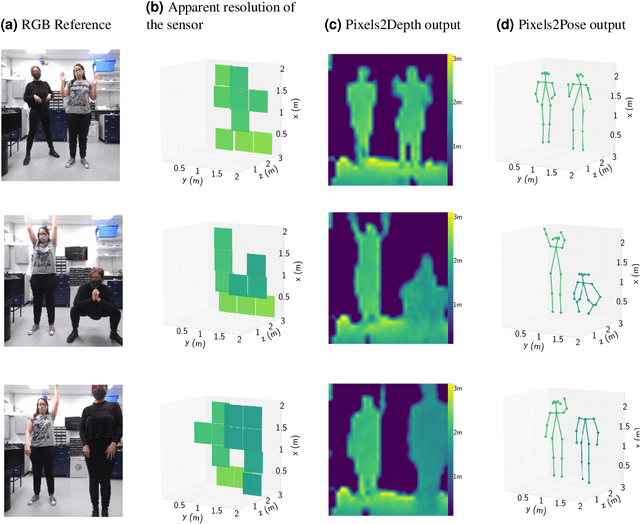

Abstract: The process of tracking human anatomy in computer vision is referred to as pose estimation, and it is used in fields ranging from gaming to surveillance. Three-dimensional pose estimation traditionally requires advanced equipment, such as multiple linked intensity cameras or high-resolution time-of-flight cameras to produce depth images. However, there are applications, e.g. consumer electronics, where significant constraints are placed on the size, power consumption, weight and cost of the usable technology. Here, we demonstrate that computational imaging methods can achieve accurate pose estimation and overcome the apparent limitations of time-of-flight sensors designed for much simpler tasks. The sensor we use is already widely integrated in consumer-grade mobile devices, and despite its low spatial resolution of only 4$\times$4 pixels, our proposed Pixels2Pose system transforms its data into accurate depth maps and 3D pose data of multiple people up to a distance of 3 m from the sensor. We are able to generate depth maps at a resolution of 32$\times$32 and 3D localization of body parts with an error of only $\approx$10 cm at a frame rate of 7 fps. This work opens up promising real-life applications in scenarios that were previously restricted by the advanced hardware requirements and cost of time-of-flight technology.

Fast unsupervised Bayesian image segmentation with adaptive spatial regularisation

Feb 02, 2016

Abstract: This paper presents a new Bayesian estimation technique for hidden Potts-Markov random fields with unknown regularisation parameters, with application to fast unsupervised K-class image segmentation. The technique is derived by first removing the regularisation parameter from the Bayesian model by marginalisation, followed by a small-variance-asymptotic (SVA) analysis in which the spatial regularisation and the integer-constrained terms of the Potts model are decoupled. The evaluation of this SVA Bayesian estimator is then relaxed into a problem that can be computed efficiently by iteratively solving a convex total-variation denoising problem and a least-squares clustering (K-means) problem, both of which can be solved straightforwardly, even in high dimensions, and with parallel computing techniques. This leads to a fast, fully unsupervised Bayesian image segmentation methodology in which the strength of the spatial regularisation is adapted automatically to the observed image during the inference procedure, and which can be easily applied in large 2D and 3D scenarios or in applications requiring low computing times. Experimental results on real images, as well as extensive comparisons with state-of-the-art algorithms, confirm that the proposed methodology offers extremely fast convergence and produces accurate segmentation results, with the important additional advantage of self-adjusting regularisation parameters.
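
To give a feel for the two building blocks named in the abstract, here is a minimal 1D sketch that runs total-variation denoising and then K-means clustering on the result. It is not the paper's estimator: the regularisation weight `lam` is fixed by hand rather than adapted automatically, the two steps are run once rather than iterated, and the function names `tv_denoise_1d` and `kmeans_1d` are hypothetical. The TV step uses a standard projected-gradient iteration on the dual problem, chosen here only for brevity.

```python
def tv_denoise_1d(y, lam, n_iter=300, tau=0.25):
    """Approximately minimise 0.5*||x - y||^2 + lam * sum_i |x[i+1] - x[i]|.

    Projected gradient on the dual variable z (one entry per neighbouring
    pair), with the primal signal recovered as x = y - D^T z.
    tau <= 0.25 keeps the iteration stable for the 1D difference operator.
    """
    n = len(y)
    z = [0.0] * (n - 1)

    def primal(z):
        # x = y - D^T z, where (D x)[i] = x[i+1] - x[i]
        x = list(y)
        for i in range(n - 1):
            x[i] += z[i]
            x[i + 1] -= z[i]
        return x

    for _ in range(n_iter):
        x = primal(z)
        for i in range(n - 1):
            step = z[i] + tau * (x[i + 1] - x[i])
            z[i] = max(-lam, min(lam, step))  # project onto |z[i]| <= lam
    return primal(z)


def kmeans_1d(x, k, n_iter=20):
    """Lloyd's algorithm on scalars; centres start evenly spaced over the range."""
    lo, hi = min(x), max(x)
    centers = [lo + (hi - lo) * j / max(k - 1, 1) for j in range(k)]
    labels = [0] * len(x)
    for _ in range(n_iter):
        # Assignment step: nearest centre in squared distance
        labels = [min(range(k), key=lambda j: (v - centers[j]) ** 2) for v in x]
        # Update step: mean of each cluster (empty clusters keep their centre)
        for j in range(k):
            members = [v for v, l in zip(x, labels) if l == j]
            if members:
                centers[j] = sum(members) / len(members)
    return labels, centers


if __name__ == "__main__":
    # Two-level signal with a deterministic alternating perturbation
    y = [(0.0 if i < 20 else 2.0) + (0.3 if i % 2 == 0 else -0.3) for i in range(40)]
    x = tv_denoise_1d(y, lam=0.5)
    labels, centers = kmeans_1d(x, k=2)
    print(labels)
    print(centers)
```

In this toy setting the TV step flattens the oscillation inside each segment while keeping the jump, so the clustering step sees two well-separated levels. Choosing `lam` by hand is exactly the step the paper's marginalisation is designed to avoid.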

Bayesian estimation of the multifractality parameter for image texture using a Whittle approximation

Apr 09, 2015

Abstract: Texture characterization is a central element in many image processing applications. Multifractal analysis is a useful signal and image processing tool, yet the accurate estimation of multifractal parameters for image texture remains a challenge. This is mainly because current estimation procedures consist of performing linear regressions across frequency scales of the two-dimensional (2D) dyadic wavelet transform, for which only a few such scales are computable for images. The strongly non-Gaussian nature of multifractal processes, combined with their complicated dependence structure, makes it difficult to develop suitable models for parameter estimation. Here, we propose a Bayesian procedure that addresses the difficulties in the estimation of the multifractality parameter. The originality of the procedure is threefold: the construction of a generic semi-parametric statistical model for the logarithm of wavelet leaders; the formulation of Bayesian estimators that are associated with this model and the set of parameter values admitted by multifractal theory; and the exploitation of a suitable Whittle approximation within the Bayesian model, which enables the otherwise infeasible evaluation of the posterior distribution associated with the model. Performance is assessed numerically for several 2D multifractal processes, for several image sizes and a large range of process parameters. The procedure yields significant benefits over current benchmark estimators in terms of estimation performance and ability to discriminate between the two most commonly used classes of multifractal process models. The gains in performance are particularly pronounced for small image sizes, notably enabling for the first time the analysis of image patches as small as 64x64 pixels.

Nonlinear spectral unmixing of hyperspectral images using Gaussian processes

Jul 23, 2012

Abstract: This paper presents an unsupervised algorithm for nonlinear unmixing of hyperspectral images. The proposed model assumes that the pixel reflectances result from a nonlinear function of the abundance vectors associated with the pure spectral components. We assume that the spectral signatures of the pure components and the nonlinear function are unknown. The first step of the proposed method consists of the Bayesian estimation of the abundance vectors for all the image pixels and the nonlinear function relating the abundance vectors to the observations. The endmembers are subsequently estimated using Gaussian process regression. The performance of the unmixing strategy is evaluated with simulations conducted on synthetic and real data.
