Steve Deppen

Technical Report: Quality Assessment Tool for Machine Learning with Clinical CT

Jul 27, 2021

Abstract: Image Quality Assessment (IQA) is important for scientific inquiry, especially in medical imaging and machine learning. Potential data quality issues can be exacerbated when human-based workflows use limited views of the data that may obscure digital artifacts. In practice, multiple factors such as network issues, accelerated acquisitions, motion artifacts, and imaging protocol design can impede the interpretation of image collections. The medical image processing community has developed a wide variety of tools for the inspection and validation of imaging data. Yet, IQA of computed tomography (CT) remains an under-recognized challenge, and no user-friendly tool is commonly available to address these potential issues. Here, we create and illustrate a pipeline specifically designed to identify and resolve issues encountered in large-scale data mining of clinically acquired CT data. Using the widely studied National Lung Screening Trial (NLST), we identified quality concerns in approximately 4% of 17,392 image volumes. To assess robustness, we applied the proposed pipeline to our internal datasets, where we find that the tool generalizes to clinically acquired medical images. In conclusion, the tool has been useful and time-saving for research studies of clinical data, and the code and tutorials are publicly available at https://github.com/MASILab/QA_tool.

Lung Cancer Risk Estimation with Incomplete Data: A Joint Missing Imputation Perspective

Jul 25, 2021

Abstract: Multi-modal data provide complementary information for clinical prediction, but missing data in clinical cohorts limit the number of subjects available for multi-modal learning. Multi-modal imputation of missing data is challenging with existing methods when 1) the missing data span heterogeneous modalities (e.g., image vs. non-image); or 2) one modality is largely missing. In this paper, we address imputation of missing data by modeling the joint distribution of multi-modal data. Motivated by the partial bidirectional generative adversarial net (PBiGAN), we propose a new Conditional PBiGAN (C-PBiGAN) method that imputes one modality using conditional knowledge from another modality. Specifically, C-PBiGAN introduces a conditional latent space in a missing-data imputation framework that jointly encodes the available multi-modal data, along with a class regularization loss on imputed data to recover discriminative information. To our knowledge, it is the first generative adversarial model that addresses multi-modal imputation of missing data by modeling the joint distribution of image and non-image data. We validate our model with both the National Lung Screening Trial (NLST) dataset and an external clinical validation cohort. The proposed C-PBiGAN achieves significant improvements in lung cancer risk estimation compared with representative imputation methods (e.g., AUC values increase on both NLST (+2.9%) and the in-house dataset (+4.3%) compared with PBiGAN, p < 0.05).

Deep Multi-path Network Integrating Incomplete Biomarker and Chest CT Data for Evaluating Lung Cancer Risk

Oct 19, 2020

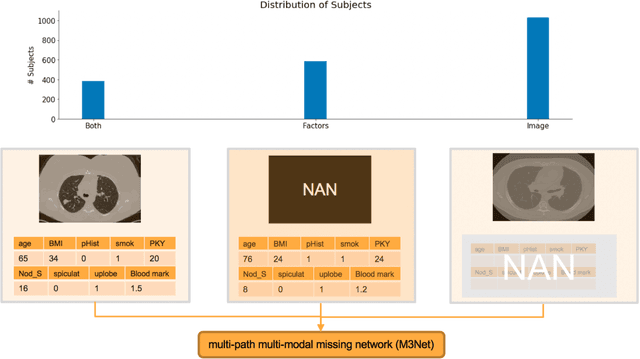

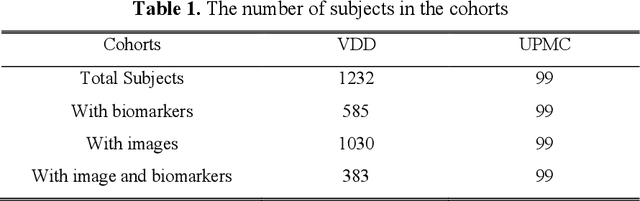

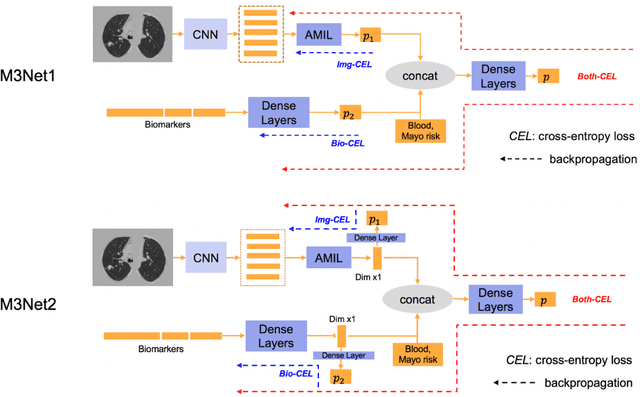

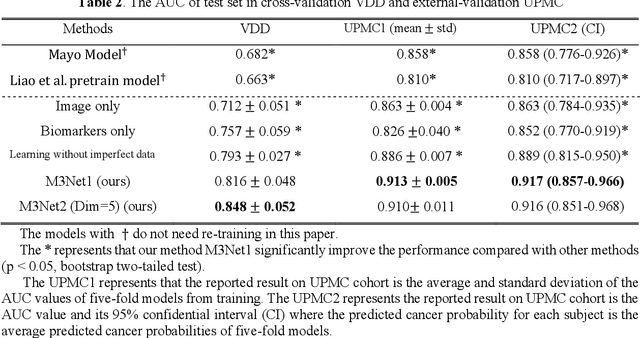

Abstract: Clinical data elements (CDEs) (e.g., age, smoking history), blood markers, and chest computed tomography (CT) structural features have been regarded as effective means for assessing lung cancer risk. These independent variables can provide complementary information, and we hypothesize that combining them will improve prediction accuracy. In practice, not all patients have all of these variables available. In this paper, we propose a new network design, termed the multi-path multi-modal missing network (M3Net), which integrates multi-modal data (i.e., CDEs, biomarkers, and CT images) while accounting for missing modalities through a multi-path neural network. Each path learns discriminative features of one modality, and the modalities are fused in a second stage for an integrated prediction. The network can be trained end-to-end with both medical image features and CDEs/biomarkers, or can make a prediction from a single modality. We evaluate M3Net with datasets including three sites from the Consortium for Molecular and Cellular Characterization of Screen-Detected Lesions (MCL) project. Our method is cross-validated within a cohort of 1291 subjects (383 subjects with complete CDEs/biomarkers and CT images) and externally validated with a cohort of 99 subjects (all 99 with complete CDEs/biomarkers and CT images). Both cross-validation and external-validation results show that combining multiple modalities significantly improves prediction performance over a single modality. The results suggest that including subjects who are missing either CDEs/biomarkers or CT imaging features can contribute to the discriminatory power of our model (p < 0.05, bootstrap two-tailed test). In summary, the proposed M3Net framework provides an effective way to integrate image and non-image data in the context of missing information.
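To make the two-stage idea in the abstract above concrete, the following is a minimal sketch of fusing per-path outputs when some modalities are absent. It is not the M3Net implementation: the function name `fuse_paths`, the modality keys, and the use of a plain mean as the fusion step are all assumptions made for illustration. In the abstract, the fusion stage is part of a network trained end-to-end.

```python
def fuse_paths(path_scores):
    """Fuse per-modality risk scores, skipping missing modalities.

    path_scores maps a (hypothetical) modality name to the score produced
    by that modality's path, or to None when the modality is missing.
    A plain mean stands in for a learned second-stage fusion.
    """
    available = [score for score in path_scores.values() if score is not None]
    if not available:
        raise ValueError("at least one modality must be available")
    return sum(available) / len(available)


# A subject with CDEs and a biomarker score but no CT image.
subject = {"cde": 0.5, "biomarker": 0.25, "ct": None}
risk = fuse_paths(subject)
```

The point of the sketch is only that a missing path is excluded from the fusion rather than filled with a placeholder value, so a subject with a single modality still receives a prediction.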

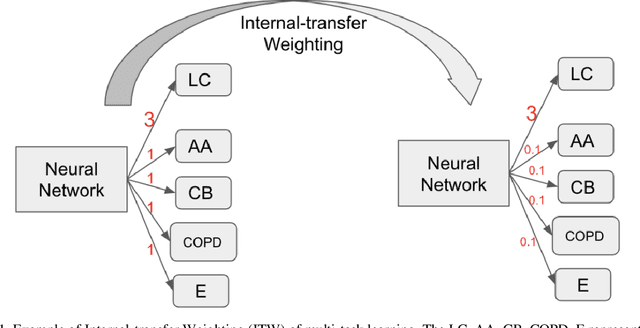

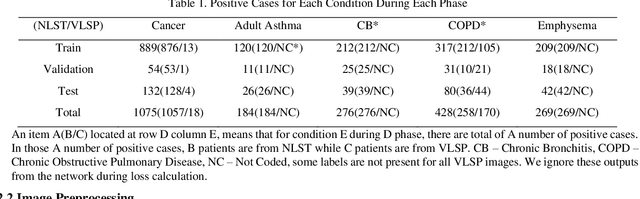

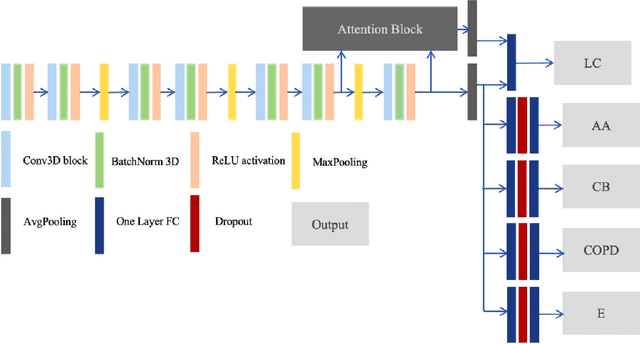

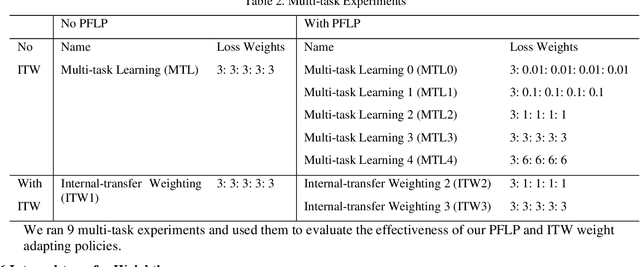

Internal-transfer Weighting of Multi-task Learning for Lung Cancer Detection

Dec 16, 2019

Abstract: Recently, multi-task networks have been shown both to offer additional estimation capabilities and, perhaps more importantly, to increase performance over single-task networks on a "main/primary" task. However, balancing the optimization criteria of multi-task networks across different tasks is an area of active exploration. Here, we extend a previously proposed 3D attention-based network with four additional multi-task subnetworks for the detection of lung cancer and four auxiliary tasks (diagnosis of asthma, chronic bronchitis, chronic obstructive pulmonary disease, and emphysema). We introduce and evaluate a learning policy, the Periodic Focusing Learning Policy (PFLP), that alternates the dominance of tasks throughout training. To improve performance on the primary task, we propose an Internal-Transfer Weighting (ITW) strategy that suppresses the loss functions of the auxiliary tasks in the final stages of training. To evaluate this approach, we examined 3386 patients (single scan per patient) from the National Lung Screening Trial (NLST) and de-identified data from the Vanderbilt Lung Screening Program, with a 2517/277/592 (scans) split for training, validation, and testing. Baseline networks include a single-task strategy and a multi-task strategy without adaptive weights (PFLP/ITW), while the primary experiments are multi-task trials with PFLP, ITW, or both. On the test set for lung cancer prediction, the baseline single-task network achieved an AUC of 0.8080, and the multi-task baseline failed to converge (AUC 0.6720). However, applying PFLP helped the multi-task network converge and achieved a test-set lung cancer prediction AUC of 0.8402. Furthermore, our ITW technique boosted the PFLP-enabled multi-task network to an AUC of 0.8462 (McNemar test, p < 0.01).
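The two weighting ideas in the abstract above can be illustrated with a small loss-weight schedule. This is a sketch under stated assumptions, not the authors' schedule: the function names, the round-robin rotation, the weight values, and the epoch at which the final stage begins are all hypothetical. It only shows the general shape of a policy that rotates which task dominates and then suppresses the auxiliary losses late in training.

```python
def task_weights(epoch, n_tasks=5, final_stage_start=80,
                 focus_weight=1.0, rest_weight=0.1, suppressed_weight=0.0):
    """Return one loss weight per task; index 0 is the primary task.

    Before final_stage_start, the dominant task rotates every epoch
    (a stand-in for a periodic focusing policy). From final_stage_start
    on, auxiliary losses are suppressed so the primary task dominates.
    All numeric defaults are illustrative assumptions.
    """
    if epoch >= final_stage_start:
        return [focus_weight] + [suppressed_weight] * (n_tasks - 1)
    focused = epoch % n_tasks
    return [focus_weight if task == focused else rest_weight
            for task in range(n_tasks)]


def weighted_loss(losses, weights):
    """Combine per-task losses into a single training objective."""
    return sum(w * loss for w, loss in zip(weights, losses))


# One primary task (lung cancer) plus four auxiliary tasks.
per_task_losses = [2.0, 1.0, 1.0, 1.0, 1.0]
late_objective = weighted_loss(per_task_losses, task_weights(epoch=90))
```

With these defaults, the objective late in training depends only on the primary-task loss, while earlier epochs give each task a turn at the largest weight.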

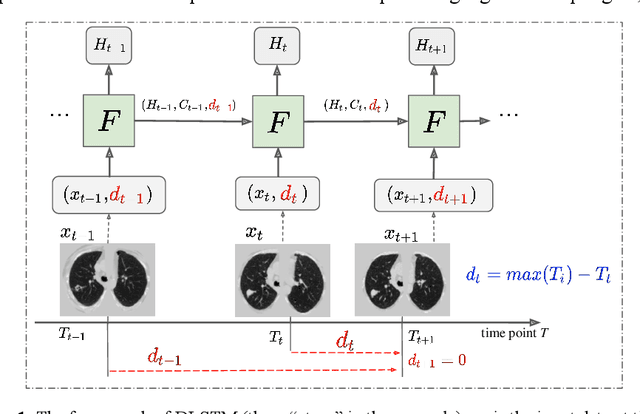

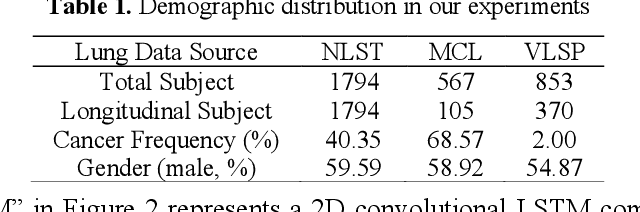

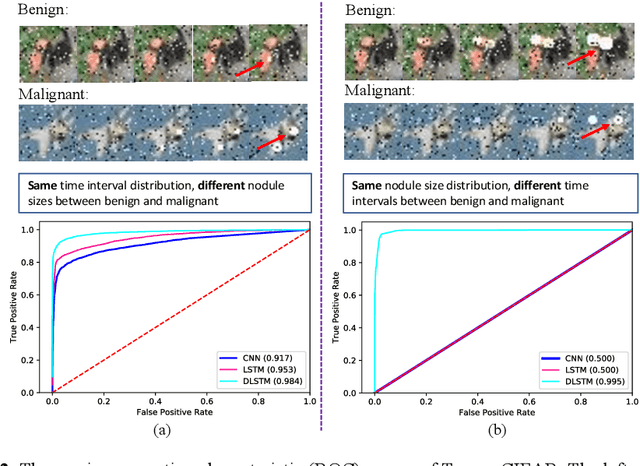

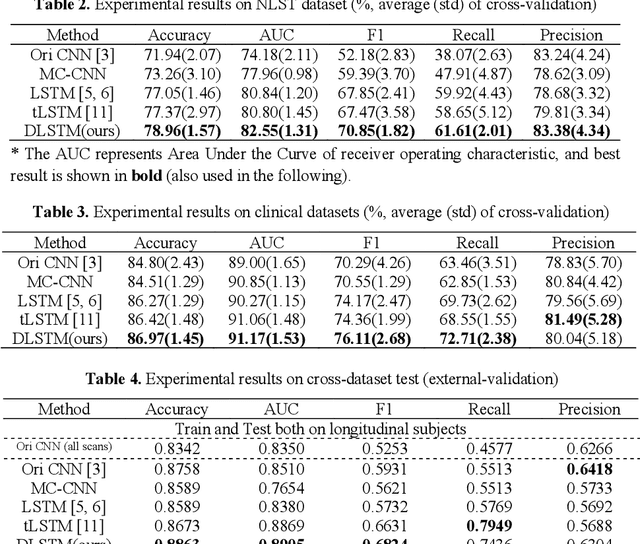

Distanced LSTM: Time-Distanced Gates in Long Short-Term Memory Models for Lung Cancer Detection

Sep 11, 2019

Abstract: The field of lung nodule detection and cancer prediction has been rapidly developing with the support of large public data archives. Previous studies have largely focused on cross-sectional (single) CT data. Herein, we consider longitudinal data. The Long Short-Term Memory (LSTM) model addresses learning with regularly spaced time points (i.e., equal temporal intervals). However, clinical imaging follows patient needs, often with heterogeneous, irregular acquisitions. To model both regular and irregular longitudinal samples, we generalize the LSTM model with the Distanced LSTM (DLSTM) for temporally varied acquisitions. The DLSTM includes a Temporal Emphasis Model (TEM) that enables learning across regularly and irregularly sampled intervals. Briefly, (1) the time intervals between longitudinal scans are modeled explicitly; (2) temporally adjustable forget and input gates are introduced for irregular temporal sampling; and (3) the latest longitudinal scan has an additional emphasis term. We evaluate the DLSTM framework on three datasets: simulated data, 1794 National Lung Screening Trial (NLST) scans, and 1420 clinically acquired data with heterogeneous and irregular temporal accession. The experiments on the first two datasets demonstrate that our method achieves competitive performance on both simulated and regularly sampled data (e.g., improving the F1 score over LSTM from 0.6785 to 0.7085 on NLST). In external validation on clinically and irregularly acquired data, the benchmarks achieved an area under the ROC curve (AUC) of 0.8350 (CNN feature) and 0.8380 (LSTM), while the proposed DLSTM achieves 0.8905.
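As a rough illustration of the time-distanced gates described in the abstract above, the sketch below scales the forget and input gates of a scalar LSTM-style cell by a weight that depends on how far a scan is from the latest one. The abstract does not give the form of the Temporal Emphasis Model, so the exponential decay, the `decay` parameter, the scalar weights, and all function names here are assumptions for illustration only.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def temporal_emphasis(distance, decay=1.0):
    # Assumed form: exponential decay in the time distance to the latest scan.
    return math.exp(-decay * distance)


def distanced_cell_update(c_prev, x, distance,
                          w_f=1.0, w_i=1.0, w_g=1.0, decay=1.0):
    """One scalar cell-state update with time-scaled forget and input gates.

    With distance == 0 the emphasis is 1 and this reduces to an ordinary
    (scalar, bias-free) LSTM cell-state update.
    """
    emphasis = temporal_emphasis(distance, decay)
    forget_gate = emphasis * sigmoid(w_f * x)
    input_gate = emphasis * sigmoid(w_i * x)
    candidate = math.tanh(w_g * x)
    return forget_gate * c_prev + input_gate * candidate


# Three scans taken 2.0, 1.0, and 0.0 time units before the latest scan.
cell_state = 0.0
for feature, distance in [(0.3, 2.0), (0.5, 1.0), (0.8, 0.0)]:
    cell_state = distanced_cell_update(cell_state, feature, distance)
```

Under this assumed form, older scans contribute less to the cell state than recent ones, and the latest scan (distance zero) is processed exactly as a standard cell would process it.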
