Alexis B. Paulson

Deep Multi-task Prediction of Lung Cancer and Cancer-free Progression from Censored Heterogenous Clinical Imaging

Nov 12, 2019

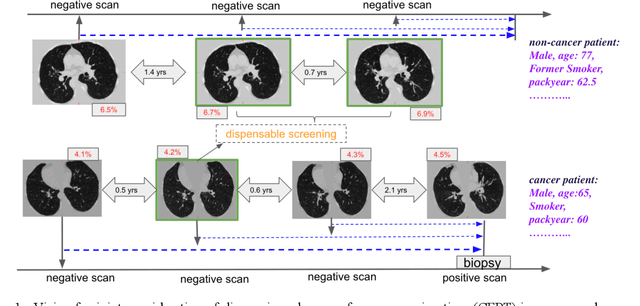

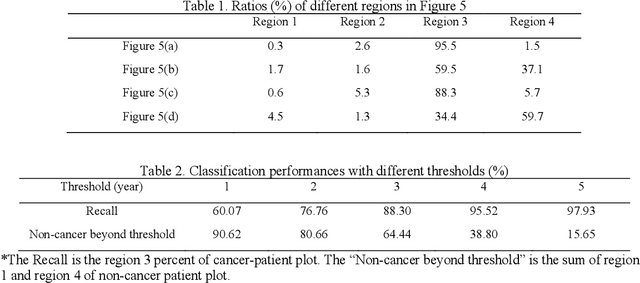

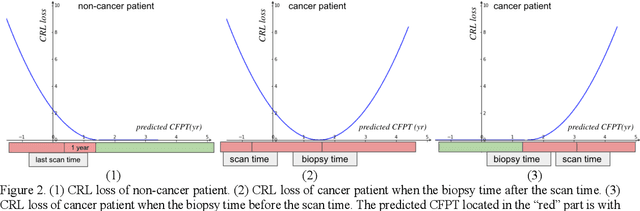

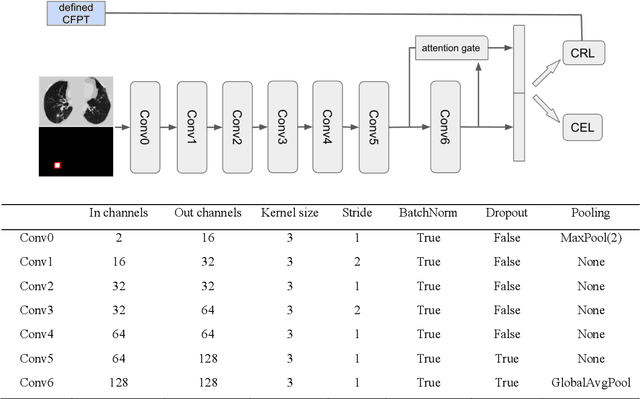

Abstract: Annual low-dose computed tomography (CT) lung screening is currently advised for individuals at high risk of lung cancer (e.g., heavy smokers between 55 and 80 years old). The recommended screening practice significantly reduces all-cause mortality, but the vast majority of screening results are negative for cancer. If patients at very low risk could be identified from individualized, image-based biomarkers, health care resources could be allocated more efficiently to higher-risk patients and overall exposure to ionizing radiation could be reduced. In this work, we propose a multi-task (diagnosis and prognosis) deep convolutional neural network to improve diagnostic accuracy over a baseline model while simultaneously estimating a personalized cancer-free progression time (CFPT). A novel Censored Regression Loss (CRL) is proposed to perform weakly supervised regression so that even single negative screening scans can provide small incremental value. Herein, we study 2287 scans from 1433 de-identified patients from the Vanderbilt Lung Screening Program (VLSP) and Molecular Characterization Laboratories (MCL) cohorts. Using five-fold cross-validation, we train a 3D attention-based network under two scenarios: (1) single-task learning with classification only, and (2) multi-task learning with both classification and regression. Single-task learning yields a higher AUC than the pre-trained model of the Kaggle challenge winner (0.878 vs. 0.856), and multi-task learning significantly improves on single-task learning (AUC 0.895, p<0.01, McNemar test). In summary, the image-based predicted CFPT can be used in follow-up year lung cancer prediction and data assessment.
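The abstract does not define the Censored Regression Loss, so the following is only a minimal sketch of one common way to regress on right-censored targets, not the authors' formulation. It assumes that a negative scan supplies a lower bound on the CFPT (the patient was cancer-free for at least the observed time), so a censored sample is penalized only when the prediction falls below that bound. The function name `censored_regression_loss` and its arguments are hypothetical.

```python
def censored_regression_loss(predicted, observed, censored):
    """Mean squared error with a one-sided penalty for censored samples.

    predicted: predicted progression times
    observed:  observed times (exact if uncensored, lower bounds if censored)
    censored:  booleans, True where the observed time is only a lower bound
    """
    total = 0.0
    for p, t, c in zip(predicted, observed, censored):
        if c:
            # Censored: the true time is at least t, so only
            # under-prediction counts as an error (assumed form).
            err = max(0.0, t - p)
        else:
            # Uncensored: ordinary regression error.
            err = p - t
        total += err * err
    return total / len(predicted)


loss = censored_regression_loss(
    predicted=[2.0, 5.0, 1.0],
    observed=[3.0, 4.0, 2.0],
    censored=[False, True, True],
)
```

In this toy call the second sample contributes nothing, because its prediction already exceeds the censoring bound, while the third is penalized for predicting below it.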

Distanced LSTM: Time-Distanced Gates in Long Short-Term Memory Models for Lung Cancer Detection

Sep 11, 2019

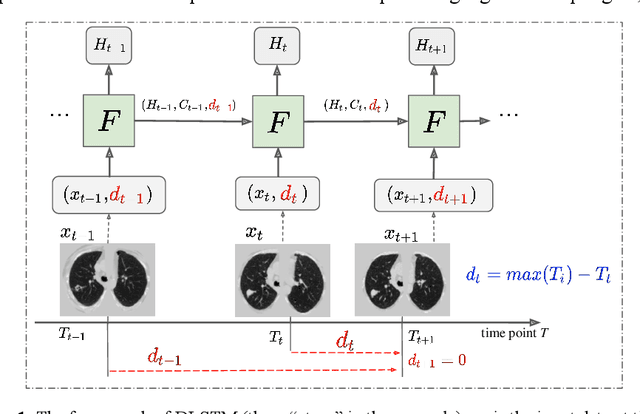

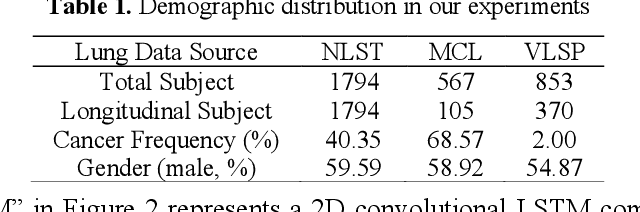

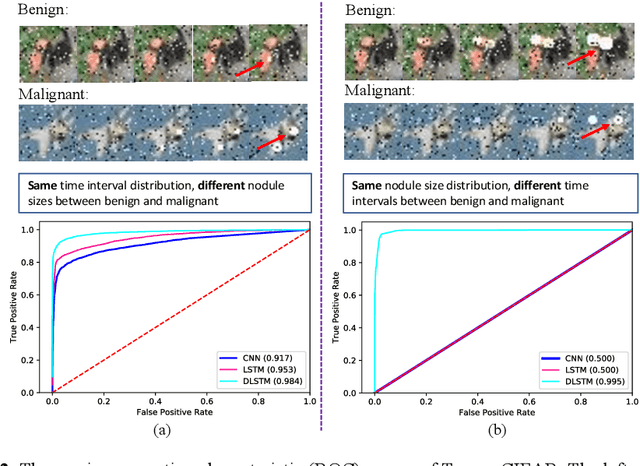

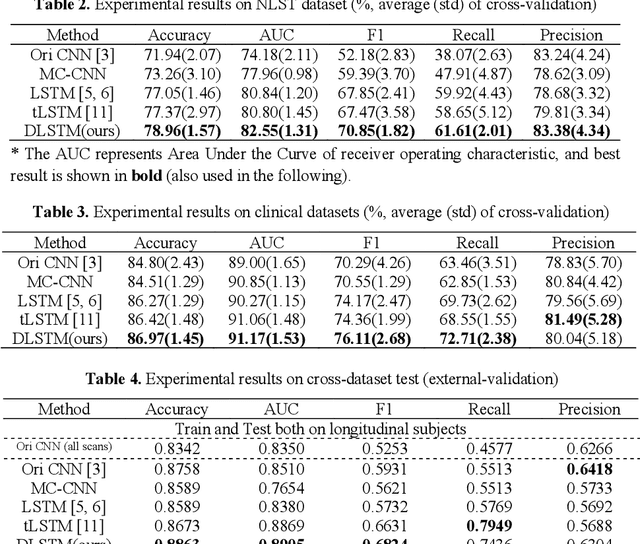

Abstract: The field of lung nodule detection and cancer prediction has been rapidly developing with the support of large public data archives. Previous studies have largely focused on cross-sectional (single) CT data. Herein, we consider longitudinal data. The Long Short-Term Memory (LSTM) model addresses learning with regularly spaced time points (i.e., equal temporal intervals). However, clinical imaging follows patient needs, with often heterogeneous, irregular acquisitions. To model both regular and irregular longitudinal samples, we generalize the LSTM model with the Distanced LSTM (DLSTM) for temporally varied acquisitions. The DLSTM includes a Temporal Emphasis Model (TEM) that enables learning across regularly and irregularly sampled intervals. Briefly, (1) the time intervals between longitudinal scans are modeled explicitly; (2) temporally adjustable forget and input gates are introduced for irregular temporal sampling; and (3) the latest longitudinal scan has an additional emphasis term. We evaluate the DLSTM framework on three datasets: simulated data, 1794 National Lung Screening Trial (NLST) scans, and 1420 clinically acquired data with heterogeneous and irregular temporal accession. The experiments on the first two datasets demonstrate that our method achieves competitive performance on both simulated and regularly sampled data (e.g., improving the F1 score over LSTM from 0.6785 to 0.7085 on NLST). In external validation on clinically and irregularly acquired data, the benchmarks achieved 0.8350 (CNN feature) and 0.8380 (LSTM) on the area under the ROC curve (AUC), while the proposed DLSTM achieves 0.8905.
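The abstract names temporally adjustable forget and input gates but gives no equations, so the following is only an illustrative sketch of the general idea, not the published DLSTM. It assumes a scalar LSTM cell in which both gates are scaled by an exponential decay of the time gap `delta_t` attached to the current scan; the decay form, the rate `alpha`, and the weight keys in `w` are all hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def time_decay(delta_t, alpha=0.5):
    """Assumed emphasis weight: 1 at delta_t = 0, shrinking as the gap grows."""
    return math.exp(-alpha * delta_t)


def distanced_lstm_step(x, h_prev, c_prev, delta_t, w, alpha=0.5):
    """One step of a scalar LSTM cell with time-scaled forget and input gates."""
    d = time_decay(delta_t, alpha)
    # Forget and input gates are damped for scans with a large time gap.
    f = d * sigmoid(w["wf"] * x + w["uf"] * h_prev + w["bf"])
    i = d * sigmoid(w["wi"] * x + w["ui"] * h_prev + w["bi"])
    # Output gate and candidate state follow the standard LSTM form.
    o = sigmoid(w["wo"] * x + w["uo"] * h_prev + w["bo"])
    g = math.tanh(w["wg"] * x + w["ug"] * h_prev + w["bg"])
    c = f * c_prev + i * g
    h = o * math.tanh(c)
    return h, c
```

With `delta_t = 0` this reduces to a standard LSTM step, which is consistent with the abstract's description of the DLSTM as a generalization of the LSTM that also handles regularly sampled data.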
