Sriram Kumar

NurtureNet: A Multi-task Video-based Approach for Newborn Anthropometry

May 09, 2024

Abstract: Malnutrition among newborns is a top public health concern in developing countries. Identification and subsequent growth monitoring are key to successful interventions. However, this is challenging in rural communities where health systems tend to be inaccessible and under-equipped, with poor adherence to protocol. Our goal is to equip health workers and public health systems with a solution for contactless newborn anthropometry in the community. We propose NurtureNet, a multi-task model that fuses visual information (a video taken with a low-cost smartphone) with tabular inputs to regress multiple anthropometry estimates including weight, length, head circumference, and chest circumference. We show that visual proxy tasks of segmentation and keypoint prediction further improve performance. We establish the efficacy of the model through several experiments and achieve a relative error of 3.9% and mean absolute error of 114.3 g for weight estimation. Model compression to 15 MB also allows offline deployment to low-cost smartphones.

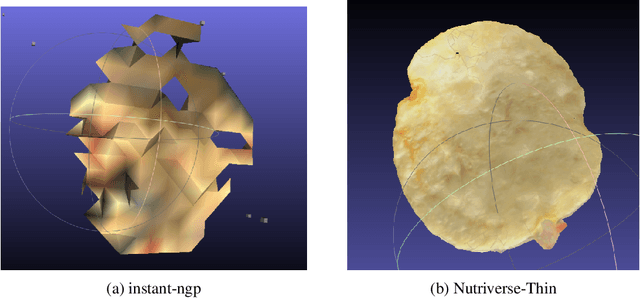

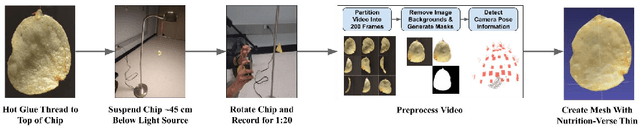

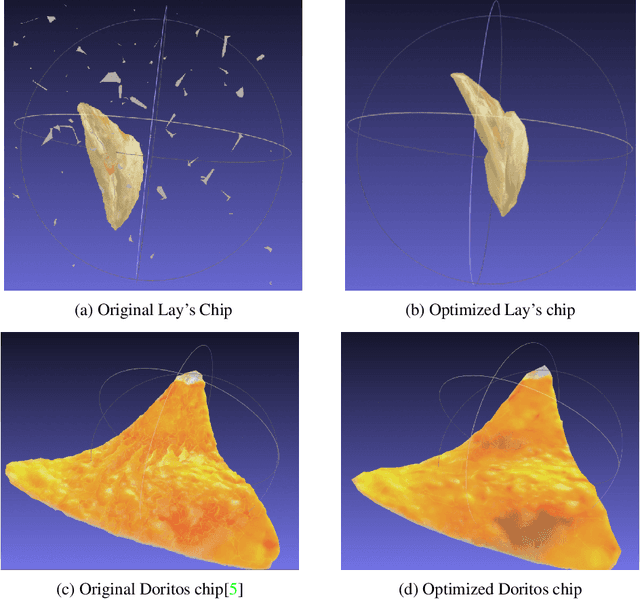

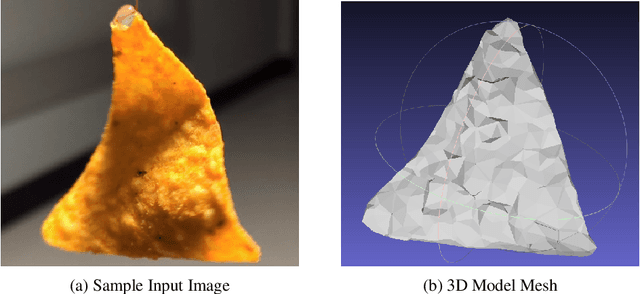

NutritionVerse-Thin: An Optimized Strategy for Enabling Improved Rendering of 3D Thin Food Models

Apr 12, 2023

Abstract: With the growth in capabilities of generative models, there has been growing interest in using photo-realistic renders of common 3D food items to improve downstream tasks such as food printing, nutrition prediction, or management of food wastage. Despite 3D modelling capabilities being more accessible than ever due to the success of NeRF-based view synthesis, such rendering methods still struggle to correctly capture thin food objects, often generating meshes with significant holes. In this study, we present an optimized strategy for enabling improved rendering of thin 3D food models, and demonstrate qualitative improvements in rendering quality. Our method generates the 3D model mesh via a proposed thin-object-optimized differentiable reconstruction method and tailors the strategy at both the data collection and training stages to better handle thin objects. While simple, we find that this technique can be employed for quick and highly consistent capturing of thin 3D objects.
