Srinivasan Arunachalam

CWI

Efficiently learning depth-3 circuits via quantum agnostic boosting

Sep 17, 2025

Abstract:We initiate the study of quantum agnostic learning of phase states with respect to a function class $\mathsf{C}\subseteq \{c:\{0,1\}^n\rightarrow \{0,1\}\}$: given copies of an unknown $n$-qubit state $|\psi\rangle$ which has fidelity $\textsf{opt}$ with a phase state $|\phi_c\rangle=\frac{1}{\sqrt{2^n}}\sum_{x\in \{0,1\}^n}(-1)^{c(x)}|x\rangle$ for some $c\in \mathsf{C}$, output $|\phi\rangle$ which has fidelity $|\langle \phi | \psi \rangle|^2 \geq \textsf{opt}-\varepsilon$. To this end, we give agnostic learning protocols for the following classes: (i) Size-$t$ decision trees, in time $\textsf{poly}(n,t,1/\varepsilon)$. This also implies $k$-juntas can be agnostically learned in time $\textsf{poly}(n,2^k,1/\varepsilon)$. (ii) $s$-term DNF formulas, in near-polynomial time $\textsf{poly}(n,(s/\varepsilon)^{\log \log s/\varepsilon})$. Our main technical contribution is a quantum agnostic boosting protocol which converts a weak agnostic learner, which outputs a parity state $|\phi\rangle$ such that $|\langle \phi|\psi\rangle|^2\geq \textsf{opt}/\textsf{poly}(n)$, into a strong learner which outputs a superposition of parity states $|\phi'\rangle$ such that $|\langle \phi'|\psi\rangle|^2\geq \textsf{opt} - \varepsilon$. Using quantum agnostic boosting, we obtain the first near-polynomial time $n^{O(\log \log n)}$ algorithm for learning $\textsf{poly}(n)$-sized depth-$3$ circuits (consisting of $\textsf{AND}$, $\textsf{OR}$, $\textsf{NOT}$ gates) in the uniform quantum $\textsf{PAC}$ model using quantum examples. Classically, the analogue of efficiently learning depth-$3$ circuits (and even depth-$2$ circuits) in the uniform $\textsf{PAC}$ model has been a longstanding open question in computational learning theory. Our work nearly settles this question, when the learner is given quantum examples.
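
To make the definitions in this abstract concrete, the following sketch builds the amplitudes of a phase state $|\phi_c\rangle$ for a Boolean function $c$ and computes the fidelity $|\langle \phi|\psi\rangle|^2$ by brute force. The helper names (`phase_state`, `fidelity`) and the two example concepts are hypothetical, chosen only for illustration. This is a classical simulation that takes time exponential in $n$; it is not the learning protocol from the paper.

```python
import math
from itertools import product

def phase_state(c, n):
    """Amplitudes of |phi_c> = 2^{-n/2} * sum_x (-1)^{c(x)} |x>, listed over all x in {0,1}^n."""
    norm = 1.0 / math.sqrt(2 ** n)
    return [norm * (-1) ** c(x) for x in product((0, 1), repeat=n)]

def fidelity(phi, psi):
    """|<phi|psi>|^2 for two amplitude vectors (real or complex)."""
    inner = sum(complex(a).conjugate() * b for a, b in zip(phi, psi))
    return abs(inner) ** 2

n = 3
parity_01 = lambda x: x[0] ^ x[1]   # hypothetical concept: parity of the first two bits
and_01 = lambda x: x[0] & x[1]      # hypothetical concept: AND of the first two bits

phi_parity = phase_state(parity_01, n)
phi_and = phase_state(and_01, n)

same = fidelity(phi_parity, phi_parity)   # a state has fidelity 1 with itself
cross = fidelity(phi_parity, phi_and)     # overlap between two different phase states
```

In this toy case the two concepts disagree on three of the four settings of the first two bits, so the inner product is $-1/2$ and the fidelity is $1/4$.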

Learning low-degree quantum objects

May 17, 2024

Abstract:We consider the problem of learning low-degree quantum objects up to $\varepsilon$-error in $\ell_2$-distance. We show the following results: $(i)$ unknown $n$-qubit degree-$d$ (in the Pauli basis) quantum channels and unitaries can be learned using $O(1/\varepsilon^d)$ queries (independent of $n$), $(ii)$ polynomials $p:\{-1,1\}^n\rightarrow [-1,1]$ arising from $d$-query quantum algorithms can be classically learned from $O((1/\varepsilon)^d\cdot \log n)$ many random examples $(x,p(x))$ (which implies learnability even for $d=O(\log n)$), and $(iii)$ degree-$d$ polynomials $p:\{-1,1\}^n\to [-1,1]$ can be learned through $O(1/\varepsilon^d)$ queries to a quantum unitary $U_p$ that block-encodes $p$. Our main technical contributions are new Bohnenblust-Hille inequalities for quantum channels and completely bounded polynomials.

On the Role of Entanglement and Statistics in Learning

Jun 05, 2023

Abstract:In this work we make progress in understanding the relationship between learning models with access to entangled, separable and statistical measurements in the quantum statistical query (QSQ) model. To this end, we show the following results. $\textbf{Entangled versus separable measurements.}$ The goal here is to learn an unknown $f$ from the concept class $C\subseteq \{f:\{0,1\}^n\rightarrow [k]\}$ given copies of $\frac{1}{\sqrt{2^n}}\sum_x \vert x,f(x)\rangle$. We show that, if $T$ copies suffice to learn $f$ using entangled measurements, then $O(nT^2)$ copies suffice to learn $f$ using just separable measurements. $\textbf{Entangled versus statistical measurements.}$ The goal here is to learn a function $f \in C$ given access to separable measurements and statistical measurements. We exhibit a class $C$ that gives an exponential separation between QSQ learning and quantum learning with entangled measurements (even in the presence of noise). This proves the "quantum analogue" of the seminal result of Blum et al. [BKW'03] that separates classical SQ and PAC learning with classification noise. $\textbf{QSQ lower bounds for learning states.}$ We introduce a quantum statistical query dimension (QSD), which we use to give lower bounds on QSQ learning. With this we prove superpolynomial QSQ lower bounds for testing purity, shadow tomography, the Abelian hidden subgroup problem, degree-$2$ functions, planted bi-clique states and output states of Clifford circuits of depth $\textsf{polylog}(n)$. $\textbf{Further applications.}$ We give an $\textit{unconditional}$ separation between weak and strong error mitigation and prove lower bounds for learning distributions in the QSQ model. Prior works by Quek et al. [QFK+'22], Hinsche et al. [HIN+'22], and Nietner et al. [NIS+'23] proved the analogous results $\textit{assuming}$ diagonal measurements and our work removes this assumption.

A survey on the complexity of learning quantum states

May 31, 2023

Abstract:We survey various recent results that rigorously study the complexity of learning quantum states. These include progress on quantum tomography, learning physical quantum states, alternate learning models to tomography and learning classical functions encoded as quantum states. We highlight how these results are paving the way for a highly successful theory with a range of exciting open questions. To this end, we distill 25 open questions from these results.

Private learning implies quantum stability

Feb 14, 2021

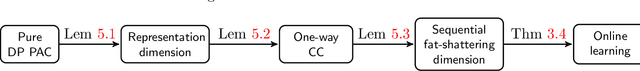

Abstract:Learning an unknown $n$-qubit quantum state $\rho$ is a fundamental challenge in quantum computing. Information-theoretically, it is known that tomography requires exponentially many (in $n$) copies of $\rho$ to estimate it up to trace distance. Motivated by computational learning theory, Aaronson et al. introduced many (weaker) learning models: the PAC model of learning states (Proceedings of Royal Society A'07), shadow tomography (STOC'18) for learning "shadows" of a state, a model that also requires learners to be differentially private (STOC'19) and the online model of learning states (NeurIPS'18). In these models it was shown that an unknown state can be learned "approximately" using linear-in-$n$ many copies of $\rho$. But is there any relationship between these models? In this paper we prove a sequence of (information-theoretic) implications from differentially-private PAC learning, to communication complexity, to online learning and then to quantum stability. Our main result generalizes the recent work of Bun, Livni and Moran (Journal of the ACM'21) who showed that finite Littlestone dimension (of Boolean-valued concept classes) implies PAC learnability in the (approximate) differentially private (DP) setting. We first consider their work in the real-valued setting and further extend their techniques to the setting of learning quantum states. Key to our results is our generic quantum online learner, Robust Standard Optimal Algorithm (RSOA), which is robust to adversarial imprecision. We then show information-theoretic implications between DP learning quantum states in the PAC model, learnability of quantum states in the one-way communication model, online learning of quantum states, quantum stability (which is our conceptual contribution), various combinatorial parameters and give further applications to gentle shadow tomography and noisy quantum state learning.

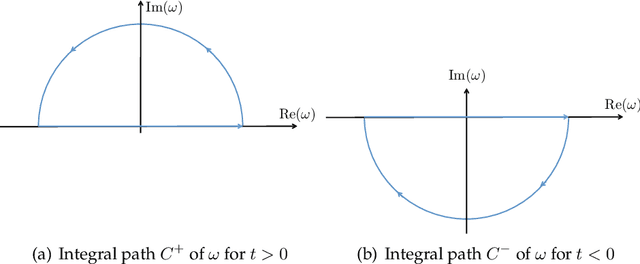

Quantum learning algorithms imply circuit lower bounds

Dec 03, 2020

Abstract:We establish the first general connection between the design of quantum algorithms and circuit lower bounds. Specifically, let $\mathfrak{C}$ be a class of polynomial-size concepts, and suppose that $\mathfrak{C}$ can be PAC-learned with membership queries under the uniform distribution with error $1/2 - \gamma$ by a time $T$ quantum algorithm. We prove that if $\gamma^2 \cdot T \ll 2^n/n$, then $\mathsf{BQE} \nsubseteq \mathfrak{C}$, where $\mathsf{BQE} = \mathsf{BQTIME}[2^{O(n)}]$ is an exponential-time analogue of $\mathsf{BQP}$. This result is optimal in both $\gamma$ and $T$, since it is not hard to learn any class $\mathfrak{C}$ of functions in (classical) time $T = 2^n$ (with no error), or in quantum time $T = \mathsf{poly}(n)$ with error at most $1/2 - \Omega(2^{-n/2})$ via Fourier sampling. In other words, even a marginal improvement on these generic learning algorithms would lead to major consequences in complexity theory. Our proof builds on several works in learning theory, pseudorandomness, and computational complexity, and crucially, on a connection between non-trivial classical learning algorithms and circuit lower bounds established by Oliveira and Santhanam (CCC 2017). Extending their approach to quantum learning algorithms turns out to create significant challenges. To achieve that, we show among other results how pseudorandom generators imply learning-to-lower-bound connections in a generic fashion, construct the first conditional pseudorandom generator secure against uniform quantum computations, and extend the local list-decoding algorithm of Impagliazzo, Jaiswal, Kabanets and Wigderson (SICOMP 2010) to quantum circuits via a delicate analysis. We believe that these contributions are of independent interest and might find other applications.
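
The abstract above refers to Fourier sampling. As a reminder of what that primitive samples from: applying Hadamard gates to the phase state of $f:\{0,1\}^n\rightarrow\{0,1\}$ and measuring yields a string $s$ with probability $\hat{f}(s)^2$, where $\hat{f}(s) = 2^{-n}\sum_x (-1)^{f(x)+s\cdot x}$. The sketch below computes that output distribution classically by brute force, in time exponential in $n$. The function names and the majority example are hypothetical and serve only as illustration; this is not the paper's construction.

```python
from itertools import product

def fourier_coefficient(f, s, n):
    """hat f(s) = 2^{-n} * sum_x (-1)^{f(x) + s.x} for f: {0,1}^n -> {0,1}."""
    total = 0
    for x in product((0, 1), repeat=n):
        dot = sum(si * xi for si, xi in zip(s, x)) % 2
        total += (-1) ** ((f(x) + dot) % 2)
    return total / 2 ** n

def fourier_sampling_distribution(f, n):
    """Map each s in {0,1}^n to hat f(s)^2, the probability that Fourier sampling returns s."""
    return {s: fourier_coefficient(f, s, n) ** 2 for s in product((0, 1), repeat=n)}

n = 3
majority = lambda x: int(sum(x) >= 2)    # hypothetical example concept
dist = fourier_sampling_distribution(majority, n)
total_probability = sum(dist.values())   # Parseval: the squared coefficients sum to 1
```

For 3-bit majority the weight sits on the three single-bit parities and the full parity, each observed with probability $1/4$.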

A rigorous and robust quantum speed-up in supervised machine learning

Oct 05, 2020

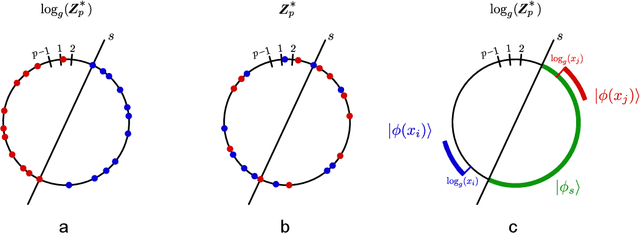

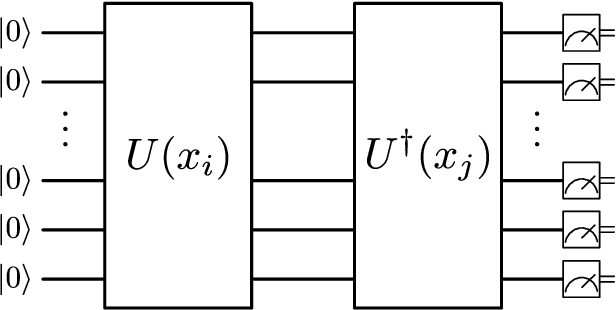

Abstract:Over the past few years several quantum machine learning algorithms were proposed that promise quantum speed-ups over their classical counterparts. Most of these learning algorithms assume quantum access to data, and it is unclear if quantum speed-ups still exist without making these strong assumptions. In this paper, we establish a rigorous quantum speed-up for supervised classification using a quantum learning algorithm that only requires classical access to data. Our quantum classifier is a conventional support vector machine that uses a fault-tolerant quantum computer to estimate a kernel function. Data samples are mapped to a quantum feature space and the kernel entries can be estimated as the transition amplitude of a quantum circuit. We construct a family of datasets and show that no classical learner can classify the data inverse-polynomially better than random guessing, assuming the widely-believed hardness of the discrete logarithm problem. Meanwhile, the quantum classifier achieves high accuracy and is robust against additive errors in the kernel entries that arise from finite sampling statistics.

Sample-efficient learning of quantum many-body systems

Apr 15, 2020

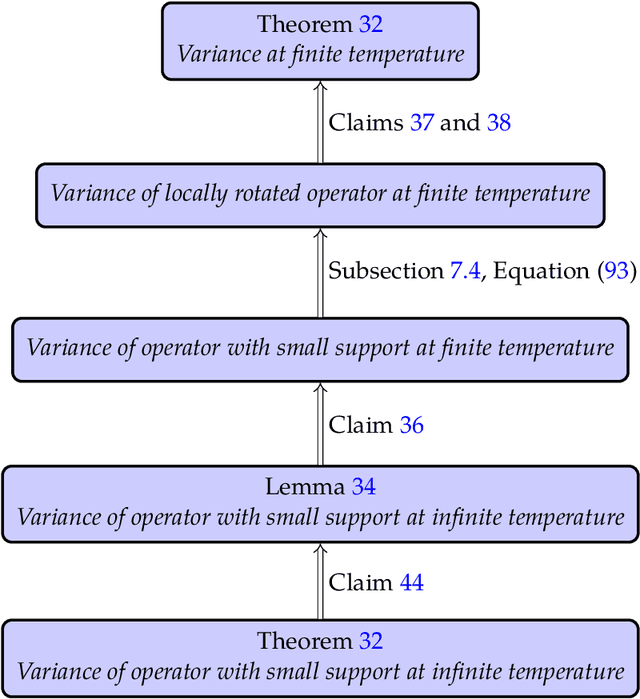

Abstract:We study the problem of learning the Hamiltonian of a quantum many-body system given samples from its Gibbs (thermal) state. The classical analog of this problem, known as learning graphical models or Boltzmann machines, is a well-studied question in machine learning and statistics. In this work, we give the first sample-efficient algorithm for the quantum Hamiltonian learning problem. In particular, we prove that polynomially many samples in the number of particles (qudits) are necessary and sufficient for learning the parameters of a spatially local Hamiltonian in $\ell_2$-norm. Our main contribution is in establishing the strong convexity of the log-partition function of quantum many-body systems, which along with the maximum entropy estimation yields our sample-efficient algorithm. Classically, the strong convexity for partition functions follows from the Markov property of Gibbs distributions. This is, however, known to be violated in its exact form in the quantum case. We introduce several new ideas to obtain an unconditional result that avoids relying on the Markov property of quantum systems, at the cost of a slightly weaker bound. In particular, we prove a lower bound on the variance of quasi-local operators with respect to the Gibbs state, which might be of independent interest. Our work paves the way toward a more rigorous application of machine learning techniques to quantum many-body problems.

Quantum statistical query learning

Feb 19, 2020

Abstract:We propose a learning model called the quantum statistical query (QSQ) model, which extends the SQ learning model introduced by Kearns to the quantum setting. Our model can also be seen as a restriction of the quantum PAC learning model: here, the learner does not have direct access to quantum examples, but can only obtain estimates of measurement statistics on them. Theoretically, this model provides a simple yet expressive setting to explore the power of quantum examples in machine learning. From a practical perspective, since simpler operations are required, learning algorithms in the QSQ model are more feasible for implementation on near-term quantum devices. We prove a number of results about the QSQ learning model. We first show that parity functions, (log n)-juntas and polynomial-sized DNF formulas are efficiently learnable in the QSQ model, in contrast to the classical setting where these problems are provably hard. This implies that many of the advantages of quantum PAC learning can be realized even in the more restricted quantum SQ learning model. It is well-known that weak statistical query dimension, denoted by WSQDIM(C), characterizes the complexity of learning a concept class C in the classical SQ model. We show that log(WSQDIM(C)) is a lower bound on the complexity of QSQ learning, and furthermore it is tight for certain concept classes C. Additionally, we show that this quantity provides strong lower bounds for the small-bias quantum communication model under product distributions. Finally, we introduce the notion of private quantum PAC learning, in which a quantum PAC learner is required to be differentially private. We show that learnability in the QSQ model implies learnability in the quantum private PAC model. Additionally, we show that in the private PAC learning setting, the classical and quantum sample complexities are equal, up to constant factors.

Quantum Boosting

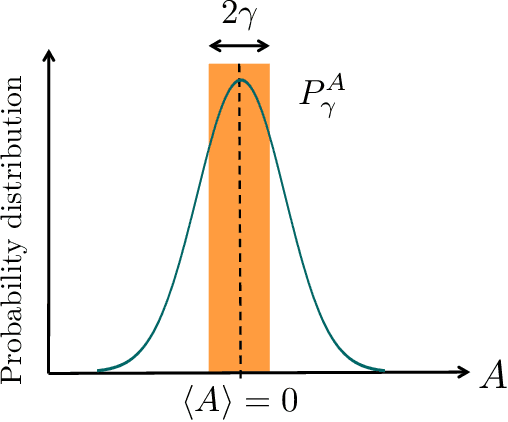

Feb 12, 2020

Abstract:Suppose we have a weak learning algorithm $\mathcal{A}$ for a Boolean-valued problem: $\mathcal{A}$ produces hypotheses whose bias $\gamma$ is small, only slightly better than random guessing (this could, for instance, be due to implementing $\mathcal{A}$ on a noisy device). Can we boost the performance of $\mathcal{A}$ so that $\mathcal{A}$'s output is correct on $2/3$ of the inputs? Boosting is a technique that converts a weak and inaccurate machine learning algorithm into a strong accurate learning algorithm. The AdaBoost algorithm by Freund and Schapire (for which they were awarded the Gödel Prize in 2003) is one of the widely used boosting algorithms, with many applications in theory and practice. Given a $\gamma$-weak learner for a Boolean concept class $C$ that takes time $R(C)$, the time complexity of AdaBoost scales as $VC(C)\cdot poly(R(C), 1/\gamma)$, where $VC(C)$ is the $VC$-dimension of $C$. In this paper, we show how quantum techniques can improve the time complexity of classical AdaBoost. To this end, given a $\gamma$-weak quantum learner for a Boolean concept class $C$ that takes time $Q(C)$, we introduce a quantum boosting algorithm whose complexity scales as $\sqrt{VC(C)}\cdot poly(Q(C),1/\gamma)$, thereby achieving a quadratic quantum improvement over classical AdaBoost in terms of $VC(C)$.
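
For readers unfamiliar with boosting, here is a minimal sketch of classical AdaBoost, the baseline the abstract compares against. It uses one-dimensional threshold classifiers as the weak learner. The function names, the toy dataset and the choice of weak learner are all assumptions made for illustration; this is the textbook classical procedure, not the quantum boosting algorithm of the paper.

```python
import math

def best_stump(xs, ys, weights):
    """Weak learner: return (weighted_error, threshold, sign) of the best 1-D threshold rule."""
    best = None
    for threshold in sorted(set(xs)):
        for sign in (1, -1):
            preds = [sign if x >= threshold else -sign for x in xs]
            err = sum(w for w, p, y in zip(weights, preds, ys) if p != y)
            if best is None or err < best[0]:
                best = (err, threshold, sign)
    return best

def adaboost(xs, ys, rounds):
    """Classical AdaBoost with labels in {-1, +1}; returns a list of (alpha, threshold, sign)."""
    m = len(xs)
    weights = [1.0 / m] * m
    ensemble = []
    for _ in range(rounds):
        err, threshold, sign = best_stump(xs, ys, weights)
        err = min(max(err, 1e-12), 1 - 1e-12)   # guard against log(0)
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, threshold, sign))
        # Up-weight misclassified points, down-weight correct ones, then renormalize.
        preds = [sign if x >= threshold else -sign for x in xs]
        weights = [w * math.exp(-alpha * y * p) for w, y, p in zip(weights, ys, preds)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def predict(ensemble, x):
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(a * (s if x >= t else -s) for a, t, s in ensemble)
    return 1 if score >= 0 else -1

def accuracy(ensemble, xs, ys):
    return sum(predict(ensemble, x) == y for x, y in zip(xs, ys)) / len(xs)

# Toy data: no single threshold rule labels every point correctly.
xs = [0, 1, 2, 3, 4, 5]
ys = [1, 1, -1, -1, 1, 1]

weak_accuracy = accuracy(adaboost(xs, ys, rounds=1), xs, ys)
model = adaboost(xs, ys, rounds=3)
boosted_accuracy = accuracy(model, xs, ys)
```

On this toy dataset a single stump gets four of the six points right, while the weighted vote of three stumps fits all six.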
