Sotirios Tsaftaris

Surgical Task Automation Using Actor-Critic Frameworks and Self-Supervised Imitation Learning

Sep 04, 2024

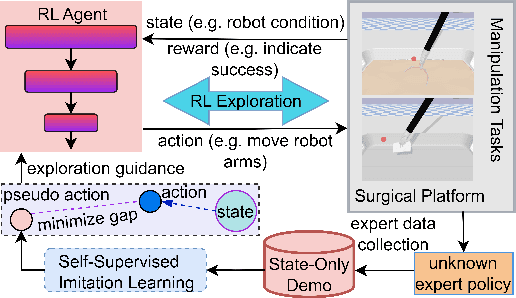

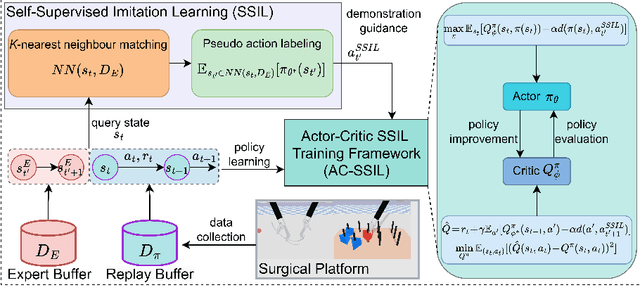

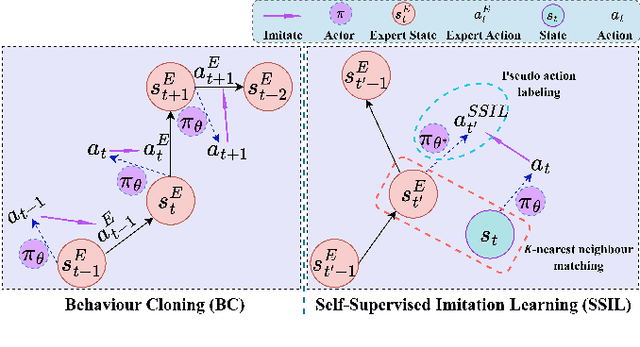

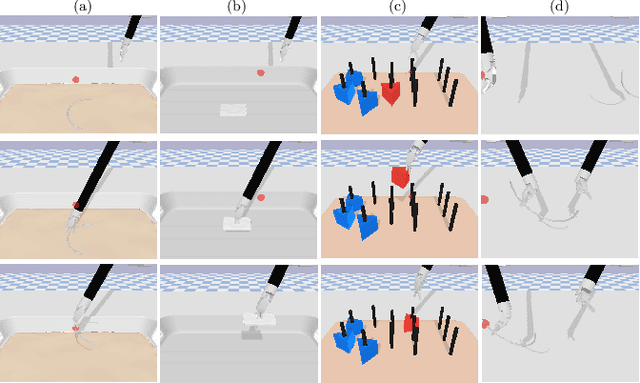

Abstract: Surgical robot task automation has recently attracted great attention due to its potential to benefit both surgeons and patients. Reinforcement learning (RL) based approaches have demonstrated promising ability to provide solutions to automated surgical manipulations on various tasks. To address the exploration challenge, expert demonstrations can be utilized to enhance the learning efficiency via imitation learning (IL) approaches. However, the success of such methods normally relies on both state and action labels. Unfortunately, action labels can be hard to capture, or their manual annotation is prohibitively expensive owing to the requirement for expert knowledge. It therefore remains an appealing and open problem to leverage expert demonstrations composed of pure states in RL. In this work, we present an actor-critic RL framework, termed AC-SSIL, to overcome this challenge of learning with state-only demonstrations collected by following an unknown expert policy. It adopts a self-supervised IL method, dubbed SSIL, to effectively incorporate demonstrated states into RL paradigms by retrieving from the demonstrations the nearest neighbours of the query state and utilizing the bootstrapping of actor networks. We showcase through experiments on an open-source surgical simulation platform that our method delivers remarkable improvements over the RL baseline and exhibits comparable performance against action-based IL methods, which implies the efficacy and potential of our method for expert demonstration-guided learning scenarios.
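
The nearest-neighbour retrieval step mentioned in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `nearest_demo_states`, the Euclidean distance, and the toy two-dimensional states are all assumptions made for illustration, and the bootstrapping of the actor network is not shown.

```python
import math


def nearest_demo_states(query, demo_states, k=1):
    """Return the k demonstrated states closest to `query`.

    Hypothetical helper: Euclidean distance is an assumption here;
    the abstract does not say which metric is used.
    """
    ranked = sorted(demo_states, key=lambda s: math.dist(query, s))
    return ranked[:k]


# Toy state-only demonstrations (no action labels are stored).
demos = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]

# Retrieve the two demonstrated states nearest to the agent's current state.
neighbours = nearest_demo_states((0.9, 1.2), demos, k=2)
print(neighbours)
```

In a state-only setting, retrieved neighbours like these could serve as targets that guide the policy toward demonstrated regions of the state space, without requiring the expert's actions.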

Debiasing Counterfactuals In the Presence of Spurious Correlations

Aug 21, 2023

Abstract: Deep learning models can perform well in complex medical imaging classification tasks, even when basing their conclusions on spurious correlations (i.e. confounders), should they be prevalent in the training dataset, rather than on the causal image markers of interest. This would thereby limit their ability to generalize across the population. Explainability based on counterfactual image generation can be used to expose the confounders but does not provide a strategy to mitigate the bias. In this work, we introduce the first end-to-end training framework that integrates both (i) popular debiasing classifiers (e.g. distributionally robust optimization (DRO)) to avoid latching onto the spurious correlations and (ii) counterfactual image generation to unveil generalizable imaging markers of relevance to the task. Additionally, we propose a novel metric, Spurious Correlation Latching Score (SCLS), to quantify the extent of the classifier's reliance on the spurious correlation as exposed by the counterfactual images. Through comprehensive experiments on two public datasets (with simulated and real visual artifacts), we demonstrate that the debiasing method: (i) learns generalizable markers across the population, and (ii) successfully ignores spurious correlations and focuses on the underlying disease pathology.

Counterfactual Image Synthesis for Discovery of Personalized Predictive Image Markers

Aug 03, 2022

Abstract: The discovery of patient-specific imaging markers that are predictive of future disease outcomes can help us better understand individual-level heterogeneity of disease evolution. In fact, deep learning models that can provide data-driven personalized markers are much more likely to be adopted in medical practice. In this work, we demonstrate that data-driven biomarker discovery can be achieved through a counterfactual synthesis process. We show how a deep conditional generative model can be used to perturb local imaging features in baseline images that are pertinent to subject-specific future disease evolution and result in a counterfactual image that is expected to have a different future outcome. Candidate biomarkers, therefore, result from examining the set of features that are perturbed in this process. Through several experiments on a large-scale, multi-scanner, multi-center multiple sclerosis (MS) clinical trial magnetic resonance imaging (MRI) dataset of relapsing-remitting (RRMS) patients, we demonstrate that our model produces counterfactuals with changes in imaging features that reflect established clinical markers predictive of future MRI lesional activity at the population level. Additional qualitative results illustrate that our model has the potential to discover novel and subject-specific predictive markers of future activity.

Cohort Bias Adaptation in Aggregated Datasets for Lesion Segmentation

Aug 02, 2021

Abstract: Many automatic machine learning models developed for focal pathology (e.g. lesions, tumours) detection and segmentation perform well, but do not generalize as well to new patient cohorts, impeding their widespread adoption into real clinical contexts. One strategy to create a more diverse, generalizable training set is to naively pool datasets from different cohorts. Surprisingly, training on this *big data* does not necessarily increase, and may even reduce, overall performance and model generalizability, due to the existence of cohort biases that affect label distributions. In this paper, we propose a generalized affine conditioning framework to learn and account for cohort biases across multi-source datasets, which we call Source-Conditioned Instance Normalization (SCIN). Through extensive experimentation on three different, large-scale, multi-scanner, multi-centre Multiple Sclerosis (MS) clinical trial MRI datasets, we show that our cohort bias adaptation method (1) improves performance of the network on pooled datasets relative to naively pooling datasets and (2) can quickly adapt to a new cohort by fine-tuning the instance normalization parameters, thus learning the new cohort bias with only 10 labelled samples.
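
The idea of conditioning instance normalization on the data source can be sketched as follows. This is an illustrative assumption rather than the published SCIN code: the names `instance_norm` and `source_conditioned_norm`, the cohort labels, and the scalar per-source scale and shift are hypothetical, and a real segmentation network would apply such parameters per channel over feature maps.

```python
import math


def instance_norm(x, eps=1e-5):
    """Normalize one sample's features to zero mean and unit variance."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def source_conditioned_norm(x, source, params):
    """Apply the affine parameters (scale, shift) belonging to `source`.

    `params` maps a hypothetical cohort label to its own (gamma, beta),
    so each cohort gets its own affine transform after normalization.
    """
    gamma, beta = params[source]
    return [gamma * v + beta for v in instance_norm(x)]


# Assumed per-cohort parameters; in training these would be learned.
params = {"cohort_a": (1.0, 0.0), "cohort_b": (2.0, 0.5)}
features = [1.0, 2.0, 3.0]

out_a = source_conditioned_norm(features, "cohort_a", params)
out_b = source_conditioned_norm(features, "cohort_b", params)
print(out_a, out_b)
```

Under this sketch, adapting to a new cohort would amount to adding one new `(gamma, beta)` entry and fitting only that entry, which matches the abstract's description of fine-tuning the instance normalization parameters while the rest of the network is left unchanged.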
