Sonia Ben Mokhtar

On the Normalization of Confusion Matrices: Methods and Geometric Interpretations

Sep 05, 2025

Abstract:The confusion matrix is a standard tool for evaluating classifiers by providing insights into class-level errors. In heterogeneous settings, its values are shaped by two main factors: class similarity -- how easily the model confuses two classes -- and distribution bias, arising from skewed distributions in the training and test sets. However, confusion matrix values reflect a mix of both factors, making it difficult to disentangle their individual contributions. To address this, we introduce bistochastic normalization using Iterative Proportional Fitting, a generalization of row and column normalization. Unlike standard normalizations, this method recovers the underlying structure of class similarity. By disentangling error sources, it enables more accurate diagnosis of model behavior and supports more targeted improvements. We also show a correspondence between confusion matrix normalizations and the model's internal class representations. Both standard and bistochastic normalizations can be interpreted geometrically in this space, offering a deeper understanding of what normalization reveals about a classifier.
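The bistochastic normalization mentioned in this abstract can be illustrated with a minimal sketch of Iterative Proportional Fitting: rows and columns are rescaled in turn until both sum to 1. This is not the authors' implementation. The function name `ipf_bistochastic`, its parameters, and the example matrix are hypothetical, and the sketch assumes a square matrix with strictly positive entries (a real confusion matrix with zeros would likely need smoothing first).

```python
def ipf_bistochastic(matrix, iterations=1000, tol=1e-9):
    """Alternate row and column normalization until the matrix is
    (approximately) bistochastic. Assumes a square, strictly positive input."""
    m = [[float(v) for v in row] for row in matrix]
    n = len(m)
    for _ in range(iterations):
        # Row step: make every row sum to 1.
        for row in m:
            s = sum(row)
            for j in range(n):
                row[j] /= s
        # Column step: make every column sum to 1.
        col_sums = [sum(m[i][j] for i in range(n)) for j in range(n)]
        for i in range(n):
            for j in range(n):
                m[i][j] /= col_sums[j]
        # Columns are exact after the column step; stop once rows agree too.
        if max(abs(sum(row) - 1.0) for row in m) < tol:
            break
    return m


# Hypothetical 3-class confusion matrix with a heavily skewed class distribution.
confusion = [[90, 10, 5], [20, 30, 10], [2, 3, 5]]
normalized = ipf_bistochastic(confusion)
```

Plain row normalization corresponds to running only the row step once, and column normalization to running only the column step once.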

A Weighted Loss Approach to Robust Federated Learning under Data Heterogeneity

Jun 12, 2025

Abstract:Federated learning (FL) is a machine learning paradigm that enables multiple data holders to collaboratively train a machine learning model without sharing their training data with external parties. In this paradigm, workers locally update a model and share with a central server their updated gradients (or model parameters). While FL seems appealing from a privacy perspective, it opens a number of threats from a security perspective as (Byzantine) participants can contribute poisonous gradients (or model parameters) harming model convergence. Byzantine-resilient FL addresses this issue by ensuring that the training proceeds as if Byzantine participants were absent. Towards this purpose, common strategies ignore outlier gradients during model aggregation, assuming that Byzantine gradients deviate more from honest gradients than honest gradients do from each other. However, in heterogeneous settings, honest gradients may differ significantly, making it difficult to distinguish honest outliers from Byzantine ones. In this paper, we introduce the Worker Label Alignement Loss (WoLA), a weighted loss that aligns honest worker gradients despite data heterogeneity, which facilitates the identification of Byzantines' gradients. This approach significantly outperforms state-of-the-art methods in heterogeneous settings. We provide both theoretical insights and empirical evidence of its effectiveness.
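The "ignore outlier gradients during aggregation" strategy that this abstract describes as the common baseline can be sketched with a coordinate-wise trimmed mean. This is a generic robust aggregator chosen for illustration, not WoLA and not necessarily one of the methods the paper evaluates. The function name `trimmed_mean` and the example gradients are hypothetical.

```python
def trimmed_mean(gradients, f):
    """Coordinate-wise trimmed mean: for each coordinate, drop the f smallest
    and f largest values across workers, then average what remains."""
    n = len(gradients)
    if n <= 2 * f:
        raise ValueError("need more than 2*f workers to trim f from each side")
    dim = len(gradients[0])
    aggregated = []
    for k in range(dim):
        values = sorted(g[k] for g in gradients)
        kept = values[f:n - f]
        aggregated.append(sum(kept) / len(kept))
    return aggregated


# Three honest workers with similar gradients and one hypothetical Byzantine worker.
worker_gradients = [[1.0, 2.0], [1.2, 1.8], [0.8, 2.2], [100.0, -100.0]]
robust_update = trimmed_mean(worker_gradients, f=1)
```

The sketch also shows the limitation the abstract points out: trimming removes the extreme values whether they come from a Byzantine worker or from an honest worker whose data distribution differs from the others.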

Dropout-Robust Mechanisms for Differentially Private and Fully Decentralized Mean Estimation

Jun 04, 2025

Abstract:Achieving differentially private computations in decentralized settings poses significant challenges, particularly regarding accuracy, communication cost, and robustness against information leakage. While cryptographic solutions offer promise, they often suffer from high communication overhead or require centralization in the presence of network failures. Conversely, existing fully decentralized approaches typically rely on relaxed adversarial models or pairwise noise cancellation, the latter suffering from substantial accuracy degradation if parties unexpectedly disconnect. In this work, we propose IncA, a new protocol for fully decentralized mean estimation, a widely used primitive in data-intensive processing. Our protocol, which enforces differential privacy, requires no central orchestration and employs low-variance correlated noise, achieved by incrementally injecting sensitive information into the computation. First, we theoretically demonstrate that, when no parties permanently disconnect, our protocol achieves accuracy comparable to that of a centralized setting, which is already an improvement over most existing decentralized differentially private techniques. Second, we empirically show that our use of low-variance correlated noise significantly mitigates the accuracy loss experienced by existing techniques in the presence of dropouts.
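The dropout problem of pairwise noise cancellation that this abstract refers to can be illustrated with a toy sketch. It shows only the baseline idea (each pair of parties shares a noise term that one adds and the other subtracts), not the IncA protocol. It omits the independent noise a real differentially private scheme would also add, and the names `masked_values` and `mean_of` and the chosen noise scale are hypothetical.

```python
import random


def masked_values(values, noise_std, seed=0):
    """Each pair (i, j) draws a shared noise term; party i adds it and
    party j subtracts it, so the terms cancel in the full sum."""
    rng = random.Random(seed)
    masked = list(values)
    n = len(values)
    for i in range(n):
        for j in range(i + 1, n):
            r = rng.gauss(0.0, noise_std)
            masked[i] += r
            masked[j] -= r
    return masked


def mean_of(reports):
    return sum(reports) / len(reports)


private_inputs = [1.0, 2.0, 3.0, 4.0]
reports = masked_values(private_inputs, noise_std=10.0)

full_mean = mean_of(reports)          # noise cancels: close to 2.5
dropout_mean = mean_of(reports[:-1])  # last party dropped: its pairwise noise remains
```

When every party reports, the estimate matches the true mean up to floating-point error. When the last party drops out, the noise terms it shared with the others no longer cancel, and the estimate degrades in proportion to the noise scale.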

GRANITE : a Byzantine-Resilient Dynamic Gossip Learning Framework

Apr 24, 2025

Abstract:Gossip Learning (GL) is a decentralized learning paradigm where users iteratively exchange and aggregate models with a small set of neighboring peers. Recent GL approaches rely on dynamic communication graphs built and maintained using Random Peer Sampling (RPS) protocols. Thanks to graph dynamics, GL can achieve fast convergence even over extremely sparse topologies. However, the robustness of GL over dynamic graphs to Byzantine (model poisoning) attacks remains unaddressed, especially when Byzantine nodes attack the RPS protocol to scale up model poisoning. We address this issue by introducing GRANITE, a framework for robust learning over sparse, dynamic graphs in the presence of a fraction of Byzantine nodes. GRANITE relies on two key components: (i) a History-aware Byzantine-resilient Peer Sampling protocol (HaPS), which tracks previously encountered identifiers to reduce adversarial influence over time, and (ii) an Adaptive Probabilistic Threshold (APT), which leverages an estimate of Byzantine presence to set aggregation thresholds with formal guarantees. Empirical results confirm that GRANITE maintains convergence with up to 30% Byzantine nodes, improves learning speed via adaptive filtering of poisoned models, and obtains these results in up to 9 times sparser graphs than dictated by current theory.

Secure Federated Graph-Filtering for Recommender Systems

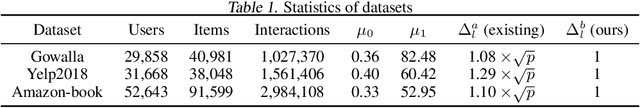

Jan 28, 2025

Abstract:Recommender systems often rely on graph-based filters, such as normalized item-item adjacency matrices and low-pass filters. While effective, the centralized computation of these components raises concerns about privacy, security, and the ethical use of user data. This work proposes two decentralized frameworks for securely computing these critical graph components without centralizing sensitive information. The first approach leverages lightweight Multi-Party Computation and distributed singular vector computations to privately compute key graph filters. The second extends this framework by incorporating low-rank approximations, enabling a trade-off between communication efficiency and predictive performance. Empirical evaluations on benchmark datasets demonstrate that the proposed methods achieve comparable accuracy to centralized state-of-the-art systems while ensuring data confidentiality and maintaining low communication costs. Our results highlight the potential for privacy-preserving decentralized architectures to bridge the gap between utility and user data protection in modern recommender systems.

Scrutinizing the Vulnerability of Decentralized Learning to Membership Inference Attacks

Dec 17, 2024

Abstract:The primary promise of decentralized learning is to allow users to engage in the training of machine learning models in a collaborative manner while keeping their data on their premises and without relying on any central entity. However, this paradigm necessitates the exchange of model parameters or gradients between peers. Such exchanges can be exploited to infer sensitive information about training data, which is achieved through privacy attacks (e.g., Membership Inference Attacks -- MIA). In order to devise effective defense mechanisms, it is important to understand the factors that increase/reduce the vulnerability of a given decentralized learning architecture to MIA. In this study, we extensively explore the vulnerability to MIA of various decentralized learning architectures by varying the graph structure (e.g., number of neighbors), the graph dynamics, and the aggregation strategy, across diverse datasets and data distributions. Our key finding, which to the best of our knowledge we are the first to report, is that the vulnerability to MIA is heavily correlated with (i) the local model mixing strategy performed by each node upon reception of models from neighboring nodes and (ii) the global mixing properties of the communication graph. We illustrate these results experimentally using four datasets and by theoretically analyzing the mixing properties of various decentralized architectures. Our paper draws a set of lessons learned for devising decentralized learning systems that reduce by design the vulnerability to MIA.

Differentially private and decentralized randomized power method

Nov 04, 2024

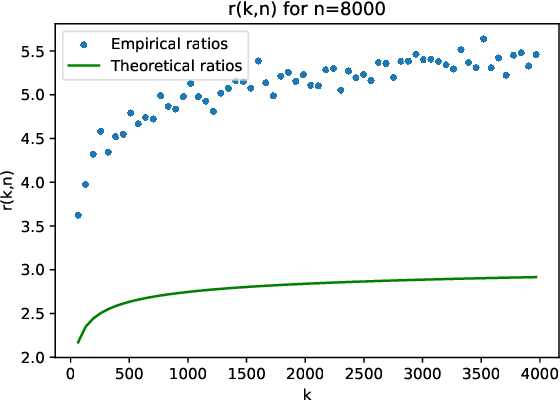

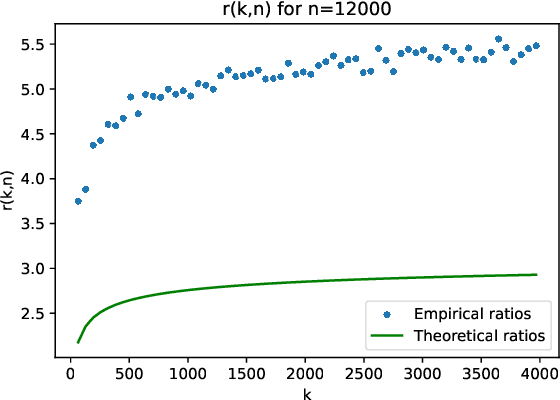

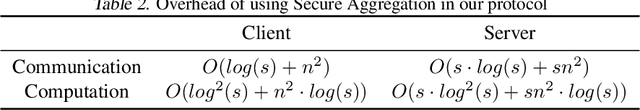

Abstract:The randomized power method has gained significant interest due to its simplicity and efficient handling of large-scale spectral analysis and recommendation tasks. As modern datasets contain sensitive private information, we need to give formal guarantees on the possible privacy leaks caused by this method. This paper focuses on enhancing privacy-preserving variants of the method. We propose a strategy to reduce the variance of the noise introduced to achieve Differential Privacy (DP). We also adapt the method to a decentralized framework with a low computational and communication overhead, while preserving the accuracy. We leverage Secure Aggregation (a form of Multi-Party Computation) to allow the algorithm to perform computations using data distributed among multiple users or devices, without revealing individual data. We show that it is possible to use a noise scale in the decentralized setting that is similar to the one in the centralized setting. We improve upon existing convergence bounds for both the centralized and decentralized versions. The proposed method is especially relevant for decentralized applications such as distributed recommender systems, where privacy concerns are paramount.
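As a rough illustration of the kind of algorithm this abstract discusses, the following sketch runs a power iteration on a single vector and perturbs each matrix-vector product with Gaussian noise. It is a simplification made for this listing, not the paper's method: the randomized power method generally tracks a block of several vectors with an orthonormalization step, and the `noise_std` used here is a hypothetical parameter that is not calibrated to any differential privacy guarantee. The function name `noisy_power_iteration` is also hypothetical.

```python
import math
import random


def noisy_power_iteration(a, iterations=100, noise_std=0.0, seed=0):
    """Estimate the dominant eigenvector of a square matrix `a`, adding
    Gaussian noise to every matrix-vector product (noise_std=0 disables it)."""
    rng = random.Random(seed)
    n = len(a)
    # Random unit-norm starting vector.
    v = [rng.gauss(0.0, 1.0) for _ in range(n)]
    norm = math.sqrt(sum(x * x for x in v))
    v = [x / norm for x in v]
    for _ in range(iterations):
        # Noisy product: w = a @ v + noise.
        w = [
            sum(a[i][j] * v[j] for j in range(n)) + rng.gauss(0.0, noise_std)
            for i in range(n)
        ]
        # Renormalize so the iterate stays on the unit sphere.
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v


# Hypothetical symmetric matrix; its dominant eigenvector is along the first axis.
matrix = [[2.0, 0.0], [0.0, 1.0]]
exact = noisy_power_iteration(matrix)
perturbed = noisy_power_iteration(matrix, noise_std=0.05)
```

Comparing `exact` and `perturbed` gives a feel for why the variance of the injected noise matters for accuracy, which is the quantity the abstract says the proposed strategy reduces.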

Community Detection Attack against Collaborative Learning-based Recommender Systems

Jun 15, 2023

Abstract:Collaborative learning-based recommender systems emerged following the success of collaborative learning techniques such as Federated Learning (FL) and Gossip Learning (GL). In these systems, users participate in the training of a recommender system while keeping their history of consumed items on their devices. While these solutions seemed appealing for preserving the privacy of the participants at first glance, recent studies have shown that collaborative learning can be vulnerable to a variety of privacy attacks. In this paper we propose a novel privacy attack called Community Detection Attack (CDA), which allows an adversary to discover the members of a community based on a set of items of her choice (e.g., discovering users interested in LGBT content). Through experiments on three real recommendation datasets and by using two state-of-the-art recommendation models, we assess the sensitivity of an FL-based recommender system as well as two flavors of Gossip Learning-based recommender systems to CDA. Results show that on all models and all datasets, the FL setting is more vulnerable to CDA than Gossip settings. We further evaluated two off-the-shelf mitigation strategies, namely differential privacy (DP) and a share less policy, which consists in sharing a subset of model parameters. Results show a better privacy-utility trade-off for the share less policy compared to DP, especially in the Gossip setting.

Survey of Federated Learning Models for Spatial-Temporal Mobility Applications

May 10, 2023

Abstract:Federated learning involves training statistical models over edge devices such as mobile phones such that the training data is kept local. Federated Learning (FL) can serve as an ideal candidate for training spatial-temporal models that rely on heterogeneous and potentially massive numbers of participants while preserving the privacy of highly sensitive location data. However, there are unique challenges involved with transitioning existing spatial-temporal models to decentralized learning. In this survey paper, we review the existing literature that has proposed FL-based models for predicting human mobility, traffic prediction, community detection, location-based recommendation systems, and other spatial-temporal tasks. We describe the metrics and datasets these works have been using and create a baseline of these approaches in comparison to the centralized settings. Finally, we discuss the challenges of applying spatial-temporal models in a decentralized setting and, by highlighting the gaps in the literature, we provide a road map and opportunities for the research community.

Shielding Federated Learning Systems against Inference Attacks with ARM TrustZone

Aug 19, 2022

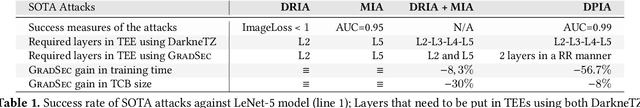

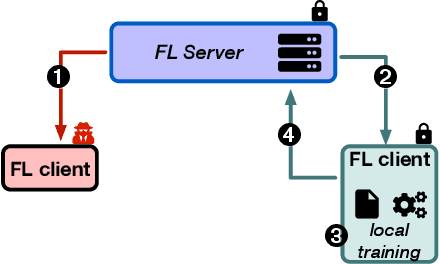

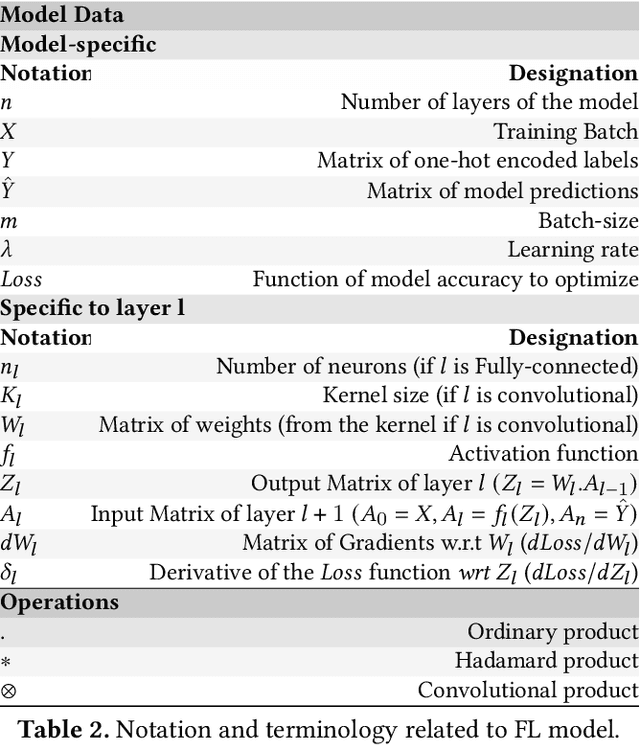

Abstract:Federated Learning (FL) opens new perspectives for training machine learning models while keeping personal data on the users' premises. Specifically, in FL, models are trained on the users' devices and only model updates (i.e., gradients) are sent to a central server for aggregation purposes. However, the long list of inference attacks that leak private data from gradients, published in recent years, has emphasized the need to devise effective protection mechanisms to incentivize the adoption of FL at scale. While there exist solutions to mitigate these attacks on the server side, little has been done to protect users from attacks performed on the client side. In this context, the use of Trusted Execution Environments (TEEs) on the client side is among the most promising solutions. However, existing frameworks (e.g., DarkneTZ) require statically putting a large portion of the machine learning model into the TEE to effectively protect against complex attacks or a combination of attacks. We present GradSec, a solution that allows protecting in a TEE only sensitive layers of a machine learning model, either statically or dynamically, hence reducing both the TCB size and the overall training time by up to 30% and 56%, respectively, compared to state-of-the-art competitors.
