Valerio Schiavoni

On the Cost of Model-Serving Frameworks: An Experimental Evaluation

Nov 15, 2024

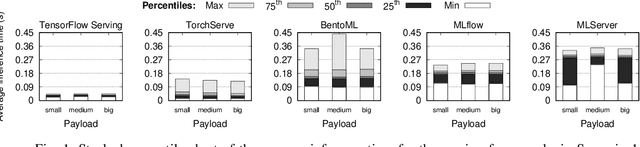

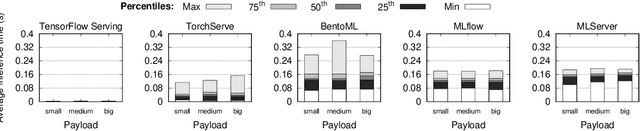

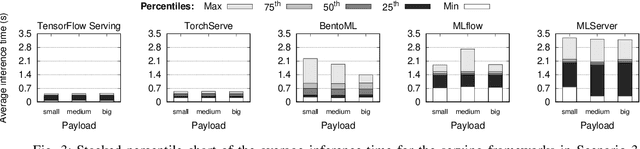

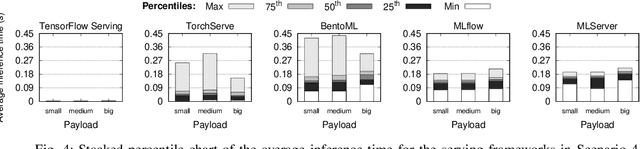

Abstract: In machine learning (ML), the inference phase is the process of applying pre-trained models to new, unseen data with the objective of making predictions. During the inference phase, end-users interact with ML services to gain insights, recommendations, or actions based on the input data. For this reason, serving strategies are now crucial for deploying and managing models in production environments effectively. These strategies ensure that models are available, scalable, reliable, and performant for real-world applications such as time series forecasting, image classification, and natural language processing. In this paper, we evaluate the performance of five widely used model-serving frameworks (TensorFlow Serving, TorchServe, MLServer, MLflow, and BentoML) under four different scenarios (malware detection, cryptocoin price forecasting, image classification, and sentiment analysis). We demonstrate that TensorFlow Serving outperforms all the other frameworks in serving deep learning (DL) models. Moreover, we show that DL-specific frameworks (TensorFlow Serving and TorchServe) display significantly lower latencies than the three general-purpose ML frameworks (BentoML, MLflow, and MLServer).
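As an illustration of the kind of latency comparison the abstract describes, the following is a minimal sketch of a measurement harness. It is not the paper's benchmark: `measure_latency` and `fake_predict` are hypothetical names, and the stand-in callable would have to be replaced by a real client call to the serving framework under test.

```python
import math
import statistics
import time


def measure_latency(predict, payloads, warmup=3):
    """Time each call to `predict` and summarize latencies in milliseconds.

    `predict` is any callable taking one payload; here it stands in for a
    request to a model server (an assumption, not the paper's setup).
    """
    if not payloads:
        raise ValueError("payloads must not be empty")

    # Warm-up calls are excluded from the statistics.
    for payload in payloads[:warmup]:
        predict(payload)

    samples = []
    for payload in payloads:
        start = time.perf_counter()
        predict(payload)
        samples.append((time.perf_counter() - start) * 1000.0)

    samples.sort()
    # Nearest-rank 99th percentile.
    p99_index = min(len(samples) - 1, math.ceil(0.99 * len(samples)) - 1)
    return {
        "mean_ms": statistics.fmean(samples),
        "median_ms": statistics.median(samples),
        "p99_ms": samples[p99_index],
    }


def fake_predict(features):
    """Hypothetical stand-in for a remote inference call."""
    return sum(features)


stats = measure_latency(fake_predict, [[1.0, 2.0, 3.0]] * 50)
print(stats)
```

Reporting a tail percentile next to the median is a common choice for serving workloads, since occasional slow requests are hidden by averages.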

Practical Forecasting of Cryptocoins Timeseries using Correlation Patterns

Sep 05, 2024

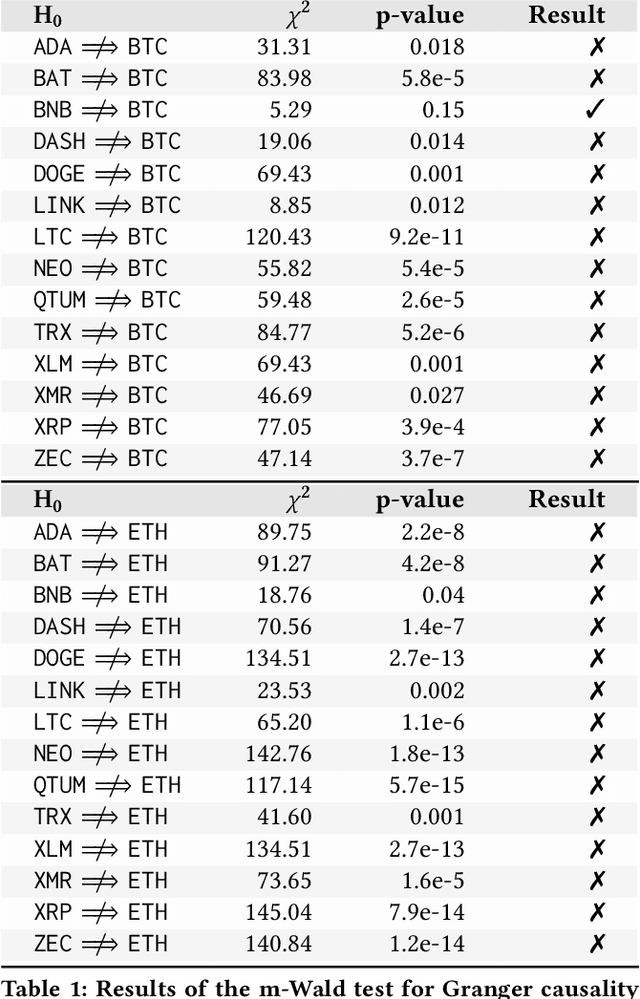

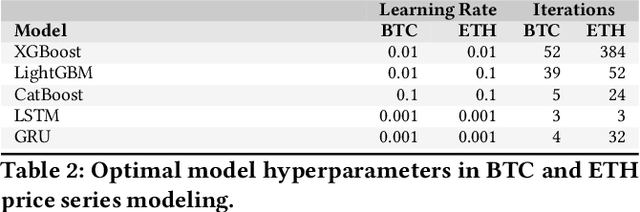

Abstract: Cryptocoins (e.g., Bitcoin, Ether, Litecoin) are tradable digital assets. Ownership of cryptocoins is registered on distributed ledgers (i.e., blockchains). Secure encryption techniques guarantee the security of the transactions (transfers of coins among owners) registered in the ledger. Cryptocoins are exchanged at specific trading prices. The extreme volatility of such trading prices across all sets of crypto-assets is undisputed. However, the relations between the trading prices of different cryptocoins remain largely unexplored. Major coin exchanges report trend correlations to advise on sales or purchases, yet these price correlations themselves remain largely unexplored. We shed some light on the trend correlations across a large variety of cryptocoins by investigating their coin/price correlation trends over the past two years. We study the causality between the trends, and exploit the derived correlations to understand the accuracy of state-of-the-art forecasting techniques for time series modeling (e.g., GBMs, LSTM and GRU) of correlated cryptocoins. Our evaluation shows (i) strong correlation patterns between the most traded coins (e.g., Bitcoin and Ether) and other types of cryptocurrencies, and (ii) that state-of-the-art time series forecasting algorithms can be used to forecast cryptocoin price trends. We released datasets and code to reproduce our analysis to the research community.
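To make the notion of price correlation concrete, here is a minimal sketch that computes the Pearson correlation between the log returns of two price series. The abstract does not state which correlation measure the authors use, so Pearson on log returns is an assumption; the price values are made up for illustration and the function names are hypothetical.

```python
import math


def log_returns(prices):
    """Convert a price series into period-over-period log returns."""
    return [math.log(later / earlier) for earlier, later in zip(prices, prices[1:])]


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Made-up closing prices for two hypothetical coins (not real market data).
coin_a = [100.0, 102.0, 101.0, 105.0, 107.0]
coin_b = [10.0, 10.4, 10.2, 10.9, 11.2]

rho = pearson(log_returns(coin_a), log_returns(coin_b))
print(f"correlation of log returns: {rho:.3f}")
```

Working on returns rather than raw prices avoids the spurious correlation that two trending series would otherwise show.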

Mitigating Adversarial Attacks in Federated Learning with Trusted Execution Environments

Sep 13, 2023

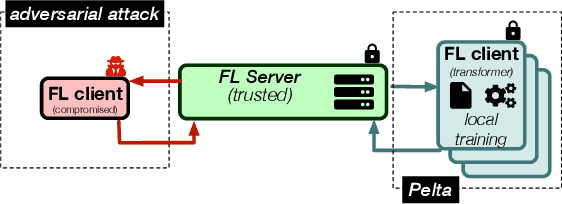

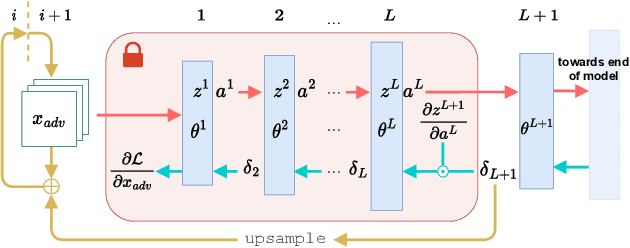

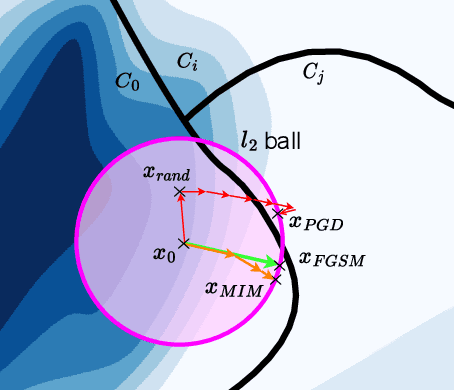

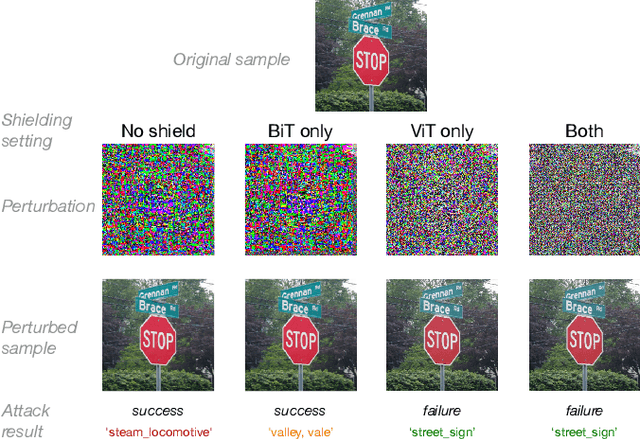

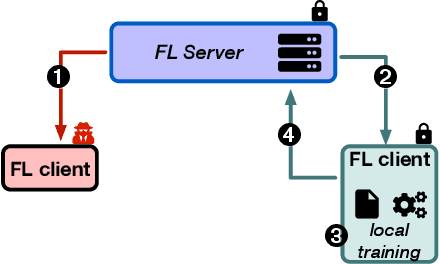

Abstract: The main premise of federated learning (FL) is that machine learning model updates are computed locally to preserve user data privacy. By design, this approach prevents user data from ever leaving the perimeter of their device. Once the updates are aggregated, the model is broadcast to all nodes in the federation. However, without proper defenses, compromised nodes can probe the model inside their local memory in search of adversarial examples, which can lead to dangerous real-world scenarios. For instance, in image-based applications, adversarial examples are images perturbed so slightly that the change is barely visible to the human eye, yet they are misclassified by the local model. These adversarial images are later presented to a victim node's counterpart model to replay the attack. Typical examples rely on dissemination strategies such as altered traffic signs (patch attacks) that autonomous vehicles no longer recognize, or seemingly unaltered samples that poison the local dataset of the FL scheme to undermine its robustness. Pelta is a novel shielding mechanism leveraging Trusted Execution Environments (TEEs) that reduces the ability of attackers to craft adversarial samples. Pelta masks inside the TEE the first part of the back-propagation chain rule, typically exploited by attackers to craft the malicious samples. We evaluate Pelta on accurate state-of-the-art models using three well-established datasets: CIFAR-10, CIFAR-100 and ImageNet. We show the effectiveness of Pelta in mitigating six white-box state-of-the-art adversarial attacks, including Projected Gradient Descent, Momentum Iterative Method, Auto Projected Gradient Descent, and the Carlini & Wagner attack. In particular, to the best of our knowledge, Pelta constitutes the first attempt at defending an ensemble model against the Self-Attention Gradient attack. Our code is available to the research community at https://github.com/queyrusi/Pelta.
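The sketch below illustrates why white-box attackers need the gradient of the loss with respect to the input, which is the quantity a shield on the first part of the chain rule would hide. It uses a single-step gradient-sign perturbation (FGSM) on a tiny logistic-regression model, a deliberately simpler stand-in for the iterative attacks named in the abstract. It is not Pelta's code, and the weights, input, and step size are made-up values.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss_and_input_gradient(weights, bias, x, label):
    """Binary cross-entropy loss and its gradient with respect to the input x.

    For logistic regression the chain rule gives dL/dx = (p - label) * weights.
    """
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    p = sigmoid(z)
    loss = -(label * math.log(p) + (1 - label) * math.log(1.0 - p))
    grad = [(p - label) * w for w in weights]
    return loss, grad


def sign(value):
    return (value > 0) - (value < 0)


def fgsm(weights, bias, x, label, epsilon):
    """One gradient-sign step that increases the loss on (x, label)."""
    _, grad = loss_and_input_gradient(weights, bias, x, label)
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


# Hypothetical model parameters and input, chosen only for illustration.
weights, bias = [2.0, -1.0], 0.0
x, label = [1.0, 0.5], 1

clean_loss, _ = loss_and_input_gradient(weights, bias, x, label)
x_adv = fgsm(weights, bias, x, label, epsilon=0.5)
adv_loss, _ = loss_and_input_gradient(weights, bias, x_adv, label)
print(f"loss before: {clean_loss:.3f}, after: {adv_loss:.3f}")
```

Without access to `grad`, the attacker in this sketch cannot choose the perturbation direction directly, which is the intuition behind masking the input-side gradients.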

Pelta: Shielding Transformers to Mitigate Evasion Attacks in Federated Learning

Aug 08, 2023

Abstract: The main premise of federated learning is that machine learning model updates are computed locally, in particular to preserve user data privacy, as the data never leave the perimeter of the user's device. This mechanism assumes that the general model, once aggregated, is broadcast to collaborating and non-malicious nodes. However, without proper defenses, compromised clients can easily probe the model inside their local memory in search of adversarial examples. For instance, in image-based applications, adversarial examples consist of images perturbed imperceptibly (to the human eye) that are misclassified by the local model, and that can later be presented to a victim node's counterpart model to replicate the attack. To mitigate such malicious probing, we introduce Pelta, a novel shielding mechanism leveraging trusted hardware. By harnessing the capabilities of Trusted Execution Environments (TEEs), Pelta masks part of the back-propagation chain rule, otherwise typically exploited by attackers to design malicious samples. We evaluate Pelta on a state-of-the-art ensemble model and demonstrate its effectiveness against the Self-Attention Gradient adversarial attack.

Understanding Cryptocoins Trends Correlations

Nov 30, 2022

Abstract: Cryptocoins (also known as cryptocurrencies) are tradable digital assets. Notable examples include Bitcoin, Ether and Litecoin. Ownership of cryptocoins is registered on distributed ledgers (i.e., blockchains). Secure encryption techniques guarantee the security of the transactions (transfers of coins across owners) registered in the ledger. Cryptocoins are exchanged at specific trading prices. While history has shown the extreme volatility of such trading prices across all sets of crypto-assets, it remains unclear whether tight relations exist between the trading prices of different cryptocoins, and what those relations are. Major coin exchanges (e.g., Coinbase) provide trend correlation indicators to coin owners, suggesting possible acquisitions or sales. However, these correlations remain largely unvalidated. In this paper, we shed light on the trend correlations across a large variety of cryptocoins by investigating their coin-price correlation trends over a period of two years. Our experimental results suggest strong correlation patterns between main coins (Ethereum, Bitcoin) and alt-coins. We believe our study can support forecasting techniques for time-series modeling in the context of cryptocoins. We release our dataset and code to reproduce our analysis to the research community.

* 8 pages, 4 figures

Shielding Federated Learning Systems against Inference Attacks with ARM TrustZone

Aug 19, 2022

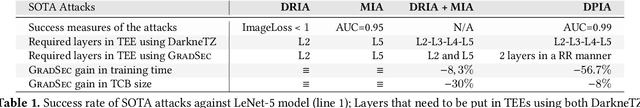

Abstract: Federated Learning (FL) opens new perspectives for training machine learning models while keeping personal data on the users' premises. Specifically, in FL, models are trained on the users' devices and only model updates (i.e., gradients) are sent to a central server for aggregation purposes. However, the long list of inference attacks that leak private data from gradients, published in recent years, has emphasized the need to devise effective protection mechanisms to incentivize the adoption of FL at scale. While solutions exist to mitigate these attacks on the server side, little has been done to protect users from attacks performed on the client side. In this context, the use of Trusted Execution Environments (TEEs) on the client side is among the most promising solutions. However, existing frameworks (e.g., DarkneTZ) require statically placing a large portion of the machine learning model into the TEE to effectively protect against complex attacks or a combination of attacks. We present GradSec, a solution that protects only the sensitive layers of a machine learning model in a TEE, either statically or dynamically, hence reducing both the TCB size and the overall training time by up to 30% and 56%, respectively, compared to state-of-the-art competitors.

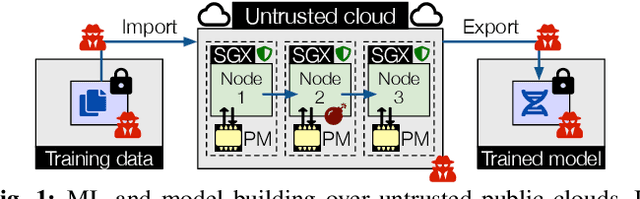

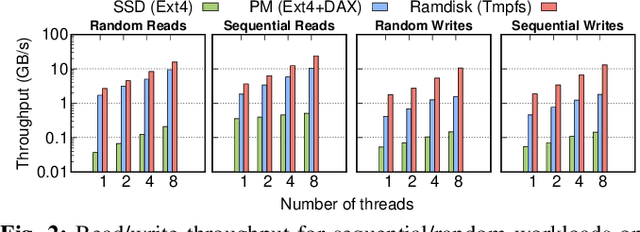

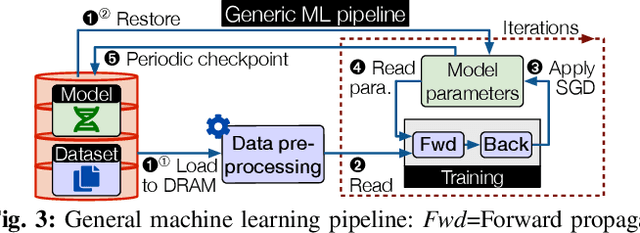

Plinius: Secure and Persistent Machine Learning Model Training

Apr 08, 2021

Abstract: With the increasing popularity of cloud-based machine learning (ML) techniques comes a need for privacy and integrity guarantees for ML data. In addition, the significant scalability challenges faced by DRAM, coupled with the high access times of secondary storage, represent a huge performance bottleneck for ML systems. While solutions exist to tackle the security aspect, performance remains an issue. Persistent memory (PM) is resilient to power loss (unlike DRAM), provides fast and fine-granular access to memory (unlike disk storage), and has latency and bandwidth close to DRAM (on the order of ns and GB/s, respectively). We present PLINIUS, an ML framework using Intel SGX enclaves for secure training of ML models and PM for fault tolerance guarantees. PLINIUS uses a novel mirroring mechanism to create and maintain (i) encrypted mirror copies of ML models on PM, and (ii) encrypted training data in byte-addressable PM, for near-instantaneous data recovery after a system failure. Compared to disk-based checkpointing systems, PLINIUS is 3.2x and 3.7x faster for saving and restoring models, respectively, on real PM hardware, achieving robust and secure ML model training in SGX enclaves.
