Siu Wun Cheung

Free-RBF-KAN: Kolmogorov-Arnold Networks with Adaptive Radial Basis Functions for Efficient Function Learning

Jan 13, 2026

Abstract:Kolmogorov-Arnold Networks (KANs) have shown strong potential for efficiently approximating complex nonlinear functions. However, the original KAN formulation relies on B-spline basis functions, which incur substantial computational overhead due to De Boor's algorithm. To address this limitation, recent work has explored alternative basis functions such as radial basis functions (RBFs) that can improve computational efficiency and flexibility. Yet, standard RBF-KANs often sacrifice accuracy relative to the original KAN design. In this work, we propose Free-RBF-KAN, an RBF-based KAN architecture that incorporates adaptive learning grids and trainable smoothness to close this performance gap. Our method employs freely learnable RBF shapes that dynamically align grid representations with activation patterns, enabling expressive and adaptive function approximation. Additionally, we treat smoothness as a kernel parameter optimized jointly with network weights, without increasing computational complexity. We provide a general universality proof for RBF-KANs, which encompasses our Free-RBF-KAN formulation. Through a broad set of experiments, including multiscale function approximation, physics-informed machine learning, and PDE solution operator learning, Free-RBF-KAN achieves accuracy comparable to the original B-spline-based KAN while delivering faster training and inference. These results highlight Free-RBF-KAN as a compelling balance between computational efficiency and adaptive resolution, particularly for high-dimensional structured modeling tasks.
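To make the idea of an RBF-based KAN layer concrete, here is a minimal forward-pass sketch. It is an illustration under stated assumptions, not the paper's implementation: the Gaussian kernel, the function names `rbf_features` and `rbf_kan_layer`, and the parameter layout are all hypothetical, and the abstract does not specify how the trainable smoothness enters the kernel. Each edge from input `i` to output `j` carries a univariate function built from RBFs whose centers and widths would be trained alongside the coefficients.

```python
import math

def rbf_features(x, centers, widths):
    """Gaussian RBF values exp(-((x - c) / h)^2) for one scalar input.

    The Gaussian form is an assumption made for this sketch.
    """
    return [math.exp(-((x - c) / h) ** 2) for c, h in zip(centers, widths)]

def rbf_kan_layer(x, centers, widths, coeffs):
    """Forward pass of one KAN-style layer with RBF edge functions.

    x:       list of n_in scalars
    centers: centers[i][k], center of basis k for input i (trainable in principle)
    widths:  widths[i][k], width of basis k for input i (trainable in principle)
    coeffs:  coeffs[j][i][k], weight of basis k on the edge from input i to output j
    """
    feats = [rbf_features(xi, c, h) for xi, c, h in zip(x, centers, widths)]
    out = []
    for edge_weights in coeffs:  # one entry per output j
        total = 0.0
        for w_i, f_i in zip(edge_weights, feats):
            # Edge function phi_{j,i}(x_i) = sum_k w_{j,i,k} * rbf_k(x_i)
            total += sum(w * f for w, f in zip(w_i, f_i))
        out.append(total)
    return out

# Two inputs, one output, two basis functions per input.
y = rbf_kan_layer(
    x=[0.0, 1.0],
    centers=[[0.0, 1.0], [0.0, 1.0]],
    widths=[[1.0, 1.0], [1.0, 1.0]],
    coeffs=[[[1.0, 0.0], [0.0, 1.0]]],
)
```

In this sketch the centers and widths are stored per input rather than on a shared fixed grid, which is one plausible reading of "freely learnable RBF shapes"; a training loop would update them by gradient descent together with `coeffs`.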

Defining Foundation Models for Computational Science: A Call for Clarity and Rigor

May 28, 2025

Abstract:The widespread success of foundation models in natural language processing and computer vision has inspired researchers to extend the concept to scientific machine learning and computational science. However, this position paper argues that, because the term "foundation model" is still evolving, it is increasingly applied in computational science without a universally accepted definition, which risks creating confusion and diluting its scientific meaning. In this paper, we address this gap by proposing a formal definition of foundation models in computational science, grounded in the core values of generality, reusability, and scalability. We articulate a set of essential and desirable characteristics that such models must exhibit, drawing parallels with traditional foundational methods, like the finite element and finite volume methods. Furthermore, we introduce the Data-Driven Finite Element Method (DD-FEM), a framework that fuses the modular structure of classical FEM with the representational power of data-driven learning. We demonstrate how DD-FEM addresses many of the key challenges in realizing foundation models for computational science, including scalability, adaptability, and physics consistency. By bridging traditional numerical methods with modern AI paradigms, this work provides a rigorous foundation for evaluating and developing novel approaches toward future foundation models in computational science.

A Comprehensive Review of Latent Space Dynamics Identification Algorithms for Intrusive and Non-Intrusive Reduced-Order-Modeling

Mar 16, 2024

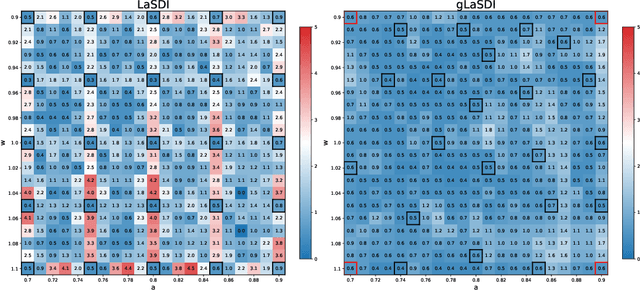

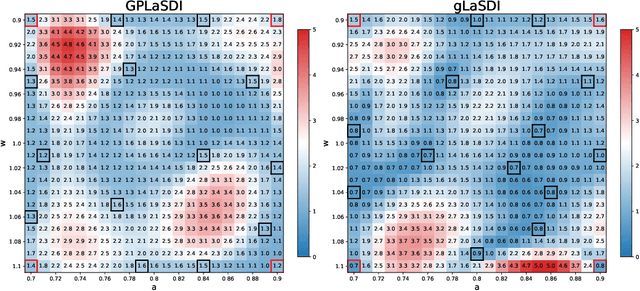

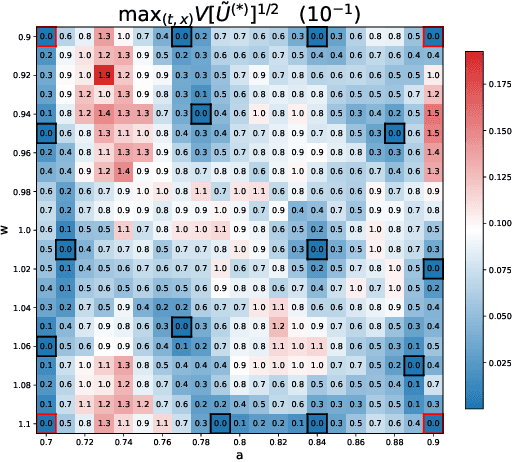

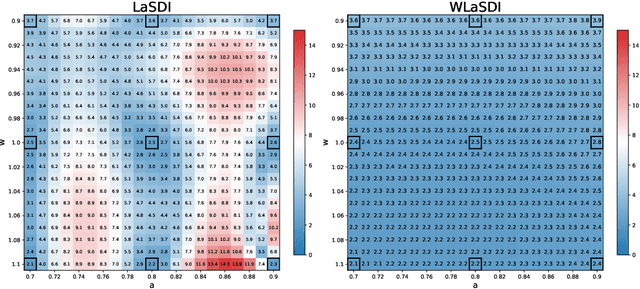

Abstract:Numerical solvers of partial differential equations (PDEs) have been widely employed for simulating physical systems. However, the computational cost remains a major bottleneck in various scientific and engineering applications, which has motivated the development of reduced-order models (ROMs). Recently, machine-learning-based ROMs have gained significant popularity and are promising for addressing some limitations of traditional ROM methods, especially for advection-dominated systems. In this chapter, we focus on a particular framework known as Latent Space Dynamics Identification (LaSDI), which transforms the high-fidelity data, governed by a PDE, to simpler and low-dimensional latent-space data, governed by ordinary differential equations (ODEs). These ODEs can be learned and subsequently interpolated to make ROM predictions. Each building block of LaSDI can be easily modulated depending on the application, which makes the LaSDI framework highly flexible. In particular, we present strategies to enforce the laws of thermodynamics in LaSDI models (tLaSDI), enhance robustness in the presence of noise through the weak form (WLaSDI), select high-fidelity training data efficiently through active learning (gLaSDI, GPLaSDI), and quantify the ROM prediction uncertainty through Gaussian processes (GPLaSDI). We demonstrate the performance of different LaSDI approaches on the Burgers equation, a non-linear heat conduction problem, and a plasma physics problem, showing that LaSDI algorithms can achieve relative errors of less than a few percent and speed-ups of up to several thousand times.
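The dynamics-identification step described above can be illustrated with a small sketch. This is not the LaSDI code: it assumes a single scalar latent variable, a linear ODE model `dz/dt = a * z`, and a synthetic trajectory standing in for encoded PDE snapshots. The function names are hypothetical. The latent time derivative is approximated by central differences and the coefficient is recovered by least squares.

```python
import math

def central_differences(z, dt):
    """Approximate dz/dt at interior points of a uniformly sampled trajectory."""
    return [(z[k + 1] - z[k - 1]) / (2.0 * dt) for k in range(1, len(z) - 1)]

def fit_linear_latent_ode(z, dt):
    """Least-squares fit of the coefficient a in dz/dt = a * z (scalar latent state)."""
    dz = central_differences(z, dt)
    z_mid = z[1:-1]  # states aligned with the interior derivative estimates
    num = sum(zi * dzi for zi, dzi in zip(z_mid, dz))
    den = sum(zi * zi for zi in z_mid)
    return num / den

# Synthetic latent trajectory z(t) = exp(-2 t), standing in for encoder output.
dt = 0.01
z = [math.exp(-2.0 * k * dt) for k in range(200)]
a_hat = fit_linear_latent_ode(z, dt)  # close to -2 for this synthetic data
```

In a full pipeline of the kind the abstract describes, one such coefficient set would be fitted per training parameter and then interpolated across parameters to predict at unseen ones; that interpolation step is omitted here.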

tLaSDI: Thermodynamics-informed latent space dynamics identification

Mar 09, 2024

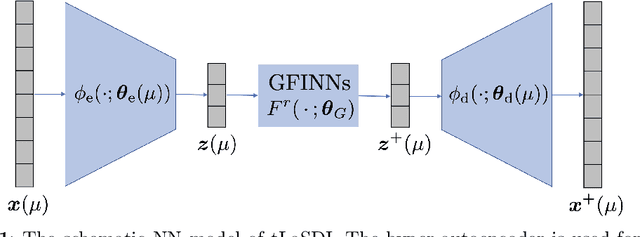

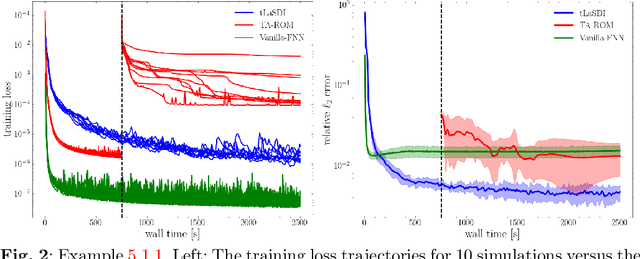

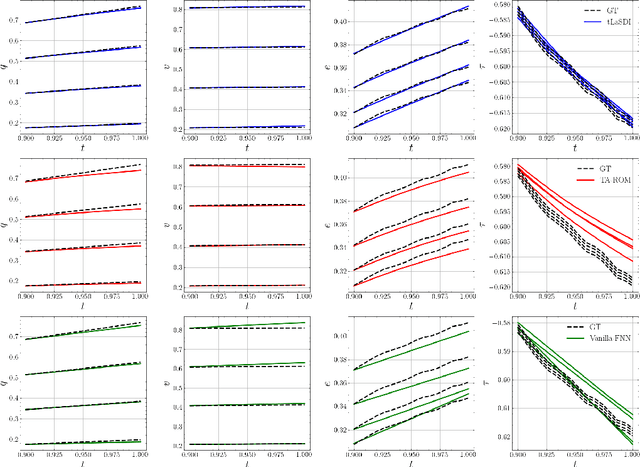

Abstract:We propose a data-driven latent space dynamics identification method (tLaSDI) that embeds the first and second principles of thermodynamics. The latent variables are learned through an autoencoder as a nonlinear dimension reduction model. The dynamics of the latent variables are constructed by a neural network-based model that preserves certain structures to respect the thermodynamic laws through the GENERIC formalism. An abstract error estimate of the approximation is established, which provides a new loss formulation involving the Jacobian computation of the autoencoder. Both the autoencoder and the latent dynamics are trained to minimize the new loss. Numerical examples are presented to demonstrate the performance of tLaSDI, which exhibits robust generalization ability, even in extrapolation. In addition, an intriguing correlation is empirically observed between the entropy production rates in the latent space and the behaviors of the full-state solution.
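For readers unfamiliar with the GENERIC formalism mentioned above, its standard form is sketched below. The symbols are chosen here for illustration ($z$ for the state, $E$ for energy, $S$ for entropy, $L$ and $M$ for the two operators); the abstract does not give the paper's own notation or its neural-network parametrization.

$$
\frac{dz}{dt} = L(z)\,\nabla E(z) + M(z)\,\nabla S(z), \qquad L^\top = -L, \quad M = M^\top \succeq 0, \quad L\,\nabla S = 0, \quad M\,\nabla E = 0.
$$

Under these conditions the two thermodynamic laws follow directly:

$$
\frac{dE}{dt} = \nabla E^\top L\,\nabla E + \nabla E^\top M\,\nabla S = 0, \qquad \frac{dS}{dt} = \nabla S^\top L\,\nabla E + \nabla S^\top M\,\nabla S \ge 0.
$$

The first term of $dE/dt$ vanishes because $L$ is skew-symmetric and the second because $M\,\nabla E = 0$ with $M$ symmetric; in $dS/dt$ the first term vanishes because $L\,\nabla S = 0$, and the second is non-negative because $M$ is positive semidefinite.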

Deep Multiscale Model Learning

Jun 13, 2018

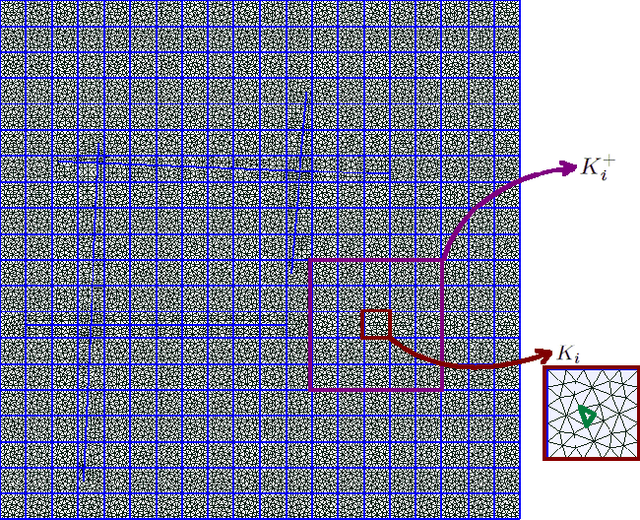

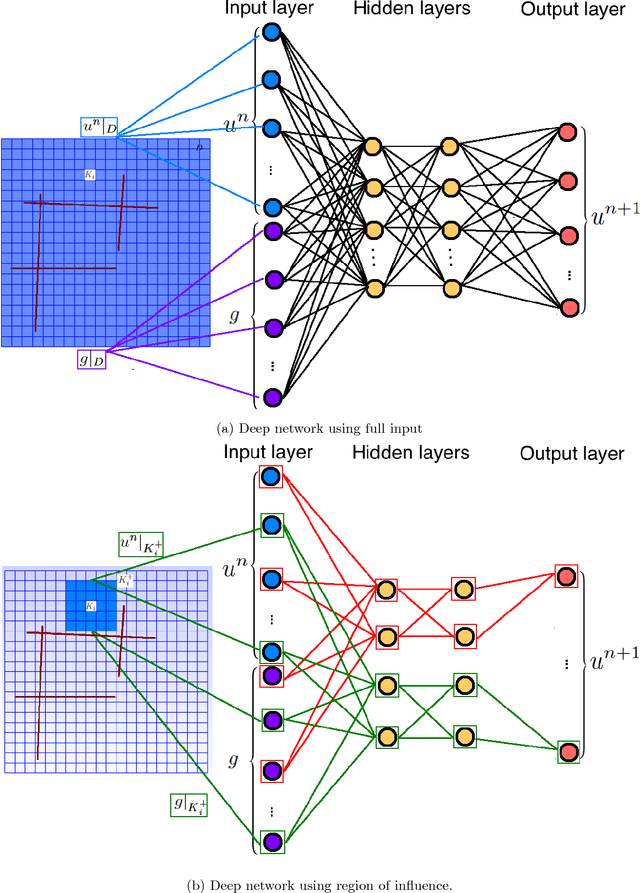

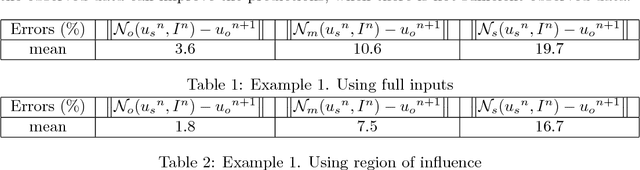

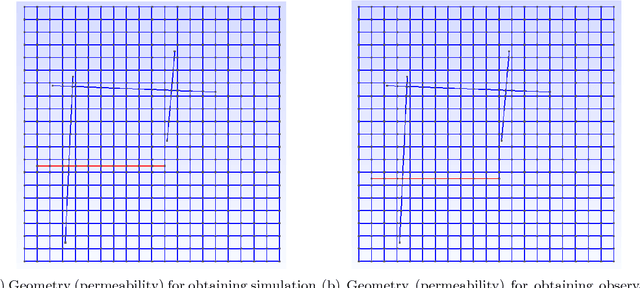

Abstract:The objective of this paper is to design novel multi-layer neural network architectures for multiscale simulations of flows taking into account the observed data and physical modeling concepts. Our approaches use deep learning concepts combined with local multiscale model reduction methodologies to predict flow dynamics. Using reduced-order model concepts is important for constructing robust deep learning architectures since the reduced-order models provide fewer degrees of freedom. Flow dynamics can be thought of as multi-layer networks. More precisely, the solution (e.g., pressures and saturations) at the time instant $n+1$ depends on the solution at the time instant $n$ and input parameters, such as permeability fields, forcing terms, and initial conditions. One can regard the solution as a multi-layer network, where each layer, in general, is a nonlinear forward map and the number of layers relates to the internal time steps. We will rely on rigorous model reduction concepts to define unknowns and connections for each layer. In each layer, our reduced-order models will provide a forward map, which will be modified ("trained") using available data. It is critical to use reduced-order models for this purpose, which will identify the regions of influence and the appropriate number of variables. Because of the lack of available data, the training will be supplemented with computational data as needed and with interpolation between data-rich and data-deficient models. We will also use deep learning algorithms to train the elements of the reduced model discrete system. We will present the main ingredients of our approach and numerical results. Numerical results show that using deep learning and multiscale models, we can improve the forward models, which are conditioned to the available data.
