Sion Hannuna

Self-Supervised Animal Identification for Long Videos

Jan 14, 2026

Abstract:Identifying individual animals in long-duration videos is essential for behavioral ecology, wildlife monitoring, and livestock management. Traditional methods require extensive manual annotation, while existing self-supervised approaches are computationally demanding and ill-suited for long sequences due to memory constraints and temporal error propagation. We introduce a highly efficient, self-supervised method that reframes animal identification as a global clustering task rather than a sequential tracking problem. Our approach assumes a known, fixed number of individuals within a single video -- a common scenario in practice -- and requires only bounding box detections and the total count. By sampling pairs of frames, using a frozen pre-trained backbone, and employing a self-bootstrapping mechanism with the Hungarian algorithm for in-batch pseudo-label assignment, our method learns discriminative features without identity labels. We adapt a Binary Cross Entropy loss from vision-language models, enabling state-of-the-art accuracy (>97%) while consuming less than 1 GB of GPU memory per batch -- an order of magnitude less than standard contrastive methods. Evaluated on challenging real-world datasets (3D-POP pigeons and 8-calves feeding videos), our framework matches or surpasses supervised baselines trained on over 1,000 labeled frames, effectively removing the manual annotation bottleneck. This work enables practical, high-accuracy animal identification on consumer-grade hardware, with broad applicability in resource-constrained research settings. All code written for this paper is available at https://huggingface.co/datasets/tonyFang04/8-calves.

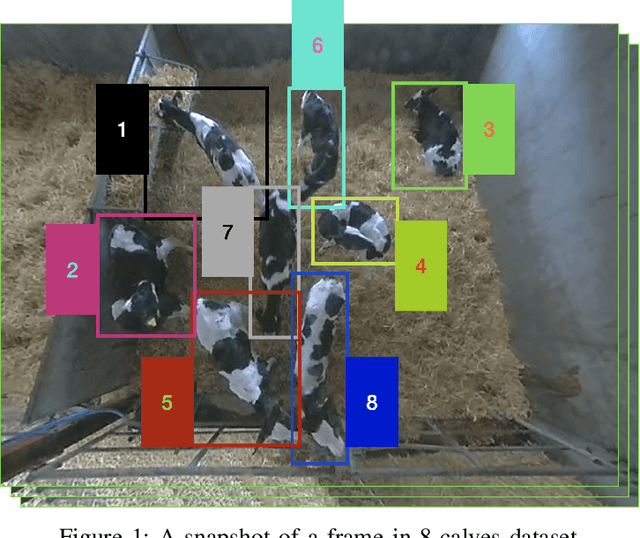

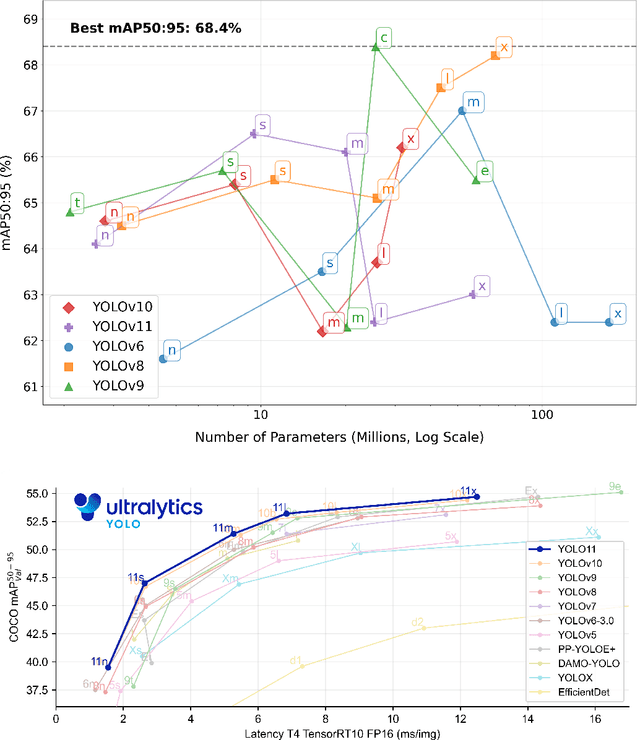

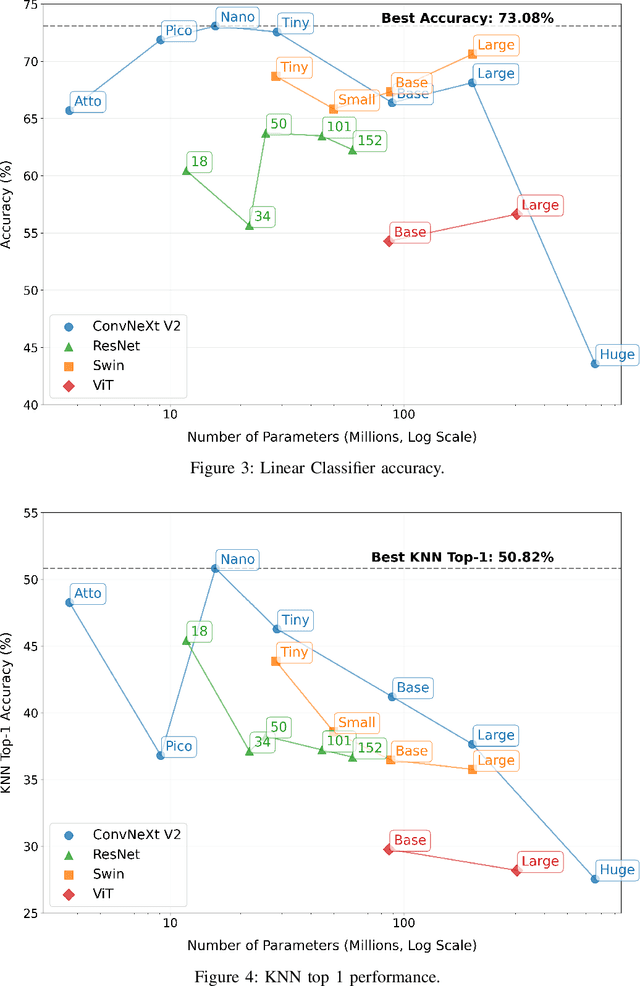

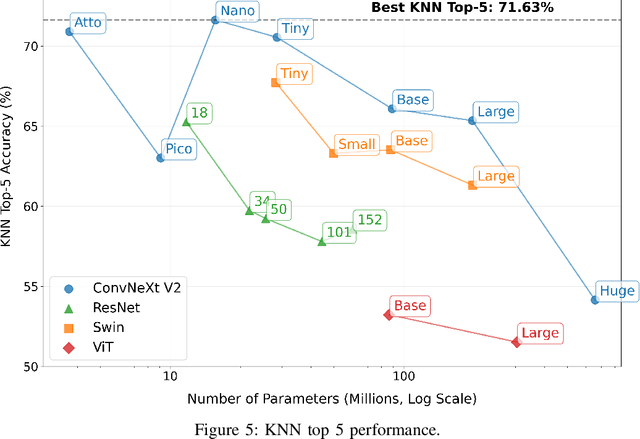

8-Calves Image dataset

Mar 17, 2025

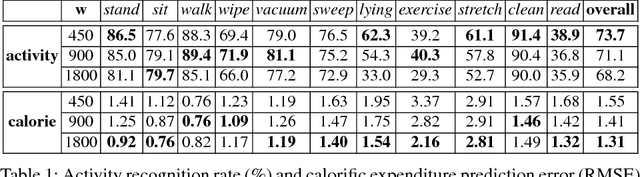

Abstract:We introduce the 8-Calves dataset, a benchmark for evaluating object detection and identity classification in occlusion-rich, temporally consistent environments. The dataset comprises a 1-hour video (67,760 frames) of eight Holstein Friesian calves in a barn, with ground truth bounding boxes and identities, alongside 900 static frames for detection tasks. Each calf exhibits a unique coat pattern, enabling precise identity distinction. For cow detection, we fine-tuned 28 models (25 YOLO variants, 3 transformers) on 600 frames, testing on the full video. Results reveal smaller YOLO models (e.g., YOLOv9c) outperform larger counterparts despite potential bias from a YOLOv8m-based labeling pipeline. For identity classification, embeddings from 23 pretrained vision models (ResNet, ConvNextV2, ViTs) were evaluated via linear classifiers and KNN. Modern architectures like ConvNextV2 excelled, while larger models frequently overfit, highlighting inefficiencies in scaling. Key findings include: (1) Minimal, targeted augmentations (e.g., rotation) outperform complex strategies on simpler datasets; (2) Pretraining strategies (e.g., BEiT, DinoV2) significantly boost identity recognition; (3) Temporal continuity and natural motion patterns offer unique challenges absent in synthetic or domain-specific benchmarks. The dataset's controlled design and extended sequences (1 hour vs. prior 10-minute benchmarks) make it a pragmatic tool for stress-testing occlusion handling, temporal consistency, and efficiency. The link to the dataset is https://github.com/tonyFang04/8-calves.

Universal Bovine Identification via Depth Data and Deep Metric Learning

Mar 29, 2024

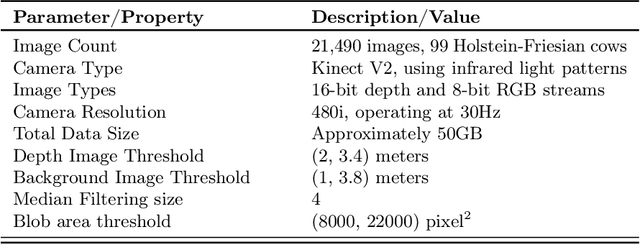

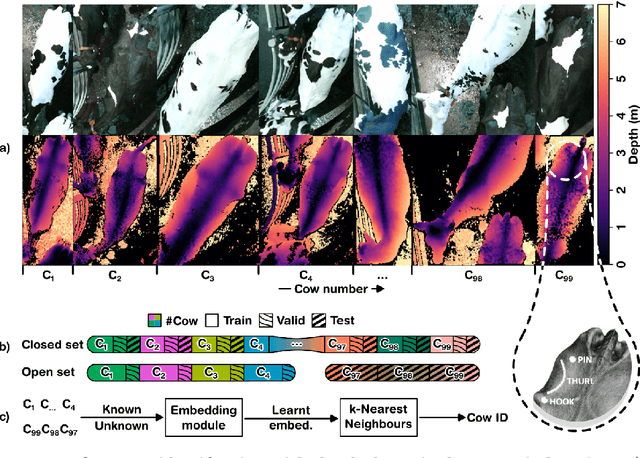

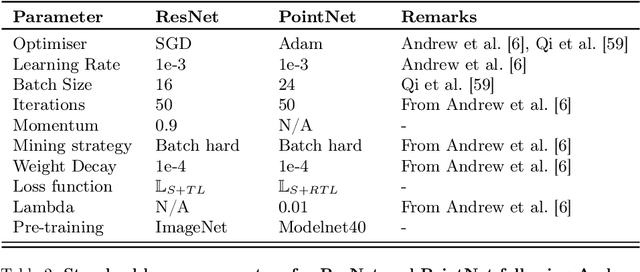

Abstract:This paper proposes and evaluates, for the first time, a top-down (dorsal view), depth-only deep learning system for accurately identifying individual cattle and provides associated code, datasets, and training weights for immediate reproducibility. An increase in herd size skews the cow-to-human ratio at the farm and makes the manual monitoring of individuals more challenging. Therefore, real-time cattle identification is essential for the farms and a crucial step towards precision livestock farming. Underpinned by our previous work, this paper introduces a deep-metric learning method for cattle identification using depth data from an off-the-shelf 3D camera. The method relies on CNN and MLP backbones that learn well-generalised embedding spaces from the body shape to differentiate individuals -- requiring neither species-specific coat patterns nor close-up muzzle prints for operation. The network embeddings are clustered using a simple algorithm such as $k$-NN for highly accurate identification, thus eliminating the need to retrain the network for enrolling new individuals. We evaluate two backbone architectures, ResNet, as previously used to identify Holstein Friesians using RGB images, and PointNet, which is specialised to operate on 3D point clouds. We also present CowDepth2023, a new dataset containing 21,490 synchronised colour-depth image pairs of 99 cows, to evaluate the backbones. Both ResNet and PointNet architectures, which consume depth maps and point clouds, respectively, led to high accuracy that is on par with the coat pattern-based backbone.

VI-Net: View-Invariant Quality of Human Movement Assessment

Aug 11, 2020

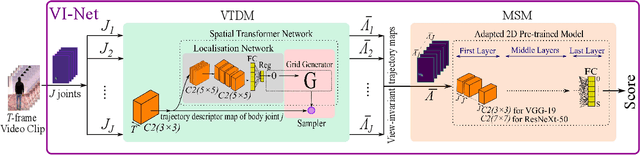

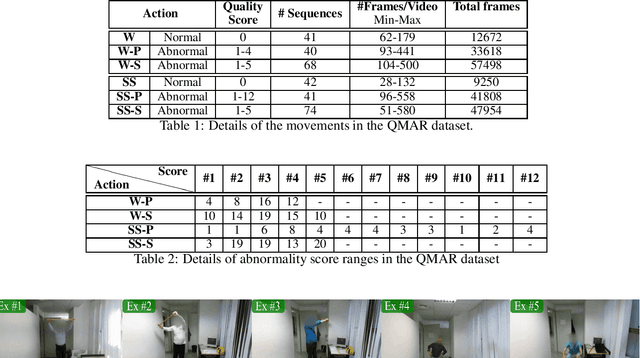

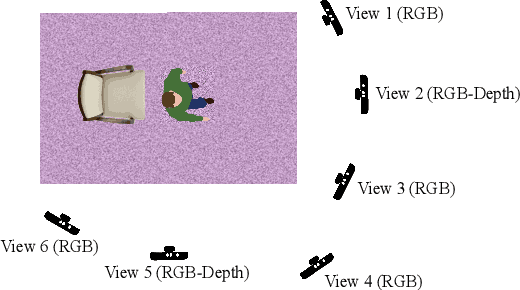

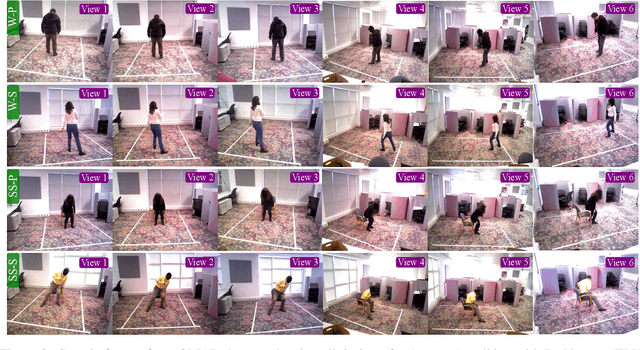

Abstract:We propose a view-invariant method towards the assessment of the quality of human movements which does not rely on skeleton data. Our end-to-end convolutional neural network consists of two stages, where at first a view-invariant trajectory descriptor for each body joint is generated from RGB images, and then the collection of trajectories for all joints is processed by an adapted, pre-trained 2D CNN (e.g. VGG-19 or ResNeXt-50) to learn the relationship amongst the different body parts and deliver a score for the movement quality. We release the only publicly-available, multi-view, non-skeleton, non-mocap, rehabilitation movement dataset (QMAR), and provide results for both cross-subject and cross-view scenarios on this dataset. We show that VI-Net achieves average rank correlation of 0.66 on cross-subject and 0.65 on unseen views when trained on only two views. We also evaluate the proposed method on the single-view rehabilitation dataset KIMORE and obtain 0.66 rank correlation against a baseline of 0.62.

Towards automated mobile-phone-based plant pathology management

Dec 20, 2019

Abstract:This paper presents a framework which uses computer vision algorithms to standardise images and analyse them for identifying crop diseases automatically. The tools are created to bridge the information gap between farmers, advisory call centres and agricultural experts using the images of diseased/infected crop captured by mobile-phones. These images are generally sensitive to a number of factors including camera type and lighting. We therefore propose a technique for standardising the colour of plant images within the context of the advisory system. Subsequently, to aid the advisory process, the disease recognition process is automated using image processing in conjunction with machine learning techniques. We describe our proposed leaf extraction, affected area segmentation and disease classification techniques. The proposed disease recognition system is tested using six mango diseases and the results show over 80% accuracy. The final output of our system is a list of possible diseases with relevant management advice.

CaloriNet: From silhouettes to calorie estimation in private environments

Jun 21, 2018

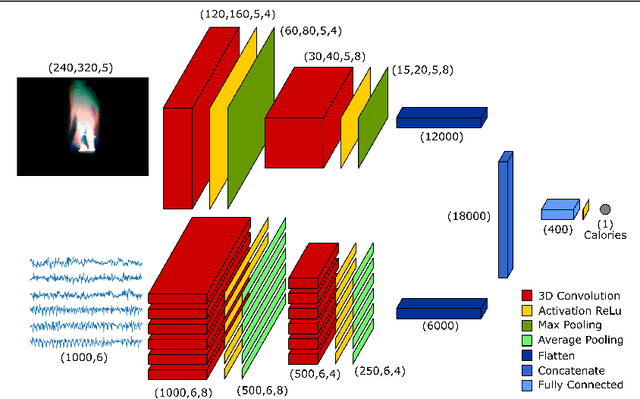

Abstract:We propose a novel deep fusion architecture, CaloriNet, for the online estimation of energy expenditure for free living monitoring in private environments, where RGB data is discarded and replaced by silhouettes. Our fused convolutional neural network architecture is trainable end-to-end, to estimate calorie expenditure, using temporal foreground silhouettes alongside accelerometer data. The network is trained and cross-validated on a publicly available dataset, SPHERE_RGBD + Inertial_calorie. Results show state-of-the-art minimum error on the estimation of energy expenditure (calories per minute), outperforming alternative, standard and single-modal techniques.

Automatic Leaf Extraction from Outdoor Images

Sep 19, 2017

Abstract:Automatic plant recognition and disease analysis may be streamlined by an image of a complete, isolated leaf as an initial input. Segmenting leaves from natural images is a hard problem. Cluttered and complex backgrounds, often composed of other leaves, are commonplace. Furthermore, their appearance is highly dependent upon illumination and viewing perspective. In order to address these issues we propose a methodology which exploits the leaves' venous systems in tandem with other low level features. Background and leaf markers are created using colour, intensity and texture. Two approaches, watershed and graph-cut, are investigated and their results compared. Primary-secondary vein detection and a protrusion-notch removal are applied to refine the extracted leaf. The efficacy of our approach is demonstrated against existing work.

Calorie Counter: RGB-Depth Visual Estimation of Energy Expenditure at Home

Jul 27, 2016

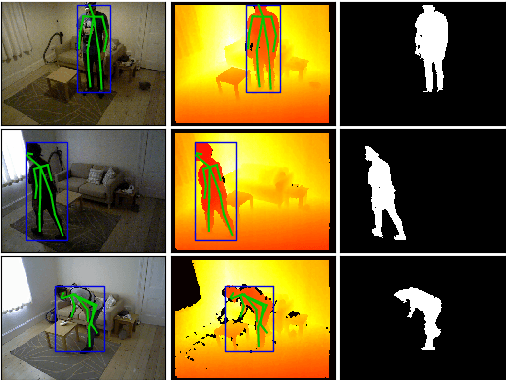

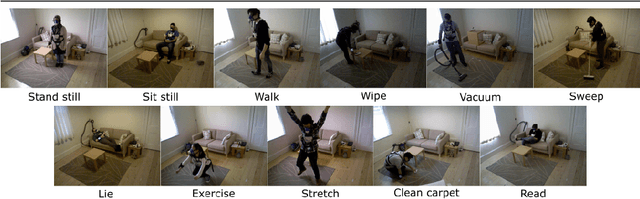

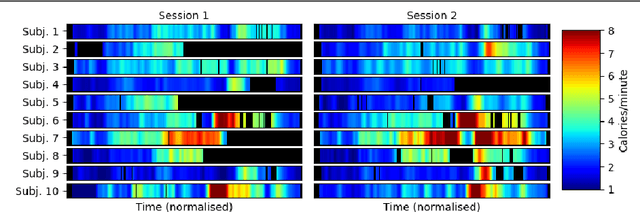

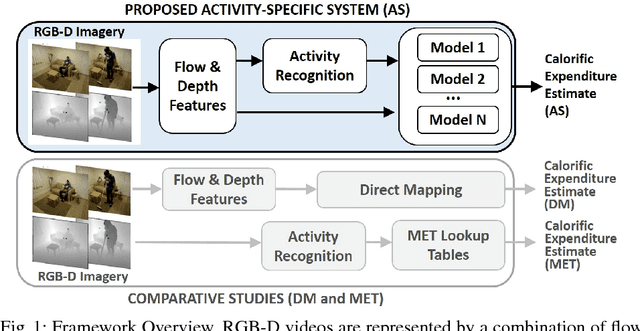

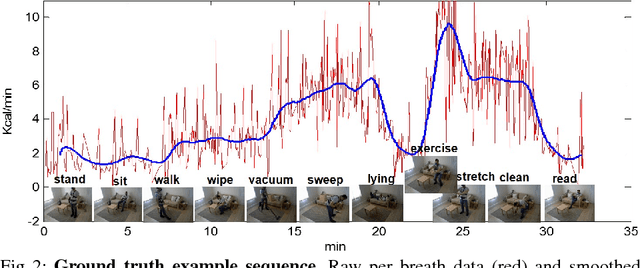

Abstract:We present a new framework for vision-based estimation of calorific expenditure from RGB-D data - the first that is validated on physical gas exchange measurements and applied to daily living scenarios. Deriving a person's energy expenditure from sensors is an important tool in tracking physical activity levels for health and lifestyle monitoring. Most existing methods use metabolic lookup tables (METs) for a manual estimate or systems with inertial sensors which ultimately require users to wear devices. In contrast, the proposed pose-invariant and individual-independent vision framework allows for a remote estimation of calorific expenditure. We introduce, and evaluate our approach on, a new dataset called SPHERE-calorie, for which visual estimates can be compared against simultaneously obtained, indirect calorimetry measures based on gas exchange. We conclude from our experiments that the proposed vision pipeline is suitable for home monitoring in a controlled environment, with calorific expenditure estimates above accuracy levels of commonly used manual estimations via METs. With the dataset released, our work establishes a baseline for future research for this little-explored area of computer vision.

Multiple Human Tracking in RGB-D Data: A Survey

Jun 14, 2016

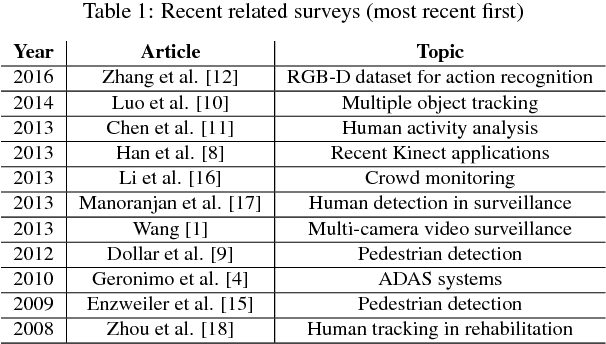

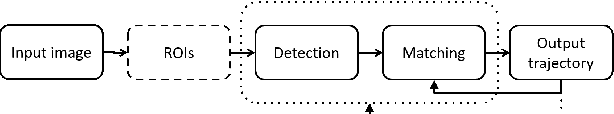

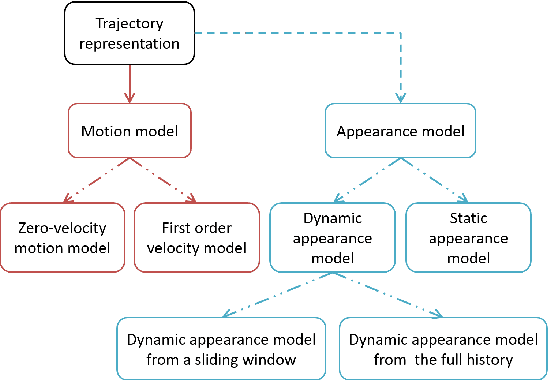

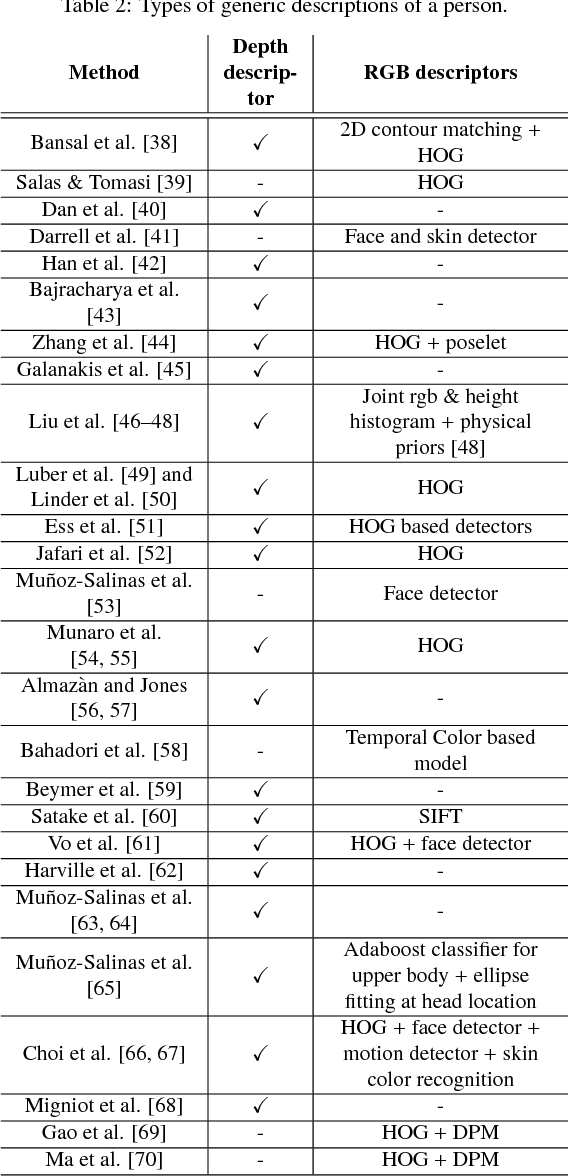

Abstract:Multiple human tracking (MHT) is a fundamental task in many computer vision applications. Appearance-based approaches, primarily formulated on RGB data, are constrained and affected by problems arising from occlusions and/or illumination variations. In recent years, the arrival of cheap RGB-Depth (RGB-D) devices has led to many new approaches to MHT, and many of these integrate color and depth cues to improve each and every stage of the process. In this survey, we present the common processing pipeline of these methods and review their methodology based (a) on how they implement this pipeline and (b) on what role depth plays within each stage of it. We identify and introduce existing, publicly available, benchmark datasets and software resources that fuse color and depth data for MHT. Finally, we present a brief comparative evaluation of the performance of those works that have applied their methods to these datasets.
