Andrew W. Dowsey

Universal Bovine Identification via Depth Data and Deep Metric Learning

Mar 29, 2024

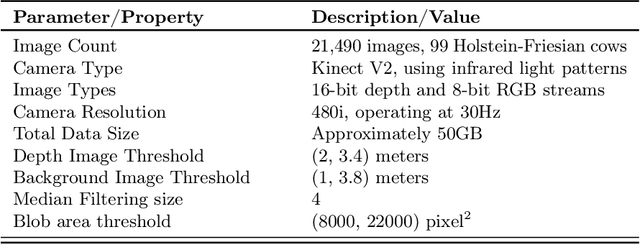

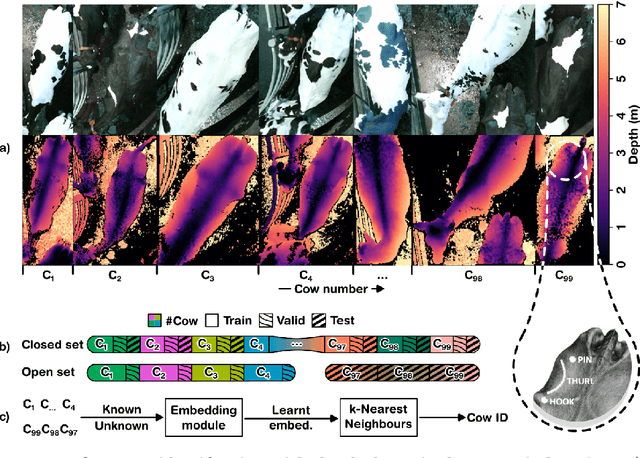

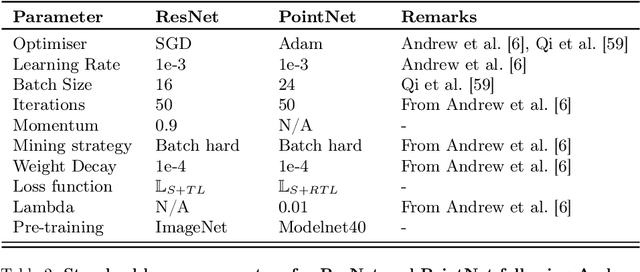

Abstract:This paper proposes and evaluates, for the first time, a top-down (dorsal view), depth-only deep learning system for accurately identifying individual cattle and provides associated code, datasets, and training weights for immediate reproducibility. An increase in herd size skews the cow-to-human ratio at the farm and makes the manual monitoring of individuals more challenging. Therefore, real-time cattle identification is essential for the farms and a crucial step towards precision livestock farming. Underpinned by our previous work, this paper introduces a deep-metric learning method for cattle identification using depth data from an off-the-shelf 3D camera. The method relies on CNN and MLP backbones that learn well-generalised embedding spaces from the body shape to differentiate individuals -- requiring neither species-specific coat patterns nor close-up muzzle prints for operation. The network embeddings are clustered using a simple algorithm such as $k$-NN for highly accurate identification, thus eliminating the need to retrain the network for enrolling new individuals. We evaluate two backbone architectures, ResNet, as previously used to identify Holstein Friesians using RGB images, and PointNet, which is specialised to operate on 3D point clouds. We also present CowDepth2023, a new dataset containing 21,490 synchronised colour-depth image pairs of 99 cows, to evaluate the backbones. Both ResNet and PointNet architectures, which consume depth maps and point clouds, respectively, led to high accuracy that is on par with the coat pattern-based backbone.

Towards Self-Supervision for Video Identification of Individual Holstein-Friesian Cattle: The Cows2021 Dataset

May 05, 2021

Abstract:In this paper we publish the largest identity-annotated Holstein-Friesian cattle dataset Cows2021 and a first self-supervision framework for video identification of individual animals. The dataset contains 10,402 RGB images with labels for localisation and identity as well as 301 videos from the same herd. The data shows top-down in-barn imagery, which captures the breed's individually distinctive black and white coat pattern. Motivated by the labelling burden involved in constructing visual cattle identification systems, we propose exploiting the temporal coat pattern appearance across videos as a self-supervision signal for animal identity learning. Using an individual-agnostic cattle detector that yields oriented bounding-boxes, rotation-normalised tracklets of individuals are formed via tracking-by-detection and enriched via augmentations. This produces a `positive' sample set per tracklet, which is paired against a `negative' set sampled from random cattle of other videos. Frame-triplet contrastive learning is then employed to construct a metric latent space. The fitting of a Gaussian Mixture Model to this space yields a cattle identity classifier. Results show an accuracy of Top-1 57.0% and Top-4: 76.9% and an Adjusted Rand Index: 0.53 compared to the ground truth. Whilst supervised training surpasses this benchmark by a large margin, we conclude that self-supervision can nevertheless play a highly effective role in speeding up labelling efforts when initially constructing supervision information. We provide all data and full source code alongside an analysis and evaluation of the system.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge