Melvyn Smith

Universal Bovine Identification via Depth Data and Deep Metric Learning

Mar 29, 2024

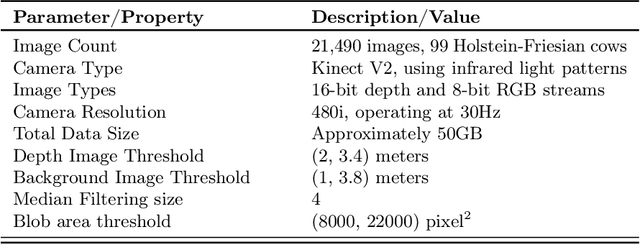

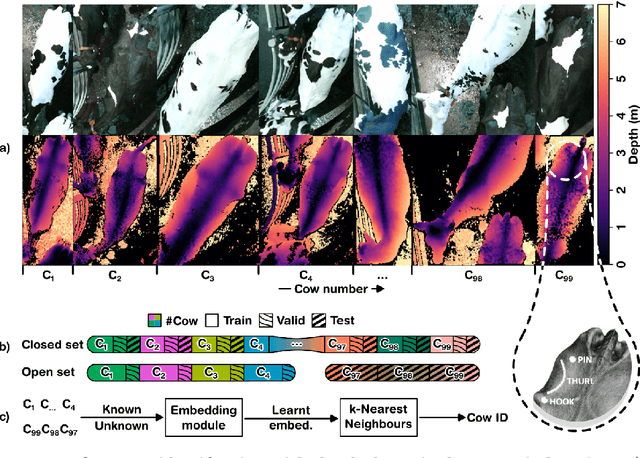

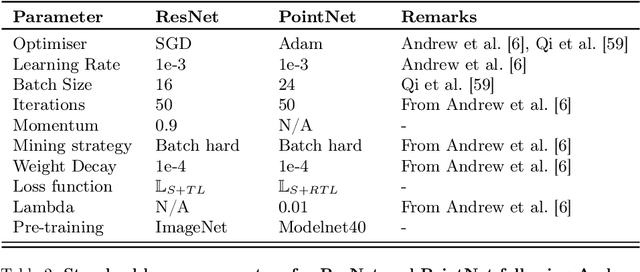

Abstract: This paper proposes and evaluates, for the first time, a top-down (dorsal view), depth-only deep learning system for accurately identifying individual cattle and provides associated code, datasets, and training weights for immediate reproducibility. An increase in herd size skews the cow-to-human ratio on the farm and makes the manual monitoring of individuals more challenging. Therefore, real-time cattle identification is essential for farms and a crucial step towards precision livestock farming. Underpinned by our previous work, this paper introduces a deep metric learning method for cattle identification using depth data from an off-the-shelf 3D camera. The method relies on CNN and MLP backbones that learn well-generalised embedding spaces from the body shape to differentiate individuals -- requiring neither species-specific coat patterns nor close-up muzzle prints for operation. The network embeddings are clustered using a simple algorithm such as $k$-NN for highly accurate identification, thus eliminating the need to retrain the network when enrolling new individuals. We evaluate two backbone architectures: ResNet, as previously used to identify Holstein Friesians using RGB images, and PointNet, which is specialised to operate on 3D point clouds. We also present CowDepth2023, a new dataset containing 21,490 synchronised colour-depth image pairs of 99 cows, to evaluate the backbones. Both the ResNet and PointNet architectures, which consume depth maps and point clouds, respectively, led to high accuracy that is on par with the coat pattern-based backbone.
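The enrolment idea in the abstract above (identify by nearest neighbours in a learned embedding space, so adding an animal needs no retraining) can be illustrated with a minimal sketch. This is not the authors' code: the function name `knn_identify`, the two-dimensional toy embeddings, and the labels are all hypothetical, and a real system would take its embeddings from the trained ResNet or PointNet backbone.

```python
import math
from collections import Counter


def knn_identify(gallery, query, k=3):
    """Return the majority label among the k gallery embeddings nearest to query.

    gallery: list of (embedding, label) pairs; embeddings are equal-length tuples.
    Distance is plain Euclidean distance (an assumption made for this sketch).
    """
    nearest = sorted(gallery, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Toy 2-D embeddings standing in for backbone outputs (hypothetical values).
gallery = [
    ((0.0, 0.1), "cow_A"), ((0.1, 0.0), "cow_A"), ((0.1, 0.1), "cow_A"),
    ((1.0, 1.1), "cow_B"), ((1.1, 1.0), "cow_B"), ((0.9, 1.0), "cow_B"),
]
first_id = knn_identify(gallery, (0.05, 0.05))

# Enrolling a new individual only appends embeddings to the gallery;
# the (hypothetical) backbone that produced them is left untouched.
gallery += [((2.0, 0.0), "cow_C"), ((2.1, 0.1), "cow_C"), ((1.9, 0.0), "cow_C")]
second_id = knn_identify(gallery, (2.0, 0.05))

print(first_id, second_id)
```

In this sketch the gallery is the only state that changes when the herd changes, which is the property the abstract attributes to the metric-learning approach.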

Corn Yield Prediction Model with Deep Neural Networks for Smallholder Farmer Decision Support System

Jan 08, 2024

Abstract: Given the nonlinearity of the interaction between weather and soil variables, a novel deep neural network regressor (DNNR) was carefully designed with consideration of the depth, the number of neurons in the hidden layers, and the hyperparameters and their optimization. Additionally, a new metric, the average of absolute root squared error (ARSE), was proposed to address the shortcomings of root mean square error (RMSE) and mean absolute error (MAE) while combining their strengths. Using the ARSE metric, the random forest regressor (RFR) and the extreme gradient boosting regressor (XGBR) were compared with DNNR. The RFR and XGBR achieved yield errors of 0.0000294 t/ha and 0.000792 t/ha, respectively, compared to the DNNR(s), which achieved 0.0146 t/ha and 0.0209 t/ha, respectively. All errors were impressively small. However, with changes to the explanatory variables to ensure generalizability to unforeseen data, DNNR(s) performed best. The term unforeseen data, as distinct from unseen data, is coined to represent sudden and unexplainable changes to weather and soil variables due to climate change. Further analysis reveals that a strong interaction does exist between weather and soil variables. Using precipitation and silt, which are strongly negatively and strongly positively correlated with yield, respectively, yield was observed to increase when precipitation was reduced and silt increased, and vice versa.

Home Activity Monitoring using Low Resolution Infrared Sensor

Nov 13, 2018

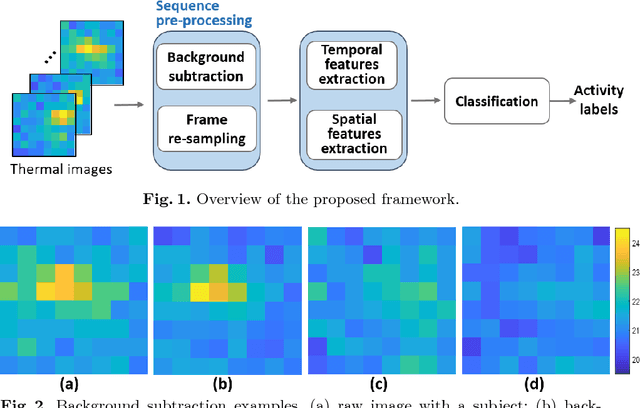

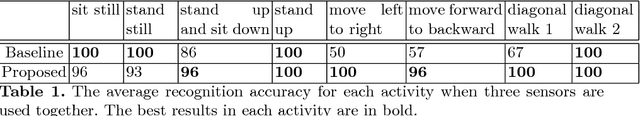

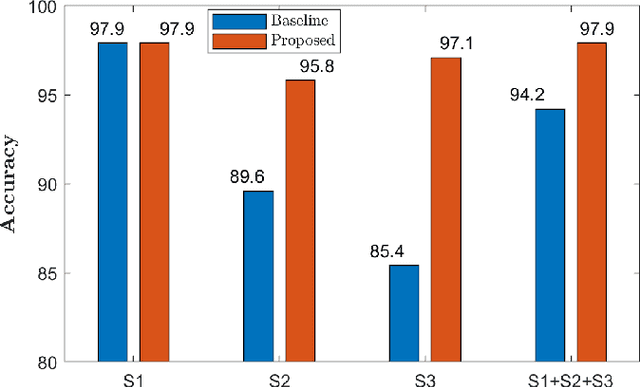

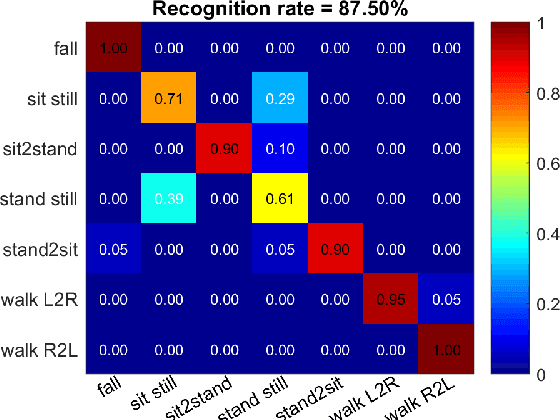

Abstract: Action monitoring in a home environment provides important information for health monitoring and may serve as input into a smart home environment. Visual analysis using cameras can recognise actions in a complex scene, such as someone's living room. However, despite their huge potential benefits and importance, specifically for health, cameras are not widely accepted because of privacy concerns. This paper recognises human activities using a sensor that retains privacy. The sensor differs not only in being thermal but also in its low resolution: 8x8 pixels. The combination of thermal imaging and low spatial resolution ensures the privacy of individuals. We present an approach to recognise daily activities using this sensor based on a discrete cosine transform. We evaluate the proposed method on a state-of-the-art dataset and experimentally confirm that our approach outperforms the baseline method. We also introduce a new dataset, and evaluate the method on it. Here we show that the sensor is considered better at detecting the occurrence of falls and Activities of Daily Living. Our method achieves an overall accuracy of 87.50% across 7 activities with a fall detection sensitivity of 100% and specificity of 99.21%.
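The abstract above does not say how the discrete cosine transform is applied, so the following is only a sketch of one common possibility: taking the orthonormal 2D DCT-II of a single 8x8 thermal frame and keeping a few low-frequency coefficients as a compact feature vector. The function names `dct2` and `low_freq_features`, the choice of a 3x3 block, and the constant test frame are assumptions made for illustration, not details from the paper.

```python
import math


def dct2(frame):
    """Orthonormal 2D DCT-II of a square frame given as a list of rows."""
    n = len(frame)

    def alpha(u):
        # Normalisation factors that make the transform orthonormal.
        return math.sqrt(1.0 / n) if u == 0 else math.sqrt(2.0 / n)

    out = [[0.0] * n for _ in range(n)]
    for u in range(n):
        for v in range(n):
            total = 0.0
            for x in range(n):
                for y in range(n):
                    total += (
                        frame[x][y]
                        * math.cos((2 * x + 1) * u * math.pi / (2 * n))
                        * math.cos((2 * y + 1) * v * math.pi / (2 * n))
                    )
            out[u][v] = alpha(u) * alpha(v) * total
    return out


def low_freq_features(coeffs, size=3):
    """Flatten the top-left size x size block (hypothetical feature choice)."""
    return [coeffs[u][v] for u in range(size) for v in range(size)]


# A uniform 8x8 frame at 20 degrees: all energy lands in the DC coefficient.
frame = [[20.0] * 8 for _ in range(8)]
coeffs = dct2(frame)
features = low_freq_features(coeffs)
print(round(coeffs[0][0], 6), len(features))
```

For a uniform frame the DC term equals the pixel sum divided by 8 (here 1280 / 8 = 160) and every other coefficient is numerically zero, which is a quick sanity check on the normalisation.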

Agricultural Robotics: The Future of Robotic Agriculture

Aug 02, 2018

Abstract: Agri-Food is the largest manufacturing sector in the UK. It supports a food chain that generates over £108bn p.a., with 3.9m employees in a truly international industry and exports £20bn of UK manufactured goods. However, the global food chain is under pressure from population growth, climate change, political pressures affecting migration, population drift from rural to urban regions and the demographics of an aging global population. These challenges are recognised in the UK Industrial Strategy white paper and backed by significant investment via a Wave 2 Industrial Challenge Fund Investment ("Transforming Food Production: from Farm to Fork"). Robotics and Autonomous Systems (RAS) and associated digital technologies are now seen as enablers of this critical food chain transformation. To meet these challenges, this white paper reviews the state of the art in the application of RAS in Agri-Food production and explores research and innovation needs to ensure these technologies reach their full potential and deliver the necessary impacts in the Agri-Food sector.
