N. Anantrasirichai

Atmospheric Turbulence Removal with Video Sequence Deep Visual Priors

Feb 29, 2024

Abstract:Atmospheric turbulence poses a challenge for the interpretation and perception of visual imagery due to its distortion effects. Model-based approaches have been used to address this, but such methods often suffer from artefacts associated with moving content. Conversely, deep learning based methods depend on large and diverse datasets that may not effectively represent any specific content. In this paper, we address these problems with a self-supervised learning method that does not require ground truth. The proposed method does not depend on any dataset other than the single sequence being processed, and it can improve the quality of both raw input sequences and pre-processed sequences. Specifically, our method is based on an accelerated Deep Image Prior (DIP), but integrates temporal information using pixel shuffling and a temporal sliding window. This efficiently learns spatio-temporal priors, leading to a system that effectively mitigates atmospheric turbulence distortions. Experiments show that our method improves visual quality both qualitatively and quantitatively.
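The abstract names two ingredients for adding temporal information to DIP: pixel shuffling and a temporal sliding window. The sketch below shows one plausible way to prepare the network input. It assumes that "pixel shuffling" means a space-to-depth rearrangement (as in PyTorch's `PixelUnshuffle`) that shrinks each frame spatially so the prior network runs faster. It also assumes that each window of `2*radius + 1` neighbouring frames is stacked along the channel axis. The function names, the edge handling and the window radius are illustrative assumptions, not the paper's implementation.

```python
import numpy as np

def pixel_unshuffle(frame: np.ndarray, r: int) -> np.ndarray:
    """Space-to-depth: (H, W, C) -> (H/r, W/r, C*r*r)."""
    h, w, c = frame.shape
    if h % r or w % r:
        raise ValueError("frame height and width must be divisible by r")
    # Split H and W into (H/r, r) and (W/r, r), then move the block offsets into channels.
    x = frame.reshape(h // r, r, w // r, r, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(h // r, w // r, r * r * c)

def pixel_shuffle(x: np.ndarray, r: int) -> np.ndarray:
    """Inverse of pixel_unshuffle: (H/r, W/r, C*r*r) -> (H, W, C)."""
    hs, ws, crr = x.shape
    c = crr // (r * r)
    x = x.reshape(hs, ws, r, r, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(hs * r, ws * r, c)

def sliding_windows(video: np.ndarray, radius: int):
    """Yield (t, stacked) for each frame t of a (T, H, W, C) video.

    Frames outside the sequence are replaced by the nearest valid frame (edge clamping).
    """
    t_len = video.shape[0]
    for t in range(t_len):
        idx = np.clip(np.arange(t - radius, t + radius + 1), 0, t_len - 1)
        # Stack the window along the channel axis: (H, W, C * (2*radius + 1)).
        yield t, np.concatenate([video[i] for i in idx], axis=-1)

if __name__ == "__main__":
    video = np.random.rand(5, 8, 8, 1)  # toy (T, H, W, C) sequence
    t, window = next(sliding_windows(video, radius=1))
    print(pixel_unshuffle(window, r=2).shape)  # prints (4, 4, 12)
```

Because `pixel_shuffle` exactly inverts `pixel_unshuffle`, a network operating at the reduced resolution can still return a full-resolution frame.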

Contextual colorization and denoising for low-light ultra high resolution sequences

Jan 05, 2021

Abstract:Low-light image sequences generally suffer from spatio-temporally incoherent noise, flicker and blurring of moving objects. These artefacts significantly reduce visual quality and, in most cases, post-processing is needed to achieve acceptable quality. Most state-of-the-art enhancement methods based on machine learning require ground truth data, but this is not usually available for naturally captured low-light sequences. We tackle these problems with an unpaired-learning method that offers simultaneous colorization and denoising. Our approach is an adaptation of the CycleGAN structure. To overcome the excessive memory requirements associated with ultra-high-resolution content, we propose a multiscale patch-based framework that captures both local and contextual features. Additionally, an adaptive temporal smoothing technique is employed to remove flickering artefacts. Experimental results show that our method outperforms existing approaches in terms of subjective quality and that it is robust to variations in brightness levels and noise.
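The abstract does not spell out the adaptive temporal smoothing step. The sketch below shows one common way to suppress global flicker, offered only as an illustrative assumption. It smooths each frame's mean brightness over time with an exponential moving average and rescales the frame to match. The running estimate is reset when the brightness jumps by more than a threshold, so that genuine scene changes are not smoothed away. The function name, `alpha` and `jump_thresh` are hypothetical.

```python
import numpy as np

def deflicker(frames: np.ndarray, alpha: float = 0.8, jump_thresh: float = 0.2) -> np.ndarray:
    """Reduce global brightness flicker in a (T, H, W[, C]) float sequence in [0, 1].

    alpha: weight given to the running mean (higher = stronger smoothing).
    jump_thresh: relative brightness change treated as a real scene change.
    """
    out = np.empty_like(frames, dtype=np.float64)
    running = None
    for t, frame in enumerate(frames):
        mean = float(frame.mean())
        if running is None or abs(mean - running) > jump_thresh * max(running, 1e-8):
            # First frame or large change: restart the estimate instead of smoothing across it.
            running = mean
        else:
            # Exponential moving average of per-frame brightness.
            running = alpha * running + (1.0 - alpha) * mean
        # Rescale the frame so its mean brightness follows the smoothed curve.
        gain = running / max(mean, 1e-8)
        out[t] = np.clip(frame * gain, 0.0, 1.0)
    return out
```

The reset rule is what makes the smoothing "adaptive" in this sketch. Small frame-to-frame fluctuations are averaged out, while large, persistent brightness changes pass through unchanged.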

Atmospheric turbulence removal using convolutional neural network

Dec 22, 2019

Abstract:This paper describes a novel deep learning-based method for mitigating the effects of atmospheric distortion. We have built an end-to-end supervised convolutional neural network (CNN) to reconstruct turbulence-corrupted video sequences. Our framework is built on the residual learning concept, where the spatio-temporal distortions are learnt and predicted. Our experiments demonstrate that the proposed method can simultaneously deblur, remove ripple effects and enhance the contrast of video sequences. Our model was trained and tested with both simulated and real distortions. Experimental results on real distortions show that our method outperforms existing ones by up to 3.8% in terms of the quality of restored images, and that with a GPU implementation it runs up to 23 times faster than state-of-the-art methods.
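In residual learning, the network predicts the distortion rather than the clean frame. The training target is the difference between the clean and distorted frames, and the restored frame is the distorted input plus the prediction. The sketch below shows only that bookkeeping. It uses a hypothetical `predict_residual` stand-in for the CNN (a temporal mean over neighbouring frames, not the paper's network) and assumes a mean-squared-error training objective.

```python
import numpy as np

def predict_residual(stack: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for the CNN: (T, H, W) frames -> (H, W) residual for the centre frame.

    A real model is learnt; the temporal mean minus the centre frame is used here
    purely so that the example runs.
    """
    centre = stack[stack.shape[0] // 2]
    return stack.mean(axis=0) - centre

def restore(stack: np.ndarray) -> np.ndarray:
    """Residual learning: output = distorted centre frame + predicted residual."""
    centre = stack[stack.shape[0] // 2]
    return centre + predict_residual(stack)

def residual_loss(stack: np.ndarray, clean: np.ndarray) -> float:
    """MSE between the predicted residual and the target residual (clean - distorted)."""
    target = clean - stack[stack.shape[0] // 2]
    return float(np.mean((predict_residual(stack) - target) ** 2))
```

Because `(centre + prediction) - clean` equals `prediction - target`, the MSE on the residual is identical to the MSE on the restored frame. The residual formulation changes what the network has to represent (a small correction), not the objective itself.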

The application of Convolutional Neural Networks to Detect Slow, Sustained Deformation in InSAR Timeseries

Sep 05, 2019

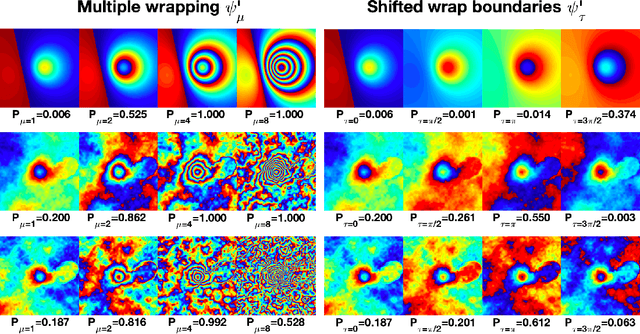

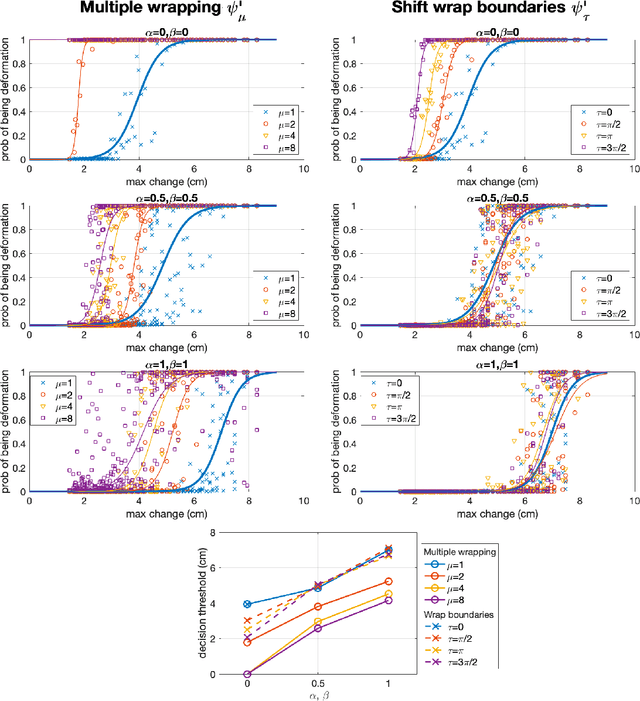

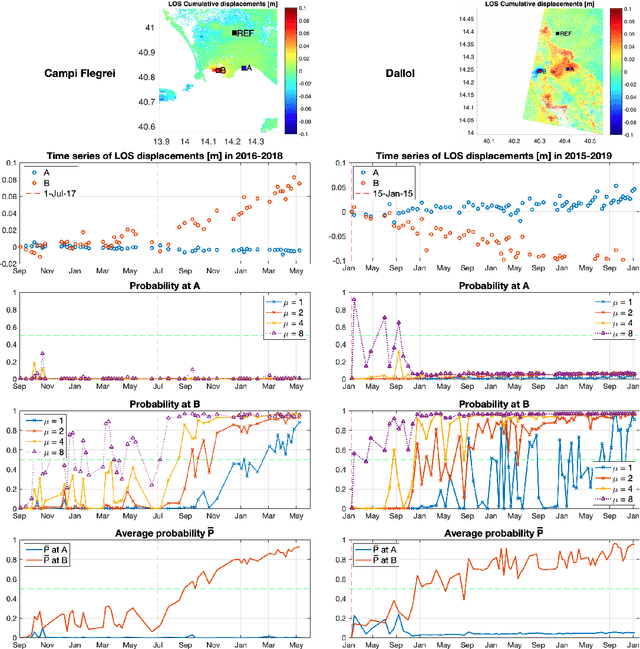

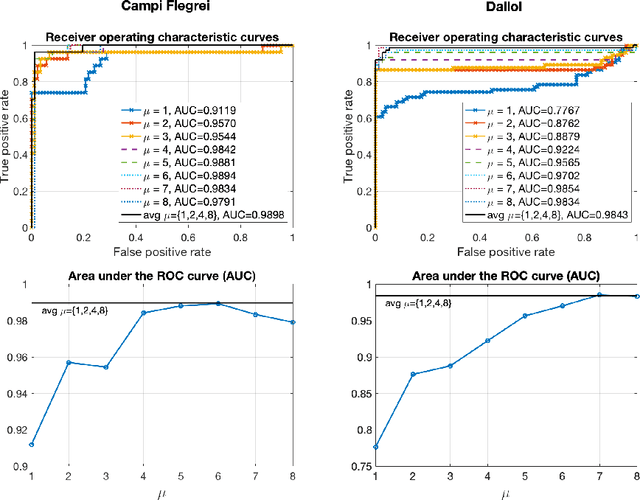

Abstract:Automated systems for detecting deformation in satellite InSAR imagery could be used to develop a global monitoring system for volcanic and urban environments. Here we explore the limits of a CNN for detecting slow, sustained deformation in wrapped interferograms. Using synthetic data, we estimate a detection threshold of 3.9 cm for deformation signals alone, and 6.3 cm when atmospheric artefacts are considered. Over-wrapping reduces these thresholds to 1.8 cm and 5.0 cm respectively, as more fringes are generated without altering the SNR. We test the approach on timeseries of cumulative deformation from Campi Flegrei and Dallol, where over-wrapping improves classification performance by up to 15%. We propose a mean-filtering method for combining the results of different wrap parameters to flag deformation. At Campi Flegrei, deformation of 8.5 cm/yr was detected after 60 days, and at Dallol, deformation of 3.5 cm/yr was detected after 310 days. These correspond to cumulative displacements of 3 cm and 4 cm, consistent with estimates based on synthetic data.
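Wrapping maps phase into [-π, π), and each full 2π cycle produces one fringe in the interferogram. One reading of "over-wrapping", assumed in the sketch below, is to convert line-of-sight displacement to phase as if the wavelength were smaller by a wrap factor. This multiplies the number of fringes, and because any noise in the displacement is scaled by the same factor, the SNR is unchanged. The sketch assumes the standard repeat-pass relation φ = 4πd/λ (sign convention ignored) and a C-band wavelength of about 5.55 cm, as for Sentinel-1. The `wrap_factor` name stands in for the abstract's "wrap parameter" and is hypothetical.

```python
import numpy as np

C_BAND_WAVELENGTH_M = 0.0555  # approximate Sentinel-1 C-band wavelength (assumption for this example)

def wrap(phase: np.ndarray) -> np.ndarray:
    """Wrap phase into the interval [-pi, pi)."""
    return (phase + np.pi) % (2 * np.pi) - np.pi

def displacement_to_wrapped_phase(disp_m: np.ndarray, wrap_factor: float = 1.0,
                                  wavelength_m: float = C_BAND_WAVELENGTH_M) -> np.ndarray:
    """Convert line-of-sight displacement (metres) to wrapped phase.

    wrap_factor > 1 'over-wraps': the phase is scaled up, so more fringes appear.
    Noise in disp_m is scaled by the same factor, so the SNR is unchanged.
    """
    phase = 4 * np.pi * disp_m / wavelength_m * wrap_factor
    return wrap(phase)

def fringe_count(disp_m: float, wrap_factor: float = 1.0,
                 wavelength_m: float = C_BAND_WAVELENGTH_M) -> float:
    """Number of 2*pi cycles spanned by a displacement of disp_m metres."""
    return 2 * disp_m / wavelength_m * wrap_factor

if __name__ == "__main__":
    # A 3 cm displacement spans about one fringe at the native wavelength, and more when over-wrapped.
    print(fringe_count(0.03), fringe_count(0.03, wrap_factor=3))
```

Generating several wrapped images with different `wrap_factor` values and combining the classifier outputs would be one way to realise the mean-filtering idea mentioned above, though the paper's exact parameters are not given here.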

DefectNET: multi-class fault detection on highly-imbalanced datasets

Apr 02, 2019

Abstract:As a data-driven method, the performance of deep convolutional neural networks (CNNs) relies heavily on training data. The predictions of traditional networks are biased toward larger classes, which tend to be the background in semantic segmentation tasks. This becomes a major problem for fault detection, where the targets appear very small in the images and vary in both type and size. In this paper we propose a new network architecture, DefectNet, that offers multi-class detection, including but not limited to defect detection, on highly imbalanced datasets. DefectNet consists of two parallel paths, a fully convolutional network and a dilated convolutional network, which detect large and small objects respectively. We propose a hybrid loss that combines the strengths of a Dice loss and a cross-entropy loss, and we also employ the leaky rectified linear unit (ReLU) to deal with the rare occurrence of some targets in training batches. The prediction results show that DefectNet outperforms state-of-the-art networks for detecting multi-class defects, with an average accuracy improvement of approximately 10% on a wind turbine.
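The abstract does not give the exact form of the hybrid loss. The sketch below assumes a common construction: a weighted sum of pixel-wise cross-entropy and multi-class soft Dice loss. The Dice term is computed per class and then averaged, so a small defect class counts as much as the large background class, while cross-entropy is dominated by the most frequent pixels. The weight `lam` and the function names are hypothetical.

```python
import numpy as np

def softmax(logits: np.ndarray) -> np.ndarray:
    """Softmax over the class axis (last axis)."""
    z = logits - logits.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def hybrid_loss(logits: np.ndarray, labels: np.ndarray, lam: float = 0.5, eps: float = 1e-6) -> float:
    """Weighted sum of cross-entropy and soft Dice loss.

    logits: (N, K) per-pixel class scores; labels: (N,) integer class ids in [0, K).
    """
    n, k = logits.shape
    probs = softmax(logits)
    onehot = np.eye(k)[labels]  # (N, K)

    # Pixel-wise cross-entropy: dominated by frequent classes such as background.
    ce = -np.mean(np.log(probs[np.arange(n), labels] + eps))

    # Soft Dice per class, then averaged: each class contributes equally regardless of size.
    inter = (probs * onehot).sum(axis=0)
    denom = probs.sum(axis=0) + onehot.sum(axis=0)
    dice = (2 * inter + eps) / (denom + eps)
    dice_loss = 1.0 - dice.mean()

    return float(lam * ce + (1.0 - lam) * dice_loss)
```

For segmentation maps, the (H, W, K) logits and (H, W) labels would be flattened to (H*W, K) and (H*W,) before calling `hybrid_loss`.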

Atmospheric turbulence mitigation for sequences with moving objects using recursive image fusion

Aug 10, 2018

Abstract:This paper describes a new method for mitigating the effects of atmospheric distortion on observed sequences that include large moving objects. In order to provide accurate detail from objects behind the distorting layer, we solve the space-variant distortion problem using recursive image fusion based on the Dual Tree Complex Wavelet Transform (DT-CWT). The moving objects are detected and tracked using the improved Gaussian mixture models (GMM) and Kalman filtering. New fusion rules are introduced which work on the magnitudes and angles of the DT-CWT coefficients independently to achieve a sharp image and to reduce atmospheric distortion, respectively. The subjective results show that the proposed method achieves better video quality than other existing methods with competitive speed.
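The fusion rules themselves are not given in the abstract. The sketch below is a simplified illustration only. It assumes complex subband coefficients have already been computed for each registered frame by some complex wavelet transform; the DT-CWT itself is not implemented here. Magnitude and angle are treated separately, as the abstract describes. For each coefficient, the largest magnitude across frames is kept to favour sharp detail. The angles are combined by their circular mean to average out turbulence-induced shifts, which show up as phase changes. This is not the paper's exact rule set, and `fuse_subband` is a hypothetical name.

```python
import numpy as np

def fuse_subband(coeffs: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Fuse complex coefficients from several frames.

    coeffs: complex array of shape (T, H, W), one subband from T registered frames.
    Returns a complex (H, W) array.
    """
    mags = np.abs(coeffs)
    # Magnitude rule: keep the strongest response per coefficient (sharpness).
    fused_mag = mags.max(axis=0)
    # Angle rule: circular mean of the phases (angle of the summed unit phasors),
    # which is less sensitive to random phase shifts than any single frame.
    unit = coeffs / np.maximum(mags, eps)
    fused_angle = np.angle(unit.sum(axis=0))
    return fused_mag * np.exp(1j * fused_angle)
```

Averaging unit phasors rather than raw angles avoids the wrap-around problem, since angles of 0.99π and -0.99π should average to about π, not 0.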

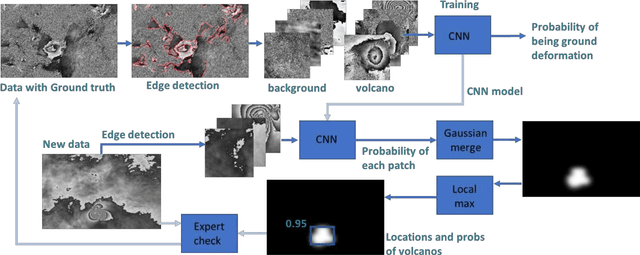

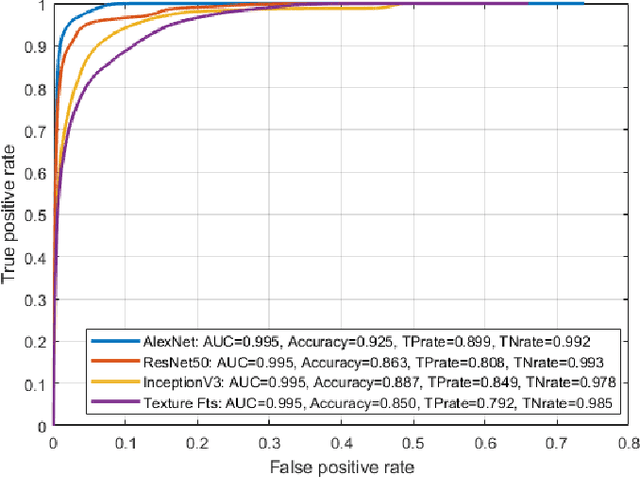

Detecting Volcano Deformation in InSAR using Deep learning

Jan 21, 2018

Abstract:Globally, 800 million people live within 100 km of a volcano and currently 1500 volcanoes are considered active, but half of these have no ground-based monitoring. Instead, satellite radar (InSAR) can be employed to observe volcanic ground deformation, which has shown a significant statistical link to eruptions. Modern satellites provide large coverage with high-resolution signals, leading to huge amounts of data. This explosion in data has brought major challenges associated with the timely dissemination of information and with distinguishing volcano deformation patterns from noise, which currently relies on manual inspection. Moreover, volcano observatories still lack the expertise to exploit satellite datasets, particularly in developing countries. This paper presents a novel approach to detect volcanic ground deformation automatically from wrapped-phase InSAR images. Convolutional neural networks (CNNs) are employed to detect unusual patterns within the radar data.

Automatic Leaf Extraction from Outdoor Images

Sep 19, 2017

Abstract:Automatic plant recognition and disease analysis may be streamlined by using an image of a complete, isolated leaf as an initial input. Segmenting leaves from natural images is a hard problem. Cluttered and complex backgrounds, often composed of other leaves, are commonplace. Furthermore, leaf appearance is highly dependent upon illumination and viewing perspective. To address these issues, we propose a methodology that exploits the leaves' venous systems in tandem with other low-level features. Background and leaf markers are created using colour, intensity and texture. Two approaches, watershed and graph cut, are investigated and their results compared. Primary-secondary vein detection and protrusion-notch removal are applied to refine the extracted leaf. The efficacy of our approach is demonstrated against existing work.
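The abstract says background and leaf markers are built from colour, intensity and texture, without giving the rules. The sketch below is an assumed, colour-only illustration. It uses the excess-green index, ExG = 2g - r - b on chromaticity-normalised channels, which is a widely used vegetation cue. Pixels well above one threshold become leaf markers and pixels well below another become background markers. The uncertain band in between is left unlabelled for the watershed or graph-cut step to resolve. The thresholds and function names are hypothetical.

```python
import numpy as np

def excess_green(rgb: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """ExG = 2g - r - b on chromaticity-normalised channels of an (H, W, 3) image."""
    rgb = rgb.astype(np.float64)
    total = rgb.sum(axis=-1, keepdims=True) + eps
    r, g, b = np.moveaxis(rgb / total, -1, 0)
    return 2 * g - r - b

def make_markers(rgb: np.ndarray, leaf_thresh: float = 0.3, bg_thresh: float = 0.05) -> np.ndarray:
    """Return a label image: 1 = leaf marker, 2 = background marker, 0 = undecided."""
    exg = excess_green(rgb)
    markers = np.zeros(exg.shape, dtype=np.int32)
    markers[exg >= leaf_thresh] = 1
    markers[exg <= bg_thresh] = 2
    return markers
```

Using 0 for undecided pixels matches the convention of marker-based watershed implementations such as `skimage.segmentation.watershed(image, markers)`, where zero-labelled pixels are filled in by flooding from the labelled seeds.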
