D. R. Bull

Atmospheric Turbulence Removal with Video Sequence Deep Visual Priors

Feb 29, 2024

Abstract:Atmospheric turbulence poses a challenge for the interpretation and visual perception of visual imagery due to its distortion effects. Model-based approaches have been used to address this, but such methods often suffer from artefacts associated with moving content. Conversely, deep learning based methods are dependent on large and diverse datasets that may not effectively represent any specific content. In this paper, we address these problems with a self-supervised learning method that does not require ground truth. The proposed method is not dependent on any dataset outside of the single data sequence being processed but is also able to improve the quality of any input raw sequences or pre-processed sequences. Specifically, our method is based on an accelerated Deep Image Prior (DIP), but integrates temporal information using pixel shuffling and a temporal sliding window. This efficiently learns spatio-temporal priors leading to a system that effectively mitigates atmospheric turbulence distortions. The experiments show that our method improves visual quality results qualitatively and quantitatively.
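The abstract above mentions pixel shuffling and a temporal sliding window without giving details. The following is a minimal sketch of what those two operations could look like, not the authors' implementation. The function names, the clamped edge handling, and the reading of "pixel shuffling" as a space-to-depth rearrangement are all assumptions made for illustration.

```python
def sliding_windows(num_frames, window):
    """Yield (centre, frame_indices) for a centred temporal window.

    Indices are clamped at the sequence boundaries. This edge handling is
    an illustrative assumption, not something stated in the abstract.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    for t in range(num_frames):
        yield t, [min(max(t + k, 0), num_frames - 1)
                  for k in range(-half, half + 1)]


def pixel_unshuffle(frame, r):
    """Rearrange an H x W frame (list of rows) into r*r sub-images.

    Each sub-image has size (H/r) x (W/r) and collects the pixels that
    share the same offset (dy, dx) inside every r x r cell.
    """
    h, w = len(frame), len(frame[0])
    if h % r or w % r:
        raise ValueError("frame dimensions must be divisible by r")
    return [
        [[frame[y * r + dy][x * r + dx] for x in range(w // r)]
         for y in range(h // r)]
        for dy in range(r)
        for dx in range(r)
    ]
```

In a sketch like this, each window of neighbouring frames would be stacked as the input for one optimisation step, and the rearrangement trades spatial resolution for extra channels so that each step works on smaller images.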

Transform and Bitstream Domain Image Classification

Oct 13, 2021

Abstract:Classification of images within the compressed domain offers significant benefits. These benefits include reduced memory and computational requirements of a classification system. This paper proposes two such methods as a proof of concept: the first classifies within the JPEG image transform domain (i.e. DCT transform data); the second classifies the JPEG compressed binary bitstream directly. These two methods are implemented using Residual Network CNNs and an adapted Vision Transformer. Top-1 accuracies of approximately 70% and 60% were achieved using these methods, respectively, when classifying the Caltech C101 database. Although these results are significantly behind the state of the art for classification on this database (~95%), they represent the first time direct bitstream image classification has been achieved. This work confirms that direct bitstream image classification is possible and could be utilised in a first pass database screening of a raw bitstream (within a wired or wireless network) or where computational, memory and bandwidth requirements are severely restricted.

Semantic Image Fusion

Oct 13, 2021

Abstract:Image fusion methods and metrics for their evaluation have conventionally used pixel-based or low-level features. However, for many applications, the aim of image fusion is to effectively combine the semantic content of the input images. This paper proposes a novel system for the semantic combination of visual content using pre-trained CNN network architectures. Our proposed semantic fusion is initiated through the fusion of the top layer feature map outputs (for each input image) through gradient updating of the fused image input (so-called image optimisation). Simple "choose maximum" and "local majority" filter based fusion rules are utilised for feature map fusion. This provides a simple method to combine layer outputs and thus a unique framework to fuse single-channel and colour images within a decomposition pre-trained for classification and therefore aligned with semantic fusion. Furthermore, class activation mappings of each input image are used to combine semantic information at a higher level. The developed methods are able to give equivalent low-level fusion performance to state-of-the-art methods while providing a unique architecture to combine semantic information from multiple images.
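As a rough illustration of the "choose maximum" rule named in the abstract above, the sketch below fuses two same-sized feature maps element by element. It assumes non-negative activations, so a plain maximum is used, and the function name `choose_max_fusion` is hypothetical. The "local majority" filter and the gradient-based image optimisation step are not shown.

```python
def choose_max_fusion(map_a, map_b):
    """Fuse two 2-D feature maps by keeping the larger value at each position.

    Both maps are lists of rows with identical shape. Using a plain maximum
    (rather than the largest absolute value) is an assumption that suits
    non-negative activations.
    """
    same_rows = len(map_a) == len(map_b)
    if not same_rows or any(len(ra) != len(rb) for ra, rb in zip(map_a, map_b)):
        raise ValueError("feature maps must have the same shape")
    return [[max(a, b) for a, b in zip(ra, rb)]
            for ra, rb in zip(map_a, map_b)]


# Example with two small hypothetical feature maps.
fused = choose_max_fusion([[0.1, 0.9], [0.4, 0.0]],
                          [[0.3, 0.2], [0.4, 0.7]])
```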

HABNet: Machine Learning, Remote Sensing Based Detection and Prediction of Harmful Algal Blooms

Dec 04, 2019

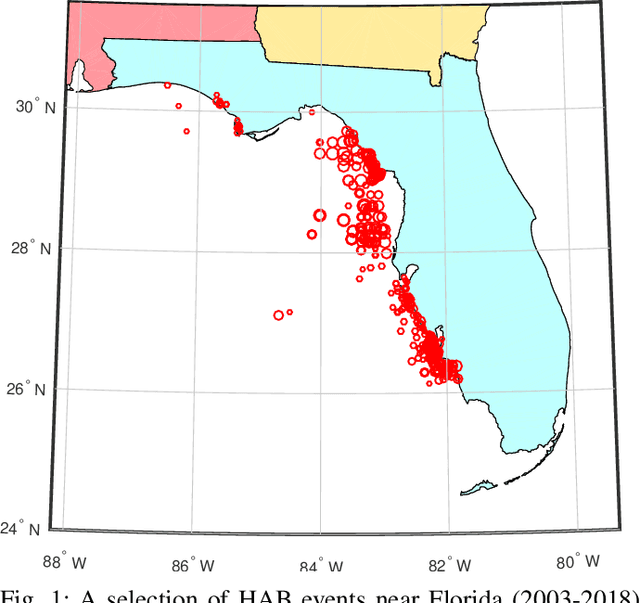

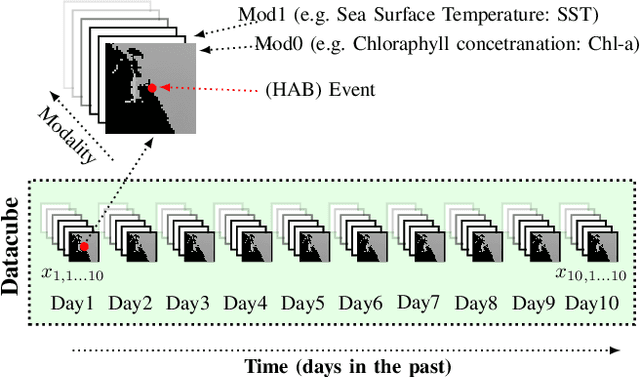

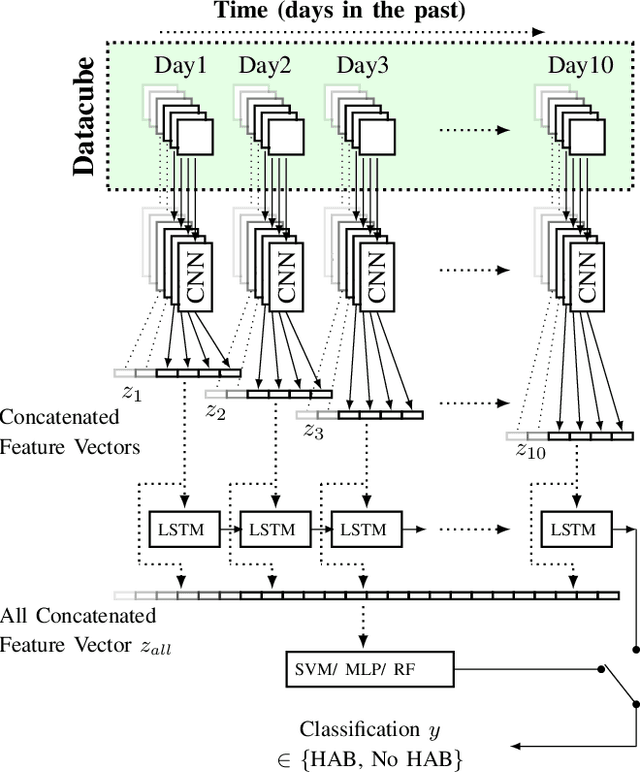

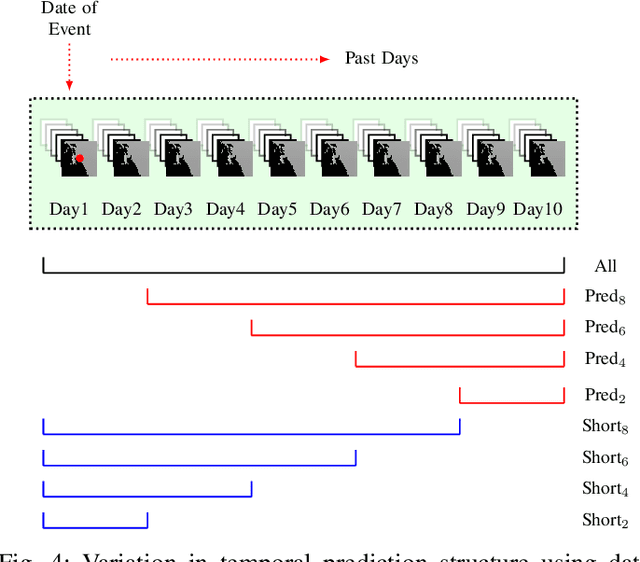

Abstract:This paper describes the application of machine learning techniques to develop a state-of-the-art detection and prediction system for spatiotemporal events found within remote sensing data; specifically, Harmful Algal Bloom events (HABs). HABs cause a large variety of human health and environmental issues together with associated economic impacts. This work has focused specifically on the case study of the detection of Karenia brevis algae (K. brevis) HAB events within the coastal waters of Florida (over 2850 events from 2003 to 2018: an order of magnitude larger than any previous machine learning detection study into HAB events). The development of multimodal spatiotemporal datacube data structures and associated novel machine learning methods give a unique architecture for the automatic detection of environmental events. Specifically, when applied to the detection of HAB events it gives a maximum detection accuracy of 91% and a Kappa coefficient of 0.81 for the Florida data considered. A HAB prediction system was also developed where a temporal subset of each datacube was used to forecast the presence of a HAB in the future. This system was not significantly less accurate than the detection system, being able to predict with 86% accuracy up to 8 days in the future. The same datacube and machine learning structure were also applied to a more limited database of multi-species HAB events within the Arabian Gulf. The results for this additional study gave a classification accuracy of 93% and a Kappa coefficient of 0.83.
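The abstract above reports a Kappa coefficient alongside accuracy. For readers unfamiliar with it, the sketch below computes Cohen's kappa from a confusion matrix, assuming that is the Kappa variant meant. The matrix used in the example is hypothetical and is not data from the paper.

```python
def cohens_kappa(confusion):
    """Compute Cohen's kappa from a square confusion matrix.

    confusion[i][j] counts samples of true class i predicted as class j.
    Kappa is (p_o - p_e) / (1 - p_e), where p_o is the observed agreement
    (accuracy) and p_e is the agreement expected by chance.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    if total == 0:
        raise ValueError("confusion matrix is empty")
    observed = sum(confusion[i][i] for i in range(n)) / total
    row_totals = [sum(row) for row in confusion]
    col_totals = [sum(confusion[i][j] for i in range(n)) for j in range(n)]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)


# Hypothetical balanced two-class example: 90% accuracy, 50% chance agreement.
kappa = cohens_kappa([[45, 5], [5, 45]])
```

Because kappa discounts agreement expected by chance, it can sit well below the raw accuracy when classes are imbalanced.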
