Simon Andermatt

Department of Biomedical Engineering, University of Basel, Basel, Switzerland

MRI lung lobe segmentation in pediatric cystic fibrosis patients using a recurrent neural network trained with publicly accessible CT datasets

Aug 31, 2021

Abstract:Purpose: To introduce a widely applicable workflow for pulmonary lobe segmentation of MR images using a recurrent neural network (RNN) trained with chest computed tomography (CT) datasets. The feasibility is demonstrated for 2D coronal ultra-fast balanced steady-state free precession (ufSSFP) MRI. Methods: Lung lobes of 250 publicly accessible CT datasets of adults were segmented with an open-source CT-specific algorithm. To match 2D ufSSFP MRI data of pediatric patients, both CT data and segmentations were translated into pseudo-MR images, masked to suppress anatomy outside the lung. Network-1 was trained with pseudo-MR images and lobe segmentations, and applied to 1000 masked ufSSFP images to predict lobe segmentations. These outputs were directly used as targets to train Network-2 and Network-3 with non-masked ufSSFP data as inputs, and an additional whole-lung mask as input for Network-2. Network predictions were compared to reference manual lobe segmentations of ufSSFP data in twenty pediatric cystic fibrosis patients. Manual lobe segmentations were performed by splitting available whole-lung segmentations into lobes. Results: Network-1 was able to segment the lobes of ufSSFP images, and Network-2 and Network-3 further increased segmentation accuracy and robustness. The average all-lobe Dice similarity coefficients were 95.0$\pm$2.3 (mean$\pm$pooled SD [%]), 96.4$\pm$1.2, 93.0$\pm$1.8, and the average median Hausdorff distances were 6.1$\pm$0.9 (mean$\pm$SD [mm]), 5.3$\pm$1.1, 7.1$\pm$1.3, for Network-1, Network-2, and Network-3, respectively. Conclusions: RNN lung lobe segmentation of 2D ufSSFP imaging is feasible, in good agreement with manual segmentations. The proposed workflow might provide rapid access to automated lobe segmentations for various lung MRI examinations and quantitative analyses.
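
The two agreement measures reported above can be illustrated with a minimal sketch. It assumes each lobe mask is given as a set of (row, column) pixel coordinates and uses the classic maximum-based Hausdorff distance in pixel units; the per-lobe averaging and the "median Hausdorff" aggregation mentioned in the abstract are not reproduced here, and the toy masks are hypothetical.

```python
import math


def dice(a, b):
    """Dice similarity coefficient between two sets of pixel coordinates."""
    if not a and not b:
        return 1.0  # two empty masks agree perfectly by convention
    return 2.0 * len(a & b) / (len(a) + len(b))


def directed_hausdorff(a, b):
    """Largest distance from a point in a to its nearest point in b."""
    return max(min(math.dist(p, q) for q in b) for p in a)


def hausdorff(a, b):
    """Symmetric Hausdorff distance between two non-empty point sets."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))


# Hypothetical toy masks: a 4x4 square and the same square shifted one column.
manual = {(r, c) for r in range(4) for c in range(4)}
predicted = {(r, c) for r in range(4) for c in range(1, 5)}

print(dice(manual, predicted))       # overlap-based agreement in [0, 1]
print(hausdorff(manual, predicted))  # worst-case boundary mismatch in pixels
```

Multiplying the pixel distance by the in-plane resolution would give a value in millimetres, which is the unit used in the abstract.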

Recurrent Registration Neural Networks for Deformable Image Registration

Jun 07, 2019

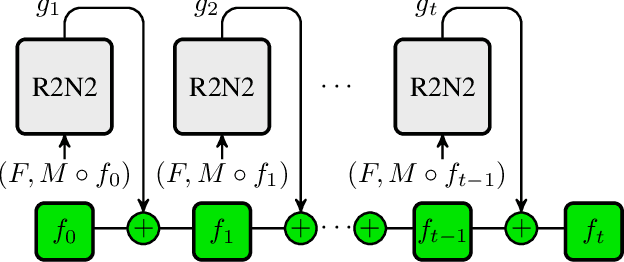

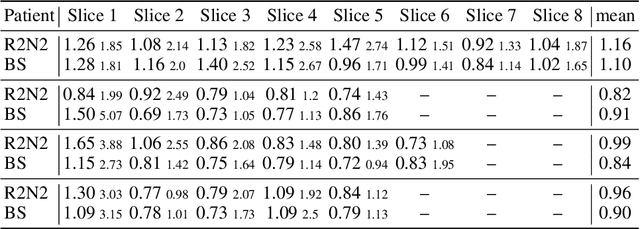

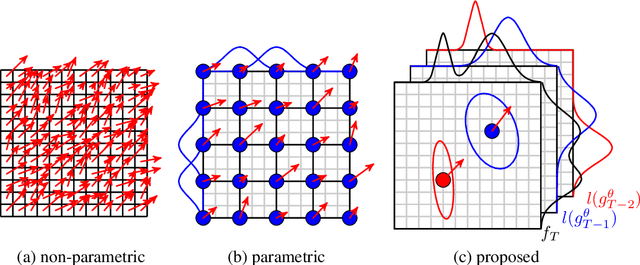

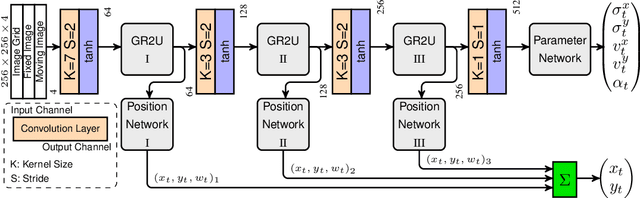

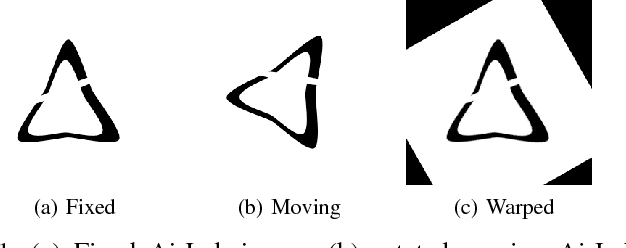

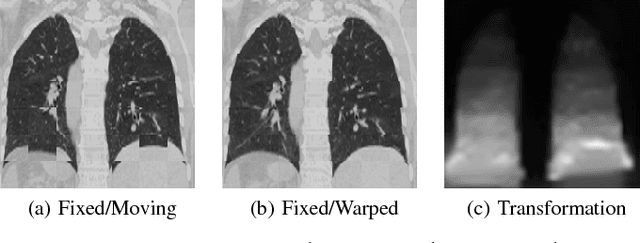

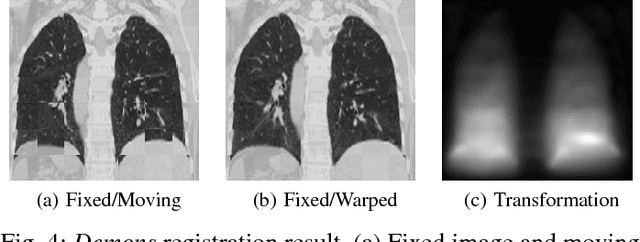

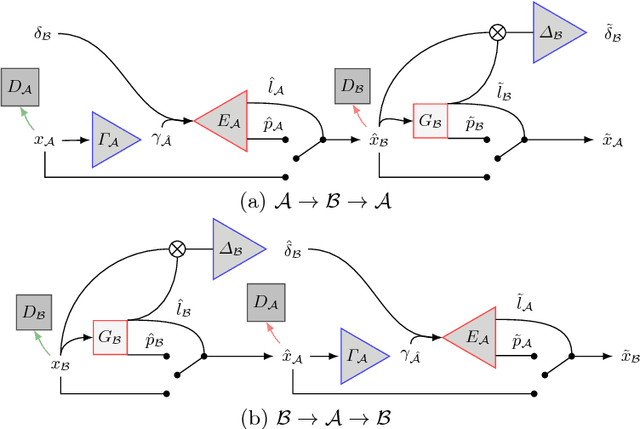

Abstract:Parametric spatial transformation models have been successfully applied to image registration tasks. In such models, the transformation of interest is parameterized by a fixed set of basis functions, for example B-splines. Each basis function is located at a fixed position on a regular grid across the image domain, because the transformation of interest is not known in advance. As a consequence, not all basis functions will necessarily contribute to the final transformation, which results in a non-compact representation of the transformation. We reformulate the pairwise registration problem as a recursive sequence of successive alignments. For each element in the sequence, a local deformation defined by its position, shape, and weight is computed by our recurrent registration neural network. The sum of all local deformations yields the final spatial alignment of both images. Formulating the registration problem in this way allows the network to detect non-aligned regions in the images and to learn how to locally refine the registration properly. In contrast to current non-sequence-based registration methods, our approach iteratively applies local spatial deformations to the images until the desired registration accuracy is achieved. We trained our network on 2D magnetic resonance images of the lung and compared our method to a standard parametric B-spline registration. The experiments show that our method performs on par in terms of accuracy but yields a more compact representation of the transformation. Furthermore, we achieve a speedup by a factor of around 15 compared to the B-spline registration.
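
The idea of composing a transformation as a sum of local deformations, each described by a position, a shape, and a weight, can be sketched in one dimension. The Gaussian kernel, the `LocalDeformation` class, and all numeric values below are assumptions made for illustration; the abstract does not specify the kernel, and in the paper the parameters are predicted by the recurrent network rather than set by hand.

```python
import math
from dataclasses import dataclass


@dataclass
class LocalDeformation:
    position: float  # centre of the deformation on the 1D image axis
    shape: float     # width (sigma) of the assumed Gaussian kernel
    weight: float    # signed displacement at the centre

    def displacement(self, x):
        """Displacement contributed by this local deformation at x."""
        z = (x - self.position) / self.shape
        return self.weight * math.exp(-0.5 * z * z)


def total_displacement(x, sequence):
    """Final displacement at x: the sum over all local deformations."""
    return sum(d.displacement(x) for d in sequence)


# Hypothetical sequence of two local deformations.
sequence = [
    LocalDeformation(position=2.0, shape=1.0, weight=0.5),
    LocalDeformation(position=6.0, shape=0.5, weight=-0.25),
]
field = [total_displacement(float(x), sequence) for x in range(9)]
print(field)
```

Only two parameter triples describe the whole field here, which hints at why such a representation can be more compact than a dense grid of basis functions.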

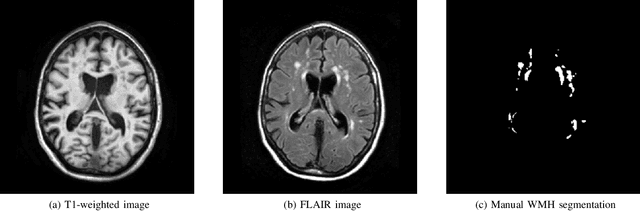

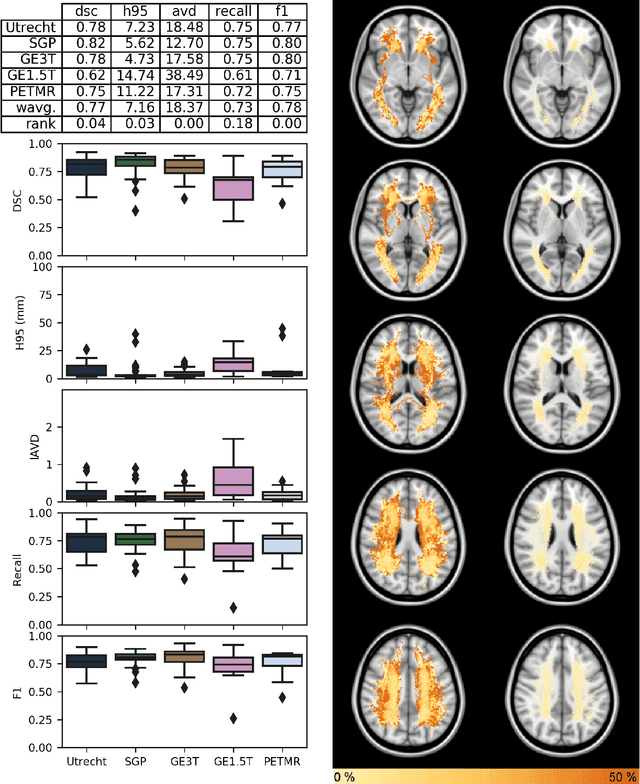

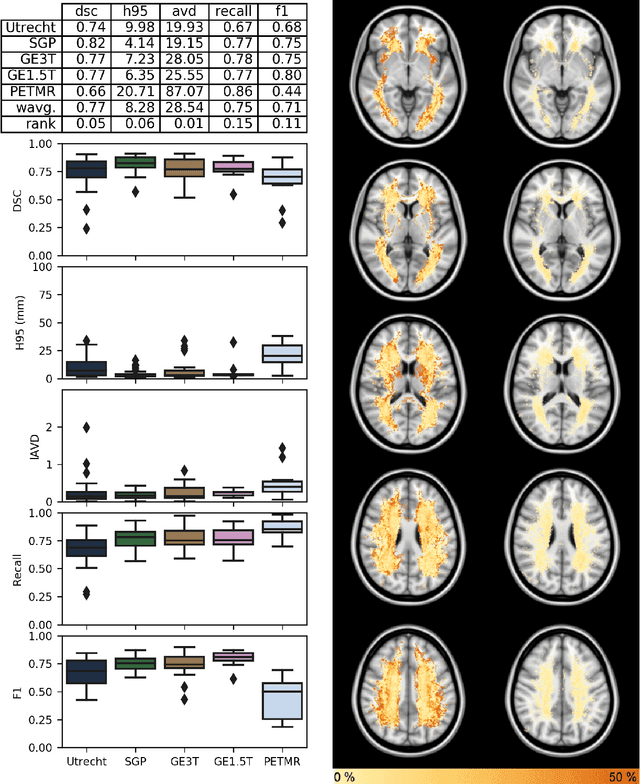

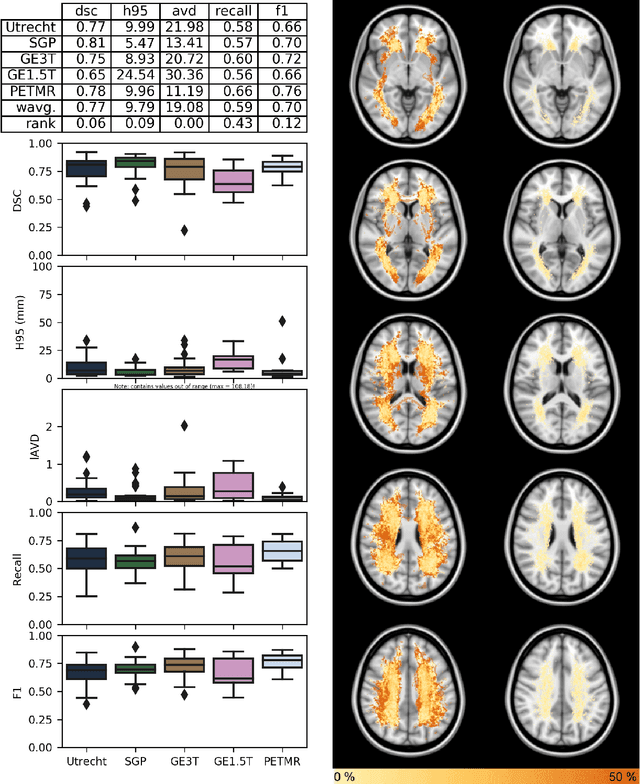

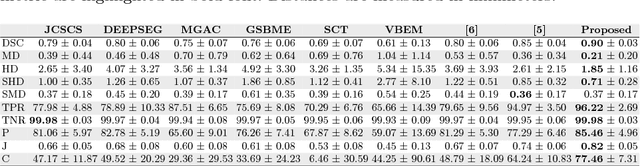

Standardized Assessment of Automatic Segmentation of White Matter Hyperintensities and Results of the WMH Segmentation Challenge

Apr 01, 2019

Abstract:Quantification of cerebral white matter hyperintensities (WMH) of presumed vascular origin is of key importance in many neurological research studies. Currently, measurements are often still obtained from manual segmentations on brain MR images, which is a laborious procedure. Automatic WMH segmentation methods exist, but a standardized comparison of the performance of such methods is lacking. We organized a scientific challenge, in which developers could evaluate their method on a standardized multi-center/-scanner image dataset, giving an objective comparison: the WMH Segmentation Challenge (https://wmh.isi.uu.nl/). Sixty T1+FLAIR images from three MR scanners were released with manual WMH segmentations for training. A test set of 110 images from five MR scanners was used for evaluation. Segmentation methods had to be containerized and submitted to the challenge organizers. Five evaluation metrics were used to rank the methods: (1) Dice similarity coefficient, (2) modified Hausdorff distance (95th percentile), (3) absolute log-transformed volume difference, (4) sensitivity for detecting individual lesions, and (5) F1-score for individual lesions. Additionally, methods were ranked on their inter-scanner robustness. Twenty participants submitted their method for evaluation. This paper provides a detailed analysis of the results. In brief, there is a cluster of four methods that rank significantly better than the other methods, with one clear winner. The inter-scanner robustness ranking shows that not all methods generalize to unseen scanners. The challenge remains open for future submissions and provides a public platform for method evaluation.
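
Two of the five ranking metrics, lesion-wise sensitivity and lesion-wise F1-score, can be sketched as follows. This is a simplified illustration, not the challenge's evaluation code: it assumes 2D masks given as sets of (row, column) pixels, 4-connectivity to define individual lesions, and "any overlap" as the detection criterion. All three choices are assumptions.

```python
def connected_components(pixels):
    """Group a set of (row, col) pixels into 4-connected components."""
    remaining = set(pixels)
    components = []
    while remaining:
        stack = [remaining.pop()]
        component = set(stack)
        while stack:
            r, c = stack.pop()
            for n in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                if n in remaining:
                    remaining.remove(n)
                    component.add(n)
                    stack.append(n)
        components.append(component)
    return components


def lesion_scores(reference, prediction):
    """Return (lesion-wise sensitivity, lesion-wise F1) for two masks."""
    ref_lesions = connected_components(reference)
    pred_lesions = connected_components(prediction)
    # A reference lesion counts as detected if any predicted pixel overlaps it.
    detected = sum(1 for lesion in ref_lesions if lesion & prediction)
    # A predicted lesion counts as correct if it overlaps any reference pixel.
    correct = sum(1 for lesion in pred_lesions if lesion & reference)
    recall = detected / len(ref_lesions) if ref_lesions else 1.0
    precision = correct / len(pred_lesions) if pred_lesions else 1.0
    total = precision + recall
    f1 = 2.0 * precision * recall / total if total else 0.0
    return recall, f1


# Hypothetical toy masks: three reference lesions, four predicted lesions.
reference = {(0, 0), (0, 1), (5, 5), (9, 9)}
prediction = {(0, 1), (0, 2), (5, 5), (3, 8), (7, 2)}
recall, f1 = lesion_scores(reference, prediction)
print(recall, f1)
```

Unlike the Dice coefficient, these scores treat a small and a large lesion equally, which is why they are reported alongside the voxel-wise metrics.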

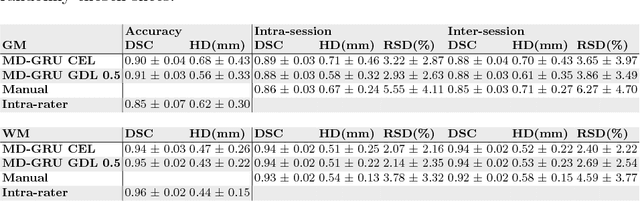

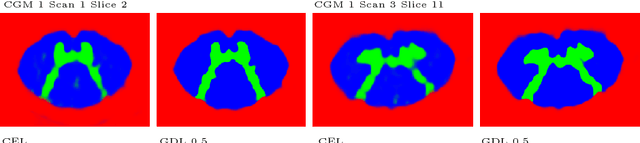

Spinal Cord Gray Matter-White Matter Segmentation on Magnetic Resonance AMIRA Images with MD-GRU

Aug 07, 2018

Abstract:The small butterfly-shaped structure of spinal cord (SC) gray matter (GM) is challenging to image and to delineate from its surrounding white matter (WM). Segmenting GM is, up to a point, a trade-off between accuracy and precision. We propose a new pipeline for GM-WM magnetic resonance (MR) image acquisition and segmentation. We report superior results compared to those recently reported in the SC GM segmentation challenge and show even better results using the averaged magnetization inversion recovery acquisitions (AMIRA) sequence. Scan-rescan experiments with the AMIRA sequence show high reproducibility in terms of Dice coefficient, Hausdorff distance and relative standard deviation. We use a recurrent neural network (RNN) with multi-dimensional gated recurrent units (MD-GRU) to train segmentation models on the AMIRA dataset of 855 slices. We added a generalized Dice loss to the cross-entropy loss that MD-GRU uses and were able to improve the results.
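
The loss combination mentioned at the end of the abstract can be sketched in plain Python. The generalized Dice term below follows the commonly used formulation with inverse squared class-volume weights; summing the two terms with equal weight, the function names, and the toy inputs are assumptions, since the abstract does not state how the terms are balanced. This is not the MD-GRU implementation.

```python
import math


def cross_entropy(probs, labels):
    """Mean negative log-probability of the true class over all pixels."""
    return -sum(math.log(p[y]) for p, y in zip(probs, labels)) / len(labels)


def generalized_dice_loss(probs, labels, num_classes, eps=1e-8):
    """1 - generalized Dice score with inverse squared volume weights."""
    numerator = 0.0
    denominator = 0.0
    for k in range(num_classes):
        ref = [1.0 if y == k else 0.0 for y in labels]
        pred = [p[k] for p in probs]
        # Small classes get large weights; eps guards against empty classes.
        weight = 1.0 / (sum(ref) ** 2 + eps)
        numerator += weight * sum(r * q for r, q in zip(ref, pred))
        denominator += weight * sum(r + q for r, q in zip(ref, pred))
    return 1.0 - 2.0 * numerator / (denominator + eps)


def combined_loss(probs, labels, num_classes):
    """Cross entropy plus generalized Dice loss (equal weighting assumed)."""
    return cross_entropy(probs, labels) + generalized_dice_loss(
        probs, labels, num_classes
    )


# Hypothetical four-pixel example with two classes (0 = WM, 1 = GM).
labels = [0, 0, 0, 1]
perfect = [[1.0, 0.0], [1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
uncertain = [[0.9, 0.1], [0.8, 0.2], [0.7, 0.3], [0.4, 0.6]]
print(combined_loss(perfect, labels, 2))
print(combined_loss(uncertain, labels, 2))
```

The class weighting is what makes the Dice term attractive for a small structure such as GM, where plain cross entropy is dominated by the much larger surrounding class.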

AirLab: Autograd Image Registration Laboratory

Jun 26, 2018

Abstract:Medical image registration is an active research topic and forms a basis for many medical image analysis tasks. Although image registration is a rather general concept, specialized methods are usually required to target a specific registration problem. The development and implementation of such methods has been difficult so far, as the gradient of the objective has to be computed. Also, its evaluation should preferably be performed on a GPU for larger images and for more complex transformation models and regularization terms. This hinders researchers from rapid prototyping and poses hurdles to reproducing research results. There is a clear need for an environment which hides this complexity to put the modeling and the experimental exploration of registration methods into the foreground. With the "Autograd Image Registration Laboratory" (AirLab), we introduce an open laboratory for image registration tasks, where the analytic gradients of the objective function are computed automatically and the device where the computations are performed, on a CPU or a GPU, is transparent. It is meant as a laboratory for researchers and developers, enabling them to rapidly try out new ideas for registering images and to reproduce registration results which have already been published. AirLab is implemented in Python using PyTorch as tensor and optimization library and SimpleITK for basic image IO. Therefore, it profits from recent advances made by the machine learning community concerning optimization and deep neural network models. The present draft of this paper roughly outlines AirLab with first code snippets and performance analyses. A more exhaustive introduction will follow as a final version soon.
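
To show what "gradients computed automatically" buys in a registration setting, here is a dependency-free sketch. It does not use AirLab or PyTorch and does not reflect AirLab's API: instead of PyTorch's reverse-mode autograd it substitutes a tiny forward-mode scheme based on dual numbers, and it registers two hypothetical 1D Gaussian "images" by optimizing a single translation parameter with a sum-of-squared-differences objective.

```python
import math


class Dual:
    """A value together with its derivative w.r.t. one parameter."""

    def __init__(self, value, deriv=0.0):
        self.value, self.deriv = value, deriv

    @staticmethod
    def lift(x):
        return x if isinstance(x, Dual) else Dual(float(x))

    def __add__(self, other):
        other = Dual.lift(other)
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__

    def __sub__(self, other):
        other = Dual.lift(other)
        return Dual(self.value - other.value, self.deriv - other.deriv)

    def __rsub__(self, other):
        return Dual.lift(other) - self

    def __mul__(self, other):
        other = Dual.lift(other)
        return Dual(self.value * other.value,
                    self.deriv * other.value + self.value * other.deriv)

    __rmul__ = __mul__


def exp(x):
    """Exponential of a dual number (chain rule applied to the derivative)."""
    e = math.exp(x.value)
    return Dual(e, e * x.deriv)


def bump(x, centre, sigma=1.5):
    """Gaussian-shaped 1D 'image' intensity at position x."""
    d = x - centre
    return exp(d * d * (-1.0 / (2.0 * sigma ** 2)))


def loss(t):
    """Sum of squared differences between fixed and translated moving image."""
    total = Dual(0.0)
    for i in range(21):
        x = 0.5 * i
        residual = bump(Dual(x), 5.0) - bump(x - t, 3.0)
        total = total + residual * residual
    return total


# Gradient descent on the translation; no derivative is written by hand.
t = 0.0
for _ in range(300):
    t -= 0.1 * loss(Dual(t, 1.0)).deriv
print(t)  # approaches the true shift between the two bumps
```

Changing the objective or the transformation model only requires editing `loss`; the gradient follows automatically, which is the convenience the abstract describes.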

Pathology Segmentation using Distributional Differences to Images of Healthy Origin

May 25, 2018

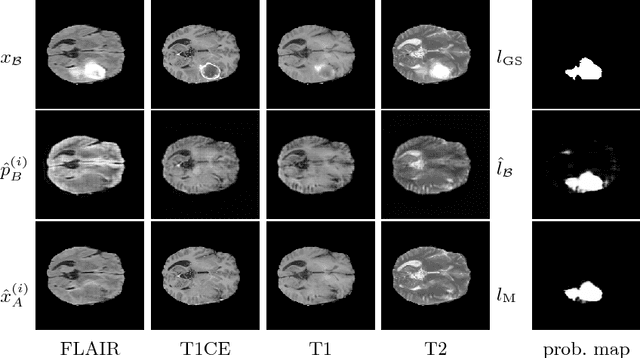

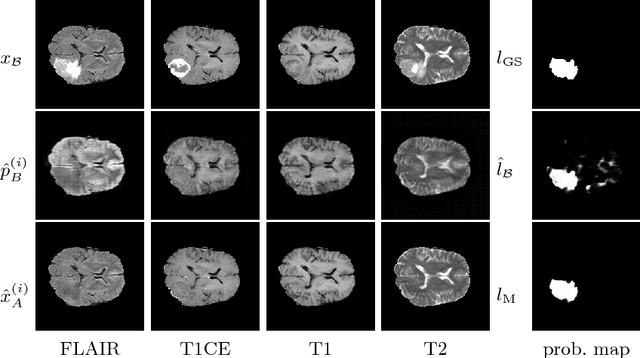

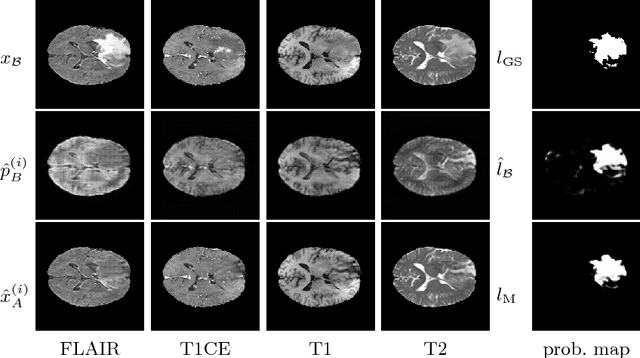

Abstract:We present a method to model pathologies in medical data, trained on data labelled on the image level as healthy or containing a visual defect. Our model not only allows us to create pixelwise semantic segmentations, it is also able to create inpaintings for the segmentations to render the pathological image healthy. Furthermore, we can draw new unseen pathology samples from this model based on the distribution in the data. We show quantitatively that our method is able to segment pathologies with surprising accuracy, and show qualitative results of both the segmentations and inpaintings. A comparison with a supervised segmentation method indicates that the accuracy of our proposed weakly-supervised segmentation is nevertheless quite close.

Multi-dimensional Gated Recurrent Units for Automated Anatomical Landmark Localization

Aug 09, 2017

Abstract:We present an automated method for localizing an anatomical landmark in three-dimensional medical images. The method combines two recurrent neural networks in a coarse-to-fine approach: The first network determines a candidate neighborhood by analyzing the complete given image volume. The second network localizes the actual landmark precisely and accurately in the candidate neighborhood. Both networks take advantage of multi-dimensional gated recurrent units in their main layers, which allow for high model complexity with a comparatively small set of parameters. We localize the medullopontine sulcus in 3D magnetic resonance images of the head and neck. We show that the proposed approach outperforms similar localization techniques both in terms of mean distance in millimeters and voxels w.r.t. manual labelings of the data. With a mean localization error of 1.7 mm, the proposed approach performs on par with neurological experts, as we demonstrate in an interrater comparison.
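
The distinction between a localization error in voxels and one in millimetres can be made concrete with a short sketch. The coordinates, the anisotropic voxel spacing, and the function name are hypothetical and not taken from the paper's data.

```python
import math


def localization_errors(predicted, manual, spacing):
    """Return (error in voxels, error in mm) for one 3D landmark."""
    diff = [p - m for p, m in zip(predicted, manual)]
    voxels = math.sqrt(sum(d * d for d in diff))
    # Scale each axis by its voxel size before taking the Euclidean norm.
    mm = math.sqrt(sum((d * s) ** 2 for d, s in zip(diff, spacing)))
    return voxels, mm


# Hypothetical (predicted, manual) landmark pairs in voxel coordinates.
pairs = [((10, 20, 30), (10, 22, 30)), ((5, 5, 5), (6, 5, 5))]
spacing = (1.0, 0.5, 2.0)  # assumed voxel size in mm along each axis

errors = [localization_errors(p, m, spacing) for p, m in pairs]
mean_voxels = sum(e[0] for e in errors) / len(errors)
mean_mm = sum(e[1] for e in errors) / len(errors)
print(mean_voxels, mean_mm)
```

With anisotropic voxels the two means can rank methods differently, which is a reason to report both, as the abstract does.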
