Sihua Wang

Collaborative Reinforcement Learning Based Unmanned Aerial Vehicle (UAV) Trajectory Design for 3D UAV Tracking

Jan 22, 2024

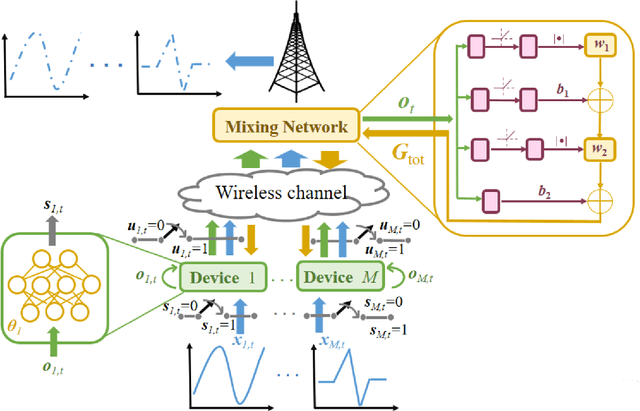

Abstract: In this paper, the problem of using one active unmanned aerial vehicle (UAV) and four passive UAVs to localize a target UAV in 3D space in real time is investigated. In the considered model, each passive UAV receives reflection signals from the target UAV, which are initially transmitted by the active UAV. The received reflection signals allow each passive UAV to estimate the signal transmission distance, which is then sent to a base station (BS) to estimate the position of the target UAV. Due to the movement of the target UAV, each active/passive UAV must optimize its trajectory to continuously localize the target UAV. Meanwhile, since the accuracy of the distance estimation depends on the signal-to-noise ratio of the transmission signals, the active UAV must optimize its transmit power. This problem is formulated as an optimization problem whose goal is to jointly optimize the transmit power of the active UAV and the trajectories of both active and passive UAVs so as to maximize the target UAV positioning accuracy. To solve this problem, a Z function decomposition based reinforcement learning (ZD-RL) method is proposed. Compared to value function decomposition based RL (VD-RL), the proposed method can find the probability distribution of the sum of future rewards and thus estimate the expected value of the sum of future rewards more accurately. This yields a better transmit power for the active UAV and better trajectories for both active and passive UAVs, which improves target UAV positioning accuracy. Simulation results show that the proposed ZD-RL method can reduce the positioning errors by up to 39.4% and 64.6%, compared to VD-RL and independent deep RL methods, respectively.
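
The measurement model described in this abstract can be illustrated with a small sketch. It assumes that the "signal transmission distance" seen by each passive UAV is the path length from the active UAV to the target plus the path length from the target to that passive UAV, and that the BS scores a candidate target position by a sum of squared residuals. The function names and the residual criterion are hypothetical and are not taken from the paper.

```python
import math
from typing import List, Sequence

Point = Sequence[float]  # (x, y, z) coordinates in meters


def bistatic_range(active: Point, target: Point, passive: Point) -> float:
    """Path length active -> target -> passive (assumed measurement model)."""
    return math.dist(active, target) + math.dist(target, passive)


def residual_sum_squares(
    candidate: Point,
    active: Point,
    passives: List[Point],
    measured: List[float],
) -> float:
    """Sum of squared differences between predicted and measured distances.

    A position estimator at the BS could search for the candidate that
    minimizes this value; the search itself is not shown here.
    """
    return sum(
        (bistatic_range(active, candidate, p) - d) ** 2
        for p, d in zip(passives, measured)
    )
```

With noiseless measurements the residual is zero at the true target position and positive for a displaced candidate, which is the property a least-squares estimator would exploit.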

Cross-Layer Federated Learning Optimization in MIMO Networks

Feb 04, 2023

Abstract: In this paper, the performance optimization of federated learning (FL), when deployed over a realistic wireless multiple-input multiple-output (MIMO) communication system with digital modulation and over-the-air computation (AirComp), is studied. In particular, an MIMO system is considered in which edge devices transmit their local FL models (trained using their locally collected data) to a parameter server (PS) using beamforming to maximize the number of devices scheduled for transmission. The PS, acting as a central controller, generates a global FL model using the received local FL models and broadcasts it back to all devices. Due to the limited bandwidth in a wireless network, AirComp is adopted to enable efficient wireless data aggregation. However, fading of wireless channels can produce aggregate distortions in an AirComp-based FL scheme. To tackle this challenge, we propose a modified federated averaging (FedAvg) algorithm that combines digital modulation with AirComp to mitigate wireless fading while preserving communication efficiency. This is achieved by a joint transmit and receive beamforming design, which is formulated as an optimization problem to dynamically adjust the beamforming matrices based on current FL model parameters so as to minimize the transmission error and ensure the FL performance. To achieve this goal, we first analytically characterize how the beamforming matrices affect the performance of FedAvg in different iterations. Based on this relationship, an artificial neural network (ANN) is used to estimate the local FL models of all devices and adjust the beamforming matrices at the PS for future model transmission. The algorithmic advantages and improved performance of the proposed methodologies are demonstrated through extensive numerical experiments.
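
For orientation, the aggregation step that the PS performs can be sketched in its standard, noiseless FedAvg form. This is not the modified algorithm of the paper: the sketch ignores AirComp, digital modulation, fading, and beamforming, and the weighting by local sample counts is an assumption. The function name `fedavg` is hypothetical.

```python
from typing import List


def fedavg(local_models: List[List[float]], num_samples: List[int]) -> List[float]:
    """Weighted average of local model parameter vectors.

    Each local model is weighted by the number of samples it was trained on
    (an assumed weighting; the channel is treated as error-free).
    """
    total = sum(num_samples)
    dim = len(local_models[0])
    return [
        sum(n * model[j] for model, n in zip(local_models, num_samples)) / total
        for j in range(dim)
    ]
```

In an AirComp-based scheme the sum inside this average would be formed by signal superposition on the wireless channel, which is why fading distorts the aggregate.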

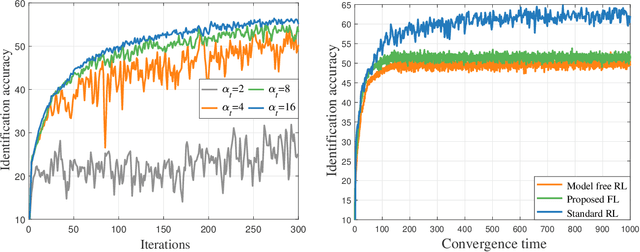

Performance Optimization for Variable Bitwidth Federated Learning in Wireless Networks

Sep 21, 2022

Abstract: This paper considers improving wireless communication and computation efficiency in federated learning (FL) via model quantization. In the proposed bitwidth FL scheme, edge devices train and transmit quantized versions of their local FL model parameters to a coordinating server, which, in turn, aggregates them into a quantized global model and synchronizes the devices. The goal is to jointly determine the bitwidths employed for local FL model quantization and the set of devices participating in FL training at each iteration. This problem is posed as an optimization problem whose goal is to minimize the training loss of quantized FL under a per-iteration device sampling budget and delay requirement. To derive the solution, an analytical characterization is performed to show how the limited wireless resources and induced quantization errors affect the performance of the proposed FL method. The analytical results show that the improvement of FL training loss between two consecutive iterations depends on the device selection and quantization scheme as well as on several parameters inherent to the model being learned. Given linear regression-based estimates of these model properties, it is shown that the FL training process can be described as a Markov decision process (MDP), and a model-based reinforcement learning (RL) method is then proposed to optimize action selection over iterations. Compared to model-free RL, this model-based RL approach leverages the derived mathematical characterization of the FL training process to discover an effective device selection and quantization scheme without imposing additional device communication overhead. Simulation results show that the proposed FL algorithm can reduce convergence time by 29% and 63% compared to a model-free RL method and the standard FL method, respectively.
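
The trade-off behind variable bitwidth can be sketched with a simple uniform quantizer: fewer bits shrink the payload but enlarge the quantization error. The deterministic rounding rule, the per-vector min/max range, and the function names below are assumptions for illustration; the paper's quantizer may differ.

```python
from typing import List


def quantize(params: List[float], bitwidth: int) -> List[float]:
    """Uniformly quantize params to 2**bitwidth levels over [min, max]."""
    lo, hi = min(params), max(params)
    if hi == lo:
        return list(params)  # a constant vector needs no quantization
    step = (hi - lo) / (2 ** bitwidth - 1)
    # Snap each value to the nearest grid point lo + k * step.
    return [lo + round((p - lo) / step) * step for p in params]


def payload_bits(num_params: int, bitwidth: int) -> int:
    """Bits needed to send the quantized values (range metadata excluded)."""
    return num_params * bitwidth
```

Under this scheme the error of each parameter is at most half a quantization step, so each extra bit roughly halves the worst-case error while adding one bit per parameter to the upload.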

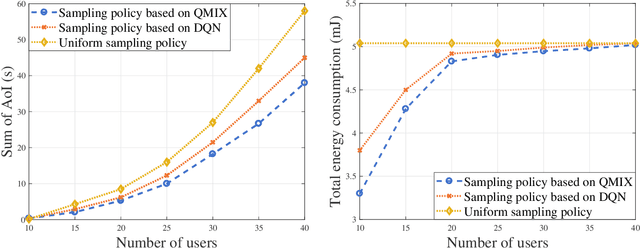

Reinforcement Learning for Minimizing Age of Information in Real-time Internet of Things Systems with Realistic Physical Dynamics

Apr 04, 2021

Abstract: In this paper, the problem of minimizing the weighted sum of age of information (AoI) and total energy consumption of Internet of Things (IoT) devices is studied. In the considered model, each IoT device monitors a physical process that follows nonlinear dynamics. As the dynamics of the physical process vary over time, each device must find an optimal sampling frequency to sample the real-time dynamics of the physical system and send sampled information to a base station (BS). Due to limited wireless resources, the BS can only select a subset of devices to transmit their sampled information. Meanwhile, changing the sampling frequency will also impact the energy used by each device for sampling and information transmission. Thus, it is necessary to jointly optimize the sampling policy of each device and the device selection scheme of the BS so as to accurately monitor the dynamics of the physical process using minimum energy. This problem is formulated as an optimization problem whose goal is to minimize the weighted sum of AoI cost and energy consumption. To solve this problem, a distributed reinforcement learning approach is proposed to optimize the sampling policy. The proposed learning method enables the IoT devices to find the optimal sampling policy using their local observations. Given the sampling policy, the device selection scheme can be optimized so as to minimize the weighted sum of AoI and energy consumption of all devices. Simulations with real data of PM 2.5 pollution show that the proposed algorithm can reduce the sum of AoI by up to 17.8% and 33.9% and the total energy consumption by up to 13.2% and 35.1%, compared to a conventional deep Q network method and a uniform sampling policy, respectively.
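
A simplified view of the objective can be sketched as follows. The sketch assumes slotted time, an age that grows by one per slot and resets to one when a device is selected and delivers a fresh sample, and a single scalar weight between AoI and energy. The paper ties its AoI cost to the nonlinear physical dynamics, which is not modeled here; all names are hypothetical.

```python
from typing import List, Set


def step_aoi(aoi: List[int], selected: Set[int]) -> List[int]:
    """Advance ages by one slot; selected devices reset their age to 1."""
    return [1 if i in selected else age + 1 for i, age in enumerate(aoi)]


def weighted_cost(aoi: List[int], energy: List[float], weight: float) -> float:
    """weight * (sum of AoI) + (1 - weight) * (sum of energy)."""
    return weight * sum(aoi) + (1.0 - weight) * sum(energy)
```

Because the BS can serve only a subset of devices per slot, the ages of unselected devices keep growing, which is the coupling between device selection and sampling that the abstract describes.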

A Machine Learning Approach for Task and Resource Allocation in Mobile Edge Computing Based Networks

Jul 20, 2020

Abstract: In this paper, a joint task, spectrum, and transmit power allocation problem is investigated for a wireless network in which the base stations (BSs) are equipped with mobile edge computing (MEC) servers to jointly provide computational and communication services to users. Each user can request one computational task from three types of computational tasks. Since the data size of each computational task is different, as the requested computational task varies, the BSs must adjust their resource (subcarrier and transmit power) and task allocation schemes to effectively serve the users. This problem is formulated as an optimization problem whose goal is to minimize the maximal computational and transmission delay among all users. A multi-stack reinforcement learning (RL) algorithm is developed to solve this problem. Using the proposed algorithm, each BS can record the historical resource allocation schemes and users' information in its multiple stacks to avoid learning the same resource allocation scheme and users' states, thus improving the convergence speed and learning efficiency. Simulation results illustrate that the proposed algorithm can reduce the number of iterations needed for convergence and the maximal delay among all users by up to 18% and 11.1%, respectively, compared to the standard Q-learning algorithm.
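
The min-max objective in this abstract can be made concrete with a toy delay model. It assumes that a user's delay is transmission time plus computation time, that the transmission rate follows the Shannon capacity formula, and that computation time is required CPU cycles divided by CPU frequency. These modeling choices and all parameter names are assumptions, not the paper's formulation.

```python
import math
from typing import List, Tuple


def user_delay(
    data_bits: float,
    bandwidth_hz: float,
    snr: float,
    cpu_cycles: float,
    cpu_hz: float,
) -> float:
    """Transmission delay plus computation delay for one user, in seconds."""
    rate_bps = bandwidth_hz * math.log2(1.0 + snr)  # Shannon rate
    return data_bits / rate_bps + cpu_cycles / cpu_hz


def max_delay(users: List[Tuple[float, float, float, float, float]]) -> float:
    """The quantity the allocation scheme tries to minimize."""
    return max(user_delay(*u) for u in users)
```

An allocation scheme would adjust the per-user bandwidth, transmit power (through the SNR), and task assignment so that the largest of these delays is as small as possible.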

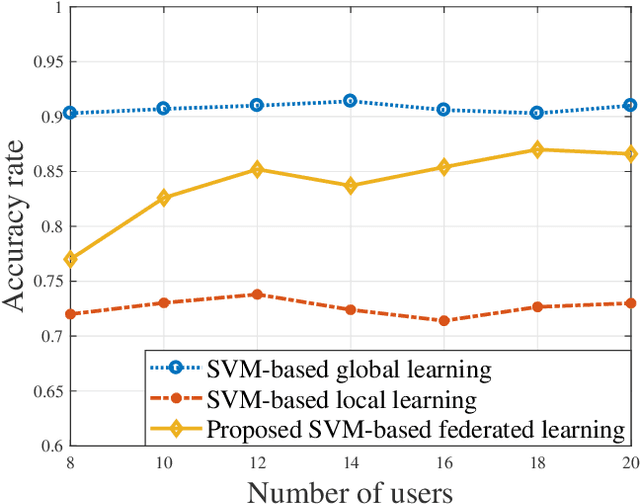

Federated Learning for Task and Resource Allocation in Wireless High Altitude Balloon Networks

Mar 19, 2020

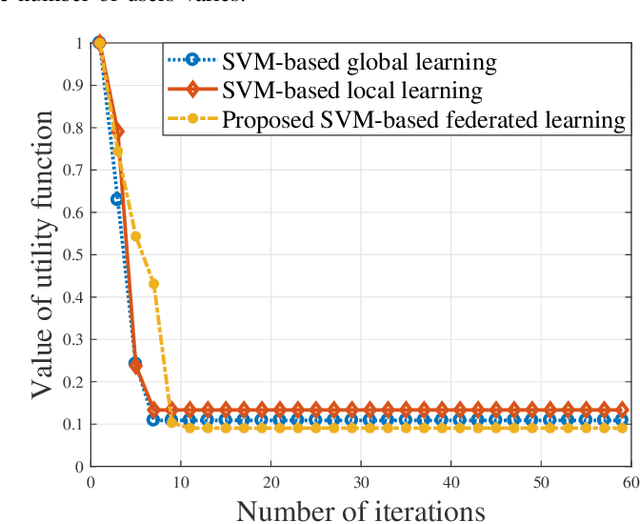

Abstract: In this paper, the problem of minimizing energy and time consumption for task computation and transmission is studied in a mobile edge computing (MEC)-enabled balloon network. In the considered network, each user needs to process a computational task at each time instant, and high-altitude balloons (HABs), acting as flying wireless base stations, can use their powerful computational abilities to process the tasks offloaded from their associated users. Since the data size of each user's computational task varies over time, the HABs must dynamically adjust the user association, service sequence, and task partition scheme to meet the users' needs. This problem is posed as an optimization problem whose goal is to minimize the energy and time consumption for task computing and transmission by adjusting the user association, service sequence, and task allocation scheme. To solve this problem, a support vector machine (SVM)-based federated learning (FL) algorithm is proposed to determine the user association proactively. The proposed SVM-based FL method enables the HABs to cooperatively build an SVM model that can determine all user associations without transmitting either users' historical associations or computational tasks to other HABs. Given the prediction of the optimal user association, the service sequence and task allocation of each user can be optimized so as to minimize the weighted sum of the energy and time consumption. Simulations with real data of city cellular traffic from the OMNILab at Shanghai Jiao Tong University show that the proposed algorithm can reduce the weighted sum of the energy and time consumption of all users by up to 16.1% compared to a conventional centralized method.
