Shuo Zhu

Analytical Model of NR-V2X Mode 2 with Re-Evaluation Mechanism

Oct 31, 2025

Abstract:Massive message transmissions, unpredictable aperiodic messages, and high-speed moving vehicles contribute to a complex wireless environment, resulting in resource collisions and inefficiency in Vehicle-to-Everything (V2X) communication. To achieve better medium access control (MAC) layer performance, 3GPP introduced several new features in NR-V2X. One of the most important is the re-evaluation mechanism, which allows the vehicle to continuously sense resources before message transmission to avoid resource collisions. So far, only a few articles have studied the re-evaluation mechanism of NR-V2X, and they mainly focus on network simulators that do not consider variable traffic, which makes analysis and comparison difficult. In this paper, an analytical model of NR-V2X Mode 2 is established, and a message generator is constructed using a discrete-time Markov chain (DTMC) to simulate the traffic pattern recommended by 3GPP for advanced V2X services. Our study shows that the re-evaluation mechanism improves the reliability of NR-V2X transmission, but local improvements are still needed to reduce latency.
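To make the idea of a DTMC-based message generator concrete, here is a minimal sketch. It is not the model from the paper: the two states (idle and active), the transition probabilities, and the names `TRANSITIONS`, `generate_messages`, and `stationary_active_probability` are all assumptions chosen for illustration.

```python
import random

# Hypothetical two-state chain: state 0 = idle (no message in the slot),
# state 1 = active (one message generated in the slot).
# Each tuple is (P(next = 0), P(next = 1)); the values are illustrative only.
TRANSITIONS = {
    0: (0.9, 0.1),
    1: (0.4, 0.6),
}


def generate_messages(num_slots, seed=0, start_state=0):
    """Simulate the chain and return a list with 1 for each slot that carries a message."""
    rng = random.Random(seed)
    state = start_state
    arrivals = []
    for _ in range(num_slots):
        prob_next_idle = TRANSITIONS[state][0]
        state = 0 if rng.random() < prob_next_idle else 1
        arrivals.append(state)
    return arrivals


def stationary_active_probability():
    """Long-run fraction of active slots for a two-state chain: p01 / (p01 + p10)."""
    p01 = TRANSITIONS[0][1]
    p10 = TRANSITIONS[1][0]
    return p01 / (p01 + p10)
```

With these assumed probabilities, the long-run message rate is 0.1 / (0.1 + 0.4) = 0.2 messages per slot, and a long simulated trace should approach that value. A chain that reproduces the 3GPP traffic patterns would need more states and calibrated transition probabilities.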

Near-infrared Image Deblurring and Event Denoising with Synergistic Neuromorphic Imaging

Mar 05, 2025

Abstract:Imaging in dynamic nighttime scenes and other extremely dark conditions has seen impressive advancements in recent years, partly driven by the rise of novel sensing approaches, e.g., near-infrared (NIR) cameras with high sensitivity and event cameras with minimal blur. However, inappropriate exposure ratios make NIR cameras susceptible to distortion and blur. Event cameras are also highly sensitive to weak signals at night yet prone to interference, often generating substantial noise that significantly degrades observations and analysis. Herein, we develop a new framework for low-light imaging that combines NIR imaging and event-based techniques, named synergistic neuromorphic imaging, which can jointly achieve NIR image deblurring and event denoising. Harnessing cross-modal features of NIR images and visible events via spectral consistency and higher-order interaction, the NIR images and events are simultaneously fused, enhanced, and bootstrapped. Experiments on real and realistically simulated sequences demonstrate the effectiveness of our method and indicate better accuracy and robustness than other methods in practical scenarios. This study gives impetus to enhancing both NIR images and events, paving the way for high-fidelity low-light imaging and neuromorphic reasoning.

SweepEvGS: Event-Based 3D Gaussian Splatting for Macro and Micro Radiance Field Rendering from a Single Sweep

Dec 16, 2024

Abstract:Recent advancements in 3D Gaussian Splatting (3D-GS) have demonstrated the potential of using 3D Gaussian primitives for high-speed, high-fidelity, and cost-efficient novel view synthesis from continuously calibrated input views. However, conventional methods require high-frame-rate dense and high-quality sharp images, which are time-consuming and inefficient to capture, especially in dynamic environments. Event cameras, with their high temporal resolution and ability to capture asynchronous brightness changes, offer a promising alternative for more reliable scene reconstruction without motion blur. In this paper, we propose SweepEvGS, a novel hardware-integrated method that leverages event cameras for robust and accurate novel view synthesis across various imaging settings from a single sweep. SweepEvGS utilizes the initial static frame with dense event streams captured during a single camera sweep to effectively reconstruct detailed scene views. We also introduce different real-world hardware imaging systems for real-world data collection and evaluation for future research. We validate the robustness and efficiency of SweepEvGS through experiments in three different imaging settings: synthetic objects, real-world macro-level, and real-world micro-level view synthesis. Our results demonstrate that SweepEvGS surpasses existing methods in visual rendering quality, rendering speed, and computational efficiency, highlighting its potential for dynamic practical applications.

Ev-GS: Event-based Gaussian splatting for Efficient and Accurate Radiance Field Rendering

Jul 16, 2024

Abstract:Computational neuromorphic imaging (CNI) with event cameras offers advantages such as minimal motion blur and enhanced dynamic range, compared to conventional frame-based methods. Existing event-based radiance field rendering methods are built on neural radiance fields, which are computationally heavy and slow to reconstruct. Motivated by these two aspects, we introduce Ev-GS, the first CNI-informed scheme to infer 3D Gaussian splatting from a monocular event camera, enabling efficient novel view synthesis. Leveraging 3D Gaussians with pure event-based supervision, Ev-GS overcomes challenges such as the detection of fast-moving objects and insufficient lighting. Experimental results show that Ev-GS outperforms the method that takes frame-based signals as input by rendering realistic views with reduced blurring and improved visual quality. Moreover, it demonstrates competitive reconstruction quality and reduced computing occupancy compared to existing methods, which paves the way to a highly efficient CNI approach for signal processing.

Neuromorphic Imaging with Super-Resolution

Jul 08, 2024

Abstract:Neuromorphic imaging is a bio-inspired technique that imitates the human retina to sense variations in a dynamic scene. It responds to pixel-level brightness changes with asynchronous streaming events and boasts microsecond temporal precision over a high dynamic range, yielding blur-free recordings under extreme illumination. Nevertheless, such a modality falls short in spatial resolution, leading to a low level of visual richness and clarity. Pursuing hardware upgrades is expensive and might compromise performance because of the heavier computational burden. Another option is to harness offline, plug-and-play neuromorphic super-resolution solutions. However, existing ones, which demand substantial sample volumes for lengthy training on massive computing resources, are largely restricted by real data availability owing to the imperfections of current high-resolution devices, as well as the randomness and variability of motion. To tackle these challenges, we introduce the first self-supervised neuromorphic super-resolution prototype. It adapts to each input source from any low-resolution camera to estimate an optimal, high-resolution counterpart of any scale, without the need for side knowledge or prior training. Evaluated on downstream event-driven tasks, such a simple yet effective method can obtain competitive results against the state of the art, significantly promoting flexibility without sacrificing accuracy. It also delivers enhancements for inferior natural images and optical micrographs acquired under non-ideal imaging conditions, breaking through limitations that are challenging to overcome with traditional frame techniques. In the current landscape where the use of high-resolution cameras for event-based sensing remains an open debate, our solution serves as a cost-efficient and practical alternative, paving the way for more intelligent imaging systems.
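As a rough illustration of how a neuromorphic sensor turns pixel-level brightness changes into events, the sketch below compares two intensity frames and emits a signed event wherever the log-intensity change crosses a contrast threshold. This is a simplified, frame-based approximation and not the method of the paper: real sensors fire asynchronously per pixel with microsecond timestamps, and the function name `generate_events`, the threshold value, and the `eps` offset are assumptions.

```python
import math


def generate_events(prev_frame, next_frame, threshold=0.2, eps=1e-6):
    """Return (x, y, polarity) tuples for pixels whose log-intensity change
    between two frames reaches the contrast threshold.

    Frames are lists of rows of non-negative intensities. Polarity is +1 for
    a brightness increase and -1 for a decrease. Timestamps are omitted.
    """
    events = []
    for y, (prev_row, next_row) in enumerate(zip(prev_frame, next_frame)):
        for x, (prev_val, next_val) in enumerate(zip(prev_row, next_row)):
            # eps avoids log(0) for fully dark pixels (an assumed safeguard).
            delta = math.log(next_val + eps) - math.log(prev_val + eps)
            if abs(delta) >= threshold:
                events.append((x, y, 1 if delta > 0 else -1))
    return events
```

For example, if only the top-left pixel doubles in brightness and the bottom-right pixel halves, the function returns one positive event at (0, 0) and one negative event at (1, 1); unchanged pixels produce nothing, which is why event streams are sparse.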

Event Encryption: Rethinking Privacy Exposure for Neuromorphic Imaging

Jun 21, 2023

Abstract:Bio-inspired neuromorphic cameras sense illumination changes on a per-pixel basis and generate spatiotemporal streaming events within microseconds in response, offering visual information with high temporal resolution over a high dynamic range. Such devices often serve in surveillance systems due to their applicability and robustness in environments with high dynamics and harsh lighting, where they can still supply clearer recordings than traditional imaging. In other words, when it comes to privacy-relevant cases, neuromorphic cameras also expose more sensitive data and pose serious security threats. Therefore, asynchronous event streams necessitate careful encryption before transmission and usage. This work discusses several potential attack scenarios and approaches event encryption from the perspective of neuromorphic noise removal, in which we inversely introduce well-crafted noise into raw events until they are obfuscated. Our evaluations show that the encrypted events can effectively protect information from attacks of low-level visual reconstruction and high-level neuromorphic reasoning, and thus feature dependable privacy-preserving competence. The proposed solution gives impetus to the security of event data and paves the way to a highly encrypted technique for privacy-protective neuromorphic imaging.

Learning-based real-time method to looking through scattering medium beyond the memory effect

Nov 04, 2019

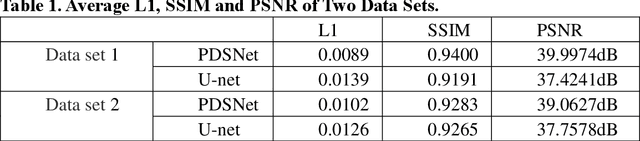

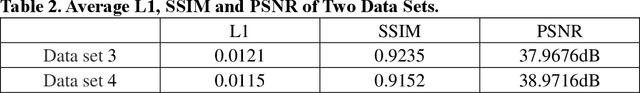

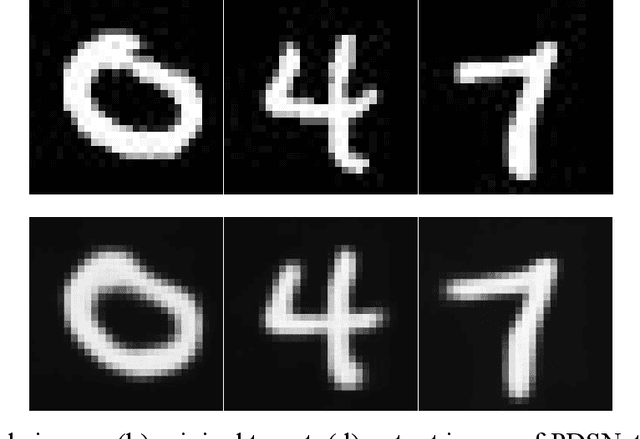

Abstract:A strong scattering medium poses great difficulties for optical imaging, which is also a problem in medical imaging and many other fields. The optical memory effect makes it possible to image through a strong random scattering medium. However, this method is restricted to a limited angular field of view (FOV), which prevents it from being applied in practice. In this paper, a practical convolutional neural network called PDSNet is proposed, which effectively breaks through the limitation that the optical memory effect places on the FOV. Experiments are conducted to show that the scattered pattern can be reconstructed accurately in real time by PDSNet, and that it is widely applicable to retrieving complex objects of random scales and to different scattering media.
