Shubh Agrawal

Design and Implementation of Path Trackers for Ackermann Drive based Vehicles

Dec 05, 2020

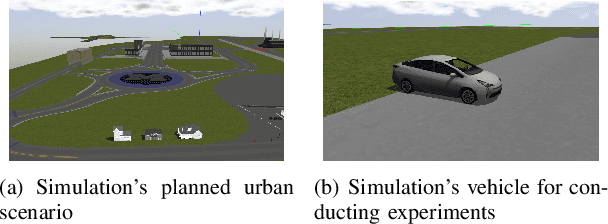

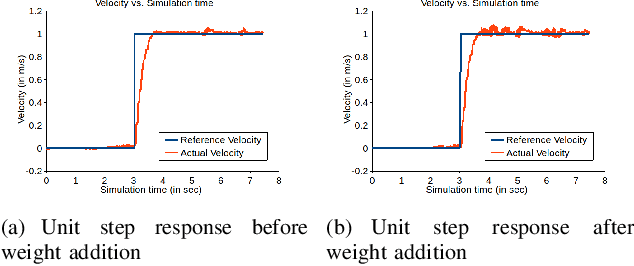

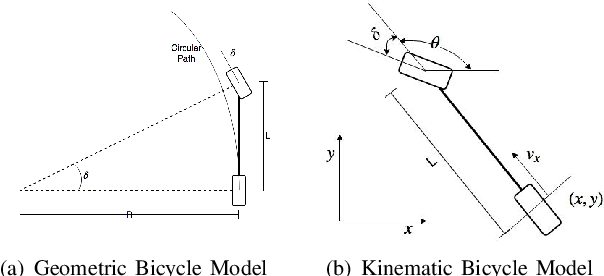

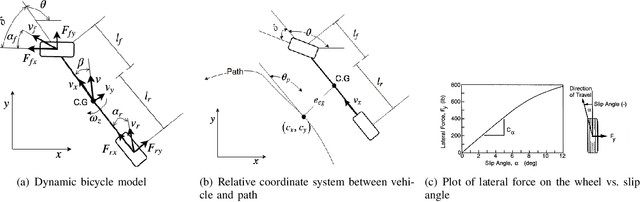

Abstract: This article reviews the literature on path tracking methods and their implementation in simulation and in realistic operating environments. The scope of this study includes the analysis, implementation, tuning, and comparison of selected path tracking methods commonly used in practice for trajectory tracking in autonomous vehicles. Many of these methods are applicable only at low speeds because they assume a linear system model; hence, methods that account for the nonlinearities in lateral vehicle dynamics during high-speed navigation are also included. The tracking methods are evaluated and compared in realistic simulations and on a dedicated instrumented passenger car, the Mahindra e2o, to assess their performance under realistic operating conditions and to develop a tuning methodology for each method. We observe that our model predictive control (MPC)-based approach outperforms the other methods at medium velocities.
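The abstract does not list the specific trackers it compares. As an illustration of the kind of geometric tracker built on a kinematic model that it refers to, here is a minimal sketch of the classical pure pursuit steering law for an Ackermann vehicle. It assumes a kinematic bicycle model with the reference point at the rear axle and a path given as a list of waypoints; `find_lookahead_point` and `pure_pursuit_steering` are hypothetical helper names, not the authors' code.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def find_lookahead_point(path: List[Point], x: float, y: float, lookahead: float) -> Point:
    """Return the first waypoint at least `lookahead` metres from (x, y).

    Hypothetical helper: falls back to the last waypoint near the end of the path.
    """
    for px, py in path:
        if math.hypot(px - x, py - y) >= lookahead:
            return (px, py)
    return path[-1]


def pure_pursuit_steering(x: float, y: float, yaw: float, target: Point, wheelbase: float) -> float:
    """Steering angle in radians (positive = left) from the pure pursuit law
    delta = atan(2 * L * sin(alpha) / l_d), assuming (x, y) is the rear axle."""
    tx, ty = target
    alpha = math.atan2(ty - y, tx - x) - yaw  # bearing of the target relative to the heading
    ld = math.hypot(tx - x, ty - y)           # distance from rear axle to the target point
    return math.atan2(2.0 * wheelbase * math.sin(alpha), ld)


# Usage: a car 1 m to the left of a straight path along the x-axis.
path = [(0.5 * i, 0.0) for i in range(20)]
target = find_lookahead_point(path, 0.0, 1.0, lookahead=2.0)
steer = pure_pursuit_steering(0.0, 1.0, 0.0, target, wheelbase=2.5)
```

The lookahead distance is the main tuning knob in this sketch: a longer lookahead gives smoother but slower convergence to the path.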

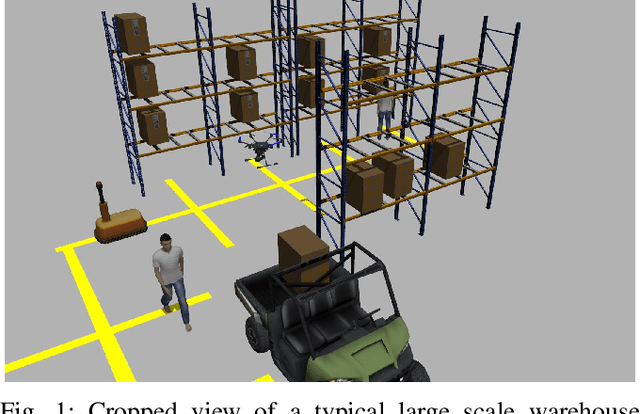

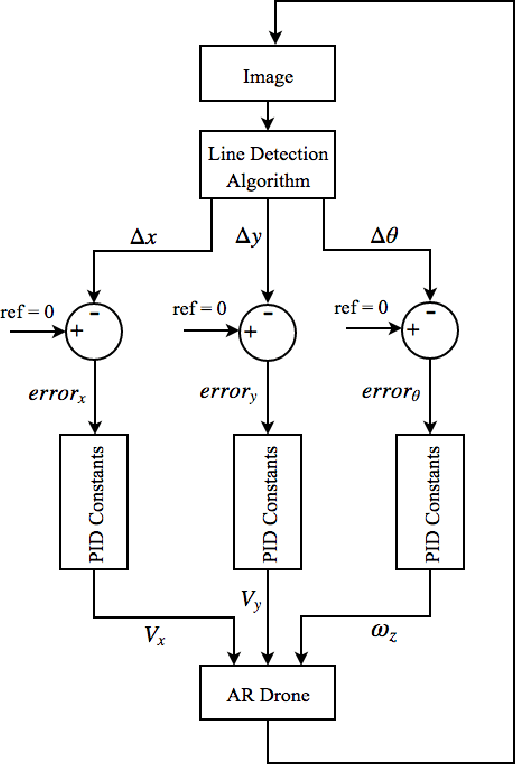

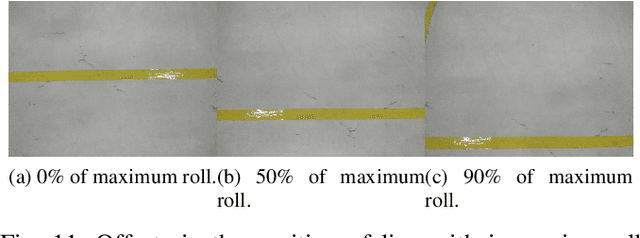

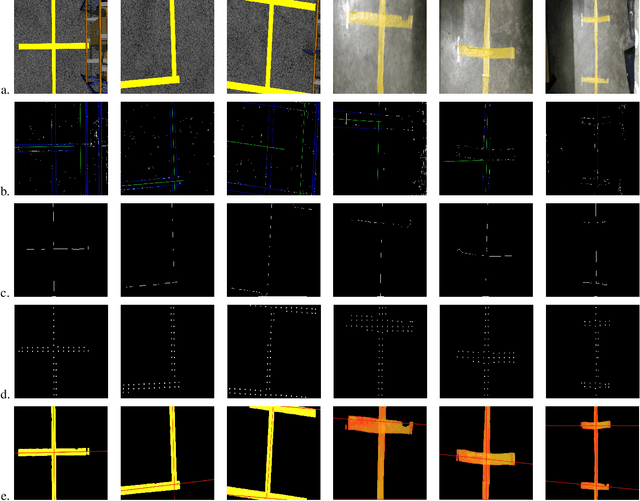

Grid-based Localization Stack for Inspection Drones towards Automation of Large Scale Warehouse Systems

Jun 04, 2019

Abstract: SLAM-based techniques are often adopted to solve the navigation problem for drones in GPS-denied environments. Despite the widespread success of these approaches, they have not yet been fully exploited for warehouse automation because of expensive sensors and setup requirements. This paper focuses on using low-cost drones equipped with a monocular camera to perform warehouse management tasks such as inventory scanning and position updates. The methods introduced are compatible with the current state of warehouse environments, which already contain a grid network for ground vehicles, thereby eliminating any additional infrastructure requirements for drone deployment. Because the lack of scale information rules out 3D techniques, we focus on optimizing standard image processing algorithms such as thick line detection and developing them into a fast and robust grid localization framework. In this paper, we present different line detection algorithms, their significance in grid localization, and their limitations. We further extend our implementation into a real-time navigation stack for an actual warehouse inspection scenario. Our line detection method, which uses skeletonization and a centroid strategy, performs well under varying lighting conditions, line thicknesses, colors, orientations, and partial occlusions. A simple yet effective Kalman filter smooths the ρ and θ outputs of the two line detection methods, enabling better drone control while following the grid. We also develop a generic strategy for navigating the drone on the grid to complete the allotted task. Based on simulation and real-world experiments, we discuss the final developments in drone localization and navigation in a structured environment.
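The abstract mentions a simple Kalman filter for smoothing the ρ and θ line parameters but does not give its model. Below is a minimal sketch, assuming a random-walk (constant-value) state model with ρ and θ filtered independently; the noise values `q` and `r` and the class names are hypothetical. It also assumes θ does not jump across the wrap-around boundary of the line parameterization, which a real implementation would need to handle.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class ScalarKalman:
    """1-D Kalman filter with a random-walk (constant-value) state model."""
    x: float         # current estimate
    p: float = 1.0   # variance of the estimate
    q: float = 1e-3  # process noise variance (hypothetical: how fast the true value drifts)
    r: float = 1e-1  # measurement noise variance (hypothetical: how noisy the detector is)

    def update(self, z: float) -> float:
        # Predict: the state is assumed constant, so only its uncertainty grows.
        self.p += self.q
        # Correct: blend prediction and measurement using the Kalman gain.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= 1.0 - k
        return self.x


class LineSmoother:
    """Smooths (rho, theta) line detections with two independent scalar filters."""

    def __init__(self, rho0: float, theta0: float, q: float = 1e-3, r: float = 1e-1):
        self.rho = ScalarKalman(rho0, q=q, r=r)
        self.theta = ScalarKalman(theta0, q=q, r=r)

    def update(self, rho: float, theta: float) -> Tuple[float, float]:
        return self.rho.update(rho), self.theta.update(theta)


# Usage: feed one (rho, theta) detection per camera frame.
smoother = LineSmoother(rho0=0.0, theta0=0.0)
for _ in range(100):
    rho_s, theta_s = smoother.update(10.0, 0.5)
```

Raising `q` relative to `r` makes the output follow the detector more closely; lowering it trades responsiveness for smoother control inputs.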

DeepVO: A Deep Learning approach for Monocular Visual Odometry

Nov 18, 2016

Abstract: Deep learning-based techniques have been successfully applied to many standard computer vision problems, such as image classification, object detection, and segmentation. Despite the widespread success of these approaches, they have not yet been widely exploited for the standard perception problems encountered in autonomous navigation, such as Visual Odometry (VO), Structure from Motion (SfM), and Simultaneous Localization and Mapping (SLAM). This paper analyzes the problem of monocular visual odometry using a deep learning-based framework instead of the conventional 'feature detection and tracking' pipeline. Several experiments were performed to understand how a known or unknown environment, a conventional trackable feature, and pre-trained activations tuned for object classification influence the network's ability to accurately estimate the motion trajectory of the camera (or the vehicle). Based on these observations, we propose a Convolutional Neural Network architecture that is best suited for estimating the object's pose under known environmental conditions and that shows promising results in inferring the actual scale using just a single camera in real time.
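The abstract does not say how the network's estimates become a trajectory. In visual odometry generally, per-frame relative motions are chained (composed) to recover the camera path. The sketch below assumes, hypothetically, that the network outputs a planar relative motion (dx, dy, dθ) in the vehicle frame with metric scale already resolved; `compose` and `integrate` are illustrative names, not part of the paper.

```python
import math
from typing import Iterable, List, Tuple

Pose2D = Tuple[float, float, float]  # (x, y, heading in radians), world frame


def wrap_angle(a: float) -> float:
    """Wrap an angle to the interval [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def compose(pose: Pose2D, delta: Pose2D) -> Pose2D:
    """Apply a body-frame relative motion `delta` to a world-frame `pose`."""
    x, y, th = pose
    dx, dy, dth = delta
    return (
        x + dx * math.cos(th) - dy * math.sin(th),
        y + dx * math.sin(th) + dy * math.cos(th),
        wrap_angle(th + dth),
    )


def integrate(deltas: Iterable[Pose2D], start: Pose2D = (0.0, 0.0, 0.0)) -> List[Pose2D]:
    """Chain per-frame relative motions into a trajectory (hypothetical VO output)."""
    trajectory = [start]
    for d in deltas:
        trajectory.append(compose(trajectory[-1], d))
    return trajectory


# Usage: four "move 1 m forward, then turn left 90 degrees" steps trace a unit square.
traj = integrate([(1.0, 0.0, math.pi / 2)] * 4)
```

Because each pose builds on the previous one, errors in any single relative estimate (including scale errors) accumulate along the trajectory, which is why accurate per-frame scale matters for monocular VO.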
