Shivank Garg

SIDiffAgent: Self-Improving Diffusion Agent

Feb 02, 2026

Abstract:Text-to-image diffusion models have revolutionized generative AI, enabling high-quality and photorealistic image synthesis. However, their practical deployment remains hindered by several limitations: sensitivity to prompt phrasing, ambiguity in semantic interpretation (e.g., "mouse" as an animal vs. a computer peripheral), artifacts such as distorted anatomy, and the need for carefully engineered input prompts. Existing methods often require additional training and offer limited controllability, restricting their adaptability in real-world applications. We introduce Self-Improving Diffusion Agent (SIDiffAgent), a training-free agentic framework that leverages the Qwen family of models (Qwen-VL, Qwen-Image, Qwen-Edit, Qwen-Embedding) to address these challenges. SIDiffAgent autonomously manages prompt engineering, detects and corrects poor generations, and performs fine-grained artifact removal, yielding more reliable and consistent outputs. It further incorporates iterative self-improvement by storing a memory of previous experiences in a database. This database of past experiences is then used to inject prompt-based guidance at each stage of the agentic pipeline. SIDiffAgent achieves an average VQA score of 0.884 on GenAIBench, significantly outperforming open-source models, proprietary models, and agentic methods. We will publicly release our code upon acceptance.

ViT Registers and Fractal ViT

Jan 21, 2026

Abstract:Drawing inspiration from recent findings including surprisingly decent performance of transformers without positional encoding (NoPE) in the domain of language models and how registers (additional throwaway tokens not tied to input) may improve the performance of large vision transformers (ViTs), we invent and test a variant of ViT called fractal ViT that breaks permutation invariance among the tokens by applying an attention mask between the regular tokens and "summary tokens" similar to registers, in isolation or in combination with various positional encodings. These models do not improve upon ViT with registers, highlighting the fact that these findings may be scale, domain, or application-specific.

IPO: Your Language Model is Secretly a Preference Classifier

Feb 22, 2025

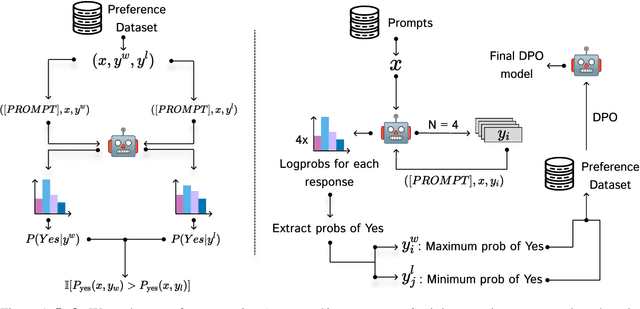

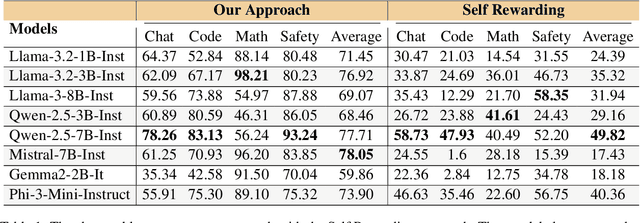

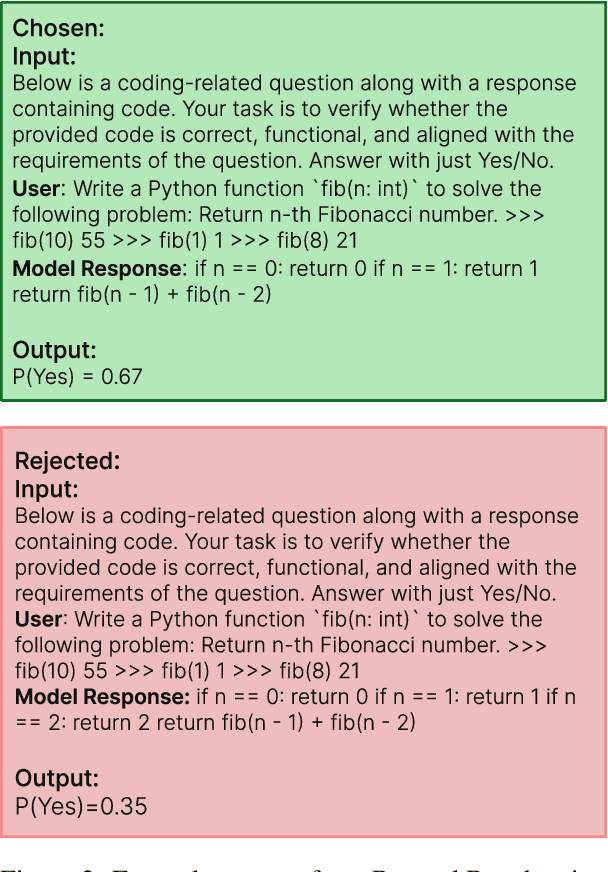

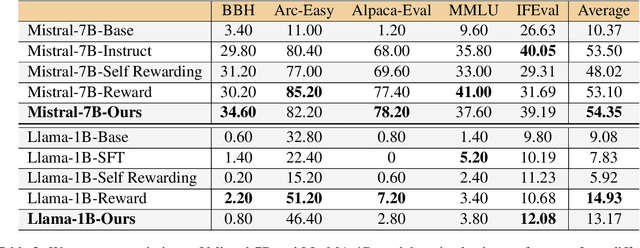

Abstract:Reinforcement learning from human feedback (RLHF) has emerged as the primary method for aligning large language models (LLMs) with human preferences. While it enables LLMs to achieve human-level alignment, it often incurs significant computational and financial costs due to its reliance on training external reward models or human-labeled preferences. In this work, we propose Implicit Preference Optimization (IPO), an alternative approach that leverages generative LLMs as preference classifiers, thereby reducing the dependence on external human feedback or reward models to obtain preferences. We conduct a comprehensive evaluation of the preference classification ability of LLMs using RewardBench, assessing models across different sizes, architectures, and training levels to validate our hypothesis. Furthermore, we investigate the self-improvement capabilities of LLMs by generating multiple responses for a given instruction and employing the model itself as a preference classifier for Direct Preference Optimization (DPO)-based training. Our findings demonstrate that models trained through IPO achieve performance comparable to those utilizing state-of-the-art reward models for obtaining preferences.
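The self-improvement step described above (sample several responses, let the model judge them, train with DPO) can be illustrated with a minimal sketch. Everything named here is an assumption for illustration: `build_preference_pair`, the `score_fn` callback standing in for the model's own preference score, and the choice of the highest- and lowest-scored responses as the chosen/rejected pair are not taken from the paper.

```python
from typing import Callable, Dict, List


def build_preference_pair(
    instruction: str,
    responses: List[str],
    score_fn: Callable[[str, str], float],
) -> Dict[str, str]:
    """Rank sampled responses by a self-assigned score and keep the extremes.

    score_fn is a hypothetical hook: in practice it would query the same LLM
    that produced the responses; here it is any callable returning a float.
    """
    if len(responses) < 2:
        raise ValueError("need at least two responses to form a pair")
    ranked = sorted(responses, key=lambda r: score_fn(instruction, r))
    # Assumption: best vs. worst forms the DPO training pair.
    return {"prompt": instruction, "chosen": ranked[-1], "rejected": ranked[0]}


def toy_score(instruction: str, response: str) -> float:
    """Stand-in scorer for demonstration only: prefers longer responses."""
    return float(len(response))


pair = build_preference_pair(
    "Explain what a reward model does.",
    ["It scores.", "It assigns a scalar score to a response.", "No idea."],
    toy_score,
)
```

The resulting dictionary uses the prompt/chosen/rejected layout that DPO-style trainers commonly expect, so pairs built this way could be collected into a dataset without an external reward model.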

Adaptive Urban Planning: A Hybrid Framework for Balanced City Development

Dec 19, 2024

Abstract:Urban planning faces a critical challenge in balancing city-wide infrastructure needs with localized demographic preferences, particularly in rapidly developing regions. Although existing approaches typically focus on top-down optimization or bottom-up community planning, few frameworks successfully integrate both perspectives. Our methodology employs a two-tier approach: First, a deterministic solver optimizes basic infrastructure requirements in the city region. Second, four specialized planning agents, each representing distinct sub-regions, propose demographic-specific modifications to a master planner. The master planner then evaluates and integrates these suggestions to ensure cohesive urban development. We validate our framework using a newly created dataset comprising detailed region and sub-region maps from three developing cities in India, focusing on areas undergoing rapid urbanization. The results demonstrate that this hybrid approach enables more nuanced urban development while maintaining overall city functionality.

LoRA-Mini : Adaptation Matrices Decomposition and Selective Training

Nov 24, 2024

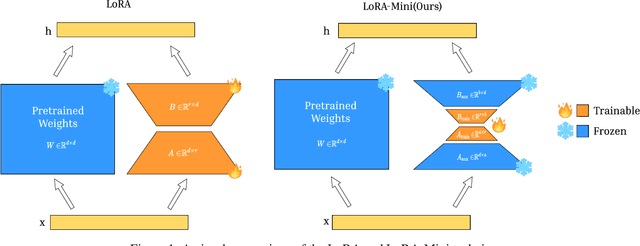

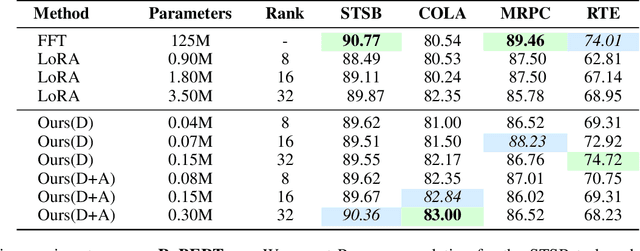

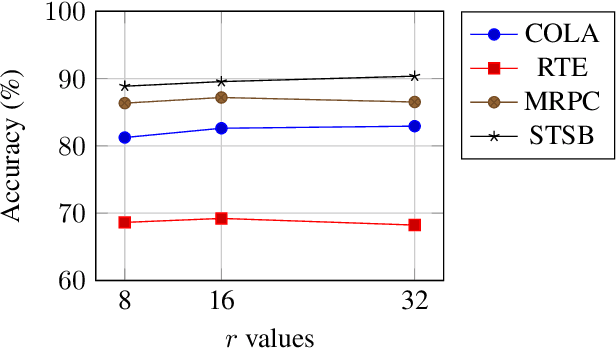

Abstract:The rapid advancements in large language models (LLMs) have revolutionized natural language processing, creating an increased need for efficient, task-specific fine-tuning methods. Traditional fine-tuning of LLMs involves updating a large number of parameters, which is computationally expensive and memory-intensive. Low-Rank Adaptation (LoRA) has emerged as a promising solution, enabling parameter-efficient fine-tuning by reducing the number of trainable parameters. However, while LoRA reduces the number of trainable parameters, LoRA modules still create significant storage challenges. We propose LoRA-Mini, an optimized adaptation of LoRA that improves parameter efficiency by splitting low-rank matrices into four parts, with only the two inner matrices being trainable. This approach achieves up to a 20x reduction in the number of trainable parameters compared to standard LoRA while preserving comparable performance, addressing both computational and storage efficiency in LLM fine-tuning.
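To make the parameter saving concrete, the following is a rough counting sketch. The abstract does not give the shapes of the four parts, so this assumes one plausible layout: each low-rank matrix is factored into a frozen outer matrix and a trainable inner matrix that meet at a hypothetical intermediate dimension `inner_dim`. The layer sizes below are likewise illustrative and are not intended to reproduce the reported 20x figure.

```python
def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Standard LoRA trains two matrices: (d_in x rank) and (rank x d_out)."""
    return d_in * rank + rank * d_out


def split_trainable_params(inner_dim: int, rank: int) -> int:
    """Assumed four-way split: only the two inner factors are trained.

    Assumed shapes: inner factors are (inner_dim x rank) and (rank x inner_dim);
    the outer factors (d_in x inner_dim) and (inner_dim x d_out) stay frozen.
    """
    return 2 * inner_dim * rank


# Illustrative sizes only (hypothetical layer, rank, and intermediate dimension).
standard = lora_trainable_params(d_in=4096, d_out=4096, rank=16)
split = split_trainable_params(inner_dim=256, rank=16)
reduction = standard / split
print(f"standard LoRA: {standard}, split variant: {split}, reduction: {reduction:.1f}x")
```

Under these assumptions the trainable count no longer depends on the layer's input and output widths, only on `inner_dim` and `rank`, which is where the saving comes from.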

Are VLMs Really Blind

Oct 29, 2024

Abstract:Vision Language Models excel in handling a wide range of complex tasks, including Optical Character Recognition (OCR), Visual Question Answering (VQA), and advanced geometric reasoning. However, these models fail to perform well on low-level basic visual tasks which are especially easy for humans. Our goal in this work was to determine if these models are truly "blind" to geometric reasoning or if there are ways to enhance their capabilities in this area. Our work presents a novel automatic pipeline designed to extract key information from images in response to specific questions. Instead of just relying on direct VQA, we use question-derived keywords to create a caption that highlights important details in the image related to the question. This caption is then used by a language model to provide a precise answer to the question without requiring external fine-tuning.
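The three stages of the pipeline (keywords from the question, a keyword-guided caption, then a text-only answer) can be sketched as plain function composition. The function names and the stub components below are hypothetical placeholders; in a real system each callable would wrap a model call.

```python
from typing import Callable, List


def answer_with_keyword_caption(
    image: object,
    question: str,
    extract_keywords: Callable[[str], List[str]],
    caption_image: Callable[[object, List[str]], str],
    answer_from_text: Callable[[str, str], str],
) -> str:
    """Chain the three stages; every callable is a hypothetical model wrapper."""
    keywords = extract_keywords(question)     # stage 1: question -> keywords
    caption = caption_image(image, keywords)  # stage 2: image + keywords -> caption
    return answer_from_text(caption, question)  # stage 3: caption + question -> answer


# Stub components for demonstration only; they do not inspect any real image.
def stub_keywords(question: str) -> List[str]:
    vocabulary = {"circles", "lines"}
    words = [w.strip("?").lower() for w in question.split()]
    return [w for w in words if w in vocabulary]


def stub_caption(image: object, keywords: List[str]) -> str:
    return f"The image shows 3 {keywords[0]}."


def stub_answer(caption: str, question: str) -> str:
    return caption.split()[3]


result = answer_with_keyword_caption(
    None, "How many circles overlap?", stub_keywords, stub_caption, stub_answer
)
```

Passing the stages in as callables keeps the sketch independent of any particular captioning or language model.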

Give me a hint: Can LLMs take a hint to solve math problems?

Oct 08, 2024

Abstract:While many state-of-the-art LLMs have shown poor logical and basic mathematical reasoning, recent works try to improve their problem-solving abilities using prompting techniques. We propose giving "hints" to improve the language model's performance on advanced mathematical problems, taking inspiration from how humans approach math pedagogically. We also test the model's adversarial robustness to wrong hints. We demonstrate the effectiveness of our approach by evaluating various LLMs, presenting them with a diverse set of problems of different difficulties and topics from the MATH dataset and comparing against techniques such as one-shot, few-shot, and chain of thought prompting.

Beyond Captioning: Task-Specific Prompting for Improved VLM Performance in Mathematical Reasoning

Oct 08, 2024

Abstract:Vision-Language Models (VLMs) have transformed tasks requiring visual and reasoning abilities, such as image retrieval and Visual Question Answering (VQA). Despite their success, VLMs face significant challenges with tasks involving geometric reasoning, algebraic problem-solving, and counting. These limitations stem from difficulties effectively integrating multiple modalities and accurately interpreting geometry-related tasks. Various works claim that introducing a captioning pipeline before VQA tasks enhances performance. We incorporated this pipeline for tasks involving geometry, algebra, and counting. We found that captioning results are not generalizable, specifically with larger VLMs primarily trained on downstream QnA tasks showing random performance on math-related challenges. However, we present a promising alternative: task-based prompting, enriching the prompt with task-specific guidance. This approach shows promise and proves more effective than direct captioning methods for math-heavy problems.

Attention Shift: Steering AI Away from Unsafe Content

Oct 06, 2024

Abstract:This study investigates the generation of unsafe or harmful content in state-of-the-art generative models, focusing on methods for restricting such generations. We introduce a novel training-free approach that uses attention reweighting to remove unsafe concepts at inference time. We compare our method against existing ablation methods, evaluating the performance on both direct and adversarial jailbreak prompts, using qualitative and quantitative metrics. We hypothesize potential reasons for the observed results and discuss the limitations and broader implications of content restriction.
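As a generic illustration of what reweighting attention can mean, the sketch below scales the attention mass on flagged token positions and renormalizes the row. This is an assumed, simplified formulation: the abstract does not specify which attention maps are modified, how unsafe tokens are identified, or what scaling is used, so `flagged` and `scale` are hypothetical parameters.

```python
from typing import List, Set


def reweight_attention(weights: List[float], flagged: Set[int], scale: float) -> List[float]:
    """Scale attention on flagged positions, then renormalize so the row sums to 1.

    weights: one row of attention probabilities over prompt tokens.
    flagged: positions judged to carry an unwanted concept (hypothetical input).
    scale:   multiplier for flagged positions; 0.0 suppresses them entirely.
    """
    scaled = [w * scale if i in flagged else w for i, w in enumerate(weights)]
    total = sum(scaled)
    if total == 0:
        raise ValueError("all attention mass was removed; nothing left to normalize")
    return [w / total for w in scaled]


# Suppress the first token; its mass is redistributed over the remaining tokens.
row = reweight_attention([0.5, 0.3, 0.2], flagged={0}, scale=0.0)
```

Because the operation only rescales existing attention weights, it needs no gradient updates, which is consistent with a training-free method.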

Snowy Scenes, Clear Detections: A Robust Model for Traffic Light Detection in Adverse Weather Conditions

Jun 19, 2024

Abstract:With the rise of autonomous vehicles and advanced driver-assistance systems (ADAS), ensuring reliable object detection in all weather conditions is crucial for safety and efficiency. Adverse weather like snow, rain, and fog presents major challenges for current detection systems, often resulting in failures and potential safety risks. This paper introduces a novel framework and pipeline designed to improve object detection under such conditions, focusing on traffic signal detection where traditional methods often fail due to domain shifts caused by adverse weather. We provide a comprehensive analysis of the limitations of existing techniques. Our proposed pipeline significantly enhances detection accuracy in snow, rain, and fog. Results show a 40.8% improvement in average IoU and F1 scores compared to naive fine-tuning and a 22.4% performance increase in domain shift scenarios, such as training on artificial snow and testing on rain images.
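For readers unfamiliar with the two metrics reported above, here is a minimal sketch of how IoU and F1 are conventionally computed for box detections. The box format `(x1, y1, x2, y2)` and the example numbers are assumptions for illustration; the paper's exact evaluation protocol is not described in the abstract.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x1, y1, x2, y2)


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 from true positives, false positives, and false negatives."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


iou = box_iou((0, 0, 2, 2), (1, 1, 3, 3))  # overlap area 1, union area 7
f1 = f1_score(tp=8, fp=2, fn=2)
```

A detection is typically counted as a true positive when its IoU with a ground-truth box exceeds a chosen threshold, which is how the two metrics relate.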
